Extract, Transform And Load

In computing, extract, transform, load (ETL) is a three-phase process in which data is extracted, transformed (cleaned, sanitized, scrubbed) and loaded into an output data container. The data can be collated from one or more sources and output to one or more destinations. ETL processing is typically executed by software applications, but it can also be done manually by system operators. ETL software typically automates the entire process and can be run manually or on recurring schedules, either as single jobs or aggregated into a batch of jobs. A properly designed ETL system extracts data from source systems, enforces data type and data validity standards, and ensures that the data conforms structurally to the requirements of the output. Some ETL systems can also deliver data in a presentation-ready format so that application developers can build applications and end users can make decisions. The ETL process became a popular concept in the 1970s and is often used in dat ...
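The three phases can be illustrated with a minimal Python sketch. The CSV sample, the `payments` table, and the function names are assumptions made for illustration only; a production ETL tool would add scheduling, logging, and error reporting.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real pipeline would read files, APIs, or databases.
RAW_CSV = """id,name,amount
1, Alice ,10.50
2,Bob,not-a-number
3,Carol,7
"""

def extract(text):
    """Extract: parse raw CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: trim whitespace, enforce types, drop rows that fail validation."""
    clean = []
    for row in rows:
        try:
            clean.append((int(row["id"]), row["name"].strip(), float(row["amount"])))
        except ValueError:
            continue  # the row violates the type rules, so it is rejected
    return clean

def load(rows, conn):
    """Load: write the validated rows into the output table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS payments "
        "(id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO payments VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute("SELECT id, name, amount FROM payments ORDER BY id").fetchall()
```

Here the row with a non-numeric amount is dropped during the transform phase, so only rows that satisfy the type rules reach the output table.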

Computing

Computing is any goal-oriented activity requiring, benefiting from, or creating computing machinery. It includes the study and experimentation of algorithmic processes, and the development of both hardware and software. Computing has scientific, engineering, mathematical, technological and social aspects. Major computing disciplines include computer engineering, computer science, cybersecurity, data science, information systems, information technology and software engineering. The term "computing" is also synonymous with counting and calculating. In earlier times, it was used in reference to the action performed by mechanical computing machines, and before that, to human computers.

History

The history of computing is longer than the history of computing hardware and includes the history of methods intended for pen and paper (or for chalk and slate) with or without the aid of tables. Computing is intimately tied to the representation of numbers, though mathematical ...

JSON

JSON (JavaScript Object Notation) is an open standard file format and data interchange format that uses human-readable text to store and transmit data objects consisting of attribute–value pairs and arrays (or other serializable values). It is a common data format with diverse uses in electronic data interchange, including that of web applications with servers. JSON is a language-independent data format. It was derived from JavaScript, but many modern programming languages include code to generate and parse JSON-format data. JSON filenames use the extension .json. Any valid JSON file is a valid JavaScript (.js) file, even though it makes no changes to a web page on its own. Douglas Crockford originally specified the JSON format in the early 2000s. He and Chip Morningstar sent the first JSON message in April 2001.

Naming and pronunciation

The 2017 international standard (ECMA-404 and ISO/IEC 21778:2017) specifies "Pronounced , as in ' Jaso ...
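As a small illustration of generating and parsing JSON from another language, the following sketch uses Python's standard `json` module. The `record` contents are invented for the example.

```python
import json

# A data object made of attribute-value pairs, an array, a boolean and a null.
record = {"name": "Ada", "tags": ["math", "computing"], "active": True, "score": None}

# Serialize to JSON text; sort_keys makes the output order predictable.
text = json.dumps(record, sort_keys=True)

# Parse the text back into native Python values.
restored = json.loads(text)
```

Python's `True` and `None` are written as the JSON literals `true` and `null`, and parsing the text yields an object equal to the original.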

Pivot Table

A pivot table is a table of grouped values that aggregates the individual items of a more extensive table (such as from a database, spreadsheet, or business intelligence program) within one or more discrete categories. This summary might include sums, averages, or other statistics, which the pivot table groups together using a chosen aggregation function applied to the grouped values. Although *pivot table* is a generic term, Microsoft held a trademark on the term in the United States from 1994 to 2020.

History

In their book *Pivot Table Data Crunching*, Bill Jelen and Mike Alexander refer to Pito Salas as the "father of pivot tables". While working on a concept for a new program that would eventually become Lotus Improv, Salas noted that spreadsheets have patterns of data. A tool that could help the user recognize these patterns would help to build advanced data models quickly. With Improv, users could define and store sets of categories, then change views by dragging cat ...
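The grouping-and-aggregation idea can be sketched in plain Python. The `sales` rows and the `pivot` helper are hypothetical and stand in for what a spreadsheet or business intelligence tool does internally.

```python
from collections import defaultdict

# Hypothetical detail rows from a larger table.
sales = [
    {"region": "North", "product": "apples", "amount": 10},
    {"region": "North", "product": "pears", "amount": 5},
    {"region": "South", "product": "apples", "amount": 7},
    {"region": "North", "product": "apples", "amount": 3},
]

def pivot(rows, row_key, col_key, value_key, agg=sum):
    """Group values by (row category, column category) and aggregate each group."""
    groups = defaultdict(list)
    for r in rows:
        groups[(r[row_key], r[col_key])].append(r[value_key])
    table = defaultdict(dict)
    for (rk, ck), values in groups.items():
        table[rk][ck] = agg(values)
    return {rk: dict(cols) for rk, cols in table.items()}

summary = pivot(sales, "region", "product", "amount")
```

Passing a different `agg` function (for example `max` or `len`) changes the statistic without changing the grouping.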

Transpose

In linear algebra, the transpose of a matrix is an operator which flips a matrix over its diagonal; that is, it switches the row and column indices of the matrix $\mathbf{A}$ by producing another matrix, often denoted by $\mathbf{A}^{\operatorname{T}}$ (among other notations). The transpose of a matrix was introduced in 1858 by the British mathematician Arthur Cayley. In the case of a logical matrix representing a binary relation $R$, the transpose corresponds to the converse relation $R^{\operatorname{T}}$.

Transpose of a matrix

Definition

The transpose of a matrix $\mathbf{A}$, denoted by $\mathbf{A}^{\operatorname{T}}$, $\mathbf{A}^{\top}$, $\mathbf{A}'$, or $\mathbf{A}^{t}$ (among other notations), may be constructed by any one of the following methods:

1. Reflect $\mathbf{A}$ over its main diagonal (which runs from top-left to bottom-right) to obtain $\mathbf{A}^{\operatorname{T}}$
2. Write the rows of $\mathbf{A}$ as the columns of $\mathbf{A}^{\operatorname{T}}$
3. Write the columns of $\mathbf{A}$ as the rows of $\mathbf{A}^{\operatorname{T}}$

Formally, the $i$-th row, $j$-th column element of $\mathbf{A}^{\operatorname{T}}$ is the $j$-th row, $i$-th column element of $\mathbf{A}$:

$$\left[\mathbf{A}^{\operatorname{T}}\right]_{ij} = \left[\mathbf{A}\right]_{ji}.$$

If $\mathbf{A}$ is an $m \times n$ matrix, then $\mathbf{A}^{\operatorname{T}}$ is an $n \times m$ matrix. In the case of square matric ...
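The "rows become columns" construction can be written in a few lines of Python. This is only an illustrative sketch using nested lists; numerical libraries provide their own transpose operations.

```python
def transpose(matrix):
    """Return the transpose of a rectangular matrix given as a list of rows."""
    # zip(*matrix) walks the matrix column by column, so each column
    # of the input becomes a row of the output.
    return [list(column) for column in zip(*matrix)]

A = [[1, 2, 3],
     [4, 5, 6]]      # a 2 x 3 matrix
At = transpose(A)    # a 3 x 2 matrix
```

Element `At[j][i]` equals `A[i][j]` for every valid index pair, and transposing twice returns the original matrix.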

Surrogate Key

A surrogate key (or synthetic key, pseudokey, entity identifier, factless key, or technical key) in a database is a unique identifier for either an *entity* in the modeled world or an *object* in the database. The surrogate key is *not* derived from application data, unlike a *natural* (or *business*) key.

Definition

There are at least two definitions of a surrogate:

* Surrogate (1) – Hall, Owlett and Todd (1976): A surrogate represents an *entity* in the outside world. The surrogate is internally generated by the system but is nevertheless visible to the user or application.
* Surrogate (2) – Wieringa and De Jonge (1991): A surrogate represents an *object* in the database itself. The surrogate is internally generated by the system and is invisible to the user or application.

The *Surrogate (1)* definition relates to a data model rather than a storage model and is used throughout this article. See Date (1998). An important distinction between a su ...
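The contrast with a natural key can be sketched with Python's built-in `sqlite3` module. The `customer` table and its columns are hypothetical; the point is that `customer_id` is generated by the system, while `email` comes from application data and may change.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customer ("
    " customer_id INTEGER PRIMARY KEY AUTOINCREMENT,"  # surrogate key
    " email TEXT UNIQUE NOT NULL,"                     # natural (business) key
    " name TEXT)"
)

# The application never supplies customer_id; the database generates it.
cur = conn.execute(
    "INSERT INTO customer (email, name) VALUES (?, ?)",
    ("ada@example.com", "Ada"),
)
ada_id = cur.lastrowid

# The business data changes, but the surrogate key stays the same.
conn.execute(
    "UPDATE customer SET email = ? WHERE customer_id = ?",
    ("ada@example.org", ada_id),
)
row = conn.execute(
    "SELECT customer_id, email FROM customer WHERE name = 'Ada'"
).fetchone()
```

Rows in other tables that refer to `customer_id` would be unaffected by the change of email address.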

Record Linkage

Record linkage (also known as data matching, data linkage, entity resolution, and many other terms) is the task of finding records in a data set that refer to the same entity across different data sources (e.g., data files, books, websites, and databases). Record linkage is necessary when joining different data sets based on entities that may or may not share a common identifier (e.g., database key, URI, national identification number), which may be due to differences in record shape, storage location, or curator style or preference. A data set that has undergone record-linkage-oriented reconciliation may be referred to as being *cross-linked*.

Naming conventions

"Record linkage" is the term used by statisticians, epidemiologists, and historians, among others, to describe the process of joining records from one data source with another that describe the same entity. However, many other terms are used for this process. Unfortunately, this profusion of terminology has led to few cross-r ...
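When two sources share no common identifier, one simple approach is to normalize a descriptive field and compare it approximately. The sketch below assumes name-only matching with the standard-library `difflib.SequenceMatcher` and an arbitrary threshold of 0.85; the records, function names, and threshold are illustrative, and practical systems typically combine several fields and more careful scoring.

```python
from difflib import SequenceMatcher

def normalize(name):
    """Lowercase, drop simple punctuation, and collapse whitespace."""
    return " ".join(name.lower().replace(".", "").replace(",", "").split())

def similarity(a, b):
    """Return a similarity ratio between 0 and 1 for two names."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()

def link(left, right, threshold=0.85):
    """Pair each left record with its best right match if it is similar enough."""
    links = []
    for l in left:
        best = max(right, key=lambda r: similarity(l["name"], r["name"]))
        if similarity(l["name"], best["name"]) >= threshold:
            links.append((l["id"], best["id"]))
    return links

# Two hypothetical sources with different identifiers and spellings.
source_a = [{"id": "A1", "name": "Jon Smith"}, {"id": "A2", "name": "Wei Chen"}]
source_b = [{"id": "B1", "name": "JOHN SMITH"}, {"id": "B2", "name": "Maria Garcia"}]

links = link(source_a, source_b)
```

The first pair is linked despite the spelling difference, while "Wei Chen" has no sufficiently similar counterpart and is left unlinked.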

Join (relational Algebra)

In database theory, relational algebra is a theory that uses algebraic structures with a well-founded semantics for modeling data and defining queries on it. The theory was introduced by Edgar F. Codd. The main application of relational algebra is to provide a theoretical foundation for relational databases, particularly query languages for such databases, chief among which is SQL. Relational databases store tabular data represented as relations. Queries over relational databases often likewise return tabular data represented as relations. The main purpose of the relational algebra is to define operators that transform one or more input relations to an output relation. Given that these operators accept relations as input and produce relations as output, they can be combined and used to express potentially complex queries that transform potentially many input relations (whose data are stored in the database) into a single output relation (the query results).

Unary operators ...
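Because each operator takes relations and returns a relation, operators can be nested. The following Python sketch models relations as lists of dictionaries (an assumption made for brevity; true relations are sets of tuples) and composes a natural join with a selection and a projection. The relation contents and function names are hypothetical.

```python
def natural_join(r, s):
    """Combine tuples from r and s that agree on all attributes they share."""
    result = []
    for a in r:
        for b in s:
            common = a.keys() & b.keys()
            if all(a[k] == b[k] for k in common):
                result.append({**a, **b})
    return result

def select(relation, predicate):
    """Keep only the tuples that satisfy the predicate."""
    return [row for row in relation if predicate(row)]

def project(relation, attributes):
    """Keep only the named attributes, removing duplicate tuples."""
    seen = []
    for row in relation:
        reduced = {a: row[a] for a in attributes}
        if reduced not in seen:
            seen.append(reduced)
    return seen

employees = [
    {"name": "Ann", "dept_id": 1},
    {"name": "Bob", "dept_id": 2},
    {"name": "Cy", "dept_id": 3},
]
departments = [{"dept_id": 1, "dept": "Sales"}, {"dept_id": 2, "dept": "Ops"}]

joined = natural_join(employees, departments)
sales_names = project(select(joined, lambda t: t["dept"] == "Sales"), ["name"])
```

The employee with `dept_id` 3 has no matching department and therefore does not appear in the join result.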

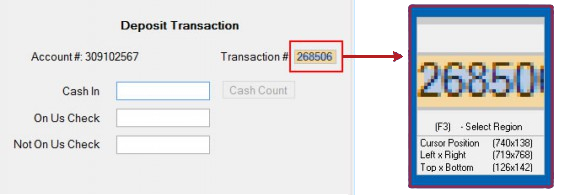

Null (SQL)

In SQL, null or NULL is a special marker used to indicate that a data value does not exist in the database. Introduced by the creator of the relational database model, E. F. Codd, SQL null serves to fulfil the requirement that all *true relational database management systems (RDBMS)* support a representation of "missing information and inapplicable information". Codd also introduced the use of the lowercase Greek omega (ω) symbol to represent null in database theory. In SQL, NULL is a reserved word used to identify this marker. A null should not be confused with a value of 0. A null value indicates a lack of a value, which is not the same thing as a value of zero. For example, consider the question "How many books does Adam own?" The answer may be "zero" (we *know* that he owns *none*) or "null" (we *do not know* how many he owns). In a database table, the column reporting this answer would start out with no value (marked by Null), and it would not be updated ...
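The difference between zero and null can be observed with Python's built-in `sqlite3` module, where Python's `None` is stored as SQL NULL. The `owner` table and its rows are invented for this sketch, which extends the book-ownership example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE owner (name TEXT, books INTEGER)")
conn.executemany(
    "INSERT INTO owner VALUES (?, ?)",
    [("Adam", None), ("Beth", 0), ("Carl", 3)],  # None is stored as NULL
)

# Known to own zero books.
zero = conn.execute("SELECT name FROM owner WHERE books = 0").fetchall()

# Number of books unknown: NULL must be tested with IS NULL.
unknown = conn.execute("SELECT name FROM owner WHERE books IS NULL").fetchall()

# Comparing with = NULL never evaluates to true, so no rows match.
eq_null = conn.execute("SELECT name FROM owner WHERE books = NULL").fetchall()

# COUNT(*) counts rows; COUNT(books) skips rows where books is NULL.
counts = conn.execute("SELECT COUNT(*), COUNT(books) FROM owner").fetchone()
```

Only Beth matches `books = 0`, only Adam matches `books IS NULL`, and the `= NULL` comparison returns no rows at all.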

Data Cleansing

Data cleansing or data cleaning is the process of detecting and correcting (or removing) corrupt or inaccurate records from a record set, table, or database. It involves identifying incomplete, incorrect, inaccurate or irrelevant parts of the data and then replacing, modifying, or deleting the dirty or coarse data. Data cleansing may be performed interactively with data wrangling tools, or as batch processing through scripting or a data quality firewall. After cleansing, a data set should be consistent with other similar data sets in the system. The inconsistencies detected or removed may have been originally caused by user entry errors, by corruption in transmission or storage, or by different data dictionary definitions of similar entities in different stores. Data cleaning differs from data validation in that validation almost invariably means data is rejected from the system at entry and is performed at the time of entry, rather than on batches of data. The actual pro ...
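A batch cleansing script might combine correction (normalizing values), removal (dropping unusable records), and de-duplication. The sketch below is a minimal illustration; the field names, the `COUNTRY_ALIASES` mapping, and the rule that an email must contain "@" are all assumptions rather than a standard.

```python
# Hypothetical mapping that reconciles different spellings of the same country.
COUNTRY_ALIASES = {"USA": "US", "U.S.": "US", "UNITED STATES": "US"}

def cleanse(records):
    """Return corrected, de-duplicated records; drop ones that cannot be repaired."""
    seen = set()
    cleaned = []
    for rec in records:
        email = rec.get("email", "").strip().lower()   # correct formatting noise
        if "@" not in email:
            continue                                   # remove: not repairable
        if email in seen:
            continue                                   # remove: duplicate entity
        seen.add(email)
        country = rec.get("country", "").strip().upper()
        country = COUNTRY_ALIASES.get(country, country)  # reconcile definitions
        cleaned.append({"email": email, "country": country})
    return cleaned

dirty = [
    {"email": " Ada@Example.com ", "country": "usa"},
    {"email": "ada@example.com", "country": "US"},
    {"email": "not-an-email", "country": "DE"},
    {"email": "bob@example.com", "country": "de"},
]
clean = cleanse(dirty)
```

Unlike validation at entry time, this runs over an existing batch and repairs what it can before discarding anything.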

Screen-scraping

Data scraping is a technique where a computer program extracts data from human-readable output coming from another program.

Description

Normally, data transfer between programs is accomplished using data structures suited for automated processing by computers, not people. Such interchange formats and protocols are typically rigidly structured, well-documented, easily parsed, and minimize ambiguity. Very often, these transmissions are not human-readable at all. Thus, the key element that distinguishes data scraping from regular parsing is that the output being scraped is intended for display to an end-user, rather than as an input to another program. It is therefore usually neither documented nor structured for convenient parsing. Data scraping often involves ignoring binary data (usually images or multimedia data), display formatting, redundant labels, superfluous commentary, and other information which is either irrelevant or hinders automated processing. Data scraping i ...
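As an illustration, the sketch below scrapes a made-up text screen that was laid out for a human reader. The `SCREEN` contents and the "Label: value" pattern are assumptions; real display output is rarely this regular, which is part of what makes scraping fragile.

```python
import re

# Hypothetical terminal screen intended for display, not for parsing.
SCREEN = """\
=== Account Summary ===
Name:      Ada Lovelace
Balance:   $1,234.50
(press F1 for help)
"""

def scrape(screen):
    """Pull 'Label: value' pairs out of display text, ignoring decoration."""
    fields = {}
    for line in screen.splitlines():
        match = re.match(r"^(\w[\w ]*?):\s+(.*\S)\s*$", line)
        if match:
            fields[match.group(1).lower()] = match.group(2)
    if "balance" in fields:
        # Strip display formatting so the value can be used as a number.
        fields["balance"] = float(fields["balance"].replace("$", "").replace(",", ""))
    return fields

account = scrape(SCREEN)
```

The banner and the help hint are skipped because they do not match the label pattern, and the currency formatting is removed before conversion.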

Web Spider

A Web crawler, sometimes called a spider or spiderbot and often shortened to crawler, is an Internet bot that systematically browses the World Wide Web and that is typically operated by search engines for the purpose of Web indexing (*web spidering*). Web search engines and some other websites use Web crawling or spidering software to update their web content or indices of other sites' web content. Web crawlers copy pages for processing by a search engine, which indexes the downloaded pages so that users can search more efficiently. Crawlers consume resources on visited systems and often visit sites unprompted. Issues of schedule, load, and "politeness" come into play when large collections of pages are accessed. Mechanisms exist for public sites not wishing to be crawled to make this known to the crawling agent. For example, including a robots.txt file can request bots to index only parts of a website, or nothing at all. The number of Internet pages is extremely large; ev ...
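A polite crawler checks a site's robots.txt rules before fetching a page. The sketch below uses Python's standard `urllib.robotparser` and parses an inline rule set instead of downloading one; the rules, the `ExampleBot` user agent, and the example.com URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a crawler would normally download this
# from the site's /robots.txt before requesting any other page.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask whether a given user agent may fetch a given URL.
public_ok = parser.can_fetch("ExampleBot", "https://example.com/index.html")
private_ok = parser.can_fetch("ExampleBot", "https://example.com/private/data.html")
```

The rules are a request rather than an enforcement mechanism, so honoring them is up to the crawler.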

ISAM

ISAM (an acronym for indexed sequential access method) is a method for creating, maintaining, and manipulating computer files of data so that records can be retrieved sequentially or randomly by one or more keys. Indexes of key fields are maintained to achieve fast retrieval of required file records in indexed files. IBM originally developed ISAM for mainframe computers, but implementations are available for most computer systems. The term *ISAM* is used for several related concepts:

* The IBM ISAM product and the algorithm it employs.
* A database system where an application developer directly uses an application programming interface to search indexes in order to locate records in data files. In contrast, a relational database uses a query optimizer which automatically selects indexes.
* An indexing algorithm that allows both sequential and keyed access to data. Most databases use some variation of the B-tree for this purpose, although the original IBM ISAM and VSAM implement ...
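The combination of keyed and sequential access can be sketched with a sorted in-memory index. This is a toy illustration, not IBM's algorithm: the `IndexedFile` class is hypothetical, keys are assumed to be unique, and a Python list stands in for the data file.

```python
import bisect

class IndexedFile:
    """Toy indexed file: a sorted key index pointing into an unsorted data area."""

    def __init__(self):
        self._data = []  # "data file": records in arrival order
        self._keys = []  # sorted key values (the index)
        self._pos = []   # record positions in _data, parallel to _keys

    def insert(self, key, record):
        """Append the record and place its key at the right spot in the index."""
        i = bisect.bisect_left(self._keys, key)
        self._data.append(record)
        self._keys.insert(i, key)
        self._pos.insert(i, len(self._data) - 1)

    def get(self, key):
        """Keyed (random) access: binary-search the index, then read the record."""
        i = bisect.bisect_left(self._keys, key)
        if i < len(self._keys) and self._keys[i] == key:
            return self._data[self._pos[i]]
        return None

    def scan(self, start_key=None):
        """Sequential access: yield records in key order from start_key onward."""
        i = 0 if start_key is None else bisect.bisect_left(self._keys, start_key)
        for j in range(i, len(self._keys)):
            yield self._keys[j], self._data[self._pos[j]]

f = IndexedFile()
for key, record in [(30, "c"), (10, "a"), (20, "b")]:
    f.insert(key, record)

found = f.get(20)
tail = list(f.scan(15))
```

Here the application searches the index directly, which mirrors the second usage of the term above, where no query optimizer chooses the index on the developer's behalf.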