|

Differentiable Neural Computer

In artificial intelligence, a differentiable neural computer (DNC) is a memory augmented artificial neural network, neural network architecture (MANN), which is typically (but not by definition) recurrent in its implementation. The model was published in 2016 by Alex Graves (computer scientist), Alex Graves et al. of DeepMind. Applications DNC indirectly takes inspiration from Von Neumann architecture, Von-Neumann architecture, making it likely to outperform conventional architectures in tasks that are fundamentally algorithmic that cannot be learned by finding a decision boundary. So far, DNCs have been demonstrated to handle only relatively simple tasks, which can be solved using conventional programming. But DNCs don't need to be programmed for each problem, but can instead be trained. This attention span allows the user to feed complex data structures such as Graph (abstract data type), graphs sequentially, and recall them for later use. Furthermore, they can learn aspects ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

DNC Training Recall Task

DNC may refer to: Business *Delaware North, a global food service and hospitality company formerly known as Delaware North Companies *Den norske Creditbank, a now-defunct Norwegian commercial bank Politics *Democratic National Committee, the principal campaign and fund-raising organization affiliated with the United States Democratic Party *Democratic National Convention, a series of national conventions held every four years since 1832 by the United States Democratic Party * Daigaku Nyushi Center, a colloquial term for the National Center for University Entrance Examinations, a Japanese government agency *Director of Naval Communications, a former United States Navy staff post *Director of Naval Construction, a former senior post in the British Admiralty *Do not call list, a registry of telephone numbers in several western countries whose owners have opted out of unsolicited telephone marketing calls **Do Not Call Register (Australia) **National Do Not Call List (Canada) **Nationa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Recurrent Neural Network

Recurrent neural networks (RNNs) are a class of artificial neural networks designed for processing sequential data, such as text, speech, and time series, where the order of elements is important. Unlike feedforward neural networks, which process inputs independently, RNNs utilize recurrent connections, where the output of a neuron at one time step is fed back as input to the network at the next time step. This enables RNNs to capture temporal dependencies and patterns within sequences. The fundamental building block of RNNs is the ''recurrent unit'', which maintains a ''hidden state''—a form of memory that is updated at each time step based on the current input and the previous hidden state. This feedback mechanism allows the network to learn from past inputs and incorporate that knowledge into its current processing. RNNs have been successfully applied to tasks such as unsegmented, connected handwriting recognition, speech recognition, natural language processing, and neural ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Locality-sensitive Hashing

In computer science, locality-sensitive hashing (LSH) is a fuzzy hashing technique that hashes similar input items into the same "buckets" with high probability. (The number of buckets is much smaller than the universe of possible input items.) Since similar items end up in the same buckets, this technique can be used for data clustering and nearest neighbor search. It differs from conventional hashing techniques in that hash collisions are maximized, not minimized. Alternatively, the technique can be seen as a way to reduce the dimensionality of high-dimensional data; high-dimensional input items can be reduced to low-dimensional versions while preserving relative distances between items. Hashing-based approximate nearest-neighbor search algorithms generally use one of two main categories of hashing methods: either data-independent methods, such as locality-sensitive hashing (LSH); or data-dependent methods, such as locality-preserving hashing (LPH). Locality-preserving hashin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Softmax Function

The softmax function, also known as softargmax or normalized exponential function, converts a tuple of real numbers into a probability distribution of possible outcomes. It is a generalization of the logistic function to multiple dimensions, and is used in multinomial logistic regression. The softmax function is often used as the last activation function of a neural network to normalize the output of a network to a probability distribution over predicted output classes. Definition The softmax function takes as input a tuple of real numbers, and normalizes it into a probability distribution consisting of probabilities proportional to the exponentials of the input numbers. That is, prior to applying softmax, some tuple components could be negative, or greater than one; and might not sum to 1; but after applying softmax, each component will be in the interval (0, 1), and the components will add up to 1, so that they can be interpreted as probabilities. Furthermore, the la ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Sigmoid Function

A sigmoid function is any mathematical function whose graph of a function, graph has a characteristic S-shaped or sigmoid curve. A common example of a sigmoid function is the logistic function, which is defined by the formula :\sigma(x) = \frac = \frac = 1 - \sigma(-x). Other sigmoid functions are given in the #Examples, Examples section. In some fields, most notably in the context of artificial neural networks, the term "sigmoid function" is used as a synonym for "logistic function". Special cases of the sigmoid function include the Gompertz curve (used in modeling systems that saturate at large values of ''x'') and the ogee curve (used in the spillway of some dams). Sigmoid functions have domain of all real numbers, with return (response) value commonly monotonically increasing but could be decreasing. Sigmoid functions most often show a return value (''y'' axis) in the range 0 to 1. Another commonly used range is from −1 to 1. A wide variety of sigmoid functions ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Cosine Similarity

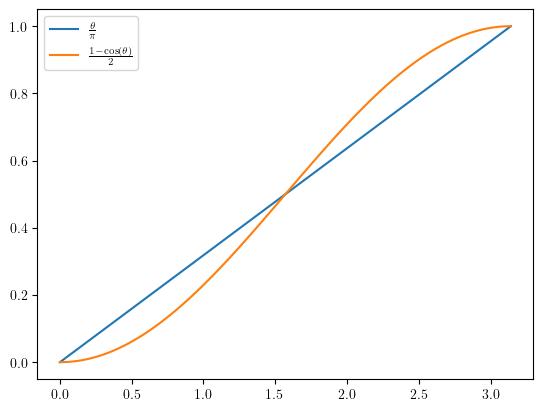

In data analysis, cosine similarity is a measure of similarity between two non-zero vectors defined in an inner product space. Cosine similarity is the cosine of the angle between the vectors; that is, it is the dot product of the vectors divided by the product of their lengths. It follows that the cosine similarity does not depend on the magnitudes of the vectors, but only on their angle. The cosine similarity always belongs to the interval 1, +1 For example, two proportional vectors have a cosine similarity of +1, two orthogonal vectors have a similarity of 0, and two opposite vectors have a similarity of −1. In some contexts, the component values of the vectors cannot be negative, in which case the cosine similarity is bounded in ,1/math>. For example, in information retrieval and text mining, each word is assigned a different coordinate and a document is represented by the vector of the numbers of occurrences of each word in the document. Cosine similarity then gives a u ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Identity Matrix

In linear algebra, the identity matrix of size n is the n\times n square matrix with ones on the main diagonal and zeros elsewhere. It has unique properties, for example when the identity matrix represents a geometric transformation, the object remains unchanged by the transformation. In other contexts, it is analogous to multiplying by the number 1. Terminology and notation The identity matrix is often denoted by I_n, or simply by I if the size is immaterial or can be trivially determined by the context. I_1 = \begin 1 \end ,\ I_2 = \begin 1 & 0 \\ 0 & 1 \end ,\ I_3 = \begin 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end ,\ \dots ,\ I_n = \begin 1 & 0 & 0 & \cdots & 0 \\ 0 & 1 & 0 & \cdots & 0 \\ 0 & 0 & 1 & \cdots & 0 \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & 0 & \cdots & 1 \end. The term unit matrix has also been widely used, but the term ''identity matrix'' is now standard. The term ''unit matrix'' is ambiguous, because it is also used for a matrix of on ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Weight Matrix

Weighted least squares (WLS), also known as weighted linear regression, is a generalization of ordinary least squares and linear regression in which knowledge of the unequal variance of observations (''heteroscedasticity'') is incorporated into the regression. WLS is also a specialization of generalized least squares, when all the off-diagonal entries of the covariance matrix of the errors, are null. Formulation The fit of a model to a data point is measured by its residual, r_i , defined as the difference between a measured value of the dependent variable, y_i and the value predicted by the model, f(x_i, \boldsymbol\beta): r_i(\boldsymbol\beta) = y_i - f(x_i, \boldsymbol\beta). If the errors are uncorrelated and have equal variance, then the function S(\boldsymbol\beta) = \sum_i r_i(\boldsymbol\beta)^2, is minimised at \boldsymbol\hat\beta, such that \frac(\hat\boldsymbol\beta) = 0. The Gauss–Markov theorem shows that, when this is so, \hat is a best linear unbiased est ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Content-based Addressing

Content-addressable memory (CAM) is a special type of computer memory used in certain very-high-speed searching applications. It is also known as associative memory or associative storage and compares input search data against a table of stored data, and returns the address of matching data. CAM is frequently used in networking devices where it speeds up forwarding information base and routing table operations. This kind of associative memory is also used in cache memory. In associative cache memory, both address and content is stored side by side. When the address matches, the corresponding content is fetched from cache memory. History Dudley Allen Buck invented the concept of content-addressable memory in 1955. Buck is credited with the idea of ''recognition unit''. Hardware associative array Unlike standard computer memory, random-access memory (RAM), in which the user supplies a memory address and the RAM returns the data word stored at that address, a CAM is designed su ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

YouTube

YouTube is an American social media and online video sharing platform owned by Google. YouTube was founded on February 14, 2005, by Steve Chen, Chad Hurley, and Jawed Karim who were three former employees of PayPal. Headquartered in San Bruno, California, it is the second-most-visited website in the world, after Google Search. In January 2024, YouTube had more than 2.7billion monthly active users, who collectively watched more than one billion hours of videos every day. , videos were being uploaded to the platform at a rate of more than 500 hours of content per minute, and , there were approximately 14.8billion videos in total. On November 13, 2006, YouTube was purchased by Google for $1.65 billion (equivalent to $ billion in ). Google expanded YouTube's business model of generating revenue from advertisements alone, to offering paid content such as movies and exclusive content produced by and for YouTube. It also offers YouTube Premium, a paid subs ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Turing Complete

Alan Mathison Turing (; 23 June 1912 – 7 June 1954) was an English mathematician, computer scientist, logician, cryptanalyst, philosopher and theoretical biologist. He was highly influential in the development of theoretical computer science, providing a formalisation of the concepts of algorithm and computation with the Turing machine, which can be considered a model of a general-purpose computer. Turing is widely considered to be the father of theoretical computer science. Born in London, Turing was raised in southern England. He graduated from King's College, Cambridge, and in 1938, earned a doctorate degree from Princeton University. During World War II, Turing worked for the Government Code and Cypher School at Bletchley Park, Britain's codebreaking centre that produced Ultra intelligence. He led Hut 8, the section responsible for German naval cryptanalysis. Turing devised techniques for speeding the breaking of German ciphers, including improvements to the ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Gradient Descent

Gradient descent is a method for unconstrained mathematical optimization. It is a first-order iterative algorithm for minimizing a differentiable multivariate function. The idea is to take repeated steps in the opposite direction of the gradient (or approximate gradient) of the function at the current point, because this is the direction of steepest descent. Conversely, stepping in the direction of the gradient will lead to a trajectory that maximizes that function; the procedure is then known as ''gradient ascent''. It is particularly useful in machine learning for minimizing the cost or loss function. Gradient descent should not be confused with local search algorithms, although both are iterative methods for optimization. Gradient descent is generally attributed to Augustin-Louis Cauchy, who first suggested it in 1847. Jacques Hadamard independently proposed a similar method in 1907. Its convergence properties for non-linear optimization problems were first studied by Has ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |