|

Data Parallelism

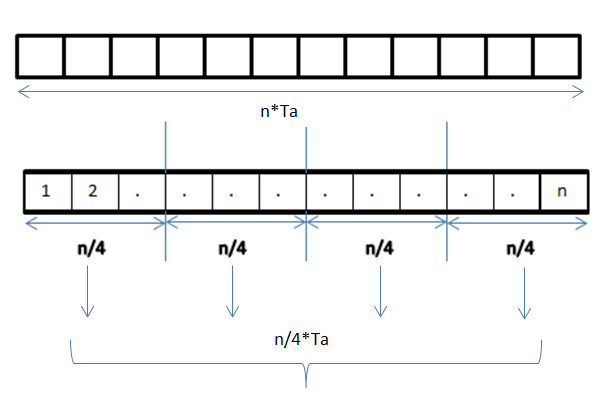

Data parallelism is parallelization across multiple processors in parallel computing environments. It focuses on distributing the data across different nodes, which operate on the data in parallel. It can be applied on regular data structures like arrays and matrices by working on each element in parallel. It contrasts to task parallelism as another form of parallelism. A data parallel job on an array of ''n'' elements can be divided equally among all the processors. Let us assume we want to sum all the elements of the given array and the time for a single addition operation is Ta time units. In the case of sequential execution, the time taken by the process will be ''n''×Ta time units as it sums up all the elements of an array. On the other hand, if we execute this job as a data parallel job on 4 processors the time taken would reduce to (''n''/4)×Ta + merging overhead time units. Parallel execution results in a speedup of 4 over sequential execution. The Locality of reference, ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Sequential Vs

In mathematics, a sequence is an enumerated collection of mathematical object, objects in which repetitions are allowed and order theory, order matters. Like a Set (mathematics), set, it contains Element (mathematics), members (also called ''elements'', or ''terms''). The number of elements (possibly infinite number, infinite) is called the ''length'' of the sequence. Unlike a set, the same elements can appear multiple times at different positions in a sequence, and unlike a set, the order does matter. Formally, a sequence can be defined as a function (mathematics), function from natural numbers (the positions of elements in the sequence) to the elements at each position. The notion of a sequence can be generalized to an indexed family, defined as a function from an ''arbitrary'' index set. For example, (M, A, R, Y) is a sequence of letters with the letter "M" first and "Y" last. This sequence differs from (A, R, M, Y). Also, the sequence (1, 1, 2, 3, 5, 8), which contains the nu ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Dot Product

In mathematics, the dot product or scalar productThe term ''scalar product'' means literally "product with a Scalar (mathematics), scalar as a result". It is also used for other symmetric bilinear forms, for example in a pseudo-Euclidean space. Not to be confused with scalar multiplication. is an algebraic operation that takes two equal-length sequences of numbers (usually coordinate vectors), and returns a single number. In Euclidean geometry, the dot product of the Cartesian coordinates of two Euclidean vector, vectors is widely used. It is often called the inner product (or rarely the projection product) of Euclidean space, even though it is not the only inner product that can be defined on Euclidean space (see ''Inner product space'' for more). It should not be confused with the cross product. Algebraically, the dot product is the sum of the Product (mathematics), products of the corresponding entries of the two sequences of numbers. Geometrically, it is the product of the Euc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

RaftLib

RaftLib is a portable parallel processing system that aims to provide extreme performance while increasing programmer productivity. It enables a programmer to assemble a massively parallel program (both local and distributed) using simple iostream-like operators. RaftLib handles threading, memory allocation, memory placement, and auto-parallelization of compute kernels. It enables applications to be constructed from chains of compute kernels forming a task and pipeline parallel compute graph. Programs are authored in C++ (although other language bindings are planned). Example Here is a Hello World example for demonstration purposes: #include #include #include #include class hi : public raft::kernel ; int main( int argc, char **argv ) References External links The RaftLib Project PageRaftLib User WikiProject GitHub RepositoryCPPNow RaftLib Tutorial SessionParallel BZip2 Implementation Using RaftLib {{Parallel computing C++ programming language family ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Threading Building Blocks

oneAPI Threading Building Blocks (oneTBB; formerly Threading Building Blocks or TBB) is a C++ template library developed by Intel for parallel programming on multi-core processors. Using TBB, a computation is broken down into tasks that can run in parallel. The library manages and schedules threads to execute these tasks. Overview A oneTBB program creates, synchronizes, and destroys graphs of dependent tasks according to ''algorithms'', i.e. high-level parallel programming paradigms (a.k.a. Algorithmic Skeletons). Tasks are then executed respecting graph dependencies. This approach groups TBB in a family of techniques for parallel programming aiming to decouple the programming from the particulars of the underlying machine. oneTBB implements work stealing to balance a parallel workload across available processing cores in order to increase core utilization and therefore scaling. Initially, the workload is evenly divided among the available processor cores. If one core com ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

OpenACC

OpenACC (for ''open accelerators'') is a programming standard for parallel computing developed by Cray, CAPS, Nvidia and PGI. The standard is designed to simplify parallel programming of heterogeneous CPU/ GPU systems. As in OpenMP, the programmer can annotate C, C++ and Fortran source code to identify the areas that should be accelerated using compiler directives and additional functions. Like OpenMP 4.0 and newer, OpenACC can target both the CPU and GPU architectures and launch computational code on them. OpenACC members have worked as members of the OpenMP standard group to merge into OpenMP specification to create a common specification which extends OpenMP to support accelerators in a future release of OpenMP. These efforts resulted in a technical report for comment and discussion timed to include the annual Supercomputing Conference (November 2012, Salt Lake City) and to address non-Nvidia accelerator support with input from hardware vendors who participate in Ope ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

CUDA

In computing, CUDA (Compute Unified Device Architecture) is a proprietary parallel computing platform and application programming interface (API) that allows software to use certain types of graphics processing units (GPUs) for accelerated general-purpose processing, an approach called general-purpose computing on GPUs. CUDA was created by Nvidia in 2006. When it was first introduced, the name was an acronym for ''Compute Unified Device Architecture'', but Nvidia later dropped the common use of the acronym and now rarely expands it. CUDA is a software layer that gives direct access to the GPU's virtual instruction set and parallel computational elements for the execution of compute kernels. In addition to drivers and runtime kernels, the CUDA platform includes compilers, libraries and developer tools to help programmers accelerate their applications. CUDA is designed to work with programming languages such as C, C++, Fortran, Python and Julia. This accessibility makes ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Message Passing Interface

The Message Passing Interface (MPI) is a portable message-passing standard designed to function on parallel computing architectures. The MPI standard defines the syntax and semantics of library routines that are useful to a wide range of users writing portable message-passing programs in C, C++, and Fortran. There are several open-source MPI implementations, which fostered the development of a parallel software industry, and encouraged development of portable and scalable large-scale parallel applications. History The message passing interface effort began in the summer of 1991 when a small group of researchers started discussions at a mountain retreat in Austria. Out of that discussion came a Workshop on Standards for Message Passing in a Distributed Memory Environment, held on April 29–30, 1992 in Williamsburg, Virginia. Attendees at Williamsburg discussed the basic features essential to a standard message-passing interface and established a working group to continu ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Circuit Simulation

Electronic circuit simulation uses mathematical models to replicate the behavior of an actual electronic device or circuit. Simulation software allows for the modeling of circuit operation and is an invaluable analysis tool. Due to its highly accurate modeling capability, many colleges and universities use this type of software for the teaching of electronics technician and electronics engineering programs. Electronics simulation software engages its users by integrating them into the learning experience. These kinds of interactions actively engage learners to analyze, synthesize, organize, and evaluate content and result in learners constructing their own knowledge. Simulating a circuit’s behavior before actually building it can greatly improve design efficiency by making faulty designs known as such, and providing insight into the behavior of electronic circuit designs. In particular, for integrated circuits, the tooling ( photomasks) is expensive, breadboards are impr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Load Balancing (computing)

In computing, load balancing is the process of distributing a set of tasks over a set of resources (computing units), with the aim of making their overall processing more efficient. Load balancing can optimize response time and avoid unevenly overloading some compute nodes while other compute nodes are left idle. Load balancing is the subject of research in the field of parallel computers. Two main approaches exist: static algorithms, which do not take into account the state of the different machines, and dynamic algorithms, which are usually more general and more efficient but require exchanges of information between the different computing units, at the risk of a loss of efficiency. Problem overview A load-balancing algorithm always tries to answer a specific problem. Among other things, the nature of the tasks, the algorithmic complexity, the hardware architecture on which the algorithms will run as well as required error tolerance, must be taken into account. Therefore com ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

SPMD

In computing, single program, multiple data (SPMD) is a term that has been used to refer to computational models for exploiting parallelism whereby multiple processors cooperate in the execution of a program in order to obtain results faster. The term SPMD was introduced in 1983 and was used to denote two different computational models: # by Michel Auguin (University of Nice Sophia-Antipolis) and François Larbey (Thomson/Sintra), as a "''fork-and-join''" and data-parallel approach where the ''parallel tasks ("single program")'' are split-up and run simultaneously ''in lockstep'' on multiple ''SIMD processors'' with different inputs, and # by Frederica Darema (IBM), where "''all (processors) processes begin executing the same program... but through synchronization directives ... self-schedule themselves to execute different instructions and act on different data''" and enabling MIMD parallelization of a given program, and is a more general approach than data-parallel a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Pseudocode

In computer science, pseudocode is a description of the steps in an algorithm using a mix of conventions of programming languages (like assignment operator, conditional operator, loop) with informal, usually self-explanatory, notation of actions and conditions. Although pseudocode shares features with regular programming languages, it is intended for human reading rather than machine control. Pseudocode typically omits details that are essential for machine implementation of the algorithm, meaning that pseudocode can only be verified by hand. The programming language is augmented with natural language description details, where convenient, or with compact mathematical notation. The reasons for using pseudocode are that it is easier for people to understand than conventional programming language code and that it is an efficient and environment-independent description of the key principles of an algorithm. It is commonly used in textbooks and scientific publications to document ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |