
Constraint-based Grammar

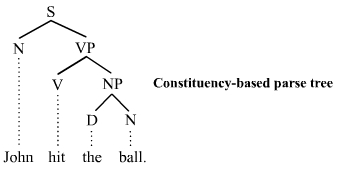

Model-theoretic grammars, also known as constraint-based grammars, contrast with generative grammars in the way they define sets of sentences: they state constraints on syntactic structure rather than providing operations for generating syntactic objects. A generative grammar provides a set of operations such as rewriting, insertion, deletion, movement, or combination, and is interpreted as a definition of the set of all and only the objects that these operations are capable of producing through iterative application. A model-theoretic grammar simply states a set of conditions that an object must meet, and can be regarded as defining the set of all and only the structures of a certain sort that satisfy all of the constraints. The approach applies the mathematical techniques of model theory to the task of syntactic description: a grammar is a theory in the logician's sense (a consistent set of statements) and the well-formed structures are the models that satisfy the theory. Exampl ...
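The contrast between the two styles of definition can be illustrated with a toy language. The following is a minimal sketch, not taken from any particular grammar framework: it assumes the string language a^n b^n (n ≥ 1) as a stand-in for "syntactic structures", and the function names `generate`, `satisfies`, and `models` are hypothetical. The generative version applies a rewriting step repeatedly; the model-theoretic version states conditions and keeps every candidate that meets all of them.

```python
from itertools import product


def generate(max_len):
    """Generative style: build strings by iterating the rules S -> a b and S -> a S b."""
    strings = set()
    current = "ab"                      # base rule: S -> a b
    while len(current) <= max_len:
        strings.add(current)
        current = "a" + current + "b"   # recursive rule: S -> a S b
    return strings


def satisfies(s):
    """Constraint style: a string is well formed if it meets every condition."""
    return (
        len(s) > 0                          # non-empty
        and set(s) <= {"a", "b"}            # only the symbols a and b
        and "ba" not in s                   # no b ever precedes an a
        and s.count("a") == s.count("b")    # equally many a's and b's
    )


def models(max_len):
    """All candidate strings up to max_len that satisfy the constraints."""
    candidates = (
        "".join(p)
        for n in range(1, max_len + 1)
        for p in product("ab", repeat=n)
    )
    return {s for s in candidates if satisfies(s)}


if __name__ == "__main__":
    print(sorted(generate(6), key=len))
    print(sorted(models(6), key=len))
```

Under these assumptions both definitions pick out the same set of strings up to the length bound, even though one is stated as a procedure and the other as a list of conditions.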

Generative Grammar

Generative grammar, or generativism, is a linguistic theory that regards linguistics as the study of a hypothesised innate grammatical structure. It is a biological or biologistic modification of earlier structuralist theories of linguistics, deriving ultimately from glossematics. Generative grammar considers grammar as a system of rules that generates exactly those combinations of words that form grammatical sentences in a given language. It is a system of explicit rules that may apply repeatedly to generate an indefinite number of sentences which can be as long as one wants them to be. The difference from structural and functional models is that the object is base-generated within the verb phrase in generative grammar. This purportedly cognitive structure is thought of as being a part of a universal grammar, a syntactic structure which is caused by a genetic mutation in humans. Generativists have created numerous theories to make the NP VP (NP) analysis work in natural la ...

Model Theory

In mathematical logic, model theory is the study of the relationship between formal theories (a collection of sentences in a formal language expressing statements about a mathematical structure), and their models (those structures in which the statements of the theory hold). The aspects investigated include the number and size of models of a theory, the relationship of different models to each other, and their interaction with the formal language itself. In particular, model theorists also investigate the sets that can be defined in a model of a theory, and the relationship of such definable sets to each other. As a separate discipline, model theory goes back to Alfred Tarski, who first used the term "Theory of Models" in publication in 1954. Since the 1970s, the subject has been shaped decisively by Saharon Shelah's stability theory. Compared to other areas of mathematical logic such as proof theory, model theory is often less concerned with formal rigour and closer in spirit ...

Theory

A theory is a rational type of abstract thinking about a phenomenon, or the results of such thinking. The process of contemplative and rational thinking is often associated with such processes as observational study or research. Theories may be scientific, belong to a non-scientific discipline, or no discipline at all. Depending on the context, a theory's assertions might, for example, include generalized explanations of how nature works. The word has its roots in ancient Greek, but in modern use it has taken on several related meanings. In modern science, the term "theory" refers to scientific theories, a well-confirmed type of explanation of nature, made in a way consistent with the scientific method, and fulfilling the criteria required by modern science. Such theories are described in such a way that scientific tests should be able to provide empirical support for them, or empirical contradiction ("falsify") of them. Scientific theories are the most reliable, rigorous, and compr ...

Structure (mathematical Logic)

In universal algebra and in model theory, a structure consists of a set along with a collection of finitary operations and relations that are defined on it. Universal algebra studies structures that generalize the algebraic structures such as groups, rings, fields and vector spaces. The term universal algebra is used for structures with no relation symbols. Model theory has a different scope that encompasses more arbitrary theories, including foundational structures such as models of set theory. From the model-theoretic point of view, structures are the objects used to define the semantics of first-order logic. For a given theory in model theory, a structure is called a model if it satisfies the defining axioms of that theory, although it is sometimes disambiguated as a ''semantic model'' when one discusses the notion in the more general setting of mathematical models. Logicians sometimes refer to structures as "interpretations", whereas the term "interpretation" generally has ...
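The idea that a structure is a model of a theory when it satisfies the theory's axioms can be checked mechanically for small finite structures. The sketch below is an illustration under stated assumptions: it takes the group axioms as the theory, represents a structure as a finite domain with one binary operation and a designated identity element, and uses the hypothetical helper name `is_group`.

```python
from itertools import product


def is_group(domain, op, identity):
    """Return True if (domain, op, identity) satisfies the group axioms."""
    # Closure: the operation never leaves the domain.
    closed = all(op(a, b) in domain for a, b in product(domain, repeat=2))
    # Associativity: (a * b) * c == a * (b * c) for all a, b, c.
    assoc = all(
        op(op(a, b), c) == op(a, op(b, c))
        for a, b, c in product(domain, repeat=3)
    )
    # Identity: e * a == a == a * e for all a.
    ident = all(op(identity, a) == a == op(a, identity) for a in domain)
    # Inverses: every a has some b with a * b == e == b * a.
    inverses = all(
        any(op(a, b) == identity == op(b, a) for b in domain)
        for a in domain
    )
    return closed and assoc and ident and inverses


if __name__ == "__main__":
    z3 = {0, 1, 2}
    # Addition modulo 3 satisfies all four axioms, so this structure is a model.
    print(is_group(z3, lambda a, b: (a + b) % 3, 0))
    # Subtraction modulo 3 on the same set fails the axioms, so it is not.
    print(is_group(z3, lambda a, b: (a - b) % 3, 0))
```

The same domain yields a model or a non-model depending on how the operation symbol is interpreted, which is the sense in which a structure supplies the semantics for the theory's language.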

Transformational Grammar

In linguistics, transformational grammar (TG) or transformational-generative grammar (TGG) is part of the theory of generative grammar, especially of natural languages. It considers grammar to be a system of rules that generate exactly those combinations of words that form grammatical sentences in a given language and involves the use of defined operations (called transformations) to produce new sentences from existing ones. The method is commonly associated with American linguist Noam Chomsky. Generative algebra was first introduced to general linguistics by the structural linguist Louis Hjelmslev although the method was described before him by Albert Sechehaye in 1908. Chomsky adopted the concept of transformations from his teacher Zellig Harris, who followed the American descriptivist separation of semantics from syntax. Hjelmslev's structuralist conception including semantics and pragmatics is incorporated into functional grammar. Historical context Transformational analysi ...

George Lakoff

George Philip Lakoff (born May 24, 1941) is an American cognitive linguist and philosopher, best known for his thesis that people's lives are significantly influenced by the conceptual metaphors they use to explain complex phenomena. The conceptual metaphor thesis, introduced in his and Mark Johnson's 1980 book ''Metaphors We Live By'', has found applications in a number of academic disciplines. Applying it to politics, literature, philosophy and mathematics has led Lakoff into territory normally considered basic to political science. In his 1996 book ''Moral Politics'', Lakoff described conservative voters as being influenced by the "strict father model" as a central metaphor for such a complex phenomenon as the state, and liberal/progressive voters as being influenced by the "nurturant parent model" as the folk psychological metaphor for this complex phen ...

Relational Grammar

In linguistics, relational grammar (RG) is a syntactic theory which argues that primitive grammatical relations provide the ideal means to state syntactic rules in universal terms. Relational grammar began as an alternative to transformational grammar. Grammatical relations hierarchy In relational grammar, constituents that serve as the arguments to predicates are numbered in what is called the grammatical relations (GR) hierarchy. This numbering system corresponds loosely to the notions of subject, direct object and indirect object. The numbering scheme is subject → (1), direct object → (2) and indirect object → (3). Other constituents (such as oblique, genitive, and object of comparative) are called ''nonterms'' (N). The predicate is marked (P). According to Geoffrey K. Pullum (1977), the GR hierarchy directly corresponds to the accessibility hierarchy: A schematic representation of a clause in this formalism might look like: Other features * Strata * Chomage (s ...

Generalized Phrase Structure Grammar

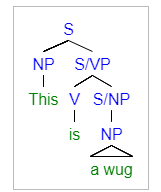

Generalized phrase structure grammar (GPSG) is a framework for describing the syntax and semantics of natural languages. It is a type of constraint-based phrase structure grammar. Constraint-based grammars are based around defining certain syntactic processes as ungrammatical for a given language and assuming everything not thus dismissed is grammatical within that language. Phrase structure grammars base their framework on constituency relationships, seeing the words in a sentence as ranked, with some words dominating the others. For example, in the sentence "The dog runs", "runs" is seen as dominating "dog" since it is the main focus of the sentence. This view stands in contrast to dependency grammars, which base their assumed structure on the relationship between a single word in a sentence (the sentence head) and its dependents. Origins GPSG was initially developed in the late 1970s by Gerald Gazdar. Other contributors include Ewan Klein, Ivan Sag, and Geoffrey Pullum. The ...

Ronald Kaplan

Ronald M. Kaplan (born 1946) has served as a Vice President at Amazon.com and Chief Scientist for Amazon Search (A9.com). He was previously Vice President and Distinguished Scientist at Nuance Communications and director of Nuance's Natural Language and Artificial Intelligence Laboratory. Prior to that he served as Chief Scientist and a Principal Researcher at the Powerset division of Microsoft Bing. He is also an Adjunct Professor in the Linguistics Department at Stanford University and a Principal of Stanford's Center for the Study of Language and Information (CSLI). He was previously a Research Fellow at the Palo Alto Research Center (formerly the Xerox Palo Alto Research Center), where he was the manager of research in Natural Language Theory and Technology. He received his bachelor's degree (1968) in Mathematics and Language Behavior from the University of California, Berkeley and his Master's (1970) and Ph.D. (1975) in Social Psychology from Harvard University. As a grad ...

Head-driven Phrase Structure Grammar

Head-driven phrase structure grammar (HPSG) is a highly lexicalized, constraint-based grammar developed by Carl Pollard and Ivan Sag. It is a type of phrase structure grammar, as opposed to a dependency grammar, and it is the immediate successor to generalized phrase structure grammar. HPSG draws from other fields such as computer science (data type theory and knowledge representation) and uses Ferdinand de Saussure's notion of the sign. It uses a uniform formalism and is organized in a modular way which makes it attractive for natural language processing. An HPSG grammar includes principles and grammar rules and lexicon entries which are normally not considered to belong to a grammar. The formalism is based on lexicalism. This means that the lexicon is more than just a list of entries; it is in itself richly structured. Individual entries are marked with types. Types form a hierarchy. Early versions of the grammar were very lexicalized with few grammatical rules (schema). More r ...

Constraint Handling Rules

Constraint Handling Rules (CHR) is a declarative, rule-based programming language introduced in 1991 by Thom Frühwirth, at the time with the European Computer-Industry Research Centre (ECRC) in Munich, Germany (Thom Frühwirth, ''Theory and Practice of Constraint Handling Rules'', Special Issue on Constraint Logic Programming, P. Stuckey and K. Marriott, Eds., Journal of Logic Programming, Vol 37(1-3), October 1998). Originally intended for constraint programming, CHR finds applications in grammar induction, type systems, abductive reasoning, multi-agent systems, natural language processing, compilation, scheduling, spatial-temporal reasoning, testing, and verification. A CHR program, sometimes called a ''constraint handler'', is a set of rules that maintain a ''constraint store'', a multi-set of logical formulas. Execution of rules may add or remove formulas from the store, thus changing the state of the program. The order in which rules "fire" on a given constraint store is non- ...
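The notion of rules that add and remove formulas from a multiset store can be emulated in a few lines. What follows is a rough Python sketch, not CHR syntax and not how any CHR implementation works internally: it assumes a store that holds only constraints of the form gcd(n) for non-negative integers n, represented as a `collections.Counter`, and hard-codes two rules often used to illustrate CHR, `gcd(0) <=> true` and `gcd(N) \ gcd(M) <=> 0 < N, N <= M | gcd(M - N)`. The function name `run_gcd_handler` is hypothetical, and the choice to try constraints in sorted order is arbitrary.

```python
from collections import Counter


def run_gcd_handler(constraints):
    """Fire the two gcd rules on a multiset of gcd(n) constraints until none applies."""
    store = Counter(constraints)   # the constraint store: value n -> number of gcd(n) copies
    changed = True
    while changed:
        changed = False
        # Rule 1: gcd(0) <=> true. Remove every gcd(0) from the store.
        if store[0] > 0:
            del store[0]
            changed = True
            continue
        # Rule 2: gcd(n) \ gcd(m) <=> 0 < n <= m | gcd(m - n).
        # Keep gcd(n), remove gcd(m), add gcd(m - n).
        for n in sorted(store):
            for m in sorted(store):
                # The rule needs two distinct constraint occurrences.
                if n <= m and (n != m or store[n] >= 2):
                    store[m] -= 1
                    if store[m] == 0:
                        del store[m]
                    store[m - n] += 1
                    changed = True
                    break
            if changed:
                break
    return store


if __name__ == "__main__":
    print(run_gcd_handler([12, 18]))
    print(run_gcd_handler([9, 6, 15]))
```

Each pass through the loop changes the state of the store by firing one rule. When no rule applies, the single remaining constraint carries the greatest common divisor of the initial values.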