
Complexity

Complexity characterizes the behavior of a system or model whose components interact in multiple ways and follow local rules, leading to non-linearity, randomness, collective dynamics, hierarchy, and emergence. The term is generally used to characterize something with many parts where those parts interact with each other in multiple ways, culminating in a higher order of emergence greater than the sum of its parts. The study of these complex linkages at various scales is the main goal of complex systems theory. The intuitive criterion of complexity can be formulated as follows: a system is more complex if more parts can be distinguished in it and if more connections exist between them. A number of approaches to characterizing complexity have been used in science; Zayed et al. reflect many of these. Neil Johnson states that "even among scientists, there is no unique definition of complexity – and the scientific notion has traditionally been conveyed using partic ...

Complex Systems Theory

A complex system is a system composed of many components that may interact with one another. Examples of complex systems are Earth's global climate, organisms, the human brain, infrastructure such as power grids, transportation or communication systems, complex software and electronic systems, social and economic organizations (like cities), an ecosystem, a living cell, and, ultimately, for some authors, the entire universe. The behavior of a complex system is intrinsically difficult to model due to the dependencies, competitions, relationships, and other types of interactions between its parts or between a given system and its environment. Systems that are "complex" have distinct properties that arise from these relationships, such as nonlinearity, emergence, spontaneous order, adaptation, and feedback loops, among others. Because such systems appear in a wide variety of fields, the commonalities among them have become the topic of their own independent area of research. In ...

Computational Complexity Theory

In theoretical computer science and mathematics, computational complexity theory focuses on classifying computational problems according to their resource usage, and explores the relationships between these classifications. A computational problem is a task solved by a computer. A computational problem is solvable by mechanical application of mathematical steps, such as an algorithm. A problem is regarded as inherently difficult if its solution requires significant resources, whatever the algorithm used. The theory formalizes this intuition by introducing mathematical models of computation to study these problems and quantifying their computational complexity, i.e., the amount of resources needed to solve them, such as time and storage. Other measures of complexity are also used, such as the amount of communication (used in communication complexity), the number of gates in a circuit (used in circuit complexity) and the number of processors (used in parallel computing). O ...

Computational Complexity

In computer science, the computational complexity or simply complexity of an algorithm is the amount of resources required to run it. Particular focus is given to computation time (generally measured by the number of needed elementary operations) and memory storage requirements. The complexity of a problem is the complexity of the best algorithms that allow solving the problem. The study of the complexity of explicitly given algorithms is called analysis of algorithms, while the study of the complexity of problems is called computational complexity theory. Both areas are highly related, as the complexity of an algorithm is always an upper bound on the complexity of the problem solved by this algorithm. Moreover, for designing efficient algorithms, it is often fundamental to compare the complexity of a specific algorithm to the complexity of the problem to be solved. Also, in most cases, the only thing that is known about the complexity of a problem is that it is lower than the ...
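To make "number of needed elementary operations" concrete, here is a minimal sketch that counts the probes made by two search algorithms on the same sorted list. The function names and the choice of searching as the example problem are illustrative assumptions, not something the summary above prescribes.

```python
def linear_search_steps(items, target):
    """Scan left to right; return (index, number of elements examined)."""
    probes = 0
    for index, value in enumerate(items):
        probes += 1
        if value == target:
            return index, probes
    return -1, probes


def binary_search_steps(sorted_items, target):
    """Halve the search interval each round; return (index, number of probes)."""
    lo, hi = 0, len(sorted_items) - 1
    probes = 0
    while lo <= hi:
        mid = (lo + hi) // 2
        probes += 1
        if sorted_items[mid] == target:
            return mid, probes
        if sorted_items[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return -1, probes


if __name__ == "__main__":
    data = list(range(1024))
    # Searching for the last element is the worst case for the linear scan.
    print(linear_search_steps(data, 1023))
    print(binary_search_steps(data, 1023))
```

On a sorted list of n elements the linear scan examines up to n elements, while binary search needs about log2(n) probes. Either count is an upper bound on the complexity of the search problem itself, in the sense described above.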

Chaos Theory

Chaos theory is an interdisciplinary area of scientific study and a branch of mathematics. It focuses on underlying patterns and deterministic laws of dynamical systems that are highly sensitive to initial conditions. These were once thought to have completely random states of disorder and irregularities. Chaos theory states that within the apparent randomness of chaotic complex systems, there are underlying patterns, interconnection, constant feedback loops, repetition, self-similarity, fractals and self-organization. The butterfly effect, an underlying principle of chaos, describes how a small change in one state of a deterministic nonlinear system can result in large differences in a later state (meaning there is sensitive dependence on initial conditions). A metaphor for this behavior is that a butterfly flapping its wings in Brazil can cause or prevent a tornado in Texas.
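Sensitive dependence on initial conditions can be observed numerically. The sketch below uses the logistic map x → r·x·(1 − x) with r = 4, a standard textbook example of a deterministic nonlinear system; it is chosen here purely for illustration and is not mentioned in the summary above. The function names, starting values, and step count are likewise assumptions.

```python
def logistic_trajectory(x0, steps, r=4.0):
    """Iterate the logistic map x -> r * x * (1 - x) and return all states."""
    states = [x0]
    for _ in range(steps):
        states.append(r * states[-1] * (1.0 - states[-1]))
    return states


def max_separation(x0, perturbation, steps):
    """Largest gap between two trajectories whose starts differ slightly."""
    a = logistic_trajectory(x0, steps)
    b = logistic_trajectory(x0 + perturbation, steps)
    return max(abs(p - q) for p, q in zip(a, b))


if __name__ == "__main__":
    # Two starting points that differ by one part in ten billion.
    for steps in (10, 30, 60):
        print(steps, max_separation(0.2, 1e-10, steps))
```

Both trajectories follow exactly the same deterministic rule, yet the gap between them grows from 1e-10 to the same order of magnitude as the states themselves within a few dozen iterations.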

Emergence

In philosophy, systems theory, science, and art, emergence occurs when a complex entity has properties or behaviors that its parts do not have on their own, and that emerge only when they interact in a wider whole. Emergence plays a central role in theories of integrative levels and of complex systems. For instance, the phenomenon of life as studied in biology is an emergent property of chemistry and physics. In philosophy, theories that emphasize emergent properties have been called emergentism. Philosophers often understand emergence as a claim about the etiology of a system's properties. An emergent property of a system, in this context, is one that is not a property of any component of that system, but is still a feature of the system as a whole. Nicolai Hartmann (1882–1950), one of the first modern philosophers to write on emergence, termed this a categorial novum (new category). This concept of emergence dates from at least the time of ...

Self-organization

Self-organization, also called spontaneous order in the social sciences, is a process where some form of overall order arises from local interactions between parts of an initially disordered system. The process can be spontaneous when sufficient energy is available, not needing control by any external agent. It is often triggered by seemingly random fluctuations, amplified by positive feedback. The resulting organization is wholly decentralized, distributed over all the components of the system. As such, the organization is typically robust and able to survive or self-repair substantial perturbation. Chaos theory discusses self-organization in terms of islands of predictability in a sea of chaotic unpredictability. Self-organization occurs in many physical, chemical, biological, robotic, and cognitive systems. Examples of ...

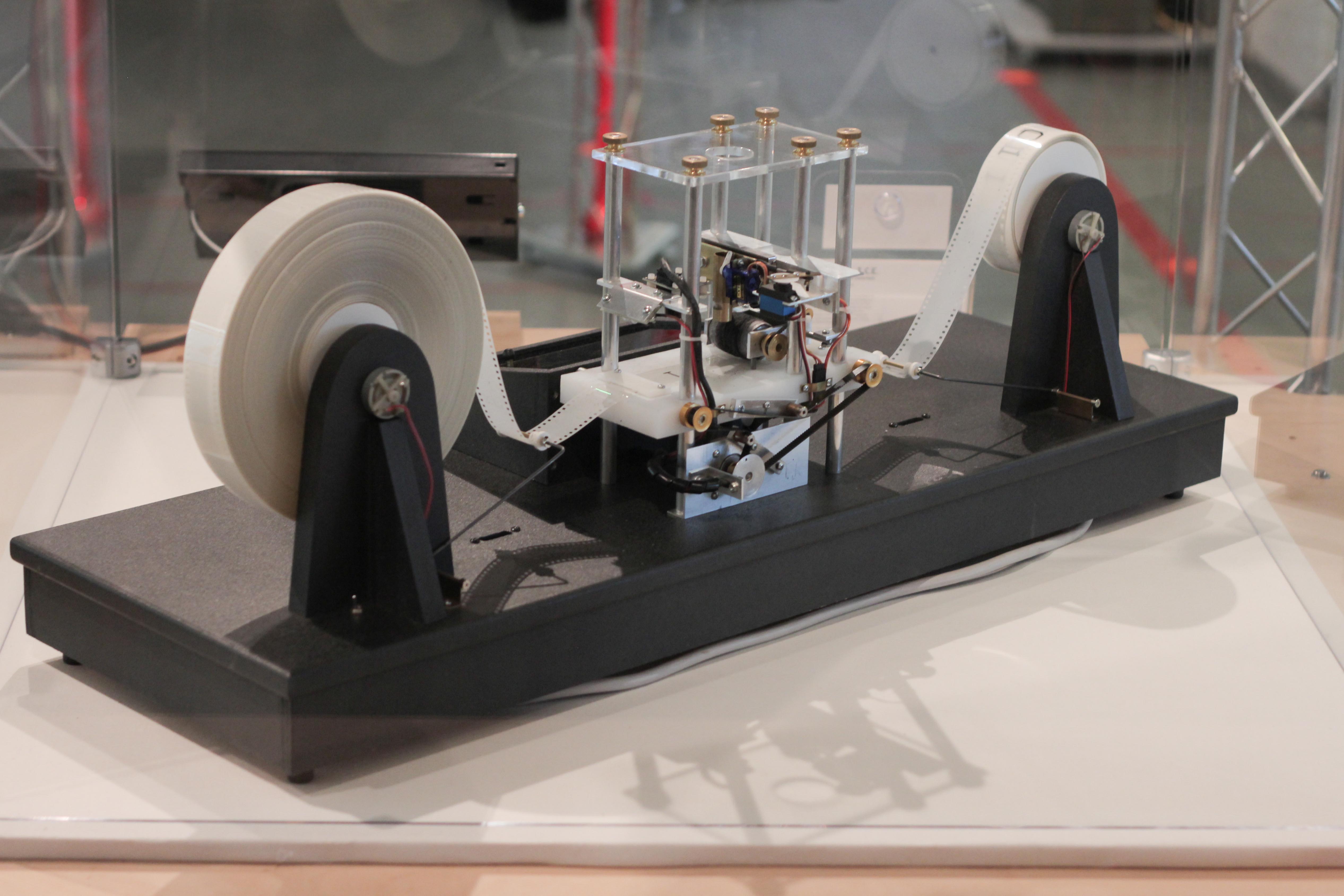

Turing Machine

A Turing machine is a mathematical model of computation describing an abstract machine that manipulates symbols on a strip of tape according to a table of rules. Despite the model's simplicity, it is capable of implementing any computer algorithm. The machine operates on an infinite memory tape divided into discrete cells, each of which can hold a single symbol drawn from a finite set of symbols called the alphabet of the machine. It has a "head" that, at any point in the machine's operation, is positioned over one of these cells, and a "state" selected from a finite set of states. At each step of its operation, the head reads the symbol in its cell. Then, based on the symbol and the machine's own present state, the machine writes a symbol into the same cell, and moves the head one step to the left or the right, or halts the computation. The choice of which replacement symbol to write, which direction to move the head, and whet ...
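The read, write, and move cycle described above can be sketched as a small simulator. This is a minimal illustration under stated assumptions: the rule-table encoding, the blank symbol `_`, the `max_steps` safety limit, and the bit-flipping example machine are all hypothetical choices made for this sketch, not part of any standard definition.

```python
BLANK = "_"  # assumed symbol for cells that have never been written


def run_turing_machine(rules, tape_input, start_state, halt_states, max_steps=10_000):
    """Run a single-tape machine and return the final tape contents.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L" or "R".
    """
    # A dict keyed by cell position stands in for the unbounded tape.
    tape = {position: symbol for position, symbol in enumerate(tape_input)}
    head, state = 0, start_state
    for _ in range(max_steps):
        if state in halt_states:
            break
        symbol = tape.get(head, BLANK)             # read the cell under the head
        write, move, state = rules[(state, symbol)]
        tape[head] = write                         # write into the same cell
        head += 1 if move == "R" else -1           # move one step right or left
    else:
        raise RuntimeError("step limit reached before the machine halted")
    return "".join(tape[p] for p in sorted(tape)).strip(BLANK)


# Example rule table: invert every bit, then halt on the first blank cell.
FLIP_RULES = {
    ("flip", "0"): ("1", "R", "flip"),
    ("flip", "1"): ("0", "R", "flip"),
    ("flip", BLANK): (BLANK, "R", "halt"),
}

if __name__ == "__main__":
    print(run_turing_machine(FLIP_RULES, "1011", "flip", {"halt"}))
```

The step limit exists only because a simulator cannot know in advance whether a given rule table ever halts.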

Simplicity

Simplicity is the state or quality of being simple. Something easy to understand or explain seems simple, in contrast to something complicated. Alternatively, as Herbert A. Simon suggests, something is simple or complex depending on the way we choose to describe it. In some uses, the label "simplicity" can imply beauty, purity, or clarity. In other cases, the term may suggest a lack of nuance or complexity relative to what is required. The concept of simplicity is related to the field of epistemology and philosophy of science (e.g., in Occam's razor). Religions also reflect on simplicity with concepts such as divine simplicity. In human lifestyles, simplicity can denote freedom from excessive possessions or distractions, such as having a simple living style. In some cases, the term may have negative connotations, as when referring to someone as a simpleton. There is a widespread philosophical presumption t ...

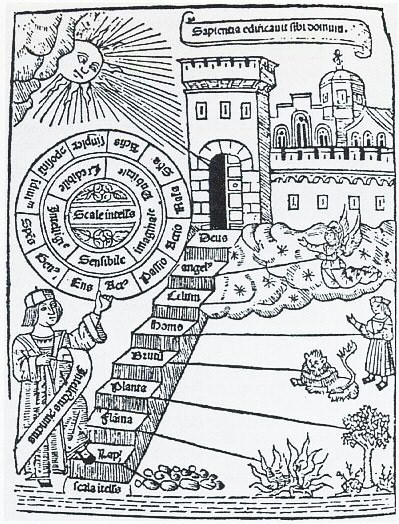

Hierarchy

A hierarchy (from Greek hierarkhia, from hierarkhes, 'president of sacred rites') is an arrangement of items (objects, names, values, categories, etc.) that are represented as being "above", "below", or "at the same level as" one another. Hierarchy is an important concept in a wide variety of fields, such as architecture, philosophy, design, mathematics, computer science, organizational theory, systems theory, systematic biology, and the social sciences (especially political science). A hierarchy can link entities either directly or indirectly, and either vertically or diagonally. The only direct links in a hierarchy, insofar as they are hierarchical, are to one's immediate superior or to one of one's subordinates, although a system that is largely hierarchical can also incorporate alternative hierarchies. Hierarchical links can extend "vertically" upwards or downwards via multiple links in the same direction, following a path. All parts of the hierarchy that are ...

System

A system is a group of interacting or interrelated elements that act according to a set of rules to form a unified whole. A system, surrounded and influenced by its environment, is described by its boundaries, structure and purpose and is expressed in its functioning. Systems are the subjects of study of systems theory and other systems sciences. Systems have several common properties and characteristics, including structure, function(s), behavior and interconnectivity. The term system comes from the Latin word systēma, in turn from Greek systēma: "whole concept made of several parts or members, system", literary "composition" ("σύστημα", Henry George Liddell, Robert Scott, A Greek–English Lexicon, on Pers ...

Model (abstract)

The term conceptual model refers to any model that is formed after a conceptualization or generalization process. Conceptual models are often abstractions of things in the real world, whether physical or social. Semantic studies are relevant to various stages of concept formation. Semantics is fundamentally a study of concepts, the meaning that thinking beings give to various elements of their experience. The value of a conceptual model is usually directly proportional to how well it corresponds to a past, present, future, actual or potential state of affairs. A concept model (a model of a concept) is quite different because in order to be a good model it need not have this real world correspondence. In artificial intelligence, conceptual models and conceptual graphs are used for building expert systems and knowledge-based systems; here the analysts are concerned to repres ...

Random Access Machine

In computer science, a random-access machine (RAM or RA-machine) is a model of computation that describes an abstract machine in the general class of register machines. The RA-machine is very similar to the counter machine but with the added capability of "indirect addressing" of its registers. The registers are intuitively equivalent to the main memory of a common computer, except for the additional ability of registers to store natural numbers of any size. Like the counter machine, the RA-machine contains the execution instructions in the finite-state portion of the machine (the so-called Harvard architecture). The RA-machine's equivalent of the universal Turing machine, with its program in the registers as well as its data, is called the random-access stored-program machine or RASP-machine. It is an example of the so-called von Neumann architecture and is closest to the common notion of a computer. Together with the Turing machine and counter-machine models, the RA-machine and ...
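The following is a minimal sketch of a register machine with indirect addressing, intended only to illustrate the idea. The instruction names (`INC`, `DEC`, `ADD`, `LOAD_IND`, `JZ`, `JMP`, `HALT`), the tuple encoding, and the example program are hypothetical; formal RA-machine definitions vary in their instruction sets. The program lives in a separate list from the registers, in the spirit of the Harvard-style separation mentioned above.

```python
from collections import defaultdict


def run_ram(program, initial_registers, max_steps=10_000):
    """Interpret a tiny, hypothetical RA-machine instruction set.

    Registers hold natural numbers of any size (Python ints are unbounded)
    and default to 0. Returns the registers as a plain dict on HALT.
    """
    regs = defaultdict(int, initial_registers)
    pc = 0  # program counter: index of the next instruction
    for _ in range(max_steps):
        op, *args = program[pc]
        pc += 1
        if op == "HALT":
            return dict(regs)
        elif op == "INC":
            regs[args[0]] += 1
        elif op == "DEC":
            regs[args[0]] = max(0, regs[args[0]] - 1)  # stay within the naturals
        elif op == "ADD":
            regs[args[0]] += regs[args[1]]
        elif op == "LOAD_IND":
            # Indirect addressing: register args[1] holds the *address* to read.
            regs[args[0]] = regs[regs[args[1]]]
        elif op == "JZ":
            if regs[args[0]] == 0:
                pc = args[1]
        elif op == "JMP":
            pc = args[0]
        else:
            raise ValueError(f"unknown instruction: {op}")
    raise RuntimeError("step limit reached before HALT")


# Sum the values stored in registers 10..12.
# r0 = accumulator, r1 = pointer, r2 = remaining count, r3 = scratch.
SUM_PROGRAM = [
    ("JZ", 2, 6),        # 0: nothing left to add, jump to HALT
    ("LOAD_IND", 3, 1),  # 1: r3 = contents of the register r1 points to
    ("ADD", 0, 3),       # 2: r0 += r3
    ("INC", 1),          # 3: advance the pointer
    ("DEC", 2),          # 4: one fewer item remaining
    ("JMP", 0),          # 5: loop
    ("HALT",),           # 6
]

if __name__ == "__main__":
    result = run_ram(SUM_PROGRAM, {1: 10, 2: 3, 10: 5, 11: 7, 12: 2**100})
    print(result[0])
```

Without `LOAD_IND`, the program would need a separate instruction for each register it reads; with it, one loop can walk over any number of registers.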