
Clinically Significant

In medicine and psychology, clinical significance is the practical importance of a treatment effect: whether it has a real, palpable, noticeable effect on daily life. Among the types of significance, statistical significance is used in hypothesis testing, whereby the null hypothesis (that there is no relationship between variables) is tested. A level of significance is selected (most commonly α = 0.05 or 0.01), which signifies the probability of incorrectly rejecting a true null hypothesis. If there is a significant difference between two groups at α = 0.05, it means that, under the assumption that the difference is entirely due to chance (i.e., the null hypothesis is true), there is at most a 5% probability of obtaining results at least as extreme as those observed; it gives no indication of the magnitude or clinical importance of the difference. When statistically significant results are achieved, they favor rejection of the null hypothesis ...
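
A minimal Python sketch of why a significant result says nothing about magnitude, assuming a pooled two-proportion z-test (normal approximation) as the test. The function name `two_proportion_p_value` and all counts are hypothetical illustrations, not taken from the text: the same one-percentage-point difference is tested once with 100,000 patients per arm and once with 100 per arm.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(x1: int, n1: int, x2: int, n2: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test (normal approximation)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)  # common proportion assumed under the null hypothesis
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


alpha = 0.05
# Hypothetical: 51% vs 50% of patients improve, in a very large and in a small trial.
p_large = two_proportion_p_value(51_000, 100_000, 50_000, 100_000)
p_small = two_proportion_p_value(51, 100, 50, 100)
print(f"large trial: p = {p_large:.2g}, significant: {p_large <= alpha}")
print(f"small trial: p = {p_small:.2g}, significant: {p_small <= alpha}")
```

In both trials the absolute difference is the same one percentage point; only the sample size changes, which is why clinical importance has to be judged separately, for example with an effect size.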

Hypothesis Testing

A statistical hypothesis test is a method of statistical inference used to decide whether the data at hand sufficiently support a particular hypothesis. Hypothesis testing allows us to make probabilistic statements about population parameters. Historically, early forms of hypothesis testing were used in the 1700s, although the method was popularized only early in the 20th century. The first use is credited to John Arbuthnot (1710), followed by Pierre-Simon Laplace (1770s), in analyzing the human sex ratio at birth. Modern significance testing is largely the product of Karl Pearson (p-value, Pearson's chi-squared test), William Sealy Gosset (Student's t-distribution), and Ronald Fisher ("null hypothesis", analysis of variance, "significance test"), while hypothesis testing was developed by Jerzy Neyman and Egon Pearson (son of Karl). Ronald Fisher began his life in statistics as a Bayesian (Zabell 1992), but Fisher soon grew disenchanted with t ...

Null Hypothesis

In scientific research, the null hypothesis (often denoted H₀) is the claim that no difference or relationship exists between two sets of data or variables being analyzed. The null hypothesis is that any experimentally observed difference is due to chance alone and that no underlying causative relationship exists, hence the term "null". In addition to the null hypothesis, an alternative hypothesis is also developed, which claims that a relationship does exist between two variables. The null hypothesis and the alternative hypothesis are types of conjectures used in statistical tests, which are formal methods of reaching conclusions or making decisions on the basis of data. The hypotheses are conjectures about a statistical model of the population and are evaluated using a sample from that population. The tests are core elements of statistical inference, heavily used in the interpretation of scientific experimental data, to separate scientific claims fr ...

Dependent And Independent Variables

Dependent and independent variables are variables in mathematical modeling, statistical modeling and experimental sciences. Dependent variables receive this name because, in an experiment, their values are studied under the supposition or demand that they depend, by some law or rule (e.g., by a mathematical function), on the values of other variables. Independent variables, in turn, are not seen as depending on any other variable in the scope of the experiment in question. Common independent variables include time, space, density, mass, fluid flow rate, and previous values of some observed quantity of interest (e.g., human population size) used to predict its future values (the dependent variable). Of the two, it is always the dependent variable whose variation is studied, by altering the inputs (also known as regressors in a statistical context). In an experiment, any variable that can be attributed a value without attributing a value to any other variable is called an in ...

Level Of Significance

In statistical hypothesis testing, a type I error is the mistaken rejection of an actually true null hypothesis (also known as a "false positive" finding or conclusion; example: "an innocent person is convicted"), while a type II error is the failure to reject a null hypothesis that is actually false (also known as a "false negative" finding or conclusion; example: "a guilty person is not convicted"). Much of statistical theory revolves around the minimization of one or both of these errors, though the complete elimination of either is a statistical impossibility if the outcome is not determined by a known, observable causal process. Choosing a low threshold (cut-off) value for the significance level α keeps the type I error rate low, which is one way the quality of a hypothesis test can be increased. Type I and type II errors are widely analyzed in medical science, biometrics and computer science. Intuitively, type I errors can be thought of as errors of commission, i.e. the researcher unluck ...
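
A minimal Monte Carlo sketch in Python, assuming a two-sided one-sample z-test of H₀: mean = 0 on normal data with known standard deviation 1 (a setup chosen only for illustration; `z_test_rejects` and `rejection_rate` are hypothetical helpers). When H₀ is true, every rejection is a type I error, so the rejection rate should sit near α. When the true mean is 0.5, every non-rejection is a type II error. Re-running the false-null case with a stricter α = 0.01 on the same simulated data shows the trade-off between the two errors.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean


def z_test_rejects(sample: list[float], alpha: float, sigma: float = 1.0) -> bool:
    """Two-sided one-sample z-test of H0: mean == 0, assuming known sigma."""
    z = fmean(sample) / (sigma / sqrt(len(sample)))
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_value <= alpha


def rejection_rate(true_mean: float, alpha: float = 0.05, n: int = 30,
                   trials: int = 10_000, seed: int = 1) -> float:
    """Fraction of simulated studies (normal data, sd 1) in which H0 is rejected."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, 1.0) for _ in range(n)]
        rejections += z_test_rejects(sample, alpha)
    return rejections / trials


type_i_rate = rejection_rate(true_mean=0.0)        # H0 true: rejections are false positives
type_ii_rate = 1 - rejection_rate(true_mean=0.5)   # H0 false: non-rejections are false negatives
type_ii_strict = 1 - rejection_rate(true_mean=0.5, alpha=0.01)  # same data, stricter alpha
print(f"type I rate ~ {type_i_rate:.3f}, type II rate ~ {type_ii_rate:.3f} (alpha=0.05)")
print(f"type II rate ~ {type_ii_strict:.3f} (alpha=0.01)")
```

Because the seed is fixed, both false-null runs see identical samples, so lowering α can only turn rejections into non-rejections: fewer false positives are bought with more false negatives at a fixed sample size.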

Effect Size

In statistics, an effect size is a value measuring the strength of the relationship between two variables in a population, or a sample-based estimate of that quantity. It can refer to the value of a statistic calculated from a sample of data, the value of a parameter for a hypothetical population, or the equation that operationalizes how statistics or parameters lead to the effect size value. Examples of effect sizes include the correlation between two variables, the regression coefficient in a regression, the mean difference, or the risk of a particular event (such as a heart attack) happening. Effect sizes complement statistical hypothesis testing, and play an important role in power analyses, sample size planning, and in meta-analyses. The cluster of data-analysis methods concerning effect sizes is referred to as estimation statistics. Effect size is an essential component when evaluating the strength of a statistical claim, and it is the first item (magnitude) in the MAGI ...
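
One common standardized form of the mean difference listed above is Cohen's d (not named in the text; chosen here as an illustration): the difference in means divided by the pooled sample standard deviation. A minimal Python sketch with hypothetical scores; `cohens_d` is an illustrative helper, not a library function.

```python
from math import sqrt
from statistics import fmean, variance


def cohens_d(group_a: list[float], group_b: list[float]) -> float:
    """Mean difference divided by the pooled sample standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    return (fmean(group_a) - fmean(group_b)) / sqrt(pooled_var)


treated = [5.0, 6.0, 7.0, 8.0, 9.0]   # hypothetical scores
control = [4.0, 5.0, 6.0, 7.0, 8.0]
raw_difference = fmean(treated) - fmean(control)  # unstandardized effect size
d = cohens_d(treated, control)
print(f"mean difference = {raw_difference}, Cohen's d = {d:.3f}")
```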

Number Needed To Treat

The number needed to treat (NNT) or number needed to treat for an additional beneficial outcome (NNTB) is an epidemiological measure used in communicating the effectiveness of a health-care intervention, typically a treatment with medication. The NNT is the average number of patients who need to be treated to prevent one additional bad outcome (e.g., the number of patients that need to be treated for one of them to benefit compared with a control in a clinical trial). It is defined as the inverse of the absolute risk reduction, NNT = 1/(I_u - I_e), where I_e is the incidence in the treated (exposed) group and I_u is the incidence in the control (unexposed) group. This calculation implicitly assumes monotonicity, that is, that no individual can be harmed by treatment. The modern approach, based on counterfactual conditionals, relaxes this assumption and yields bounds on the NNT. A type of effect size, the NNT was described in 1988 by McMaster University's Laupacis, Sackett and ...
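
The NNT formula translates directly into code. A minimal Python sketch with hypothetical incidences (20% of control patients and 15% of treated patients have the bad outcome). The function name and the choice to raise an error when there is no risk reduction are assumptions of this sketch, not part of the definition.

```python
def number_needed_to_treat(incidence_control: float, incidence_treated: float) -> float:
    """NNT = 1 / (I_u - I_e): the inverse of the absolute risk reduction."""
    absolute_risk_reduction = incidence_control - incidence_treated
    if absolute_risk_reduction <= 0:
        # Assumption of this sketch: without a risk reduction there is no finite NNT to report.
        raise ValueError("no absolute risk reduction; NNT is not defined here")
    return 1 / absolute_risk_reduction


nnt = number_needed_to_treat(0.20, 0.15)  # absolute risk reduction = 0.05
print(f"NNT = {nnt:.1f}")
```

Here about 20 patients must be treated for one additional patient to avoid the bad outcome.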

Preventive Fraction

In epidemiology, the preventable fraction among the unexposed (PFu) is the proportion of incidents in the unexposed group that could be prevented by exposure. It is calculated as PF_u = (I_u - I_e)/I_u = 1 - RR, where I_e is the incidence in the exposed group, I_u is the incidence in the unexposed group, and RR is the relative risk. It is synonymous with the relative risk reduction. It is used when an exposure reduces the risk, as opposed to increasing it; for an exposure that increases the risk, the symmetrical notion is the attributable fraction among the exposed. See also: Population Impact Measures; Preventable fraction for the population. In epidemiology, the preventable fraction for the population (PFp) is the proportion of incidents in the population that could be prevented by exposing the whole population. It is calculated as PF_p = (I_p - I_e)/I_p, where I_e is the incidence in the ...
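
A minimal Python sketch of both fractions, with hypothetical incidences. It also checks the identity PF_u = 1 - RR, taking RR = I_e/I_u. Because the definition of I_p is cut off above, this sketch assumes I_p is the incidence in the whole population; the function names are illustrative.

```python
def preventable_fraction_unexposed(i_u: float, i_e: float) -> float:
    """PF_u = (I_u - I_e) / I_u for an exposure that lowers risk."""
    return (i_u - i_e) / i_u


def preventable_fraction_population(i_p: float, i_e: float) -> float:
    """PF_p = (I_p - I_e) / I_p; I_p assumed to be the overall population incidence."""
    return (i_p - i_e) / i_p


i_u, i_e, i_p = 0.20, 0.15, 0.18  # hypothetical incidences
relative_risk = i_e / i_u
pf_u = preventable_fraction_unexposed(i_u, i_e)
print(f"PF_u = {pf_u:.3f}, 1 - RR = {1 - relative_risk:.3f}")
print(f"PF_p = {preventable_fraction_population(i_p, i_e):.3f}")
```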

Psychological Assessment

Psychological evaluation is a method to assess an individual's behavior, personality, cognitive abilities, and several other domains. A common reason for a psychological evaluation is to identify psychological factors that may be inhibiting a person's ability to think, behave, or regulate emotion functionally or constructively. It is the mental equivalent of a physical examination. Other psychological evaluations seek to better understand the individual's unique characteristics or personality to predict things like workplace performance or customer relationship management. Modern psychological evaluation has been around for roughly 200 years, with roots that stem as far back as 2200 B.C. [Gregory, R. J. (2010). Psychological testing: History, principles, and applications (7th ed., pp. 1-29). Boston, MA: Allyn & Bacon.] It started in China, and many psychologists throughout Europe worked to develop methods of testing into the 1900s. The first tests focused on apt ...

Statistical Significance

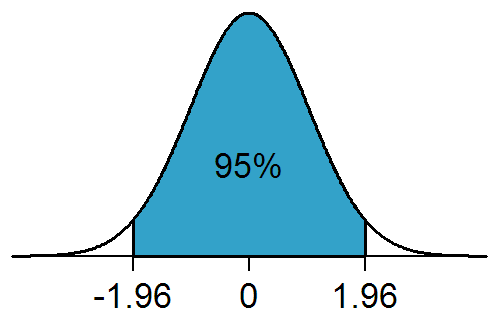

In statistical hypothesis testing, a result has statistical significance when it is very unlikely to have occurred given the null hypothesis (simply by chance alone). More precisely, a study's defined significance level, denoted by α, is the probability of the study rejecting the null hypothesis, given that the null hypothesis is true; and the p-value of a result, p, is the probability of obtaining a result at least as extreme, given that the null hypothesis is true. The result is statistically significant, by the standards of the study, when p ≤ α. The significance level for a study is chosen before data collection, and is typically set to 5% or much lower, depending on the field of study. In any experiment or observation that involves drawing a sample from a population, there is always the possibility that an observed effect would have occurred due to sampling error alone. But if the p-value of an observed effect is less than (or equal to) the significanc ...
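
A minimal Python sketch of the decision rule p ≤ α, using an exact one-sided binomial test as the example (an illustrative choice; the text does not prescribe a particular test). Under a hypothetical null hypothesis that a coin is fair, it computes the probability of at least 60 heads in 100 flips, a result at least as extreme as the one observed, and compares it with an α fixed in advance. The helper name `binomial_p_value_upper` is hypothetical.

```python
from math import comb


def binomial_p_value_upper(successes: int, trials: int, p_null: float = 0.5) -> float:
    """One-sided p-value: P(X >= successes) when X ~ Binomial(trials, p_null) under H0."""
    return sum(
        comb(trials, k) * p_null**k * (1 - p_null) ** (trials - k)
        for k in range(successes, trials + 1)
    )


alpha = 0.05  # chosen before looking at the data
p = binomial_p_value_upper(60, 100)
print(f"p = {p:.4f}; statistically significant at alpha={alpha}: {p <= alpha}")
```

At α = 0.05 this result is statistically significant; had a stricter α = 0.01 been chosen in advance, it would not be.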

Standard Error

The standard error (SE) of a statistic (usually an estimate of a parameter) is the standard deviation of its sampling distribution or an estimate of that standard deviation. If the statistic is the sample mean, it is called the standard error of the mean (SEM). The sampling distribution of a mean is generated by repeated sampling from the same population and recording the sample means obtained. This forms a distribution of different means, and this distribution has its own mean and variance. Mathematically, the variance of the sampling distribution of the mean is equal to the variance of the population divided by the sample size. Consequently, as the sample size increases, sample means cluster more closely around the population mean. Therefore, for a given sample size, the standard error of the mean equals the standard deviation divided by the square root of the sample size (σ/√n for population standard deviation σ and sample size n). ...
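
A minimal Python sketch of the relationship SEM = σ/√n. It has two parts: a helper that estimates the SEM from one sample, using the sample standard deviation in place of σ, and a small repeated-sampling simulation. The simulation uses an arbitrarily chosen population (mean 10, standard deviation 2) and samples of size 25, and checks that the standard deviation of the sample means lands near 2/√25 = 0.4. Names and numbers are illustrative assumptions.

```python
import random
from math import sqrt
from statistics import fmean, stdev


def standard_error_of_mean(sample: list[float]) -> float:
    """Estimated SEM: sample standard deviation divided by sqrt(sample size)."""
    return stdev(sample) / sqrt(len(sample))


data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
print(f"estimated SEM = {standard_error_of_mean(data):.4f}")

# Repeated sampling from one population, N(mean 10, sd 2), recording each sample mean.
rng = random.Random(0)
sigma, n = 2.0, 25
sample_means = [fmean(rng.gauss(10.0, sigma) for _ in range(n)) for _ in range(2_000)]
print(f"sd of sample means = {stdev(sample_means):.3f}, sigma/sqrt(n) = {sigma / sqrt(n):.3f}")
```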

Regression To The Mean

In statistics, regression toward the mean (also called reversion to the mean, and reversion to mediocrity) is the phenomenon that if one sample of a random variable is extreme, the next sampling of the same random variable is likely to be closer to its mean. Furthermore, when many random variables are sampled and the most extreme results are intentionally picked out, it refers to the fact that (in many cases) a second sampling of these picked-out variables will result in "less extreme" results, closer to the initial mean of all of the variables. Mathematically, the strength of this "regression" effect depends on whether all of the random variables are drawn from the same distribution or there are genuine differences in the underlying distributions for each random variable. In the first case, the "regression" effect is statistically likely to occur, but in the second case, it may occur less strongly or not at all. Regression toward the mean is thus a useful concept to ...
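
A minimal Python simulation of the first case described above, in which every random variable has the same distribution (here, as an illustrative assumption, a standard normal). It samples 10,000 variables twice, picks out the 10% with the highest first results, and compares that group's two means. The seed, sizes, and distribution are arbitrary choices for the sketch.

```python
import random
from statistics import fmean

rng = random.Random(42)
n_vars = 10_000

# Two independent samplings of the same 10,000 identically distributed variables.
first = [rng.gauss(0.0, 1.0) for _ in range(n_vars)]
second = [rng.gauss(0.0, 1.0) for _ in range(n_vars)]

# Intentionally pick out the 10% of variables with the most extreme (highest) first results.
picked = sorted(range(n_vars), key=lambda i: first[i], reverse=True)[: n_vars // 10]

first_mean_picked = fmean(first[i] for i in picked)
second_mean_picked = fmean(second[i] for i in picked)
print(f"picked group, first sampling:  {first_mean_picked:.2f}")
print(f"picked group, second sampling: {second_mean_picked:.2f}")
```

The picked group's second mean falls back near the common mean of 0. If each variable instead had its own, genuinely different mean, the picked-out group would keep part of its advantage on the second sampling.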

Cohen's H

In statistics, Cohen's h, popularized by Jacob Cohen, is a measure of distance between two proportions or probabilities. Cohen's h has several related uses:

* It can be used to describe the difference between two proportions as "small", "medium", or "large".
* It can be used to determine if the difference between two proportions is "meaningful".
* It can be used in calculating the sample size for a future study.

When measuring differences between proportions, Cohen's h can be used in conjunction with hypothesis testing. A "statistically significant" difference between two proportions is understood to mean that, given the data, it is likely that there is a difference in the population proportions. However, this difference might be too small to be meaningful; the statistically significant result does not tell us the size of the difference. Cohen's h, on the other hand, quantifies the size of the difference, allowing us to decide if the difference is meaningful. ...
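
A minimal Python sketch of Cohen's h, computed as the difference of arcsine-transformed proportions, h = 2·arcsin√p₁ - 2·arcsin√p₂ (the standard formula, which the truncated text above does not state). The labelling helper uses Cohen's conventional rule-of-thumb cut-offs of 0.2, 0.5 and 0.8 for "small", "medium" and "large". The helper names and the "below small" label are assumptions of this sketch.

```python
from math import asin, sqrt


def cohens_h(p1: float, p2: float) -> float:
    """Cohen's h: 2*asin(sqrt(p1)) - 2*asin(sqrt(p2))."""
    return 2 * asin(sqrt(p1)) - 2 * asin(sqrt(p2))


def describe_h(h: float) -> str:
    """Label |h| with Cohen's rule-of-thumb thresholds (0.2, 0.5, 0.8)."""
    size = abs(h)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "below small"


for p1, p2 in [(0.51, 0.50), (0.60, 0.50), (0.80, 0.50)]:
    h = cohens_h(p1, p2)
    print(f"p1={p1:.2f}, p2={p2:.2f}: h = {h:.3f} ({describe_h(h)})")
```

A 51% versus 50% comparison gives h ≈ 0.02, far below the "small" threshold however large the sample, while 80% versus 50% gives h ≈ 0.64.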
