
Connectionism

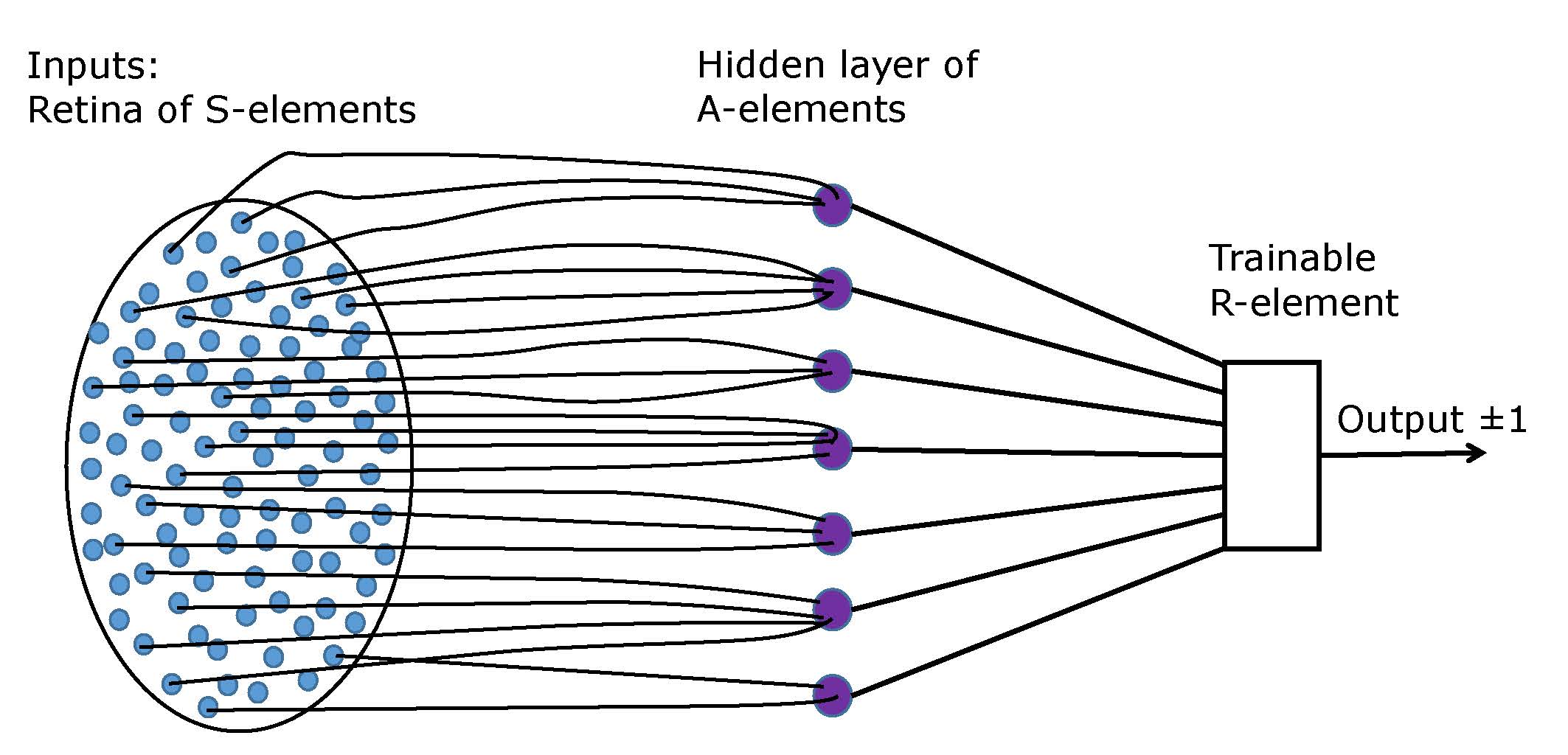

Connectionism is an approach to the study of human mental processes and cognition that uses mathematical models known as connectionist networks or artificial neural networks. Connectionism has had many "waves" since its beginnings. The first wave began in 1943 with Warren Sturgis McCulloch and Walter Pitts, who sought to understand neural circuitry through a formal, mathematical approach, and continued with Frank Rosenblatt, who published the 1958 paper "The Perceptron: A Probabilistic Model For Information Storage and Organization in the Brain" in Psychological Review while working at the Cornell Aeronautical Laboratory. The first wave ended with the 1969 book on the limitations of the original perceptron idea, written by Marvin Minsky and Seymour Papert, which helped discourage major US funding agencies from investing in connectionist research. With a few noteworthy exceptions, most connectionist research entered a period of inactivity until the mid-1980 ...
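A minimal sketch of the perceptron idea, and of the kind of limitation at issue, is shown below. It uses the textbook form of the perceptron learning rule (step activation, zero initial weights, a bias term and a learning rate of 1, all chosen for this illustration rather than taken from Rosenblatt's paper). The XOR task is a commonly cited example of what a single-layer perceptron cannot learn: no single straight line separates its positive and negative cases, whereas AND is separable.

```python
import numpy as np


def train_perceptron(X, y, epochs=20, lr=1.0):
    """Textbook perceptron rule with a step activation.

    X has shape (n_samples, n_features); y holds targets in {0, 1}.
    Returns the learned weight vector and bias.
    """
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        for xi, target in zip(X, y):
            pred = 1 if xi @ w + b > 0 else 0
            # Weights change only when the prediction is wrong.
            error = target - pred
            w += lr * error * xi
            b += lr * error
    return w, b


def predict(X, w, b):
    # Step activation: output 1 where the weighted sum is positive.
    return (X @ w + b > 0).astype(int)


X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y_and = np.array([0, 0, 0, 1])
y_xor = np.array([0, 1, 1, 0])

w_and, b_and = train_perceptron(X, y_and)
print(predict(X, w_and, b_and))  # → [0 0 0 1]; AND is linearly separable

w_xor, b_xor = train_perceptron(X, y_xor)
# XOR is not linearly separable, so at least one of the four outputs stays wrong.
print(predict(X, w_xor, b_xor))
```

Adding a hidden layer of units removes this particular limitation, which is one reason multi-layer networks became central to later connectionist work.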

Artificial Neural Network

In machine learning, a neural network (also artificial neural network or neural net, abbreviated ANN or NN) is a computational model inspired by the structure and functions of biological neural networks. A neural network consists of connected units or nodes called artificial neurons, which loosely model the neurons in the brain. Artificial neuron models that mimic biological neurons more closely have also been investigated recently and shown to significantly improve performance. The neurons are connected by edges, which model the synapses in the brain. Each artificial neuron receives signals from connected neurons, processes them, and sends a signal to other connected neurons. The "signal" is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs, called the activation function. The strength of the signal at each connection is determined by a weight, which adjusts during the learning process. Typically, ne ...
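The computation of a single artificial neuron described above can be sketched as follows. This is an illustration under stated assumptions: it adds a bias term and uses ReLU as the default activation (with tanh for the output unit), neither of which the text specifies, and the two-layer network's weights are hypothetical values picked by hand rather than learned.

```python
import math


def relu(z):
    return max(0.0, z)


def neuron(inputs, weights, bias, activation=relu):
    """One artificial neuron: activation(sum_i w_i * x_i + bias)."""
    # Each weight scales the signal arriving over one edge.
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


def layer(inputs, weight_rows, biases, activation=relu):
    """A layer of neurons that all read the same input signals."""
    return [neuron(inputs, row, b, activation) for row, b in zip(weight_rows, biases)]


# Hypothetical 2-input, 2-hidden, 1-output network with hand-picked weights.
x = [1.0, 2.0]
hidden = layer(x, [[0.5, -0.25], [-1.0, 0.75]], [0.1, 0.0])
output = neuron(hidden, [1.0, 2.0], -0.5, activation=math.tanh)
print(hidden, output)
```

Learning would then consist of adjusting the numbers in the weight lists; that step is not shown here.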

Frank Rosenblatt

Frank Rosenblatt (July 11, 1928 – July 11, 1971) was an American psychologist notable in the field of artificial intelligence. He is sometimes called the father of deep learning for his pioneering work on artificial neural networks. Rosenblatt was born into a Jewish family in New Rochelle, New York, the son of Dr. Frank and Katherine Rosenblatt. After graduating from The Bronx High School of Science in 1946, he attended Cornell University, where he obtained his Bachelor of Arts (A.B.) in 1950 and his Doctor of Philosophy (Ph.D.) in 1956. For his PhD thesis he built a custom-made computer, the Electronic Profile Analyzing Computer (EPAC), to perform multidimensional analysis for psychometrics. He used it between 1951 and 1953 to analyze the thesis data, which were collected from a paid, 600-item survey of more than 200 Cornell undergraduates. The total computational cost was 2.5 million arithmetic operations, necessitating the use of ...

GOFAI

In the philosophy of artificial intelligence, GOFAI ("Good old fashioned artificial intelligence") is classical symbolic AI, as opposed to other approaches, such as neural networks, situated robotics, narrow symbolic AI or neuro-symbolic AI. The term was coined by philosopher John Haugeland in his 1985 book Artificial Intelligence: The Very Idea. Haugeland coined the term to address two questions:

* Can GOFAI produce human-level artificial intelligence in a machine?
* Is GOFAI the primary method that brains use to display intelligence?

AI founder Herbert A. Simon speculated in 1963 that the answers to both of these questions were "yes". His evidence was the performance of programs he had co-written, such as Logic Theorist and the General Problem Solver, and his psychological research on human problem solving. AI research in the 1950s and 1960s had an enormous influence on intellectual history: it inspired the cognitive revolution, led to the founding of the academic field of ...

University of Sussex

The University of Sussex is a public research university located in Falmer, East Sussex, England. It lies mostly within the city boundaries of Brighton and Hove. Its large campus site is surrounded by the South Downs National Park and provides convenient access to central Brighton. The university received its royal charter in August 1961, the first of the plate glass university generation. More than a third of its students are enrolled in postgraduate programmes, and approximately a third of staff are from outside the United Kingdom. Sussex has a diverse community of nearly 20,000 students, around one in three of them international students, and over 1,000 academics, representing over 140 different nationalities. The institution's annual income for 2023–24 was £379.6 million, of which £39.9 million was from research grants and contracts, with an expenditure of £291.3 million. Sussex counts five Nobel Prize winners, 1 ...

Logistic Function

A logistic function or logistic curve is a common S-shaped curve (sigmoid curve) with the equation f(x) = \frac{L}{1 + e^{-k(x - x_0)}}, where L is the carrying capacity (the supremum of the function's values), k is the logistic growth rate (the steepness of the curve), and x_0 is the x value of the curve's midpoint. The logistic function's domain is the real numbers; the limit as x \to -\infty is 0, and the limit as x \to +\infty is L. The exponential function with negated argument (e^{-x}) is used to define the standard logistic function, where L = 1, k = 1, x_0 = 0, which has the equation f(x) = \frac{1}{1 + e^{-x}} and is sometimes simply called the sigmoid. It is also sometimes called the expit, being the inverse function of the logit. The logistic function finds applications in a range of fields, including biology (especially ecology), biomathematics, chemistry, demography, economics, geoscience, mathematical psychology, probability, sociology, political science, linguistics, statistics, and artificial neural networks. There are various generalizations, depending on the field. The logistic function was introduced in a series of three papers by Pier ...
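The general logistic function and its inverse can be evaluated directly from the formulas above. The sketch below is one possible implementation; the branch on the sign of the exponent is a common numerical-stability choice made here for illustration (it keeps math.exp from overflowing for large |x|), not part of the definition itself.

```python
import math


def logistic(x, L=1.0, k=1.0, x0=0.0):
    """General logistic f(x) = L / (1 + exp(-k * (x - x0)))."""
    z = k * (x - x0)
    # Choose the algebraically equivalent form whose exp() argument is <= 0,
    # so large |z| cannot overflow.
    if z >= 0:
        return L / (1.0 + math.exp(-z))
    e = math.exp(z)
    return L * e / (1.0 + e)


def logit(p):
    """Inverse of the standard logistic function, defined for 0 < p < 1."""
    return math.log(p / (1.0 - p))


print(logistic(0.0))                        # → 0.5, midpoint of the standard sigmoid
print(logistic(2.0, L=4.0, k=3.0, x0=2.0))  # → 2.0, i.e. L / 2 at x = x0
print(logit(logistic(0.7)))                 # recovers 0.7 up to rounding
```

With the defaults L = 1, k = 1, x0 = 0 the function reduces to the standard sigmoid 1 / (1 + e^{-x}).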

Boltzmann Machine

A Boltzmann machine (also called Sherrington–Kirkpatrick model with external field or stochastic Ising model), named after Ludwig Boltzmann, is a spin-glass model with an external field, i.e., a Sherrington–Kirkpatrick model, that is a stochastic Ising model. It is a statistical physics technique applied in the context of cognitive science. It is also classified as a Markov random field. Boltzmann machines are theoretically intriguing because of the locality and Hebbian nature of their training algorithm (being trained by Hebb's rule), and because of their parallelism and the resemblance of their dynamics to simple physical processes. Boltzmann machines with unconstrained connectivity have not been proven useful for practical problems in machine learning or inference, but if the connectivity is properly constrained, the learning can be made efficient enough to be useful for practical problems. Th ...
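The stochastic dynamics mentioned above can be sketched with a tiny Boltzmann machine. The assumptions are chosen for this illustration: binary units s_i in {0, 1}, a symmetric weight matrix W with zero diagonal, biases theta, temperature T = 1, and hypothetical two-unit weights. Each unit is resampled from its conditional probability (Gibbs sampling), and the visit frequencies are compared with the exact Boltzmann distribution p(s) ∝ exp(-E(s)/T). Only sampling is shown; the Hebbian-style learning rule is not.

```python
import itertools

import numpy as np


def energy(s, W, theta):
    """E(s) = -sum_{i<j} w_ij s_i s_j - sum_i theta_i s_i (W symmetric, zero diagonal)."""
    return -0.5 * s @ W @ s - theta @ s


def gibbs_sweep(s, W, theta, rng, T=1.0):
    """Resample each unit once, in order, from its conditional distribution."""
    s = s.copy()
    for i in range(len(s)):
        # Local field = E(s_i = 0) - E(s_i = 1), the energy drop from turning unit i on.
        field = W[i] @ s + theta[i]
        p_on = 1.0 / (1.0 + np.exp(-field / T))
        s[i] = 1.0 if rng.random() < p_on else 0.0
    return s


def exact_distribution(W, theta, T=1.0):
    """Enumerate every state and normalise exp(-E/T); feasible only for tiny networks."""
    states = [np.array(bits, dtype=float) for bits in itertools.product([0, 1], repeat=len(theta))]
    weights = np.array([np.exp(-energy(s, W, theta) / T) for s in states])
    return states, weights / weights.sum()


W = np.array([[0.0, 2.0], [2.0, 0.0]])  # hypothetical symmetric weights, zero diagonal
theta = np.array([-1.0, -1.0])          # hypothetical biases
rng = np.random.default_rng(0)

s = np.zeros(2)
counts = {}
n_sweeps = 20000
for _ in range(n_sweeps):
    s = gibbs_sweep(s, W, theta, rng)
    key = tuple(int(v) for v in s)
    counts[key] = counts.get(key, 0) + 1

states, probs = exact_distribution(W, theta)
for st, p in zip(states, probs):
    key = tuple(int(v) for v in st)
    print(key, "exact", round(p, 3), "sampled", round(counts.get(key, 0) / n_sweeps, 3))
```

The update uses only each unit's weighted inputs from its neighbours, which is the locality the text refers to.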

Hebbian Theory

Hebbian theory is a neuropsychological theory claiming that an increase in synaptic efficacy arises from a presynaptic cell's repeated and persistent stimulation of a postsynaptic cell. It is an attempt to explain synaptic plasticity, the adaptation of neurons during the learning process. Hebbian theory was introduced by Donald Hebb in his 1949 book The Organization of Behavior. The theory is also called Hebb's rule, Hebb's postulate, and cell assembly theory. Hebb states it as follows: "Let us assume that the persistence or repetition of a reverberatory activity (or 'trace') tends to induce lasting cellular changes that add to its stability. ... When an axon of cell A is near enough to excite a cell B and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells such that A's efficiency, as one of the cells firing B, is increased." The theory is often summarized as "Neurons that fire togethe ...
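In its simplest computational form, Hebb's postulate becomes a weight update proportional to the product of presynaptic and postsynaptic activity, Δw_i = η · x_i · y. The sketch below assumes binary activities, a learning rate η = 0.1, and a hypothetical trial sequence in which presynaptic cell A is active whenever postsynaptic cell B fires, while cell C is active on only some of B's firings.

```python
def hebbian_update(w, pre, post, eta=0.1):
    """Plain Hebb rule: each weight grows by eta * pre_i * post."""
    # A weight changes only when its presynaptic cell and the postsynaptic
    # cell are active together (pre_i * post != 0).
    return [wi + eta * xi * post for wi, xi in zip(w, pre)]


# Hypothetical activity of two presynaptic cells (A, C) and one postsynaptic
# cell B over four repeating trials: A is active whenever B fires, while C is
# active on one trial where B fires and on two where B is silent.
trials = [([1, 0], 1), ([0, 1], 0), ([1, 1], 1), ([0, 1], 0)] * 10

w = [0.0, 0.0]
for pre, post in trials:
    w = hebbian_update(w, pre, post)

print(w)  # A's weight ends up about twice C's
```

This plain rule can only increase weights and grows without bound; practical variants add decay or normalisation (Oja's rule is one well-known example).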

Learning Rule

Learning is the process of acquiring new understanding, knowledge, behaviors, skills, values, attitudes, and preferences. The ability to learn is possessed by humans, non-human animals, and some machines; there is also evidence for some kind of learning in certain plants. Some learning is immediate, induced by a single event (e.g. being burned by a hot stove), but much skill and knowledge accumulate from repeated experiences. The changes induced by learning often last a lifetime, and it is hard to distinguish learned material that seems to be "lost" from that which cannot be retrieved. Human learning starts at birth (it might even start before) and continues until death as a consequence of ongoing interactions between people and their environment. The nature and processes involved in learning are studied in many established fields (including educational psychology, neuropsychology, experimental psychology, cognitive sciences, and pedagogy), as well as emerging fields of knowl ...

Large Language Model

A large language model (LLM) is a language model trained with self-supervised machine learning on a vast amount of text, designed for natural language processing tasks, especially language generation. The largest and most capable LLMs are generative pretrained transformers (GPTs), which are widely used in generative chatbots such as ChatGPT or Gemini. LLMs can be fine-tuned for specific tasks or guided by prompt engineering. These models acquire predictive power regarding syntax, semantics, and ontologies inherent in human language corpora, but they also inherit inaccuracies and biases present in the data they are trained on. Before the emergence of transformer-based models in 2017, some language models were considered large relative to the computational and data constraints of their time. In the early 1990s, IBM's statistical models pioneered word alignment techniques for machine translation, laying the groundwork for corpus-based language modeling. A sm ...
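What makes the training "self-supervised" is that the targets come from the text itself. For GPT-style models the objective is next-token prediction, so a training example is simply a context followed by the token that comes next. The sketch below only builds such (context, target) pairs; the whitespace tokenizer and the context size of 3 are simplifying assumptions (real LLMs typically use learned subword tokenizers and far longer contexts), and no model is trained here.

```python
def next_token_pairs(tokens, context_size):
    """Turn a token sequence into (context, next-token) training pairs.

    The targets are read off the text itself, so no human labels are needed.
    """
    pairs = []
    for i in range(1, len(tokens)):
        # Keep at most context_size tokens immediately before position i.
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs


# Hypothetical whitespace tokenization of a toy sentence.
tokens = "the cat sat on the mat".split()
pairs = next_token_pairs(tokens, context_size=3)
for context, target in pairs:
    print(context, "->", target)
```

A transformer is then trained to assign high probability to each target given its context, over billions of such pairs.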

Vector (mathematics)

In mathematics and physics, vector is a term that refers to quantities that cannot be expressed by a single number (a scalar), or to elements of some vector spaces. Historically, vectors were introduced in geometry and physics (typically in mechanics) for quantities that have both a magnitude and a direction, such as displacements, forces and velocity. Such quantities are represented by geometric vectors in the same way as distances, masses and time are represented by real numbers. The term vector is also used, in some contexts, for tuples, which are finite sequences (of numbers or other objects) of a fixed length. Both geometric vectors and tuples can be added and scaled, and these vector operations led to the concept of a vector space, which is a set equipped with a vector addition and a scalar multiplication that satisfy some axioms generalizing the main properties of operations on the above sorts of vectors. A vector space formed by geometric vectors is called a Euc ...
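The two vector operations described above can be illustrated on tuples of numbers. This is a minimal sketch; the function names and the sample displacements are hypothetical, and the Euclidean length is used as the "magnitude" of a geometric vector.

```python
import math


def add(u, v):
    """Component-wise vector addition of two equal-length tuples."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same number of components")
    return tuple(a + b for a, b in zip(u, v))


def scale(c, v):
    """Scalar multiplication: multiply every component by the number c."""
    return tuple(c * a for a in v)


def magnitude(v):
    """Euclidean length, the 'magnitude' of a geometric vector."""
    return math.sqrt(sum(a * a for a in v))


u = (3, 4)   # e.g. a displacement of 3 units east and 4 units north
v = (1, -2)
print(add(u, v))        # → (4, 2)
print(scale(2, u))      # → (6, 8)
print(magnitude(u))     # → 5.0
# One of the vector-space axioms (distributivity): c(u + v) == cu + cv.
print(scale(3, add(u, v)) == add(scale(3, u), scale(3, v)))  # → True
```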

Theory of Mind

In psychology and philosophy, theory of mind (often abbreviated to ToM) refers to the capacity to understand other individuals by ascribing mental states to them. A theory of mind includes the understanding that others' beliefs, desires, intentions, emotions, and thoughts may be different from one's own. Possessing a functional theory of mind is crucial for success in everyday human social interactions. People utilize a theory of mind when analyzing, judging, and inferring other people's behaviors. Theory of mind was first conceptualized by researchers evaluating the presence of theory of mind in animals. Today, theory of mind research also investigates factors affecting theory of mind in humans, such as whether drug and alcohol consumption, language development, cognitive delays, age, and culture can affect a person's capacity to display theory of mind. It has been proposed that deficits in theory of mind may occur in people with autism, anorexia nervosa, schi ...