
Coding Gain

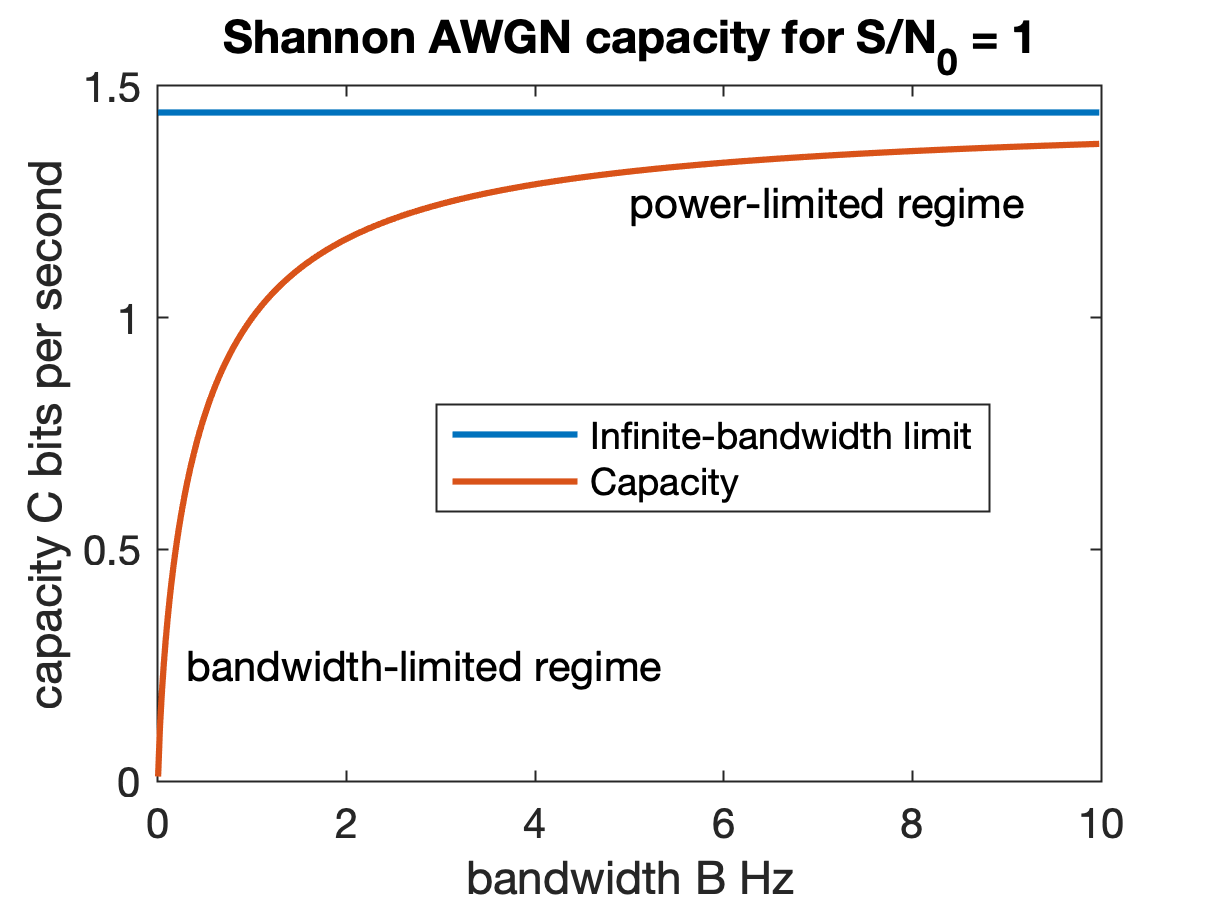

In coding theory, telecommunications engineering and related engineering fields, coding gain is the difference between the signal-to-noise ratio (SNR) levels that an uncoded system and a coded system require to reach the same bit error rate (BER) when an error correcting code (ECC) is used.

Example: If an uncoded BPSK system in an AWGN environment has a bit error rate (BER) of 10^{-2} at an SNR of 4 dB, and the corresponding coded (e.g., BCH) system has the same BER at an SNR of 2.5 dB, then the coding gain is 4 dB − 2.5 dB = 1.5 dB, due to the code used (in this case BCH).

Power-limited regime: In the power-limited regime (where the nominal spectral efficiency \rho \le 2 b/2D or b/s/Hz, i.e. the domain of binary signaling), the effective coding gain \gamma_\mathrm{eff}(A) of a signal set A at a given target error probability per bit P_b(E) is defined as the difference in dB between the E_b/N_0 required to achieve the target P_b(E) with A an ...
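The arithmetic of the BPSK/BCH example can be sketched in Python. The function names `coding_gain_db` and `bpsk_ber` are illustrative, and the BER expression assumes that the quoted SNR means E_b/N_0 for coherent BPSK over AWGN, which the text does not state explicitly.

```python
import math

def coding_gain_db(snr_uncoded_db: float, snr_coded_db: float) -> float:
    """Coding gain in dB: SNR saved by the coded system at equal BER."""
    return snr_uncoded_db - snr_coded_db

def bpsk_ber(ebn0_db: float) -> float:
    """Textbook BER of uncoded coherent BPSK in AWGN.

    Assumption: the SNR is interpreted as E_b/N_0.
    """
    ebn0 = 10 ** (ebn0_db / 10)              # dB -> linear ratio
    return 0.5 * math.erfc(math.sqrt(ebn0))

gain = coding_gain_db(4.0, 2.5)              # SNR values from the example
print(f"coding gain = {gain:.1f} dB")
print(f"uncoded BPSK BER at 4 dB ~ {bpsk_ber(4.0):.3g}")
```

Under that assumption the computed uncoded BER at 4 dB is on the order of 10^{-2}, which is consistent with the figure quoted in the example.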

Coding Theory

Coding theory is the study of the properties of codes and their respective fitness for specific applications. Codes are used for data compression, cryptography, error detection and correction, data transmission and data storage. Codes are studied by various scientific disciplines (such as information theory, electrical engineering, mathematics, linguistics, and computer science) for the purpose of designing efficient and reliable data transmission methods. This typically involves the removal of redundancy and the correction or detection of errors in the transmitted data.

There are four types of coding:

1. Data compression (or source coding)
2. Error control (or channel coding)
3. Cryptographic coding
4. Line coding

Data compression attempts to remove unwanted redundancy from the data from a source in order to transmit it more efficiently. For example, DEFLATE data compression makes files small ...
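As a small illustration of source coding, the sketch below compresses a deliberately redundant byte string with Python's standard `zlib` module, which produces a zlib-wrapped DEFLATE stream. The input data is made up for the example.

```python
import zlib

data = b"coding theory " * 200            # highly redundant input (illustrative)
compressed = zlib.compress(data)          # zlib container around a DEFLATE stream
restored = zlib.decompress(compressed)

print(len(data), "->", len(compressed), "bytes")
print("lossless:", restored == data)
```

The more redundancy the input contains, the larger the size reduction tends to be; data that is already close to random compresses poorly.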

Pulse-amplitude Modulation

Pulse-amplitude modulation (PAM) is a form of signal modulation in which the message information is encoded in the amplitude of a pulse train interrupting the carrier frequency. Demodulation is performed by detecting the amplitude level of the carrier at every single period.

Types: There are two types of pulse-amplitude modulation:

* In single polarity PAM, a suitable fixed DC bias is added to the signal to ensure that all the pulses are positive.
* In double polarity PAM, the pulses are both positive and negative.

Pulse-amplitude modulation is widely used in modulating signal transmission of digital data, with non-baseband applications having been largely replaced by pulse-code modulation, and, more recently, by pulse-position modulation. The number of possible pulse amplitudes in analog PAM is theoretically infinite. Digital PAM reduces the number of pulse amplitudes to some natural number.

Uses (Ethernet): Some versions of the Ethernet communication standard are ...
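The difference between the two polarities in digital PAM can be sketched as follows. The equally spaced odd-integer levels and the choice of bias are assumptions made for illustration; the text does not prescribe a particular level spacing.

```python
def pam_levels(m: int, double_polarity: bool = True) -> list[int]:
    """Return m equally spaced pulse amplitudes.

    Double polarity: symmetric levels -(m-1), ..., -1, +1, ..., +(m-1).
    Single polarity: the same levels shifted by a fixed DC bias so that
    every amplitude is positive (bias value is an illustrative choice).
    """
    if m < 2:
        raise ValueError("need at least two amplitude levels")
    levels = [2 * i - (m - 1) for i in range(m)]
    if not double_polarity:
        bias = m                              # smallest integer shift that makes all levels > 0
        levels = [a + bias for a in levels]
    return levels

def pam_modulate(symbols: list[int], m: int, double_polarity: bool = True) -> list[int]:
    """Map symbol indices 0..m-1 to pulse amplitudes."""
    levels = pam_levels(m, double_polarity)
    return [levels[s] for s in symbols]

print(pam_levels(4))                          # double polarity
print(pam_levels(4, double_polarity=False))   # single polarity
print(pam_modulate([0, 3, 1, 2], 4))
```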

Eb/N0

In digital communication or data transmission, E_b/N_0 (energy per bit to noise power spectral density ratio) is a normalized signal-to-noise ratio (SNR) measure, also known as the "SNR per bit". It is especially useful when comparing the bit error rate (BER) performance of different digital modulation schemes without taking bandwidth into account. As the description implies, E_b is the signal energy associated with each user data bit; it is equal to the signal power divided by the user bit rate (not the channel symbol rate). If signal power is in watts and bit rate is in bits per second, E_b is in units of joules (watt-seconds). N_0 is the noise spectral density, the noise power in a 1 Hz bandwidth, measured in watts per hertz or joules. These are the same units as E_b, so the ratio E_b/N_0 is dimensionless; it is frequently expressed in decibels. E_b/N_0 directly indicates the power efficiency of the system without regard to modulation type, error correction coding or ...
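The definition translates directly into a short calculation. The function name and the numeric values below are hypothetical and chosen only to give a round result.

```python
import math

def ebn0_db(signal_power_w: float, bit_rate_bps: float, n0_w_per_hz: float) -> float:
    """E_b/N_0 in dB from signal power, user bit rate and noise spectral density."""
    eb_joules = signal_power_w / bit_rate_bps      # energy per user data bit
    return 10 * math.log10(eb_joules / n0_w_per_hz)

# Hypothetical link: 1 mW received power, 1 Mbit/s user rate, N_0 = 1e-10 W/Hz
value = ebn0_db(1e-3, 1e6, 1e-10)
print(f"E_b/N_0 = {value:.2f} dB")
```

Here E_b is 1e-9 J, so the ratio is 10 in linear terms, i.e. 10 dB.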

Channel Capacity

Channel capacity, in electrical engineering, computer science, and information theory, is the theoretical maximum rate at which information can be reliably transmitted over a communication channel. Following the terms of the noisy-channel coding theorem, the channel capacity of a given channel is the highest information rate (in units of information per unit time) that can be achieved with arbitrarily small error probability. Information theory, developed by Claude E. Shannon in 1948, defines the notion of channel capacity and provides a mathematical model by which it may be computed. The key result states that the capacity of the channel, as defined above, is given by the maximum of the mutual information between the input and output of the channel, where the maximization is with respect to the input distribution. The notion of channel capacity has been central to the development of modern wireline and wireless communication system ...
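The "maximum of the mutual information over the input distribution" can be made concrete for a small discrete channel. The sketch below uses a binary symmetric channel with crossover probability 0.1 as an assumed example and a simple grid search over input distributions, then compares the result with the known closed form 1 − H_2(p). All function names are illustrative.

```python
import math

def mutual_information(px, channel):
    """I(X;Y) in bits for input distribution px and transition matrix channel[x][y]."""
    ny = len(channel[0])
    py = [sum(px[x] * channel[x][y] for x in range(len(px))) for y in range(ny)]
    total = 0.0
    for x, p_x in enumerate(px):
        for y in range(ny):
            joint = p_x * channel[x][y]
            if joint > 0:                      # skip zero-probability terms
                total += joint * math.log2(channel[x][y] / py[y])
    return total

def binary_entropy(p):
    """H_2(p) in bits."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

p = 0.1                                        # assumed crossover probability
bsc = [[1 - p, p], [p, 1 - p]]

# Grid search over binary input distributions (q, 1 - q)
capacity = max(mutual_information([i / 1000, 1 - i / 1000], bsc) for i in range(1001))
print(f"grid-search capacity = {capacity:.6f} bit per channel use")
print(f"closed form 1 - H2(p) = {1 - binary_entropy(p):.6f}")
```

A grid search is only practical for tiny input alphabets; it is used here because it mirrors the definition directly.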

Reed–Muller Code

Reed–Muller codes are error-correcting codes that are used in wireless communications applications, particularly in deep-space communication. Moreover, the proposed 5G standard relies on the closely related polar codes for error correction in the control channel. Due to their favorable theoretical and mathematical properties, Reed–Muller codes have also been extensively studied in theoretical computer science. Reed–Muller codes generalize the Reed–Solomon codes and the Walsh–Hadamard code. Reed–Muller codes are linear block codes that are locally testable, locally decodable, and list decodable. These properties make them particularly useful in the design of probabilistically checkable proofs. Traditional Reed–Muller codes are binary codes, which means that messages and codewords are binary strings. When r and m are integers with 0 ≤ r ≤ m, the Reed–Muller code with parameters r and m is denoted as RM(r, m). When ...

Linear Block Code

In coding theory, block codes are a large and important family of error-correcting codes that encode data in blocks. There is a vast number of examples for block codes, many of which have a wide range of practical applications. The abstract definition of block codes is conceptually useful because it allows coding theorists, mathematicians, and computer scientists to study the limitations of all block codes in a unified way. Such limitations often take the form of bounds that relate different parameters of the block code to each other, such as its rate and its ability to detect and correct errors. Examples of block codes are Reed–Solomon codes, Hamming codes, Hadamard codes, expander codes, Golay codes, Reed–Muller codes and polar codes. These examples also belong to the class of linear codes, and hence they are called linear block codes. More particularly, these codes are known as algebraic block codes, or cyclic block codes, because they can be generated using ...
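The parameters mentioned above (rate, and the ability to detect and correct errors) can be computed by brute force for a small linear block code. The sketch below uses one common systematic generator matrix for the Hamming (7,4) code; the matrix and the helper name `encode` are illustrative choices, not taken from the text.

```python
from itertools import product

# One systematic generator matrix [I_4 | P] for the Hamming (7,4) code
G = [
    [1, 0, 0, 0, 1, 1, 0],
    [0, 1, 0, 0, 1, 0, 1],
    [0, 0, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]

def encode(message):
    """Multiply a 4-bit message by G over GF(2)."""
    return [sum(m * g for m, g in zip(message, column)) % 2 for column in zip(*G)]

codewords = [encode(msg) for msg in product([0, 1], repeat=len(G))]

k, n = len(G), len(G[0])
rate = k / n
# For a linear code the minimum distance equals the minimum nonzero codeword weight
d_min = min(sum(c) for c in codewords if any(c))
correctable = (d_min - 1) // 2                 # errors guaranteed correctable
detectable = d_min - 1                         # errors guaranteed detectable

print(f"rate = {k}/{n}, d_min = {d_min}, corrects {correctable}, detects {detectable}")
```

Exhaustive enumeration grows as 2^k, so it only serves as a check for very short codes.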

Error Function

In mathematics, the error function (also called the Gauss error function), often denoted by erf, is a function \operatorname{erf}: \mathbb{C} \to \mathbb{C} defined as: \operatorname{erf} z = \frac{2}{\sqrt{\pi}}\int_0^z e^{-t^2}\,\mathrm dt. The integral here is a complex contour integral which is path-independent because \exp(-t^2) is holomorphic on the whole complex plane \mathbb{C}. In many applications, the function argument is a real number, in which case the function value is also real. In some old texts, the error function is defined without the factor of \frac{2}{\sqrt{\pi}}. This nonelementary integral is a sigmoid function that occurs often in probability, statistics, and partial differential equations. In statistics, for non-negative real values of x, the error function has the following interpretation: for a real random variable Y that is normally distributed with mean 0 and standard deviation \frac{1}{\sqrt{2}}, \operatorname{erf} x is the probability that Y falls in the range [-x, x]. ...
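For real arguments, the integral definition can be checked numerically against the standard library. The sketch below integrates e^{-t^2} with composite Simpson's rule (an arbitrary choice of quadrature) and compares the result with `math.erf`; the helper name `erf_simpson` is illustrative.

```python
import math

def erf_simpson(z: float, n: int = 1000) -> float:
    """Approximate erf(z) for real z by Simpson's rule on [0, z] with n subintervals."""
    if n % 2:
        raise ValueError("n must be even for Simpson's rule")
    h = z / n
    f = lambda t: math.exp(-t * t)
    total = f(0.0) + f(z)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(i * h)   # weights 4, 2, 4, ..., 4
    return 2 / math.sqrt(math.pi) * total * h / 3

for z in (0.5, 1.0, 2.0):
    print(z, erf_simpson(z), math.erf(z))
```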

Union Bound

In probability theory, Boole's inequality, also known as the union bound, says that for any finite or countable set of events, the probability that at least one of the events happens is no greater than the sum of the probabilities of the individual events. This inequality provides an upper bound on the probability of occurrence of at least one of a countable number of events in terms of the individual probabilities of the events. Boole's inequality is named for its discoverer, George Boole. Formally, for a countable set of events A_1, A_2, A_3, ..., we have \mathbb{P}\left(\bigcup_{i=1}^{\infty} A_i \right) \le \sum_{i=1}^{\infty} \mathbb{P}(A_i). In measure-theoretic terms, Boole's inequality follows from the fact that a measure (and certainly any probability measure) is σ-sub-additive. Thus Boole's inequality holds not only for probability measures \mathbb{P}, but more generally when \mathbb{P} is replaced by any finite measure.

Proof (using induction): Boole's inequality may be proved for finite collections of n e ...
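The inequality can be checked exactly on a small finite example. The sketch below uses a fair six-sided die and three made-up events, two of which overlap, so the bound is strict.

```python
from fractions import Fraction

sample_space = frozenset(range(1, 7))             # fair six-sided die
events = [frozenset({1, 2}), frozenset({2, 3}), frozenset({6})]   # illustrative events

def prob(event):
    """Probability of an event under the uniform distribution on the die."""
    return Fraction(len(event & sample_space), len(sample_space))

p_union = prob(frozenset().union(*events))        # P(A_1 ∪ A_2 ∪ A_3)
bound = sum(prob(a) for a in events)              # P(A_1) + P(A_2) + P(A_3)

print(f"P(union) = {p_union}, union bound = {bound}")
```

The outcome 2 is counted twice in the sum but only once in the union, which is why the bound exceeds the true probability here; for pairwise disjoint events the two sides are equal.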

Quadrature Amplitude Modulation

Quadrature amplitude modulation (QAM) is the name of a family of digital modulation methods and a related family of analog modulation methods widely used in modern telecommunications to transmit information. It conveys two analog message signals, or two digital bit streams, by changing (modulating) the amplitudes of two carrier waves, using the amplitude-shift keying (ASK) digital modulation scheme or amplitude modulation (AM) analog modulation scheme. The two carrier waves are of the same frequency and are out of phase with each other by 90°, a condition known as orthogonality or quadrature. The transmitted signal is created by adding the two carrier waves together. At the receiver, the two waves can be coherently separated (demodulated) because of their orthogonality. Another key property is that the modulations are low-frequency/low-bandwidth waveforms compared to the carrier frequency, which is known as the narrowband ...
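The separation of the two carriers by orthogonality can be sketched for a single symbol with constant amplitudes. The sign convention s = I cos − Q sin, the sample count and the number of carrier cycles are assumptions for illustration, and the channel is taken to be noiseless with perfect carrier synchronization.

```python
import math

def qam_modulate(i_amp: float, q_amp: float, cycles: int = 5, n: int = 200) -> list[float]:
    """Sum of two carriers 90 degrees apart, sampled over `cycles` full periods."""
    return [
        i_amp * math.cos(2 * math.pi * cycles * k / n)
        - q_amp * math.sin(2 * math.pi * cycles * k / n)
        for k in range(n)
    ]

def qam_demodulate(samples: list[float], cycles: int = 5) -> tuple[float, float]:
    """Coherent demodulation: correlate with each carrier over whole periods."""
    n = len(samples)
    i_hat = 2 / n * sum(s * math.cos(2 * math.pi * cycles * k / n) for k, s in enumerate(samples))
    q_hat = -2 / n * sum(s * math.sin(2 * math.pi * cycles * k / n) for k, s in enumerate(samples))
    return i_hat, q_hat

i_hat, q_hat = qam_demodulate(qam_modulate(3.0, -1.0))
print(f"recovered I = {i_hat:.6f}, Q = {q_hat:.6f}")
```

Correlating over an integer number of carrier periods makes the cross term between cosine and sine vanish, so each amplitude is recovered independently of the other.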

Spectral Efficiency

Spectral efficiency, spectrum efficiency or bandwidth efficiency refers to the information rate that can be transmitted over a given bandwidth in a specific communication system. It is a measure of how efficiently a limited frequency spectrum is utilized by the physical layer protocol, and sometimes by the medium access control (the channel access protocol) (Guowang Miao, Jens Zander, Ki Won Sung, and Ben Slimane, Fundamentals of Mobile Data Networks, Cambridge University Press, 2016).

Link spectral efficiency: The link spectral efficiency of a digital communication system is measured in bit/s/Hz, or, less frequently but unambiguously, in (bit/s)/Hz. It is the net bit rate (useful information rate excluding error-correcting codes) or maximum throughput divided by the bandwidth in hertz of a communication channel or a data link. Alternatively, the spectral efficiency may be measured in bit/symbol, which is equivalent to bits per channel use (bpcu), i ...
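The link spectral efficiency is a single division, sketched below with hypothetical figures (a 1 Mbit/s net bit rate in a 250 kHz channel). The function name is illustrative.

```python
def link_spectral_efficiency(net_bit_rate_bps: float, bandwidth_hz: float) -> float:
    """Net bit rate divided by channel bandwidth, in (bit/s)/Hz."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    return net_bit_rate_bps / bandwidth_hz

eta = link_spectral_efficiency(1_000_000, 250_000)   # hypothetical link
print(f"link spectral efficiency = {eta} (bit/s)/Hz")
```

Note that the numerator is the net bit rate, so redundancy added by error-correcting codes lowers the figure even though the raw symbol rate is unchanged.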

Telecommunications Engineering

Telecommunications engineering is a subfield of electronics engineering which seeks to design and devise systems of communication at a distance. The work ranges from basic circuit design to strategic mass developments. A telecommunication engineer is responsible for designing and overseeing the installation of telecommunications equipment and facilities, such as complex electronic switching systems and other plain old telephone service facilities, optical fiber cabling, IP networks, and microwave transmission systems. Telecommunications engineering also overlaps with broadcast engineering. Telecommunication is a diverse field of engineering connected to electronic, civil and systems engineering. Ultimately, telecom engineers are responsible for providing high-speed data transmission services. They use a variety of equipment and transport media to design the telecom network infrastructure; the most common media used by wired telecommunications today are twisted pair, co ...

BCH Code

In coding theory, the Bose–Chaudhuri–Hocquenghem codes (BCH codes) form a class of cyclic error-correcting codes that are constructed using polynomials over a finite field (also called a Galois field). BCH codes were invented in 1959 by French mathematician Alexis Hocquenghem, and independently in 1960 by Raj Chandra Bose and D. K. Ray-Chaudhuri. The name Bose–Chaudhuri–Hocquenghem (and the acronym BCH) arises from the initials of the inventors' surnames (mistakenly, in the case of Ray-Chaudhuri). One of the key features of BCH codes is that during code design, there is precise control over the number of symbol errors correctable by the code. In particular, it is possible to design binary BCH codes that can correct multiple bit errors. Another advantage of BCH codes is the ease with which they can be decoded, namely, via an algebraic method known as syndrome decoding. This simplifies the design of the decoder for these codes, using small ...