
Bimatrix Game

In game theory, a bimatrix game is a simultaneous game for two players in which each player has a finite number of possible actions. The name comes from the fact that the normal form of such a game can be described by two matrices: matrix A describing the payoffs of player 1 and matrix B describing the payoffs of player 2. Player 1 is often called the "row player" and player 2 the "column player". If player 1 has m possible actions and player 2 has n possible actions, then each of the two matrices has m rows by n columns. When the row player selects the i-th action and the column player selects the j-th action, the payoff to the row player is A_{i,j} and the payoff to the column player is B_{i,j}. The players can also play mixed strategies. A mixed strategy for the row player is a non-negative vector x of length m such that \sum_{i=1}^m x_i = 1. Similarly, a mixed strategy for the column player is a non-negative vector y of length n such that \sum_{j=1}^n y_j = 1. When the players ...
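
As a minimal sketch of the definitions above: under mixed strategies x and y, the expected payoff to the row player is conventionally x^T A y and to the column player x^T B y. The function name `expected_payoff` and the example matrices (matching pennies) are assumptions chosen for illustration; they do not come from the text.

```python
def expected_payoff(M, x, y):
    """Return x^T M y for an m-by-n payoff matrix M given as a list of rows."""
    return sum(x[i] * M[i][j] * y[j]
               for i in range(len(x))
               for j in range(len(y)))

# Hypothetical 2x2 game (matching pennies), used only as an example.
A = [[1, -1], [-1, 1]]   # payoffs of the row player
B = [[-1, 1], [1, -1]]   # payoffs of the column player

# Mixed strategies: non-negative vectors whose entries sum to 1.
x = [0.5, 0.5]
y = [0.5, 0.5]

payoff_row = expected_payoff(A, x, y)
payoff_col = expected_payoff(B, x, y)
```

A pure strategy is the special case where one entry of the vector is 1 and the rest are 0, in which case the expression reduces to the single matrix entry A_{i,j} or B_{i,j}.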

Game Theory

Game theory is the study of mathematical models of strategic interactions among rational agents (Myerson, Roger B. (1991). ''Game Theory: Analysis of Conflict'', Harvard University Press, p. 1; chapter-preview links, pp. vii–xi). It has applications in all fields of social science, as well as in logic, systems science and computer science. Originally, it addressed two-person zero-sum games, in which each participant's gains or losses are exactly balanced by those of other participants. In the 21st century, game theory applies to a wide range of behavioral relations; it is now an umbrella term for the science of logical decision making in humans, animals, and computers. Modern game theory began with the idea of mixed-strategy equilibria in two-person zero-sum games and its proof by John von Neumann. Von Neumann's original proof used the Brouwer fixed-point theorem on continuous mappings into compact convex sets, which became a standard method in game theory and mathema ...

Simultaneous Game

In game theory, a simultaneous game or static game is a game where each player chooses their action without knowledge of the actions chosen by other players. Simultaneous games contrast with sequential games, which are played by the players taking turns (moves alternate between players). In other words, both players normally act at the same time in a simultaneous game. Even if the players do not act at the same time, both players are uninformed of each other's move while making their decisions. Normal form representations are usually used for simultaneous games (Mailath, G., Samuelson, L. and Swinkels, J., 1993. Extensive Form Reasoning in Normal Form Games. Econometrica, 61(2), pp. 273-278. Accessed 30 October 2020). Given a continuous game, players will have different information sets if the game is simultaneous than if it is sequential because they have less information to act on at each step in the game. For example, in a two player continuous game that is se ...

Normal-form Game

In game theory, normal form is a description of a ''game''. Unlike extensive form, normal-form representations are not graphical ''per se'', but rather represent the game by way of a matrix. While this approach can be of greater use in identifying strictly dominated strategies and Nash equilibria, some information is lost as compared to extensive-form representations. The normal-form representation of a game includes all perceptible and conceivable strategies, and their corresponding payoffs, for each player. In static games of complete, perfect information, a normal-form representation of a game is a specification of players' strategy spaces and payoff functions. A strategy space for a player is the set of all strategies available to that player, whereas a strategy is a complete plan of action for every stage of the game, regardless of whether that stage actually arises in play. A payoff function for a player is a mapping from the cross-product of players' strategy spaces to that ...

Matrix (mathematics)

In mathematics, a matrix (plural matrices) is a rectangular array or table of numbers, symbols, or expressions, arranged in rows and columns, which is used to represent a mathematical object or a property of such an object. For example, \begin{bmatrix} 1 & 9 & -13 \\ 20 & 5 & -6 \end{bmatrix} is a matrix with two rows and three columns. This is often referred to as a "two by three matrix", a "2 × 3 matrix", or a matrix of dimension 2 × 3. Without further specifications, matrices represent linear maps, and allow explicit computations in linear algebra. Therefore, the study of matrices is a large part of linear algebra, and most properties and operations of abstract linear algebra can be expressed in terms of matrices. For example, matrix multiplication represents composition of linear maps. Not all matrices are related to linear algebra. This is, in particular, the case in graph theory, of incidence matrices, and adjacency matrices. ''This article focuses on matrices related to linear algebra, and, unle ...

Mixed Strategies

In game theory, a player's strategy is any of the options which they choose in a setting where the outcome depends ''not only'' on their own actions ''but'' on the actions of others. The discipline mainly concerns the action of a player in a game affecting the behavior or actions of other players. Some examples of "games" include chess, bridge, poker, monopoly, diplomacy or battleship. A player's strategy will determine the action which the player will take at any stage of the game. In studying game theory, economists enlist a more rational lens in analyzing decisions rather than the psychological or sociological perspectives taken when analyzing relationships between decisions of two or more parties in different disciplines. The strategy concept is sometimes (wrongly) confused with that of a move. A move is an action taken by a player at some point during the play of a game (e.g., in chess, moving white's Bishop a2 to b3). A strategy on the other hand is a complete algorithm for p ...

Nash Equilibrium

In game theory, the Nash equilibrium, named after the mathematician John Nash, is the most common way to define the solution of a non-cooperative game involving two or more players. In a Nash equilibrium, each player is assumed to know the equilibrium strategies of the other players, and no one has anything to gain by changing only one's own strategy. The principle of Nash equilibrium dates back to the time of Cournot, who in 1838 applied it to competing firms choosing outputs. If each player has chosen a strategy (an action plan based on what has happened so far in the game) and no one can increase one's own expected payoff by changing one's strategy while the other players keep theirs unchanged, then the current set of strategy choices constitutes a Nash equilibrium. If two players Alice and Bob choose strategies A and B, (A, B) is a Nash equilibrium if Alice has no other strategy available that does better than A at maximizing her payoff in response to Bob choosing B, and Bob ...
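
The "no one can gain by changing only one's own strategy" condition can be checked directly for pure strategies in a two-player game. The sketch below is an illustration under stated assumptions: the helper name `pure_nash_equilibria` and the example payoffs (a prisoner's dilemma) are hypothetical, and the check covers pure strategies only, so it can return an empty list for games whose equilibria are all mixed.

```python
def pure_nash_equilibria(A, B):
    """List all (i, j) where neither player gains by deviating alone.

    A[i][j] is the row player's payoff and B[i][j] the column player's
    payoff when row action i meets column action j.
    """
    m, n = len(A), len(A[0])
    equilibria = []
    for i in range(m):
        for j in range(n):
            # Row player cannot do better by switching rows in column j.
            row_is_best = all(A[i][j] >= A[k][j] for k in range(m))
            # Column player cannot do better by switching columns in row i.
            col_is_best = all(B[i][j] >= B[i][l] for l in range(n))
            if row_is_best and col_is_best:
                equilibria.append((i, j))
    return equilibria

# Hypothetical prisoner's dilemma: action 0 = cooperate, action 1 = defect.
A = [[-1, -3], [0, -2]]
B = [[-1, 0], [-3, -2]]
equilibria = pure_nash_equilibria(A, B)
```

In this example the only cell that survives both checks is mutual defection, index pair (1, 1).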

Linear Complementarity Problem

In mathematical optimization theory, the linear complementarity problem (LCP) arises frequently in computational mechanics and encompasses the well-known quadratic programming as a special case. It was proposed by Cottle and Dantzig in 1968. Formulation: Given a real matrix ''M'' and vector ''q'', the linear complementarity problem LCP(''q'', ''M'') seeks vectors ''z'' and ''w'' which satisfy the following constraints: (i) w, z \geqslant 0 (that is, each component of these two vectors is non-negative); (ii) z^T w = 0, or equivalently \sum\nolimits_i w_i z_i = 0, which is the complementarity condition, since it implies that, for all i, at most one of w_i and z_i can be positive; (iii) w = Mz + q. A sufficient condition for existence and uniqueness of a solution to this problem is that ''M'' be symmetric positive-definite. If ''M'' is such that LCP(''q'', ''M'') has a solution for every ''q'', then ''M'' is a Q-matrix. If ''M'' is such that LCP(''q'', ''M'') has a unique solution for every ''q'', then ''M'' is a P-matrix ...
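
The three constraints can be turned into a small verifier for a candidate ''z''. This is a sketch for checking a proposed solution, not a solver; the function name `is_lcp_solution`, the tolerance parameter, and the example data are assumptions made for illustration.

```python
def is_lcp_solution(M, q, z, tol=1e-9):
    """Check whether z solves LCP(q, M), with w defined as M z + q."""
    n = len(q)
    # Constraint: w = M z + q
    w = [sum(M[i][j] * z[j] for j in range(n)) + q[i] for i in range(n)]
    # Constraint: w >= 0 and z >= 0, componentwise
    nonnegative = all(v >= -tol for v in w) and all(v >= -tol for v in z)
    # Constraint: z^T w = 0 (complementarity)
    complementary = abs(sum(w[i] * z[i] for i in range(n))) <= tol
    return nonnegative and complementary

# Hypothetical data: M is symmetric positive-definite, so the solution is unique.
M = [[2, 1], [1, 2]]
q = [-4, -5]

ok = is_lcp_solution(M, q, [1, 2])    # w = [0, 0], so all constraints hold
bad = is_lcp_solution(M, q, [0, 0])   # w = q has negative entries
```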

Lemke–Howson Algorithm

The Lemke–Howson algorithm is an algorithm that computes a Nash equilibrium of a bimatrix game, named after its inventors, Carlton E. Lemke and J. T. Howson. It is said to be "the best known among the combinatorial algorithms for finding a Nash equilibrium", although more recently the Porter-Nudelman-Shoham algorithm has outperformed it on a number of benchmarks. Description: The input to the algorithm is a 2-player game ''G''. ''G'' is represented by two ''m'' × ''n'' game matrices A and B, containing the payoffs for players 1 and 2 respectively, who have ''m'' and ''n'' pure strategies respectively. In the following we assume that all payoffs are positive. (By rescaling, any game can be transformed to a strategically equivalent game with positive payoffs.) ''G'' has two corresponding polytopes (called the ''best-response polytopes'') P1 and P2, in ''m'' dimensions and ''n'' dimensions respectively, defined as follows. P1 is in \mathbb{R}^m; let \{x_1,\ldots,x_m\} denote the coordinates. P1 ...

Reduction (complexity)

In computability theory and computational complexity theory, a reduction is an algorithm for transforming one problem into another problem. A sufficiently efficient reduction from one problem to another may be used to show that the second problem is at least as difficult as the first. Intuitively, problem ''A'' is reducible to problem ''B'' if an algorithm for solving problem ''B'' efficiently (if it existed) could also be used as a subroutine to solve problem ''A'' efficiently. When this is true, solving ''A'' cannot be harder than solving ''B''. "Harder" means having a higher estimate of the required computational resources in a given context (e.g., higher time complexity, greater memory requirement, expensive need for extra hardware processor cores for a parallel solution compared to a single-threaded solution, etc.). The existence of a reduction from ''A'' to ''B'' can be written in the shorthand notation ''A'' ≤m ''B'', usually with a subscript on the ≤ to indicate the t ...

Leontief Utilities

In economics, especially in consumer theory, a Leontief utility function is a function of the form: u(x_1,\ldots,x_m)=\min\left\{\frac{x_1}{w_1},\ldots,\frac{x_m}{w_m}\right\}, where: m is the number of different goods in the economy; x_i (for i\in \{1,\dots,m\}) is the amount of good i in the bundle; w_i (for i\in \{1,\dots,m\}) is the weight of good i for the consumer. This form of utility function was first conceptualized by Wassily Leontief. Examples: Leontief utility functions represent complementary goods. For example, suppose x_1 is the number of left shoes and x_2 the number of right shoes. A consumer can only use pairs of shoes. Hence, his utility is \min(x_1,x_2). As another example, in a cloud computing environment, there is a large server that runs many different tasks. Suppose a certain type of task requires 2 CPUs, 3 gigabytes of memory and 4 gigabytes of disk space to complete. The utility of the user is equal to the number of completed tasks. Hence, it can be represented by: \min\left(\frac{x_{\mathrm{CPU}}}{2}, \frac{x_{\mathrm{MEM}}}{3}, \frac{x_{\mathrm{DISK}}}{4}\right). Properties: A consumer with a Leonti ...
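
The two examples can be worked numerically. The sketch below assumes the usual form of the function, the minimum over goods of x_i / w_i; the function name `leontief_utility` and the specific bundles are hypothetical values picked for illustration.

```python
def leontief_utility(bundle, weights):
    """Return min over goods of (amount of good i) / (weight of good i)."""
    return min(x / w for x, w in zip(bundle, weights))

# Shoes: 3 left and 5 right shoes, each with weight 1, give 3 usable pairs.
pairs = leontief_utility([3, 5], [1, 1])

# Cloud tasks: each task needs 2 CPUs, 3 GB of memory and 4 GB of disk.
# With 8 CPUs, 9 GB of memory and 20 GB of disk, memory is the bottleneck.
tasks = leontief_utility([8, 9, 20], [2, 3, 4])
```

Here the extra two right shoes and the spare CPU and disk capacity add nothing to utility, which is what it means for the goods to be complementary.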

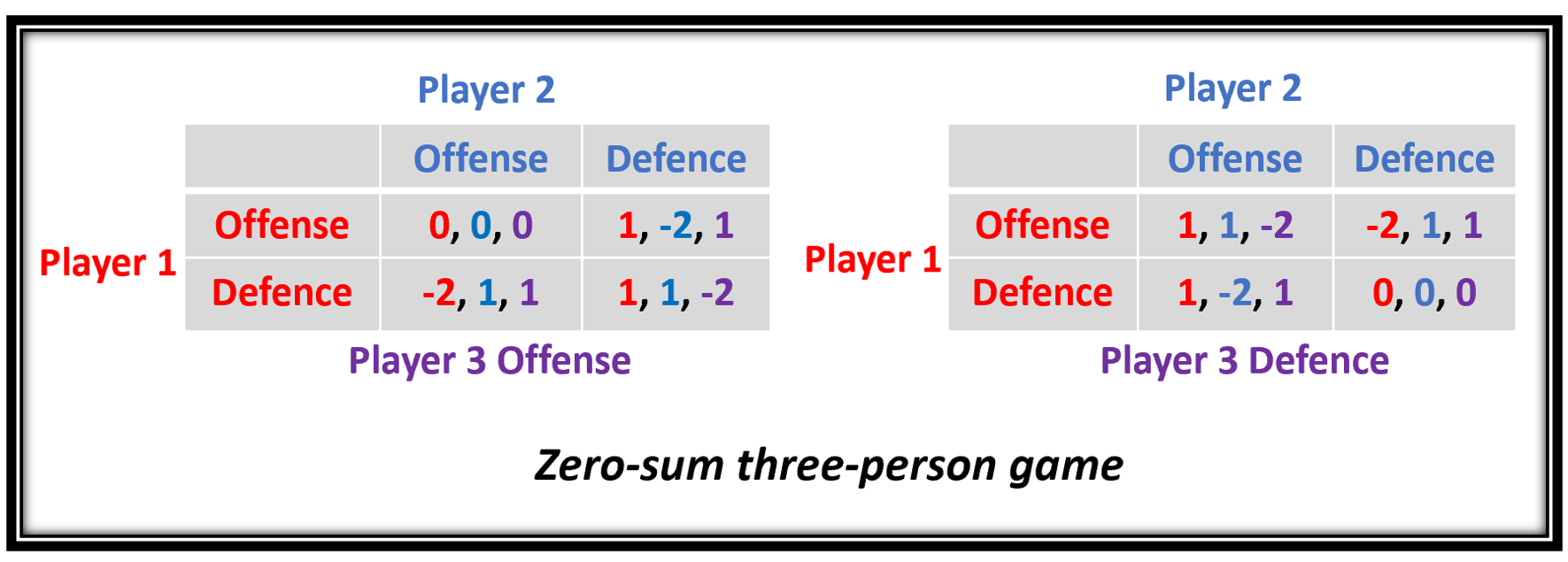

Zero-sum Game

A zero-sum game is a mathematical representation in game theory and economic theory of a situation which involves two sides, where the result is an advantage for one side and an equivalent loss for the other. In other words, player one's gain is equivalent to player two's loss, therefore the net improvement in benefit of the game is zero. If the total gains of the participants are added up, and the total losses are subtracted, they will sum to zero. Thus, cutting a cake, where taking a more significant piece reduces the amount of cake available for others as much as it increases the amount available for that taker, is a zero-sum game if all participants value each unit of cake equally. Other examples of zero-sum games in daily life include games like poker, chess, and bridge where one person gains and another person loses, which results in a zero-net benefit for every player. In the markets and financial instruments, futures contracts and options are zero-sum games as well. In c ...
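
For a two-player game written as a pair of payoff matrices A and B, the zero-sum condition says that every pair of corresponding entries sums to zero, that is, B = -A. The check below is a minimal sketch; the function name `is_zero_sum` and both example games are assumptions chosen for illustration.

```python
def is_zero_sum(A, B):
    """Return True if A[i][j] + B[i][j] == 0 for every pair of actions."""
    return all(a + b == 0
               for row_a, row_b in zip(A, B)
               for a, b in zip(row_a, row_b))

# Hypothetical matching-pennies payoffs: one player's gain is the other's loss.
pennies_A = [[1, -1], [-1, 1]]
pennies_B = [[-1, 1], [1, -1]]

# Hypothetical prisoner's dilemma payoffs: both players can lose together.
dilemma_A = [[-1, -3], [0, -2]]
dilemma_B = [[-1, 0], [-3, -2]]

zero_sum_pennies = is_zero_sum(pennies_A, pennies_B)
zero_sum_dilemma = is_zero_sum(dilemma_A, dilemma_B)
```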