
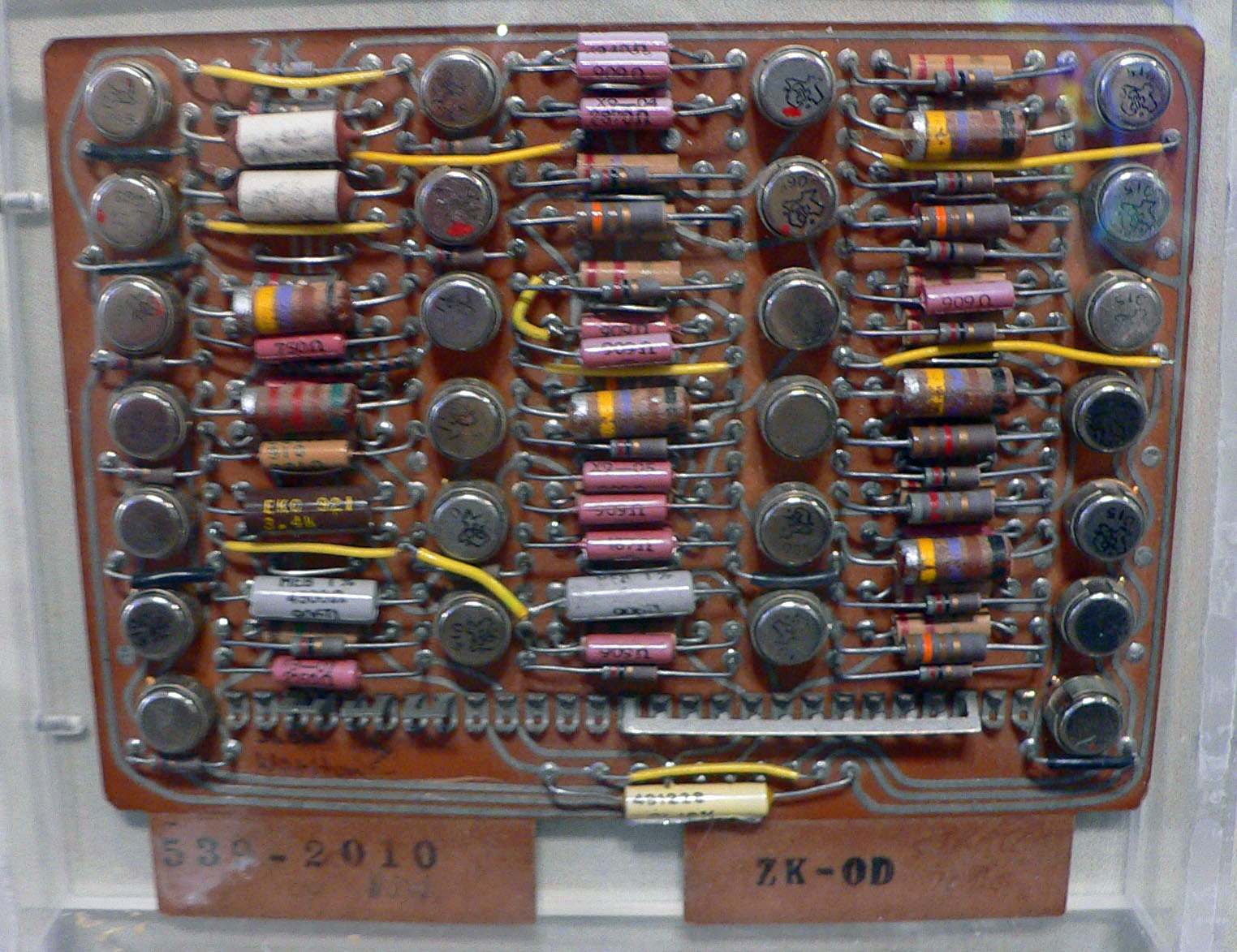

BYTE

The byte is a unit of digital information that most commonly consists of eight bits. Historically, the byte was the number of bits used to encode a single character of text in a computer and for this reason it is the smallest addressable unit of memory in many computer architectures. To disambiguate arbitrarily sized bytes from the common 8-bit definition, network protocol documents such as the Internet Protocol refer to an 8-bit byte as an octet. The bits in an octet are usually numbered from 0 to 7 or from 7 to 0, depending on the bit endianness. The size of the byte has historically been hardware-dependent, and no definitive standard mandated its size. Sizes from 1 to 48 bits have been used. The six-bit character code was an often-used implementation in early encoding systems, and computers using six-bit and nine-bit bytes were common in the 1960s. These systems often had memory words of 12, 18, 24, 30, 36, 48, or 60 bits, corresponding t ...
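As a rough illustration of the two bit-numbering conventions mentioned above, the following Python sketch reads the bits of an octet with index 0 taken either as the least significant or as the most significant bit. The function name `bit_at` and its `lsb0` parameter are illustrative assumptions, not terms taken from any standard.

```python
def bit_at(octet: int, index: int, lsb0: bool = True) -> int:
    """Return bit `index` (0..7) of an 8-bit value.

    With lsb0=True, index 0 is the least significant bit.
    With lsb0=False, index 0 is the most significant bit.
    """
    if not 0 <= octet <= 0xFF:
        raise ValueError("octet must be in the range 0..255")
    if not 0 <= index <= 7:
        raise ValueError("index must be in the range 0..7")
    shift = index if lsb0 else 7 - index
    return (octet >> shift) & 1


value = 0b1000_0010  # decimal 130

# The same octet listed under both numbering conventions.
lsb0_bits = [bit_at(value, i, lsb0=True) for i in range(8)]
msb0_bits = [bit_at(value, i, lsb0=False) for i in range(8)]
```

The stored value is identical in both cases; only the label attached to each bit position changes.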

Werner Buchholz

Werner Buchholz (24 October 1922 – 11 July 2019) was a German-American computer scientist. After growing up in Europe, Buchholz moved to Canada and then to the United States. He worked for International Business Machines (IBM) in New York. In June 1956, he coined the term "byte" for a unit of digital information. In 1990, he was recognized as a computer pioneer by the Institute of Electrical and Electronics Engineers.

Biography

Early life

Werner Buchholz was born on 24 October 1922 in Detmold, Germany. He and his older brother, Carl Hellmut, were the sons of a merchant and his wife. Due to the growing antisemitism in Detmold, the family moved to Cologne in 1936. Werner was able to go to England in 1938, where he attended school, while Carl Hellmut emigrated to the United States. Because of the threat of invasion in May 1940, Werner was interned by the British along with other refugee students and later sent to Canada. With the help of the Jewish community in Toronto, he was rele ...

Octet (computing)

The octet is a unit of digital information in computing and telecommunications that consists of eight bits. The term is often used when the term ''byte'' might be ambiguous, as the byte has historically been used for storage units of a variety of sizes. The term ''octad(e)'' for eight bits is no longer common.

Definition

The international standard IEC 60027-2, chapter 3.8.2, states that a byte is an octet of bits. However, the unit byte has historically been platform-dependent and has represented various storage sizes in the history of computing. Due to the influence of several major computer architectures and product lines, the byte became overwhelmingly associated with eight bits. This meaning of ''byte'' is codified in such standards as ISO/IEC 80000-13. While ''byte'' and ''octet'' are often used synonymously, those working with certain legacy systems are careful to avoid ambiguity. Octets can be represented using number systems of varying bases such as ...
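To make the idea of writing one octet in several bases concrete, here is a minimal Python sketch. The excerpt above is cut off before it names specific bases, so the choice of binary, octal, decimal, and hexadecimal is an assumption, as is the helper name `octet_forms`.

```python
def octet_forms(value: int) -> dict[str, str]:
    """Render one octet (0..255) in several number bases.

    The selection of bases is an assumption made for illustration.
    """
    if not 0 <= value <= 0xFF:
        raise ValueError("an octet holds values in the range 0..255")
    return {
        "binary": format(value, "08b"),       # eight binary digits
        "octal": format(value, "03o"),        # three octal digits
        "decimal": str(value),
        "hexadecimal": format(value, "02X"),  # two hexadecimal digits
    }


forms = octet_forms(0xA5)
```

All four strings denote the same eight-bit value; the zero padding only fixes the number of digits shown in each base.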

Binary Number

A binary number is a number expressed in the base-2 numeral system or binary numeral system, a method for representing numbers that uses only two symbols for the natural numbers: typically "0" (zero) and "1" (one). A ''binary number'' may also refer to a rational number that has a finite representation in the binary numeral system, that is, the quotient of an integer by a power of two. The base-2 numeral system is a positional notation with a radix of 2. Each digit is referred to as a bit, or binary digit. Because of its straightforward implementation in digital electronic circuitry using logic gates, the binary system is used by almost all modern computers and computer-based devices, as a preferred system of use, over various other human techniques of communication, because of the simplicity of the language and the noise immunity in physical implementation.

History

The modern binary number system was studied in Europe in the 16th and 17th centuries by Thoma ...
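The positional rule described above can be sketched in a few lines of Python: each digit to the left of the point doubles the running value, and each digit to the right contributes a successively halved weight. The function name `parse_binary` is a hypothetical helper chosen for this example, and exact fractions are used so that results stay quotients of an integer by a power of two.

```python
from fractions import Fraction


def parse_binary(text: str) -> Fraction:
    """Evaluate a binary numeral such as '1011' or '10.01' exactly."""
    whole, _, frac = text.partition(".")
    if not whole and not frac:
        raise ValueError("empty numeral")

    value = Fraction(0)
    for digit in whole:
        if digit not in "01":
            raise ValueError(f"invalid binary digit: {digit!r}")
        # Shifting left by one position multiplies by the radix, 2.
        value = value * 2 + int(digit)

    weight = Fraction(1, 2)  # weight of the first digit after the point
    for digit in frac:
        if digit not in "01":
            raise ValueError(f"invalid binary digit: {digit!r}")
        value += int(digit) * weight
        weight /= 2
    return value


eleven = parse_binary("1011")       # 8 + 2 + 1
five_eighths = parse_binary("0.101")  # 1/2 + 1/8
```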

DEC PDP-10

Digital Equipment Corporation (DEC)'s PDP-10, later marketed as the DECsystem-10, is a mainframe computer family manufactured beginning in 1966 and discontinued in 1983. Models from the 1970s onward were marketed under the DECsystem-10 name, especially as the TOPS-10 operating system became widely used. The PDP-10's architecture is almost identical to that of DEC's earlier PDP-6, sharing the same 36-bit word length and slightly extending the instruction set. The main difference was a greatly improved hardware implementation. Some aspects of the instruction set are unusual, most notably the ''byte'' instructions, which operate on bit fields of any size from 1 to 36 bits inclusive, according to the general definition of a byte as ''a contiguous sequence of a fixed number of bits''. The PDP-10 was found in many university computing facilities and research labs during the 1970s, the most notable being Harvard University's Aiken Computation Laboratory, MIT's AI Lab and Project MAC, ...
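The notion of a byte as an arbitrary bit field inside a 36-bit word can be illustrated with a short Python sketch. This is not the PDP-10 instruction encoding; the helpers `load_field` and `deposit_field`, and the convention that `position` counts the bits to the right of the field, are assumptions made for the example. The 7-bit packing at the end is likewise just one possible field size.

```python
WORD_BITS = 36  # word length stated in the text above


def _check(position: int, size: int) -> None:
    if not 1 <= size <= WORD_BITS:
        raise ValueError("field size must be 1..36 bits")
    if position < 0 or position + size > WORD_BITS:
        raise ValueError("field does not fit inside the word")


def load_field(word: int, position: int, size: int) -> int:
    """Read a `size`-bit field with `position` bits to its right."""
    _check(position, size)
    return (word >> position) & ((1 << size) - 1)


def deposit_field(word: int, position: int, size: int, value: int) -> int:
    """Return `word` with the selected field replaced by `value`."""
    _check(position, size)
    mask = ((1 << size) - 1) << position
    return (word & ~mask) | ((value << position) & mask)


# Example: five 7-bit character codes packed left to right in one word,
# leaving the lowest bit unused (5 * 7 = 35 of the 36 bits).
positions = [29, 22, 15, 8, 1]
word = 0
for pos, ch in zip(positions, "HELLO"):
    word = deposit_field(word, pos, 7, ord(ch))

unpacked = "".join(chr(load_field(word, pos, 7)) for pos in positions)
```

Because the field size is a parameter rather than a constant, the same two helpers cover 6-bit, 7-bit, 9-bit, or any other width that fits in the word.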

CDC 3600

The CDC 3000 series ("thirty-six hundred" or "thirty-one hundred") are a family of mainframe computers from Control Data Corporation (CDC). The first member, the CDC 3600, was a 48-bit system introduced in 1963. The same basic design led to the cut-down CDC 3400 of 1964, and then the 24-bit CDC 3300, 3200 and 3100 introduced between 1964 and 1965. The 3000 series replaced the earlier CDC 1604 and CDC 924 systems. The line was a great success and became CDC's cash cow through the 1960s. The series significantly outsold the much faster and more expensive machines in the CDC 6000 series, but the performance of the 3000s relative to other vendors quickly eroded. The line was phased out of production in the early 1970s in favour of new members of the 6000 series, and then the CDC Cyber series, initially based on the 6600 design but spanning a wide range of performance.

Specifications

Upper 3000 series

The upper 3000 series ...

Burroughs B1700

The Burroughs B1000 Series was a series of mainframe computers, built by the Burroughs Corporation, and originally introduced in the 1970s with continued software development until 1987. The series consisted of three major generations: the B1700, B1800, and B1900 series machines. They were also known as the Burroughs Small Systems, by contrast with the Burroughs Large Systems (B5000, B6000, B7000, B8000) and the Burroughs Medium Systems (B2000, B3000, B4000). Much of the original research for the B1700, initially codenamed the PLP ("Proper Language Processor" or "Program Language Processor"), was done at the Burroughs Pasadena plant (Bunker, et al., 1968). Production of the B1700s began in the mid-1970s and occurred at both the ...

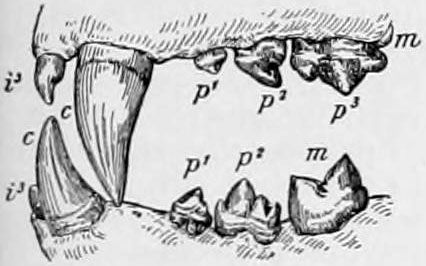

Bite

Biting is an action involving a set of teeth closing down on an object. It is a common zoological behavior, being found in toothed animals such as mammals, reptiles, amphibians, fishes, and arthropods. Biting is also an action humans participate in, most commonly when chewing food. Myocytic contraction of the muscles of mastication is responsible for generating the force that initiates the preparatory jaw abduction (opening), then rapidly adducts (closes) the jaw and moves the top and bottom teeth towards each other, resulting in the forceful action of a bite. Biting is one of the main functions in the lives of larger organisms, providing them the ability to forage, hunt, eat, build, play, fight, protect, and much more. Biting may be a form of physical aggression due to predatory or territorial intentions. In animals, biting can also be a normal activity, being used for eating, scratching, carrying objects, preparing food for young, removing ectoparasites or irritating foreign ...

IBM 7030

The IBM 7030, also known as Stretch, was IBM's first transistorized supercomputer. It was the fastest computer in the world from 1961 until the first CDC 6600 became operational in 1964. ("Designed by Seymour Cray, the CDC 6600 was almost three times faster than the next fastest machine of its day, the IBM 7030 Stretch.") Originally designed to meet a requirement formulated by Edward Teller at Lawrence Livermore National Laboratory, the first example was delivered to Los Alamos National Laboratory in 1961, and a second customized version, the IBM 7950 Harvest, to the National Security Agency in 1962. The Stretch at the Atomic Weapons Research Establishment at Aldermaston, England was heavily used by researchers there and at AERE Harwell, but only after the development of the S2 Fortran compiler, which was the first to add dynamic arrays and which was later ported to the Ferranti Atlas of Atlas Computer Laboratory at Chilton. The 7030 was much slower than expected and ...