
Byte

The byte is a unit of digital information that most commonly consists of eight bits. Historically, the byte was the number of bits used to encode a single character of text in a computer, and for this reason it is the smallest addressable unit of memory in many computer architectures. To disambiguate arbitrarily sized bytes from the common 8-bit definition, network protocol documents such as The Internet Protocol refer to an 8-bit byte as an octet. The bits in an octet are usually numbered from 0 to 7 or from 7 to 0, depending on the bit endianness; the first bit is number 0, making the eighth bit number 7. The size of the byte has historically been hardware-dependent, and no definitive standard mandated it. Sizes from 1 to 48 bits have been used. The six-bit character code was an often-used implementation in early encoding systems, and computers using six-bit and nine-bit bytes were common in the 1960s. These systems often had memory words ...
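
The two bit-numbering conventions mentioned above can be made concrete with a small sketch. It assumes an octet is held in a Python int between 0 and 255; the helper names `bit_lsb0` and `bit_msb0` are hypothetical and chosen only for illustration. In both schemes the first bit is number 0; they differ in which end of the octet counts as "first".

```python
def bit_lsb0(octet: int, i: int) -> int:
    """Return bit i of an octet, numbering from 0 at the least significant bit."""
    if not 0 <= octet <= 0xFF or not 0 <= i <= 7:
        raise ValueError("octet must be 0..255 and i must be 0..7")
    return (octet >> i) & 1


def bit_msb0(octet: int, i: int) -> int:
    """Return bit i of an octet, numbering from 0 at the most significant bit."""
    return bit_lsb0(octet, 7 - i)


value = 0b1000_0010  # 130
print([bit_lsb0(value, i) for i in range(8)])  # [0, 1, 0, 0, 0, 0, 0, 1]
print([bit_msb0(value, i) for i in range(8)])  # [1, 0, 0, 0, 0, 0, 1, 0]
```

The same eight bits produce reversed lists, which is why a protocol or data sheet has to state which numbering it uses.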

Endianness

In computing, endianness, also known as byte sex, is the order or sequence of bytes of a word of digital data in computer memory. Endianness is primarily expressed as big-endian (BE) or little-endian (LE). A big-endian system stores the most significant byte of a word at the smallest memory address and the least significant byte at the largest. A little-endian system, in contrast, stores the least significant byte at the smallest address. Bi-endianness is a feature of numerous computer architectures that can switch endianness for data fetches and stores or for instruction fetches. Other orderings are generically called middle-endian or mixed-endian. Endianness may also describe the order in which bits are transmitted over a communication channel; for example, a big-endian communication channel transmits the most significant bits first. Bit-endianness is seldom used in other contexts. Etymology: Danny Cohen introduced the terms "big-endian" a ...
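
A minimal sketch of the two byte orders, using Python's standard `struct` module and `int.to_bytes`. The 32-bit value `0x0A0B0C0D` is an arbitrary example chosen so that each byte is easy to spot; index 0 of each resulting `bytes` object stands in for the smallest memory address.

```python
import struct
import sys

word = 0x0A0B0C0D  # a 32-bit word; 0x0A is the most significant byte

big = struct.pack(">I", word)     # big-endian: most significant byte at index 0
little = struct.pack("<I", word)  # little-endian: least significant byte at index 0

print(big.hex(" "))     # 0a 0b 0c 0d
print(little.hex(" "))  # 0d 0c 0b 0a

# int.to_bytes produces the same two orderings without a format string.
assert word.to_bytes(4, "big") == big
assert word.to_bytes(4, "little") == little

# Native byte order of the machine running this interpreter.
print(sys.byteorder)  # "little" or "big"
```

`bytes.hex` with a separator argument requires Python 3.8 or later.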

Units of information

In computing and telecommunications, a unit of information is the capacity of some standard data storage system or communication channel, used to measure the capacities of other systems and channels. In information theory, units of information are also used to measure the information contained in messages and the entropy of random variables. The most commonly used units of data storage capacity are the bit, the capacity of a system that has only two states, and the byte (or octet), which is equivalent to eight bits. Multiples of these units can be formed with the SI prefixes (power-of-ten prefixes) or the newer IEC binary prefixes (power-of-two prefixes). Primary units: In 1928, Ralph Hartley observed a fundamental storage principle, which was further formalized by Claude Shannon in 1945: the information that can be stored in a system is proportional to the logarithm of the number $N$ of possible states of that system, denoted $\log_b N$. Changing the base of the logarithm from $b$ to a diff ...
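
A short sketch of the logarithmic capacity rule and of the two prefix systems. It assumes the $N$ states are counted as a plain integer; the function names are hypothetical. Base 2 gives the capacity in bits, and changing to another base $b$ only rescales it by the constant $1/\log_2 b$.

```python
import math


def capacity_bits(n_states: int) -> float:
    """Capacity, in bits, of a system with n_states possible states: log2(N)."""
    return math.log2(n_states)


def capacity_in_base(n_states: int, base: float) -> float:
    """The same capacity measured with a different logarithm base: log_b N = log2 N / log2 b."""
    return math.log2(n_states) / math.log2(base)


print(capacity_bits(2))           # 1.0, one bit
print(capacity_bits(256))         # 8.0, one octet
print(capacity_in_base(256, 16))  # 2.0, two hexadecimal digits

# SI (power-of-ten) versus IEC (power-of-two) multiples of the byte.
KB, MB = 10**3, 10**6    # kilobyte, megabyte
KiB, MiB = 2**10, 2**20  # kibibyte, mebibyte
print(MiB - MB)          # 48576 bytes of difference
```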

Word (computer architecture)

In computing, a word is the natural unit of data used by a particular processor design. A word is a fixed-sized datum handled as a unit by the instruction set or the hardware of the processor. The number of bits or digits in a word (the word size, word width, or word length) is an important characteristic of any specific processor design or computer architecture. The size of a word is reflected in many aspects of a computer's structure and operation: the majority of the registers in a processor are usually word-sized, and in many (but not all) architectures the largest datum that can be transferred to and from the working memory in a single operation is a word. The largest possible address size, used to designate a location in memory, is typically a hardware word (here, "hardware word" means the full-sized natural word of the processor, as opposed to any other definition used). Documentation for older computers with fixed word size commonly states memory sizes in words ra ...
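
Because an address is typically one hardware word, the word size bounds how many memory locations can be designated. The sketch below assumes each distinct address names one location; `addressable_locations` is a hypothetical helper. It also reads the pointer width of the running Python build via `struct.calcsize("P")`, which on common platforms matches the native word size but is not guaranteed to.

```python
import struct


def addressable_locations(address_bits: int) -> int:
    """Number of distinct locations an address of the given width can designate."""
    return 2 ** address_bits


# Width in bits of a C pointer in this Python build (an approximation of the
# hardware word on typical platforms, not a definition of it).
pointer_bits = struct.calcsize("P") * 8

print(pointer_bits)                # typically 64 on current desktop systems
print(addressable_locations(16))   # 65536
print(addressable_locations(32))   # 4294967296
```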

Power of two

A power of two is a number of the form $2^n$ where $n$ is an integer, that is, the result of exponentiation with the number two as the base and integer $n$ as the exponent. In a context where only integers are considered, $n$ is restricted to non-negative values, so the powers of two are 1, 2, and 2 multiplied by itself a certain number of times. The first ten powers of 2 for non-negative values of $n$ are: 1, 2, 4, 8, 16, 32, 64, 128, 256, 512, ... Because two is the base of the binary numeral system, powers of two are common in computer science. Written in binary, a power of two always has the form 100...000 or 0.00...001, just like a power of 10 in the decimal system. Computer science: Two to the exponent of $n$, written as $2^n$, is the number of ways the bits in a binary word of length $n$ can be arranged. A word, interpreted as an unsigned integer, can represent values from 0 ($000\ldots000_2$) to $2^n - 1$ ($111\ldots111_2$) inclusive. Corresponding signed integer values can be positive, negative and zero; see signed n ...
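
The binary form 100...000 suggests a common test for powers of two: such a number has exactly one 1 bit, so subtracting 1 clears that bit and sets every lower bit, and the bitwise AND of the two is zero. This is a sketch of that well-known trick, not the only possible test; both helper names are hypothetical.

```python
def is_power_of_two(x: int) -> bool:
    """True if x == 2**n for some non-negative integer n."""
    # x - 1 turns the single 1 bit off and every lower bit on,
    # so x & (x - 1) is zero exactly when x has one 1 bit.
    return x > 0 and (x & (x - 1)) == 0


def unsigned_range(n: int) -> tuple[int, int]:
    """Smallest and largest values of an n-bit word read as an unsigned integer."""
    return 0, 2 ** n - 1


print([2 ** n for n in range(10)])               # [1, 2, 4, 8, 16, 32, 64, 128, 256, 512]
print(is_power_of_two(64), is_power_of_two(96))  # True False
print(unsigned_range(8))                         # (0, 255)
print(bin(2 ** 5))                               # 0b100000
```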

Werner Buchholz

Werner Buchholz (24 October 1922 – 11 July 2019) was a German-American computer scientist. After growing up in Europe, Buchholz moved to Canada and then to the United States. He worked for International Business Machines (IBM) in New York. In June 1956, he coined the term "byte" for a unit of digital information. In 1990, he was recognized as a computer pioneer by the Institute of Electrical and Electronics Engineers. Biography, early life: Werner Buchholz was born on 24 October 1922 in Detmold, Germany. He and his older brother, Carl Hellmut, were the sons of a merchant and his wife. Because of growing antisemitism in Detmold in 1936, the family moved to Cologne. Werner was able to go to England in 1938, where he attended school, while Carl Hellmut emigrated to America. Because of the threat of invasion in May 1940, Werner and other refugee students were interned by the British and later sent to Canada. With the help of the Jewish community in Toronto, he was released in ...

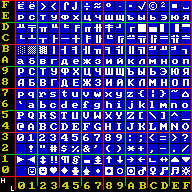

Nibble (computing)

In computing, a nibble (occasionally nybble, nyble, or nybl, to match the spelling of byte) is a four-bit aggregation, or half an octet. It is also known as half-byte or tetrade. In a networking or telecommunication context, the nibble is often called a semi-octet, quadbit, or quartet. A nibble has sixteen ($2^4$) possible values. A nibble can be represented by a single hexadecimal digit (0–F) and called a hex digit. A full byte (octet) is represented by two hexadecimal digits (00–FF); therefore, it is common to display a byte of information as two nibbles. Sometimes the set of all 256 byte values is represented as a table, which gives easily readable hexadecimal codes for each value. Four-bit computer architectures use groups of four bits as their fundamental unit. Such architectures were used in early microprocessors, pocket calculators and pocket computers. They continue to be used in some microcont ...
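
Splitting a byte into its two nibbles takes a shift and a mask, and each nibble then maps to exactly one hex digit. A minimal sketch, assuming the byte is an int between 0 and 255; `nibbles` is a hypothetical helper name.

```python
def nibbles(octet: int) -> tuple[int, int]:
    """Split an octet into its high and low nibbles (4 bits each)."""
    if not 0 <= octet <= 0xFF:
        raise ValueError("octet must be in 0..255")
    return octet >> 4, octet & 0x0F


high, low = nibbles(0xB7)
print(high, low)           # 11 7
print(f"{high:X}{low:X}")  # B7: one hex digit per nibble
print(f"{0xB7:02X}")       # B7: the same byte formatted directly
```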

Octet (computing)

The octet is a unit of digital information in computing and telecommunications that consists of eight bits. The term is often used when the term "byte" might be ambiguous, as the byte has historically been used for storage units of a variety of sizes. The term "octad(e)" for eight bits is no longer common. Definition: The international standard IEC 60027-2, chapter 3.8.2, states that a byte is an octet of bits. However, the unit byte has historically been platform-dependent and has represented various storage sizes in the history of computing. Due to the influence of several major computer architectures and product lines, the byte became overwhelmingly associated with eight bits. This meaning of "byte" is codified in such standards as ISO/IEC 80000-13. While "byte" and "octet" are often used synonymously, those working with certain legacy systems are careful to avoid ambiguity. Octets can be represented using number systems of varying bases such as the hexadeci ...
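
As a small illustration of representing one octet in different bases, the sketch below formats a single value with Python's standard format specifiers. The choice of binary, octal, decimal and hexadecimal, and the fixed digit widths, are assumptions made for the example; `representations` is a hypothetical name.

```python
def representations(octet: int) -> dict[str, str]:
    """Render one octet (0..255) in several number bases."""
    if not 0 <= octet <= 0xFF:
        raise ValueError("an octet holds values 0..255")
    return {
        "binary": f"{octet:08b}",       # always 8 binary digits
        "octal": f"{octet:03o}",        # up to 3 octal digits
        "decimal": f"{octet:d}",
        "hexadecimal": f"{octet:02X}",  # always 2 hex digits
    }


for base, text in representations(0b1010_0101).items():
    print(f"{base:>11}: {text}")
```

For `0b1010_0101` this prints 10100101, 245, 165 and A5.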

Character (computing)

In computer and machine-based telecommunications terminology, a character is a unit of information that roughly corresponds to a grapheme, grapheme-like unit, or symbol, such as in an alphabet or syllabary in the written form of a natural language. Examples of characters include letters, numerical digits, common punctuation marks (such as "." or "-"), and whitespace. The concept also includes control characters, which do not correspond to visible symbols but rather to instructions to format or process the text. Examples of control characters include carriage return and tab, as well as other instructions to printers or other devices that display or otherwise process text. Characters are typically combined into strings. Historically, the term "character" was used to denote a specific number of contiguous bits. While a character is most commonly assumed to refer to 8 bits (one byte) today, other options like the 6-bit character code were once popular, and the 5-bit Baudot ...
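
The sketch below shows printable and control characters side by side, with the numeric code each one is stored as. It assumes ASCII as the character code (a 7-bit code whose values fit in one 8-bit byte each); the sample string is arbitrary.

```python
text = "A7.\t\r"  # letter, digit, punctuation, tab, carriage return

for ch in text:
    kind = "printable" if ch.isprintable() else "control"
    print(repr(ch), ord(ch), kind)

encoded = text.encode("ascii")  # one byte per character in ASCII
print(list(encoded))            # [65, 55, 46, 9, 13]
```

The tab and carriage return occupy a byte each just like the visible characters, even though they describe formatting rather than a symbol.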

8-bit computing

In computer architecture, 8-bit integers or other data units are those that are 8 bits (1 octet) wide. Likewise, 8-bit central processing unit (CPU) and arithmetic logic unit (ALU) architectures are those based on registers or data buses of that size. Memory addresses (and thus address buses) for 8-bit CPUs are generally wider than 8 bits, usually 16 bits. 8-bit microcomputers are microcomputers that use 8-bit microprocessors. The term "8-bit" is also applied to the character sets that could be used on computers with 8-bit bytes, the best known being various forms of extended ASCII, including the ISO/IEC 8859 series of national character sets, especially Latin-1 for English and Western European languages. The IBM System/360 introduced byte-addressable memory with 8-bit bytes, as opposed to bit-addressable, decimal-digit-addressable, or word-addressable memory, although its general-purpose registers were 32 bits wide, and addresses were contained in the lower 24 bits of thos ...
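
A rough model of two consequences of the widths above: an 8-bit register wraps around modulo 256, and the address width, not the data width, sets the size of the address space. `add_u8` is a hypothetical helper that models only the sum and the carry out of bit 7; real 8-bit ALUs also set other status flags.

```python
def add_u8(a: int, b: int) -> tuple[int, bool]:
    """Add two 8-bit unsigned values as an 8-bit ALU would: keep the low
    8 bits of the sum and report whether a carry out of bit 7 occurred."""
    total = (a & 0xFF) + (b & 0xFF)
    return total & 0xFF, total > 0xFF


print(add_u8(200, 100))  # (44, True): 300 wraps around to 300 - 256
print(add_u8(20, 30))    # (50, False)

# Address space sizes for the address widths mentioned above.
print(2 ** 16)  # 65536 bytes (64 KiB) with 16-bit addresses
print(2 ** 24)  # 16777216 bytes (16 MiB) with 24-bit addresses
```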

IEC 80000-13

ISO 80000 or IEC 80000 is an international standard introducing the International System of Quantities (ISQ). It was developed and promulgated jointly by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC). It serves as a style guide for the use of physical quantities and units of measurement, formulas involving them, and their corresponding units, in scientific and educational documents for worldwide use. The ISO/IEC 80000 family of standards was completed with the publication of Part 1 in November 2009. Overview: ISO/IEC 80000 comprises 13 parts, two of which (parts 6 and 13) were developed by IEC and the remaining 11 by ISO, with a further three parts (15, 16 and 17) under development. Part 14 was withdrawn. Subject areas: The 80000 standard currently has 13 parts. Part 1 (General): ISO 80000-1:2009 replaces ISO 31-0:1992 and ISO 1000:1992. It gives general information and definitions con ...

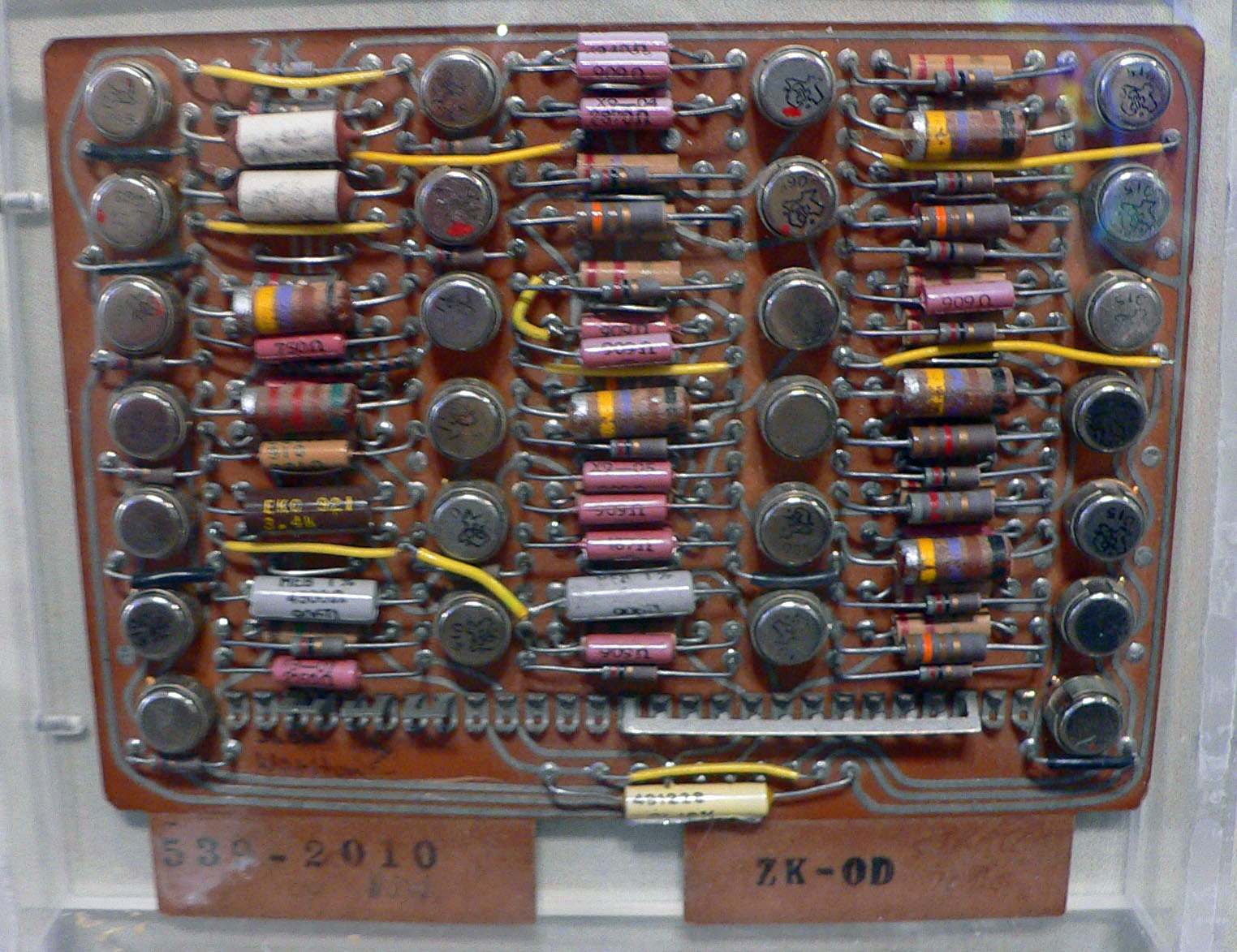

IBM 7030

The IBM 7030, also known as Stretch, was IBM's first transistorized supercomputer. It was the fastest computer in the world from 1961 until the first CDC 6600 became operational in 1964 ("Designed by Seymour Cray, the CDC 6600 was almost three times faster than the next fastest machine of its day, the IBM 7030 Stretch."). The machine was originally designed to meet a requirement formulated by Edward Teller at Lawrence Livermore National Laboratory; the first example was delivered to Los Alamos National Laboratory in 1961, and a second, customized version, the IBM 7950 Harvest, to the National Security Agency in 1962. The Stretch at the Atomic Weapons Research Establishment at Aldermaston, England, was heavily used by researchers there and at AERE Harwell, but only after the development of the S2 Fortran compiler, which was the first to add dynamic arrays and which was later ported to the Ferranti Atlas of the Atlas Computer Laboratory at Chilton. The 7030 was much slower than expected and failed to ...