
Binary128

In computing, quadruple precision (or quad precision) is a binary floating-point computer number format that occupies 16 bytes (128 bits), with precision at least twice the 53-bit double precision. This 128-bit quadruple precision is designed not only for applications requiring results in higher than double precision but also, as a primary function, to allow the computation of double-precision results more reliably and accurately by minimising overflow and round-off errors in intermediate calculations and scratch variables. William Kahan, primary architect of the original IEEE 754 floating-point standard, noted, "For now the 10-byte Extended format is a tolerable compromise between the value of extra-precise arithmetic and the price of implementing it to run fast; very soon two more bytes of precision will become tolerable, and ultimately a 16-byte format ... That kind of gradual evolution towards wider precision was already in view when IEEE Standard 754 for Floating-Poi ...
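
As a rough illustration of the intermediate-overflow point, the sketch below compares a naive double-precision hypotenuse with one whose intermediates are carried at 34 significant decimal digits, which is roughly the 113-bit precision of binary128. Python has no built-in binary128 type, so the `decimal` module stands in for it here; that substitution, and the function names `hypot_naive` and `hypot_wide`, are assumptions made for this example only.

```python
import math
from decimal import Decimal, localcontext


def hypot_naive(x: float, y: float) -> float:
    """sqrt(x^2 + y^2) with every intermediate held in double precision."""
    return math.sqrt(x * x + y * y)


def hypot_wide(x: float, y: float) -> float:
    """Same formula, but intermediates use 34 decimal digits (a stand-in for binary128)."""
    with localcontext() as ctx:
        ctx.prec = 34                     # assumption: ~113 bits is about 34 decimal digits
        dx, dy = Decimal(x), Decimal(y)   # conversion from float is exact
        return float((dx * dx + dy * dy).sqrt())


if __name__ == "__main__":
    big = 1e200
    print(hypot_naive(big, big))  # x*x overflows to infinity before the square root
    print(hypot_wide(big, big))   # finite, because the wide intermediates do not overflow
```

The final result fits comfortably in a double in both cases; only the squared intermediates exceed the double-precision range, which is the situation the wider format is meant to absorb.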

Floating Point

In computing, floating-point arithmetic (FP) is arithmetic that represents real numbers approximately, using an integer with a fixed precision, called the significand, scaled by an integer exponent of a fixed base. For example, 12.345 can be represented as a base-ten floating-point number: $12.345 = \underbrace{12345}_{\text{significand}} \times {\underbrace{10}_{\text{base}}}^{\overbrace{-3}^{\text{exponent}}}$. In practice, most floating-point systems use base two, though base ten (decimal floating point) is also common. The term *floating point* refers to the fact that the number's radix point can "float" anywhere to the left, right, or between the significant digits of the number. This position is indicated by the exponent, so floating point can be considered a form of scientific notation. A floating-point system can be used to represent, with a fixed number of digits, numbers of very different orders of magnitude, such as the number of meters between galaxies or between protons in an atom. For this reason, floating-poi ...
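
The significand-and-exponent view can be checked directly. The sketch below, which assumes Python floats are IEEE 754 binary64 (true on mainstream platforms), reads the base-ten decomposition of 12.345 from `decimal.Decimal` and computes a base-two decomposition with `math.frexp`. The helper name `decompose_base2` is hypothetical.

```python
import math
from decimal import Decimal


def decompose_base2(x: float) -> tuple:
    """Return (significand, exponent) such that x == significand * 2**exponent."""
    mantissa, exp = math.frexp(x)        # x == mantissa * 2**exp, with 0.5 <= |mantissa| < 1
    significand = int(mantissa * 2**53)  # exact: a double has at most 53 significant bits
    return significand, exp - 53


if __name__ == "__main__":
    # Base ten: Decimal stores the digit string and the exponent explicitly.
    sign, digits, exponent = Decimal("12.345").as_tuple()
    print(digits, exponent)

    # Base two: the nearest double to 12.345, as integer significand times 2**exponent.
    sig, e = decompose_base2(12.345)
    print(sig, e, math.ldexp(sig, e) == 12.345)
```

The base-two significand is not 12345: the literal `12.345` has no finite binary expansion, so the double holds the nearest representable value.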

IEEE 754

The IEEE Standard for Floating-Point Arithmetic (IEEE 754) is a technical standard for floating-point arithmetic established in 1985 by the Institute of Electrical and Electronics Engineers (IEEE). The standard addressed many problems found in the diverse floating-point implementations that made them difficult to use reliably and portably. Many hardware floating-point units use the IEEE 754 standard. The standard defines:

* *arithmetic formats:* sets of binary and decimal floating-point data, which consist of finite numbers (including signed zeros and subnormal numbers), infinities, and special "not a number" values (NaNs)
* *interchange formats:* encodings (bit strings) that may be used to exchange floating-point data in an efficient and compact form
* *rounding rules:* properties to be satisfied when rounding numbers during arithmetic and conversions
* *operations:* arithmetic and other operations (such as trigonometric functions) on arithmetic formats
* *excepti ...
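
The special values named in the arithmetic formats (signed zeros, subnormal numbers, infinities, NaNs) can be observed from most languages. The following is a minimal sketch, assuming Python floats are binary64; the `classify` helper is a made-up name for illustration and is not part of any standard library.

```python
import math
import sys


def classify(x: float) -> str:
    """Name the IEEE 754 class of a binary64 value."""
    if math.isnan(x):
        return "nan"
    if math.isinf(x):
        return "infinity"
    if x == 0.0:
        return "zero"
    if abs(x) < sys.float_info.min:  # below the smallest normal number
        return "subnormal"
    return "normal"


if __name__ == "__main__":
    for value in (1.0, -0.0, 5e-324, math.inf, math.nan):
        print(repr(value), classify(value))

    # Signed zeros compare equal, but the sign bit is still there.
    print(-0.0 == 0.0, math.copysign(1.0, -0.0))

    # A NaN is unordered: it does not even compare equal to itself.
    print(math.nan == math.nan)
```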

Computing

Computing is any goal-oriented activity requiring, benefiting from, or creating computing machinery. It includes the study and experimentation of algorithmic processes, and development of both hardware and software. Computing has scientific, engineering, mathematical, technological and social aspects. Major computing disciplines include computer engineering, computer science, cybersecurity, data science, information systems, information technology and software engineering. The term "computing" is also synonymous with counting and calculating. In earlier times, it was used in reference to the action performed by mechanical computing machines, and before that, to human computers.

History. The history of computing is longer than the history of computing hardware and includes the history of methods intended for pen and paper (or for chalk and slate) with or without the aid of tables. Computing is intimately tied to the representation of numbers, though mathematical ...

Intel Fortran Compiler

Intel Fortran Compiler is a group of Fortran compilers from Intel for Windows, macOS, and Linux.

Overview. The compilers generate code for IA-32 and Intel 64 processors and certain non-Intel but compatible processors, such as certain AMD processors. A specific release of the compiler (11.1) remains available for development of Linux-based applications for IA-64 (Itanium 2) processors. On Windows, it is known as Intel Visual Fortran. On macOS and Linux, it is known as Intel Fortran. In 2020 the existing compiler was renamed "Intel Fortran Compiler Classic" (ifort) and a new Intel Fortran Compiler for oneAPI (ifx) supporting GPU offload was introduced. The 2021 release of the Classic compiler adds full Fortran support through the 2018 standard, full OpenMP 4.5, and initial OpenMP 5.1 for CPU only. The 2021 beta compiler focuses on OpenMP for GPU offload. When used with the Intel HPC toolkit (see the "Description of Packaging" below) the compiler can also automatically gen ...

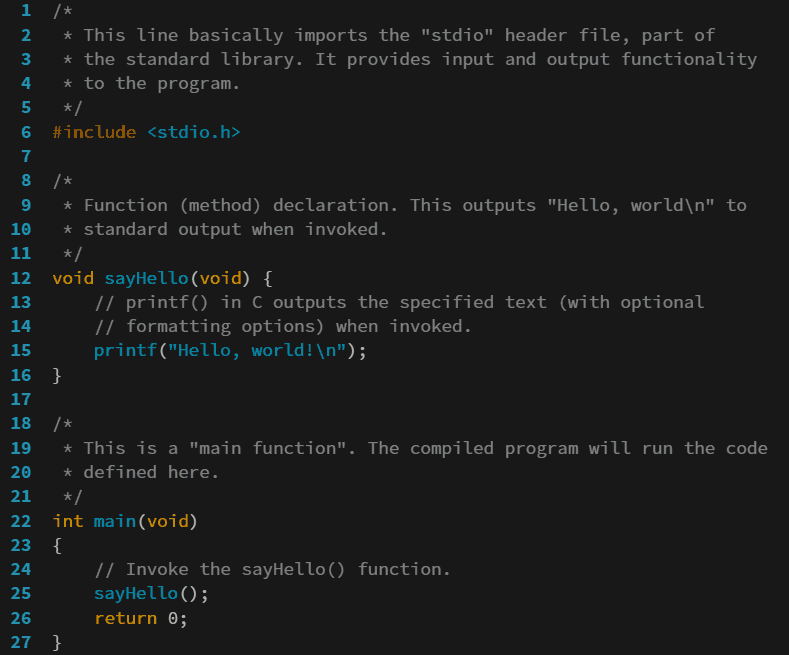

Programming Language

A programming language is a system of notation for writing computer programs. Most programming languages are text-based formal languages, but they may also be graphical. They are a kind of computer language. The description of a programming language is usually split into the two components of syntax (form) and semantics (meaning), which are usually defined by a formal language. Some languages are defined by a specification document (for example, the C programming language is specified by an ISO Standard) while other languages (such as Perl) have a dominant implementation that is treated as a reference. Some languages have both, with the basic language defined by a standard and extensions taken from the dominant implementation being common. Programming language theory is the subfield of computer science that studies the design, implementation, analysis, characterization, and classification of programming languages.

Definitions. There are many considerations when defining ...

Hardware Support

Hardware may refer to:

Technology

Computing and electronics

* Electronic hardware, interconnected electronic components which perform analog or logic operations
  * Digital electronics, electronics that operate on digital signals
    * Computer hardware, physical parts of a computer
    * Networking hardware, devices that enable use of a computer network
  * Electronic component, device in an electronic system used to affect electrons, usually industrial products

Other technologies

* Household hardware, equipment used for home repair and other work, such as fasteners, wire, plumbing supplies, electrical supplies, utensils, and machine parts
* Builders hardware, metal hardware for building fixtures, such as hinges and latches
* Hardware (development cooperation), in technology transfer
* Drum hardware, used to tension, position, and support the instruments
* Military technology, application of technology to warfare
* Music hardware, devices other than instruments to create music

Entert ...

Arbitrary-precision Arithmetic

In computer science, arbitrary-precision arithmetic, also called bignum arithmetic, multiple-precision arithmetic, or sometimes infinite-precision arithmetic, indicates that calculations are performed on numbers whose digits of precision are limited only by the available memory of the host system. This contrasts with the faster fixed-precision arithmetic found in most arithmetic logic unit (ALU) hardware, which typically offers between 8 and 64 bits of precision. Several modern programming languages have built-in support for bignums, and others have libraries available for arbitrary-precision integer and floating-point math. Rather than storing values as a fixed number of bits related to the size of the processor register, these implementations typically use variable-length arrays of digits. Arbitrary precision is used in applications where the speed of arithmetic is not a limiting factor, or where precise results with very large numbers are required. It should not b ...
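
Python is one language with built-in bignums: its `int` type grows as needed, and the standard `fractions` module provides exact rationals. The short sketch below contrasts that with fixed-precision binary64 arithmetic. The variable names are illustrative only.

```python
from fractions import Fraction

big = 2**100

# Arbitrary-precision integers: every digit of 2**100 + 1 is kept.
exact = (big + 1) - big

# Fixed precision: binary64 has a 53-bit significand, so adding 1 to 2**100 is lost.
rounded = (float(big) + 1.0) - float(big)

# Exact rational arithmetic: one third times three is exactly one.
third = Fraction(1, 3)

if __name__ == "__main__":
    print(exact, rounded, third * 3 == 1)
```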

Discrete & Computational Geometry

*Discrete & Computational Geometry* is a peer-reviewed mathematics journal published quarterly by Springer. Founded in 1986 by Jacob E. Goodman and Richard M. Pollack, the journal publishes articles on discrete geometry and computational geometry.

Abstracting and indexing. The journal is indexed in:

* *Mathematical Reviews*
* *Zentralblatt MATH*
* *Science Citation Index*
* *Current Contents*/Engineering, Computing and Technology

Notable articles. The articles by Gil Kalai, with a proof of a subexponential upper bound on the diameter of a polyhedron, and by Samuel Ferguson, on the Kepler conjecture, both published in *Discrete & Computational Geometry*, earned their authors the Fulkerson Prize.

Double-precision

Double-precision floating-point format (sometimes called FP64 or float64) is a floating-point number format, usually occupying 64 bits in computer memory; it represents a wide dynamic range of numeric values by using a floating radix point. Floating point is used to represent fractional values, or when a wider range is needed than is provided by fixed point (of the same bit width), even if at the cost of precision. Double precision may be chosen when the range or precision of single precision would be insufficient. In the IEEE 754-2008 standard, the 64-bit base-2 format is officially referred to as binary64; it was called double in IEEE 754-1985. IEEE 754 specifies additional floating-point formats, including 32-bit base-2 *single precision* and, more recently, base-10 representations. One of the first programming languages to provide single- and double-precision floating-point data types was Fortran. Before the widespread adoption of IEEE 754-1985, the representation and ...
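
To make the 64-bit layout concrete, the sketch below splits a value into the three binary64 fields. The field widths (1 sign bit, 11 exponent bits, 52 fraction bits) come from the IEEE 754 binary64 definition and are not stated in the summary above; the helper name `binary64_fields` is hypothetical.

```python
import struct


def binary64_fields(x: float) -> tuple:
    """Split a float into (sign, biased_exponent, fraction) per the binary64 layout."""
    # Reinterpret the 8 bytes of the double as one unsigned 64-bit integer.
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))
    sign = bits >> 63                      # top bit
    biased_exponent = (bits >> 52) & 0x7FF # next 11 bits, bias 1023
    fraction = bits & ((1 << 52) - 1)      # low 52 bits (implicit leading 1 not stored)
    return sign, biased_exponent, fraction


if __name__ == "__main__":
    for value in (1.0, -2.0, 1.5):
        print(value, binary64_fields(value))
```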

Unit In The Last Place

In computer science and numerical analysis, unit in the last place or unit of least precision (ulp) is the spacing between two consecutive floating-point numbers, i.e., the value the least significant digit (rightmost digit) represents if it is 1. It is used as a measure of accuracy in numeric calculations.

Definition. One definition is: in radix $b$ with precision $p$, if $b^e \le |x| < b^{e+1}$, then $\operatorname{ulp}(x) = b^{\max(e,\, e_{\min}) - p + 1}$, where $e_{\min}$ is the minimal exponent of the normal numbers.

Let $x$ be a positive floating-point number and let $\operatorname{RN}$ denote rounding to nearest, ties to even. If $\operatorname{ulp}(x) \le 1$, then $\operatorname{RN}(x + 1) > x$. Otherwise, $\operatorname{RN}(x + 1) = x$ or $\operatorname{RN}(x + 1) = x + \operatorname{ulp}(x)$, depending on the value of the least significant digit and the exponent of $x$. This is demonstrated in the following Haskell code typed at an interactive prompt: `> until (\x -> x == x+1) (+1) 0 :: Float` gives `1.6777216e7`, then `> it-1` gives `1.6777215e7`, and `> it+1` gives `1.6777216e7`. Here we start with 0 in single precision and repeatedly add 1 until the operation does not change the value. Since the significand for a single-precision number contains 24 bits, the first integer that is not exactly representable is $2^{24}+1$, and this value rounds t ...
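
The same saturation can be checked without looping. The sketch below is an illustration under two assumptions: Python floats are binary64, and `struct` packing with format `"f"` rounds to the nearest binary32 value. The helpers `to_float32` and `ulp_normal` are hypothetical names, and `ulp_normal` covers normal numbers only (it ignores the $e_{\min}$ clamp for subnormals).

```python
import math
import struct


def to_float32(x: float) -> float:
    """Round a binary64 value to the nearest binary32 value and return it as a float."""
    return struct.unpack("f", struct.pack("f", x))[0]


def ulp_normal(x: float) -> float:
    """ulp of a normal binary64 number: 2**(e - p + 1) with p = 53."""
    _, exp = math.frexp(x)            # frexp's exponent is e + 1 for 2**e <= |x| < 2**(e+1)
    return math.ldexp(1.0, exp - 53)  # 2**((exp - 1) - 53 + 1)


if __name__ == "__main__":
    # Single precision: 2**24 + 1 is not representable and rounds back to 2**24.
    print(to_float32(2.0**24 + 1.0) == 2.0**24)

    # Double precision: the same effect appears at 2**53, where the ulp exceeds 1.
    x = 2.0**53
    print(x + 1.0 == x, ulp_normal(x), ulp_normal(1.0))
```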
