
Autoencoders

An autoencoder is a type of artificial neural network used to learn efficient codings of unlabeled data (unsupervised learning). An autoencoder learns two functions: an encoding function that transforms the input data, and a decoding function that recreates the input data from the encoded representation. The autoencoder learns an efficient representation (encoding) for a set of data, typically for dimensionality reduction, to generate lower-dimensional embeddings for subsequent use by other machine learning algorithms. Variants exist which aim to make the learned representations assume useful properties. Examples are regularized autoencoders (sparse, denoising and contractive autoencoders), which are effective in learning representations for subsequent classification tasks, and variational autoencoders, which can be used as generative models. Autoencoders are applied to many problems, including facial recognition, feature detection, anomaly detection, and le ...
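
The encoder/decoder pair described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: it assumes a purely linear autoencoder (no activation function) that compresses 2-D points to a 1-D code, trained by full-batch gradient descent on squared reconstruction error. The function names, the toy data, the starting weights, and the learning rate are all assumptions made for the example.

```python
def encode(x, enc):
    """Encoder: map a 2-D input to a 1-D code."""
    return enc[0] * x[0] + enc[1] * x[1]

def decode(z, dec):
    """Decoder: map the 1-D code back to a 2-D reconstruction."""
    return [dec[0] * z, dec[1] * z]

def mean_squared_error(data, enc, dec):
    """Average squared reconstruction error over the data set."""
    total = 0.0
    for x in data:
        x_hat = decode(encode(x, enc), dec)
        total += (x_hat[0] - x[0]) ** 2 + (x_hat[1] - x[1]) ** 2
    return total / len(data)

def train(data, enc, dec, steps=500, lr=0.1):
    """Full-batch gradient descent on 0.5 * mean squared error."""
    enc, dec = list(enc), list(dec)
    n = len(data)
    for _ in range(steps):
        g_enc, g_dec = [0.0, 0.0], [0.0, 0.0]
        for x in data:
            z = encode(x, enc)
            x_hat = decode(z, dec)
            err = [x_hat[0] - x[0], x_hat[1] - x[1]]
            # Error signal passed back through the decoder to the code z.
            back = err[0] * dec[0] + err[1] * dec[1]
            for i in range(2):
                g_dec[i] += err[i] * z / n
                g_enc[i] += back * x[i] / n
        for i in range(2):
            enc[i] -= lr * g_enc[i]
            dec[i] -= lr * g_dec[i]
    return enc, dec

# Toy data: points on the line y = 2x, so one number per point suffices.
data = [[t, 2.0 * t] for t in (-1.0, -0.5, 0.5, 1.0)]
initial_error = mean_squared_error(data, [0.1, 0.1], [0.1, 0.1])
enc, dec = train(data, [0.1, 0.1], [0.1, 0.1])
final_error = mean_squared_error(data, enc, dec)
```

Because the toy data lies on a line, a 1-D code can represent it without loss, which is why the reconstruction error can be driven close to zero here. Real data rarely has this property, and practical autoencoders use nonlinear layers.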

Variational Autoencoder

In machine learning, a variational autoencoder (VAE) is an artificial neural network architecture introduced by Diederik P. Kingma and Max Welling. It is part of the families of probabilistic graphical models and variational Bayesian methods. In addition to being seen as an autoencoder neural network architecture, variational autoencoders can also be studied within the mathematical formulation of variational Bayesian methods, connecting a neural encoder network to its decoder through a probabilistic latent space (for example, as a multivariate Gaussian distribution) that corresponds to the parameters of a variational distribution. Thus, the encoder maps each point (such as an image) from a large complex dataset into a distribution within the latent space, rather than to a single point in that space. The decoder has the opposite function, which is to map from the latent space to the input space, again according to a distribution (although in practice, noise is rarely a ...
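
The idea that the encoder outputs a distribution rather than a single point can be illustrated as follows. This sketch assumes a diagonal Gaussian over a 2-D latent space; `encode_to_distribution` is a hypothetical stand-in for a trained encoder network, and its formula for the mean is invented purely for the example.

```python
import math
import random

def encode_to_distribution(x):
    """Hypothetical stand-in for a trained encoder network.

    Returns the mean and log-variance of a diagonal Gaussian over a
    2-D latent space. A real encoder would compute these with a network.
    """
    mean = [0.5 * x[0], -0.5 * x[1]]
    log_var = [0.0, 0.0]  # unit variance in each latent dimension
    return mean, log_var

def sample_latent(mean, log_var, rng):
    """Draw z = mean + std * eps, with eps ~ N(0, 1) per dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mean, log_var)]

rng = random.Random(0)
mean, log_var = encode_to_distribution([2.0, 4.0])

# The same input yields a different latent point on every draw ...
samples = [sample_latent(mean, log_var, rng) for _ in range(5000)]

# ... but the draws scatter around the mean the encoder produced.
average = [sum(s[i] for s in samples) / len(samples) for i in range(2)]
```

Writing the sample as `mean + std * eps` keeps the randomness in `eps`, which is the form usually used when the mean and variance must be trained by gradient descent.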

Unsupervised Learning

Unsupervised learning is a framework in machine learning where, in contrast to supervised learning, algorithms learn patterns exclusively from unlabeled data. Other frameworks in the spectrum of supervisions include weak- or semi-supervision, where a small portion of the data is tagged, and self-supervision. Some researchers consider self-supervised learning a form of unsupervised learning. Conceptually, unsupervised learning divides into the aspects of data, training, algorithm, and downstream applications. Typically, the dataset is harvested cheaply "in the wild", such as a massive text corpus obtained by web crawling with only minor filtering (such as Common Crawl). This compares favorably to supervised learning, where the dataset (such as ImageNet1000) is typically constructed manually, which is much more expensive. There were algorithms designed specifically for unsupervised learning, such as clustering algorithms like k-means, dimensionality reduction techniques l ...
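
As one concrete instance of learning from unlabeled data, k-means clustering (mentioned above) can be sketched for one-dimensional points. The sketch makes simplifying assumptions that are not from the source: the first `k` points serve as the initial centers, and the number of iterations is fixed rather than checked for convergence.

```python
def k_means_1d(points, k, iterations=10):
    """Cluster 1-D points by alternating assignment and averaging."""
    centers = list(points[:k])  # assumption: first k points as initial centers
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        # Assignment step: each point joins its nearest center.
        for p in points:
            nearest = min(range(k), key=lambda i: abs(p - centers[i]))
            clusters[nearest].append(p)
        # Update step: each center moves to the mean of its cluster.
        # An empty cluster keeps its previous center.
        centers = [sum(c) / len(c) if c else centers[i]
                   for i, c in enumerate(clusters)]
    return sorted(centers)

# No labels are given; the two groups are found from the values alone.
centers = k_means_1d([1.0, 1.2, 0.8, 9.0, 9.2, 8.8], k=2)
```

On this toy input the centers settle near 1 and 9, the means of the two visible groups.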

Activation Function

The activation function of a node in an artificial neural network is a function that calculates the output of the node based on its individual inputs and their weights. Nontrivial problems can be solved using only a few nodes if the activation function is nonlinear. Modern activation functions include the logistic (sigmoid) function used in the 2012 speech recognition model developed by Hinton et al.; the ReLU used in the 2012 AlexNet computer vision model and in the 2015 ResNet model; and the smooth version of the ReLU, the GELU, which was used in the 2018 BERT model. Comparison of activation functions: aside from their empirical performance, activation functions also have different mathematical properties. Nonlinear: when the activation function is non-linear, then a two-layer neural network can be proven to be a universal function approximator. This is known as the Universal Approximation Theorem. The identity activation function does not satisfy this property. W ...
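
The three activation functions named above have short closed forms. The sketch below uses their standard definitions; the GELU is written in its exact form using the Gaussian error function, although implementations often use an approximation instead.

```python
import math

def sigmoid(x):
    """Logistic function: squashes any real input into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def relu(x):
    """Rectified linear unit: zero for negative inputs, identity otherwise."""
    return max(0.0, x)

def gelu(x):
    """Gaussian error linear unit: x times the standard normal CDF at x."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

# Evaluate all three at a few sample inputs.
table = [(x, sigmoid(x), relu(x), gelu(x)) for x in (-2.0, 0.0, 2.0)]
```

Unlike the ReLU, the GELU is smooth at zero and takes small negative values for negative inputs.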

Anomaly Detection

In data analysis, anomaly detection (also referred to as outlier detection and sometimes as novelty detection) is generally understood to be the identification of rare items, events or observations which deviate significantly from the majority of the data and do not conform to a well-defined notion of normal behavior. Such examples may arouse suspicions of being generated by a different mechanism, or appear inconsistent with the remainder of that set of data. Anomaly detection finds application in many domains including cybersecurity, medicine, machine vision, statistics, neuroscience, law enforcement and financial fraud, to name only a few. Anomalies were initially sought out so that they could be clearly rejected or omitted from the data to aid statistical analysis, for example to compute the mean or standard deviation. They were also removed to improve predictions from models such as linear regression, and more recently their removal aids the performance of machine learning algorithms. However, in ...
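
One simple way to flag observations that "deviate significantly from the majority of the data" is a z-score rule. The sketch below is only one of many possible detectors; the threshold of 2 standard deviations and the sample readings are assumptions chosen for illustration.

```python
import statistics

def z_score_outliers(values, threshold=2.0):
    """Return the values lying more than `threshold` standard
    deviations away from the mean of the data."""
    mean = statistics.mean(values)
    std = statistics.pstdev(values)  # population standard deviation
    if std == 0:
        return []  # all values identical: nothing deviates
    return [v for v in values if abs(v - mean) / std > threshold]

readings = [10, 11, 9, 10, 12, 10, 11, 50]
outliers = z_score_outliers(readings)
```

Note that the anomaly itself inflates both the mean and the standard deviation, which is one reason robust statistics such as the median are often preferred in practice.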

Generative Model

In statistical classification, two main approaches are called the generative approach and the discriminative approach. These compute classifiers by different approaches, differing in the degree of statistical modelling. Terminology is inconsistent, but three major types can be distinguished: (1) A generative model is a statistical model of the joint probability distribution P(X, Y) on a given observable variable X and target variable Y: "Generative classifiers learn a model of the joint probability, p(x, y), of the inputs x and the label y, and make their predictions by using Bayes rules to calculate p(y | x), and then picking the most likely label y." A generative model can be used to "generate" random instances (outcomes) of an observation x. (2) A discriminative model is a model of the conditional probability P(Y | X = x) of the target Y, given an observation x. It can be used to "discriminate" the value of the target variable Y, given an ...
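
The generative recipe quoted above (model the joint probability, apply Bayes' rule, pick the most likely label) can be traced on a small table. The joint distribution below is invented for the example: the observation x is a message length ("short" or "long") and the label y is "spam" or "ham".

```python
import random

# Assumed joint distribution P(X, Y); the four entries sum to 1.
joint = {
    ("short", "spam"): 0.3,
    ("short", "ham"): 0.2,
    ("long", "spam"): 0.1,
    ("long", "ham"): 0.4,
}

def posterior(x):
    """Bayes' rule: p(y | x) = p(x, y) / p(x)."""
    p_x = sum(p for (xi, _), p in joint.items() if xi == x)
    return {y: p / p_x for (xi, y), p in joint.items() if xi == x}

def predict(x):
    """Pick the most likely label y for the observation x."""
    post = posterior(x)
    return max(post, key=post.get)

def generate(rng):
    """Sample a random (x, y) instance from the joint distribution."""
    pairs = list(joint)
    weights = [joint[pair] for pair in pairs]
    return rng.choices(pairs, weights=weights, k=1)[0]

label_for_short = predict("short")
sample = generate(random.Random(0))
```

A discriminative model would store only the output of `posterior` and could not implement `generate`, since it has no model of how x itself is distributed.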

Dirac Measure

In mathematics, a Dirac measure assigns a size to a set based solely on whether it contains a fixed element $x$ or not. It is one way of formalizing the idea of the Dirac delta function, an important tool in physics and other technical fields. Definition: A Dirac measure is a measure $\delta_x$ on a set $X$ (with any $\sigma$-algebra of subsets of $X$) defined for a given $x \in X$ and any (measurable) set $A \subseteq X$ by

$$\delta_x(A) = 1_A(x) = \begin{cases} 0, & x \notin A; \\ 1, & x \in A, \end{cases}$$

where $1_A$ is the indicator function of $A$. The Dirac measure is a probability measure, and in terms of probability it represents the almost sure outcome $x$ in the sample space $X$. We can also say that the measure is a single atom at $x$; however, treating the Dirac measure as an atomic measure is not correct when we consider the sequential definition of Dirac delta, as the limit of a delta sequence. The Dirac measures are the extreme points of the convex set of probability measures on $X$. The name is a back-formation from the Dirac delta fun ...
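
On a finite set the definition can be checked directly. The sketch below represents measurable sets as Python sets; the function name `dirac_measure` and the example space are assumptions made for illustration.

```python
def dirac_measure(x):
    """Return the Dirac measure concentrated at the point x."""
    def delta(subset):
        # Indicator of the subset evaluated at x: 1 if x is in it, else 0.
        return 1 if x in subset else 0
    return delta

space = {1, 2, 3, 4}
delta_3 = dirac_measure(3)

total_mass = delta_3(space)   # the whole space contains 3
with_point = delta_3({2, 3})  # this set contains 3
without = delta_3({1, 4})     # this set does not contain 3
```

The total mass of 1 on the whole space is what makes the Dirac measure a probability measure, and the values on disjoint sets add up as a measure requires.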

Norm (mathematics)

In mathematics, a norm is a function from a real or complex vector space to the non-negative real numbers that behaves in certain ways like the distance from the origin: it commutes with scaling, obeys a form of the triangle inequality, and is zero only at the origin. In particular, the Euclidean distance in a Euclidean space is defined by a norm on the associated Euclidean vector space, called the Euclidean norm, the 2-norm, or, sometimes, the magnitude or length of the vector. This norm can be defined as the square root of the inner product of a vector with itself. A seminorm satisfies the first two properties of a norm but may be zero for vectors other than the origin. A vector space with a specified norm is called a normed vector space. In a similar manner, a vector space with a seminorm is called a seminormed vector space. The term pseudonorm has been used for several related meaning ...
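
The Euclidean norm and the three properties listed above can be checked numerically on small vectors. This is a sketch for real vectors stored as Python lists; the helper names and the sample vectors are chosen for the example.

```python
import math

def inner(u, v):
    """Standard inner product of two real vectors."""
    return sum(a * b for a, b in zip(u, v))

def euclidean_norm(v):
    """Square root of the inner product of a vector with itself."""
    return math.sqrt(inner(v, v))

u = [3.0, 4.0]
w = [1.0, -2.0]

length = euclidean_norm(u)
# Scaling: multiplying the vector by -2 multiplies the norm by |-2|.
scaled = euclidean_norm([-2.0 * a for a in u])
# Triangle inequality: the norm of a sum is at most the sum of the norms.
sum_norm = euclidean_norm([a + b for a, b in zip(u, w)])
bound = euclidean_norm(u) + euclidean_norm(w)
```

The vector (3, 4) is a convenient test case because its length is exactly 5.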

Least Squares

The method of least squares is a mathematical optimization technique that aims to determine the best fit function by minimizing the sum of the squares of the differences between the observed values and the predicted values of the model. The method is widely used in areas such as regression analysis, curve fitting and data modeling. The least squares method can be categorized into linear and nonlinear forms, depending on the relationship between the model parameters and the observed data. The method was first proposed by Adrien-Marie Legendre in 1805 and further developed by Carl Friedrich Gauss. History (founding): The method of least squares grew out of the fields of astronomy and geodesy, as scientists and mathematicians sought to provide solutions to the challenges of navigating the Earth's oceans during the Age of Discovery. The accurate description of the behavior of celestial bodies was the key to enabling ships to sail in open seas, where sailors could no longer rely on la ...
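
For the simplest linear case, fitting a straight line y = slope * x + intercept, the minimizer of the sum of squared differences has a closed form. The sketch below uses that standard formula; the data points are invented and lie exactly on a line so that the result is easy to check by hand.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance of x and y divided by variance of x.
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    slope = s_xy / s_xx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def sum_of_squares(xs, ys, slope, intercept):
    """The quantity the method minimizes: sum of squared residuals."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
slope, intercept = fit_line(xs, ys)
residual = sum_of_squares(xs, ys, slope, intercept)
```

Here the fit recovers a slope of 2 and an intercept of 1 with zero residual. With noisy data the residual would be positive but still the smallest achievable by any straight line.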

Data Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding is done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction, or line coding, the means for mapping data onto a sig ...
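
The difference between lossless and lossy compression can be seen in a short sketch. The lossless half uses Python's standard `zlib` module; the lossy half is a hypothetical `quantize` helper that rounds values to a coarse grid, chosen here only as the simplest example of discarding less important information.

```python
import zlib

# Lossless: highly redundant input shrinks and is restored exactly.
original = b"abcabcabc" * 200
compressed = zlib.compress(original)    # encoder
restored = zlib.decompress(compressed)  # decoder

def quantize(samples, step):
    """Lossy: snap each sample to the nearest multiple of `step`.
    Fewer distinct values remain, but the originals cannot be recovered."""
    return [round(s / step) * step for s in samples]

coarse = quantize([0.12, 0.49, 0.51], 0.5)
```

After quantization the inputs 0.49 and 0.51 both become 0.5, so no decoder can tell them apart again, whereas `restored` is byte-for-byte identical to `original`.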

Feature Learning

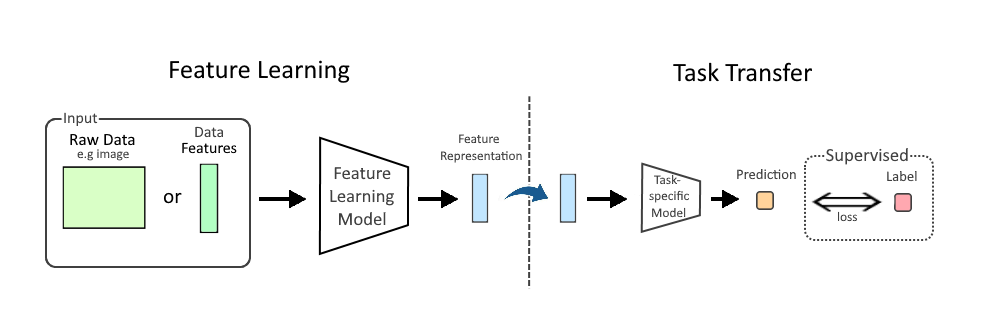

In machine learning (ML), feature learning or representation learning is a set of techniques that allow a system to automatically discover the representations needed for feature detection or classification from raw data. This replaces manual feature engineering and allows a machine to both learn the features and use them to perform a specific task. Feature learning is motivated by the fact that ML tasks such as classification often require input that is mathematically and computationally convenient to process. However, real-world data, such as image, video, and sensor data, have not yielded to attempts to algorithmically define specific features. An alternative is to discover such features or representations through examination, without relying on explicit algorithms. Feature learning can be supervised, unsupervised, or self-supervised. In supervised feature learning, features are learned using labeled input data. Labeled data includes input-label pairs where the inp ...