|

Zero-overhead Looping

In computer architecture, Zero-overhead looping is a hardware feature found in some processors that enables loops to execute without the performance cost of traditional loop control instructions. Instead of software managing loop iterations, the processor's hardware handles repetition automatically, saving clock cycles and improving efficiency. This technique is commonly employed in digital signal processors (DSPs) and certain complex instruction set computer (CISC) architectures. Background In many instruction sets, a loop must be implemented by using instructions to increment or decrement a counter, check whether the end of the loop has been reached, and if not jump to the beginning of the loop so it can be repeated. Although this typically only represents around 3–16 bytes of space for each loop, even that small amount could be significant depending on the size of the CPU caches. More significant is that those instructions each take time to execute, time which is not spent ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Processor (computing)

In computing and computer science, a processor or processing unit is an electrical component (digital circuit) that performs operations on an external data source, usually memory or some other data stream. It typically takes the form of a microprocessor, which can be implemented on a single or a few tightly integrated metal–oxide–semiconductor integrated circuit chips. In the past, processors were constructed using multiple individual vacuum tubes, multiple individual transistors, or multiple integrated circuits. The term is frequently used to refer to the central processing unit (CPU), the main processor in a system. However, it can also refer to other coprocessors, such as a graphics processing unit (GPU). Traditional processors are typically based on silicon; however, researchers have developed experimental processors based on alternative materials such as carbon nanotubes, graphene, diamond, and alloys made of elements from groups three and five of the periodic tabl ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Loop (computing)

In computer science, control flow (or flow of control) is the order in which individual statements, instructions or function calls of an imperative program are executed or evaluated. The emphasis on explicit control flow distinguishes an ''imperative programming'' language from a ''declarative programming'' language. Within an imperative programming language, a ''control flow statement'' is a statement that results in a choice being made as to which of two or more paths to follow. For non-strict functional languages, functions and language constructs exist to achieve the same result, but they are usually not termed control flow statements. A set of statements is in turn generally structured as a block, which in addition to grouping, also defines a lexical scope. Interrupts and signals are low-level mechanisms that can alter the flow of control in a way similar to a subroutine, but usually occur as a response to some external stimulus or event (that can occur asynchronous ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Instruction Sets

In computer science, an instruction set architecture (ISA) is an abstract model that generally defines how software controls the CPU in a computer or a family of computers. A device or program that executes instructions described by that ISA, such as a central processing unit (CPU), is called an ''implementation'' of that ISA. In general, an ISA defines the supported instructions, data types, registers, the hardware support for managing main memory, fundamental features (such as the memory consistency, addressing modes, virtual memory), and the input/output model of implementations of the ISA. An ISA specifies the behavior of machine code running on implementations of that ISA in a fashion that does not depend on the characteristics of that implementation, providing binary compatibility between implementations. This enables multiple implementations of an ISA that differ in characteristics such as performance, physical size, and monetary cost (among other things), but that ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

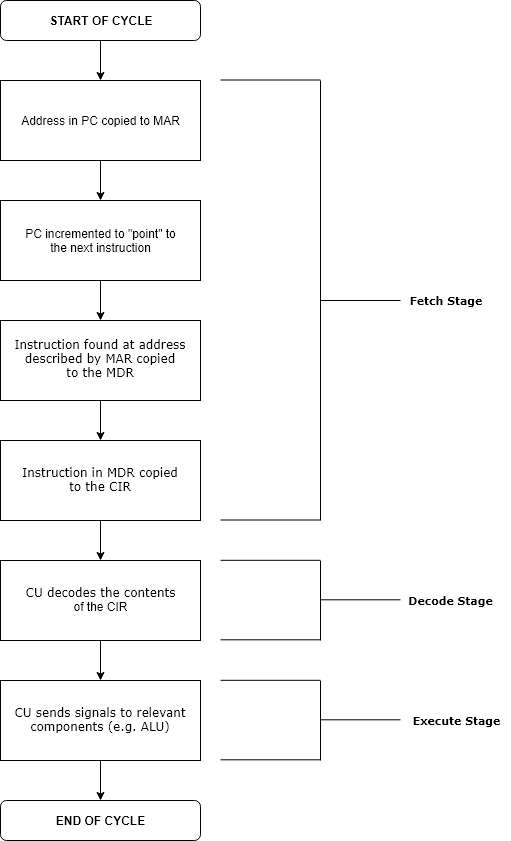

Instruction Cycle

The instruction cycle (also known as the fetch–decode–execute cycle, or simply the fetch–execute cycle) is the cycle that the central processing unit (CPU) follows from boot-up until the computer has shut down in order to process instructions. It is composed of three main stages: the fetch stage, the decode stage, and the execute stage. In simpler CPUs, the instruction cycle is executed sequentially, each instruction being processed before the next one is started. In most modern CPUs, the instruction cycles are instead executed concurrently, and often in parallel, through an instruction pipeline: the next instruction starts being processed before the previous instruction has finished, which is possible because the cycle is broken up into separate steps. Role of components Program counter The program counter (PC) is a register that holds the memory address of the next instruction to be executed. After each instruction copy to the memory address register (MAR), the PC can ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Digital Signal Processor

A digital signal processor (DSP) is a specialized microprocessor chip, with its architecture optimized for the operational needs of digital signal processing. DSPs are fabricated on metal–oxide–semiconductor (MOS) integrated circuit chips. They are widely used in audio signal processing, telecommunications, digital image processing, radar, sonar and speech recognition systems, and in common consumer electronic devices such as mobile phones, disk drives and high-definition television (HDTV) products. The goal of a DSP is usually to measure, filter or compress continuous real-world analog signals. Most general-purpose microprocessors can also execute digital signal processing algorithms successfully, but may not be able to keep up with such processing continuously in real-time. Also, dedicated DSPs usually have better power efficiency, thus they are more suitable in portable devices such as mobile phones because of power consumption constraints. DSPs often use special m ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Complex Instruction Set Computer

A complex instruction set computer (CISC ) is a computer architecture in which single instructions can execute several low-level operations (such as a load from memory, an arithmetic operation, and a memory store) or are capable of multi-step operations or addressing modes within single instructions. The term was retroactively coined in contrast to reduced instruction set computer (RISC) and has therefore become something of an umbrella term for everything that is not RISC, where the typical differentiating characteristic is that most RISC designs use uniform instruction length for almost all instructions, and employ strictly separate load and store instructions. Examples of CISC architectures include complex mainframe computers to simplistic microcontrollers where memory load and store operations are not separated from arithmetic instructions. Specific instruction set architectures that have been retroactively labeled CISC are System/360 through z/Architecture, the PDP-1 ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

CPU Cache

A CPU cache is a hardware cache used by the central processing unit (CPU) of a computer to reduce the average cost (time or energy) to access data from the main memory. A cache is a smaller, faster memory, located closer to a processor core, which stores copies of the data from frequently used main memory locations. Most CPUs have a hierarchy of multiple cache levels (L1, L2, often L3, and rarely even L4), with different instruction-specific and data-specific caches at level 1. The cache memory is typically implemented with static random-access memory (SRAM), in modern CPUs by far the largest part of them by chip area, but SRAM is not always used for all levels (of I- or D-cache), or even any level, sometimes some latter or all levels are implemented with eDRAM. Other types of caches exist (that are not counted towards the "cache size" of the most important caches mentioned above), such as the translation lookaside buffer (TLB) which is part of the memory management unit (M ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Loop Unrolling

Loop unrolling, also known as loop unwinding, is a loop transformation technique that attempts to optimize a program's execution speed at the expense of its binary size, which is an approach known as space–time tradeoff. The transformation can be undertaken manually by the programmer or by an optimizing compiler. On modern processors, loop unrolling is often counterproductive, as the increased code size can cause more cache misses; ''cf.'' Duff's device. The goal of loop unwinding is to increase a program's speed by reducing or eliminating instructions that control the loop, such as pointer arithmetic and "end of loop" tests on each iteration; reducing branch penalties; as well as hiding latencies, including the delay in reading data from memory. To eliminate this computational overhead, loops can be re-written as a repeated sequence of similar independent statements. Loop unrolling is also part of certain formal verification techniques, in particular bounded model chec ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cache Miss

In computing, a cache ( ) is a hardware or software component that stores data so that future requests for that data can be served faster; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere. A cache hit occurs when the requested data can be found in a cache, while a cache miss occurs when it cannot. Cache hits are served by reading data from the cache, which is faster than recomputing a result or reading from a slower data store; thus, the more requests that can be served from the cache, the faster the system performs. To be cost-effective, caches must be relatively small. Nevertheless, caches are effective in many areas of computing because typical computer applications access data with a high degree of locality of reference. Such access patterns exhibit temporal locality, where data is requested that has been recently requested, and spatial locality, where data is requested that is stored near data that has already be ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Compile Time

In computer science, compile time (or compile-time) describes the time window during which a language's statements are converted into binary instructions for the processor to execute. The term is used as an adjective to describe concepts related to the context of program compilation, as opposed to concepts related to the context of program execution ( run time). For example, ''compile-time requirements'' are programming language requirements that must be met by source code before compilation and ''compile-time properties'' are properties of the program that can be reasoned about during compilation. The actual length of time it takes to compile a program is usually referred to as ''compilation time''. Overview Most compilers have at least the following compiler phases (which therefore occur at compile-time): syntax analysis, semantic analysis, and code generation. During optimization phases, constant expressions in the source code can also be evaluated at compile-time usin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Null-terminated String

In computer programming, a null-terminated string is a character string stored as an array containing the characters and terminated with a ''null character'' (a character with an internal value of zero, called "NUL" in this article, not same as the glyph zero). Alternative names are '' C string'', which refers to the C programming language and ASCIIZ (although C can use encodings other than ASCII). The length of a string is found by searching for the (first) NUL. This can be slow as it takes O(''n'') (linear time) with respect to the string length. It also means that a string cannot contain a NUL (there is a NUL in memory, but it is after the last character, not the string). History Null-terminated strings were produced by the .ASCIZ directive of the PDP-11 assembly languages and the ASCIZ directive of the MACRO-10 macro assembly language for the PDP-10. These predate the development of the C programming language, but other forms of strings were often used. At the time C ( ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |