Yellowstone (supercomputer)

Yellowstone was the inaugural supercomputer at the NCAR-Wyoming Supercomputing Center (NWSC) in Cheyenne, Wyoming ("Yellowstone", NCAR Computational and Information Systems Laboratory (CISL) website: Resources, retrieved 2012-06-12). It was installed, tested, and readied for production in the summer of 2012. The Yellowstone supercomputing cluster was decommissioned on December 31, 2017, and replaced by its successor ...

iDataPlex

System x is a line of x86 servers produced by IBM – and later by Lenovo – as a sub-brand of IBM's System brand, alongside IBM Power Systems, IBM System z and IBM System Storage. In addition, IBM System x was the main component of the IBM System Cluster 1350 solution. In January 2014, IBM announced the sale of its x86 server business to Lenovo for $2.3 billion; the sale was completed on October 1, 2014. History: Starting out with the PS/2 Server, then the IBM PC Server, rebranded Netfinity, then eServer xSeries and finally System x, these servers are distinguished by being based on off-the-shelf x86 CPUs; IBM positioned them as its "low end" or "entry" offering compared to its POWER and mainframe products. Earlier IBM servers based on AMD Opteron CPUs did not share the xSeries brand; instead they fell directly under the eServer umbrella. Later AMD Opteron-based servers, however, did fall under the System x brand. Predecessors: IBM PS/2 Server ...

InfiniBand

InfiniBand (IB) is a computer networking communications standard used in high-performance computing that features very high throughput and very low latency. It is used for data interconnect both among and within computers. InfiniBand is also used as either a direct or switched interconnect between servers and storage systems, as well as an interconnect between storage systems. It is designed to be scalable and uses a switched fabric network topology. By 2014, it was the most commonly used interconnect in the TOP500 list of supercomputers, and it remained so until about 2016. Mellanox (acquired by Nvidia) manufactures InfiniBand host bus adapters and network switches, which are used by large computer system and database vendors in their product lines. As a computer cluster interconnect, IB competes with Ethernet, Fibre Channel, and Intel Omni-Path. The technology is promoted by the InfiniBand Trade Association. History: InfiniBand originated in 1999 from the merger of two competing designs: F ...

Supercomputer Operating Systems

A supercomputer operating system is an operating system intended for supercomputers. Since the end of the 20th century, supercomputer operating systems have undergone major transformations, as fundamental changes have occurred in supercomputer architecture. While early operating systems were custom tailored to each supercomputer to gain speed, the trend has been moving away from in-house operating systems and toward some form of Linux, which ran all the supercomputers on the TOP500 list in November 2017. In 2021, the top 10 computers ran, for instance, Red Hat Enterprise Linux (RHEL), some variant of it, or another Linux distribution such as Ubuntu. Given that modern massively parallel supercomputers typically separate computations from other services by using multiple types of nodes, they usually run different operating systems on different nodes, e.g., using a small and efficient lightweight kernel such as Compute Node Kernel (CNK) or Compute Node Linux (CNL) on compute nodes, bu ...

Supercomputer Architecture

Approaches to supercomputer architecture have taken dramatic turns since the earliest systems were introduced in the 1960s. Early supercomputer architectures pioneered by Seymour Cray relied on compact innovative designs and local parallelism to achieve superior computational peak performance. However, in time the demand for increased computational power ushered in the age of massively parallel systems. While the supercomputers of the 1970s used only a few processors, in the 1990s machines with thousands of processors began to appear, and by the end of the 20th century massively parallel supercomputers with tens of thousands of "off-the-shelf" processors were the norm. Supercomputers of the 21st century can use over 100,000 processors (some being graphics processing units) linked by fast interconnects. Throughout the decades, the management of heat density has remained a key issue for most centralized supercomputers. The large amount of heat generated by a system may also have other effec ...

Extreme Science And Engineering Discovery Environment

TeraGrid was an e-Science grid computing infrastructure combining resources at eleven partner sites. The project started in 2001 and operated from 2004 through 2011. The TeraGrid integrated high-performance computers, data resources and tools, and experimental facilities. Resources included more than a petaflops of computing capability and more than 30 petabytes of online and archival data storage, with rapid access and retrieval over high-performance computer network connections. Researchers could also access more than 100 discipline-specific databases. TeraGrid was coordinated through the Grid Infrastructure Group (GIG) at the University of Chicago, working in partnership with the resource provider sites in the United States. History: The US National Science Foundation (NSF) issued a solicitation asking for a "distributed terascale facility" from program director Richard L. Hilderbrandt. The TeraGrid project was launched in August 2001 with $53 million in funding to four sites: ...

Computer Network

A computer network is a set of computers sharing resources located on or provided by network nodes. The computers use common communication protocols over digital interconnections to communicate with each other. These interconnections are made up of telecommunication network technologies, based on physically wired, optical, and wireless radio-frequency methods that may be arranged in a variety of network topologies. The nodes of a computer network can include personal computers, servers, networking hardware, or other specialised or general-purpose hosts. They are identified by network addresses, and may have hostnames. Hostnames serve as memorable labels for the nodes, rarely changed after initial assignment. Network addresses serve for locating and identifying the nodes by communication protocols such as the Internet Protocol. Computer networks may be classified by many criteria, including the transmission medium used to carry signals, bandwidth, communications pro ...

Archive

An archive is an accumulation of historical records or materials – in any medium – or the physical facility in which they are located. Archives contain primary source documents that have accumulated over the course of an individual or organization's lifetime, and are kept to show the function of that person or organization. Professional archivists and historians generally understand archives to be records that have been naturally and necessarily generated as a product of regular legal, commercial, administrative, or social activities. They have been metaphorically defined as "the secretions of an organism", and are distinguished from documents that have been consciously written or created to communicate a particular message to posterity. In general, archives consist of records that have been selected for permanent or long-term preservation on grounds of their enduring cultural, historical, or evidentiary value. Archival records are normally unpublished and almost alway ...

Data Analysis

Data analysis is a process of inspecting, cleansing, transforming, and modeling data with the goal of discovering useful information, informing conclusions, and supporting decision-making. Data analysis has multiple facets and approaches, encompassing diverse techniques under a variety of names, and is used in different business, science, and social science domains. In today's business world, data analysis plays a role in making decisions more scientific and helping businesses operate more effectively. Data mining is a particular data analysis technique that focuses on statistical modeling and knowledge discovery for predictive rather than purely descriptive purposes, while business intelligence covers data analysis that relies heavily on aggregation, focusing mainly on business information. In statistical applications, data analysis can be divided into descriptive statistics, exploratory data analysis (EDA), and confirmatory data analysis (CDA). EDA focuses on discovering ne ...

Petabyte

The byte is a unit of digital information that most commonly consists of eight bits. Historically, the byte was the number of bits used to encode a single character of text in a computer, and for this reason it is the smallest addressable unit of memory in many computer architectures. To disambiguate arbitrarily sized bytes from the common 8-bit definition, network protocol documents such as the Internet Protocol refer to an 8-bit byte as an octet. The bits in an octet are usually numbered from 0 to 7 or from 7 to 0, depending on the bit endianness. The first bit is number 0, making the eighth bit number 7. The size of the byte has historically been hardware-dependent, and no definitive standards existed that mandated the size. Sizes from 1 to 48 bits have been used. The six-bit character code was an often-used implementation in early encoding systems, and computers using six-bit and nine-bit bytes were common in the 1960s. These systems often had memory words o ...
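The bit numbering described above can be illustrated with a minimal sketch. The helper name `bit_at` and its `lsb0` flag are hypothetical, introduced here only for illustration; the sketch assumes the two common conventions, in which bit 0 is either the least significant bit (LSB 0) or the most significant bit (MSB 0).

```python
def bit_at(octet: int, index: int, lsb0: bool = True) -> int:
    """Return the bit at position `index` (0..7) of an 8-bit value.

    lsb0=True  : bit 0 is the least significant bit.
    lsb0=False : bit 0 is the most significant bit.
    (Hypothetical helper, for illustration only.)
    """
    if not 0 <= octet <= 0xFF:
        raise ValueError("octet must be in the range 0..255")
    if not 0 <= index <= 7:
        raise ValueError("index must be in the range 0..7")
    # Under MSB 0 numbering, position 0 maps to a shift of 7.
    shift = index if lsb0 else 7 - index
    return (octet >> shift) & 1


value = 0b1000_0010  # decimal 130

# The same octet read under both numbering conventions.
bits_lsb0 = [bit_at(value, i) for i in range(8)]
bits_msb0 = [bit_at(value, i, lsb0=False) for i in range(8)]

print(bits_lsb0)  # [0, 1, 0, 0, 0, 0, 0, 1]
print(bits_msb0)  # [1, 0, 0, 0, 0, 0, 1, 0]
```

Either way the positions run from 0 to 7, so the eighth bit is always number 7; only the direction of counting differs.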

Scientific Visualization

Scientific visualization (also spelled scientific visualisation) is an interdisciplinary branch of science concerned with the visualization of scientific phenomena (Michael Friendly, 2008, "Milestones in the history of thematic cartography, statistical graphics, and data visualization"). It is also considered a subset of computer graphics, a branch of computer science. The purpose of scientific visualization is to graphically illustrate scientific data to enable scientists to understand, illustrate, and glean insight from their data. Research into how people read and misread various types of visualizations is helping to determine what types and features of visualizations are most understandable and effective in conveying information. History: One of the earliest examples of three-dimensional scientific visualisation was Maxwell's thermodynamic surface, sculpted in clay in 1874 by James Clerk Maxwell. This prefigured modern scientific visualization techniques that use computer graph ...

Workflow

A workflow consists of an orchestrated and repeatable pattern of activity, enabled by the systematic organization of resources into processes that transform materials, provide services, or process information. It can be depicted as a sequence of operations, the work of a person or group, the work of an organization of staff, or one or more simple or complex mechanisms. From a more abstract or higher-level perspective, workflow may be considered a view or representation of real work. The flow being described may refer to a document, service, or product that is being transferred from one step to another. Workflows may be viewed as one fundamental building block to be combined with other parts of an organization's structure such as information technology, teams, projects and hierarchies. Historical development: The development of the concept of a workflow occurred over a series of loosely defined, overlapping eras. Beginnings in manufacturing: The modern history of workflows ca ...

Computer File System

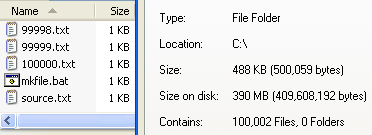

In computing, a file system or filesystem (often abbreviated to fs) is a method and data structure that the operating system uses to control how data is stored and retrieved. Without a file system, data placed in a storage medium would be one large body of data with no way to tell where one piece of data stopped and the next began, or where any piece of data was located when it was time to retrieve it. By separating the data into pieces and giving each piece a name, the data are easily isolated and identified. Taking its name from the way a paper-based data management system is named, each group of data is called a "file". The structure and logic rules used to manage the groups of data and their names are called a "file system". There are many kinds of file systems, each with its own structure and logic and its own properties of speed, flexibility, security, size and more. Some file systems have been designed to be used for specific applications. For example, the ISO 9660 file system is designe ...