
VEX Prefix

The VEX prefix (from "vector extensions") and VEX coding scheme are an extension to the IA-32 and x86-64 instruction set architecture for microprocessors from Intel, AMD and others.

Features

The VEX coding scheme allows the definition of new instructions and the extension or modification of previously existing instruction codes. This serves the following purposes:

* The opcode map is extended to make space for future instructions.
* It allows instruction codes to have up to four operands (plus immediate), where the original scheme allows only two operands (plus immediate).
* It allows the size of SIMD vector registers to be extended from the 128-bit XMM registers to the 256-bit YMM registers. There is room for further extensions of the register size.
* It allows existing two-operand instructions to be modified into non-destructive three-operand forms where the destination register is different from both source registers. For example, instead of (where register ''a'' is change ...
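As a rough illustration of how the prefix carries the extra operand and the vector length, the sketch below decodes the payload byte of the two-byte VEX form (the byte that follows the `0xC5` escape). The bit layout used here is not given in the summary above; it is taken from the layout documented in Intel's architecture manuals, and the function name `decode_vex2` is hypothetical.

```python
def decode_vex2(payload: int) -> dict:
    """Decode the byte that follows the 0xC5 escape of a two-byte VEX prefix.

    Assumed layout (most significant bit first): R' vvvv' L pp,
    where R' and vvvv' are stored inverted (one's complement).
    """
    if not 0 <= payload <= 0xFF:
        raise ValueError("payload must be a single byte")
    return {
        # Inverted extension bit for the ModRM.reg field.
        "R": ((payload >> 7) & 1) ^ 1,
        # Inverted 4-bit register number of the extra (non-destructive) source.
        "vvvv": ((payload >> 3) & 0xF) ^ 0xF,
        # L = 0 selects 128-bit XMM operands, L = 1 selects 256-bit YMM operands.
        "vector_bits": 256 if (payload >> 2) & 1 else 128,
        # Implied legacy prefix: 0 = none, 1 = 66h, 2 = F3h, 3 = F2h.
        "pp": payload & 0b11,
    }


# C5 F4 58 C2 is the usual encoding of "vaddps ymm0, ymm1, ymm2":
# the second source register (ymm1) lives in the vvvv field.
fields = decode_vex2(0xF4)
print(fields)
```

The same instruction with XMM registers differs only in the L bit (payload `0xF0`), which is how a single opcode serves both register widths.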

IA-32

IA-32 (short for "Intel Architecture, 32-bit", commonly called ''i386'') is the 32-bit version of the x86 instruction set architecture, designed by Intel and first implemented in the 80386 microprocessor in 1985. IA-32 is the first incarnation of x86 that supports 32-bit computing; as a result, the "IA-32" term may be used as a metonym to refer to all x86 versions that support 32-bit computing. Within various programming language directives, IA-32 is still sometimes referred to as the "i386" architecture. In some other contexts, certain iterations of the IA-32 ISA are sometimes labelled ''i486'', ''i586'' and ''i686'', referring to the instruction supersets offered by the 80486, the P5 and the P6 microarchitectures respectively. These updates offered numerous additions alongside the base IA-32 set, including x87 floating-point capabilities and the MMX extensions. Intel was historically ...

Xeon Phi

Xeon Phi is a discontinued series of x86 manycore processors designed and made by Intel. It was intended for use in supercomputers, servers, and high-end workstations. Its architecture allowed use of standard programming languages and application programming interfaces (APIs) such as OpenMP. Xeon Phi launched in 2010. Since it was originally based on an earlier GPU design (codenamed "Larrabee") by Intel that was cancelled in 2009, it shared application areas with GPUs. The main difference between Xeon Phi and a GPGPU like Nvidia Tesla was that Xeon Phi, with an x86-compatible core, could, with less modification, run software that was originally targeted to a standard x86 CPU. Initially in the form of PCI Express-based add-on cards, a second-generation product, codenamed ''Knights Landing'', was announced in June 2013. These second-generation chips could be used as a standalone CPU, rather than just as an add-in card. In June 2013, the Tianhe-2 supercomputer at the National S ...

Sandy Bridge (microarchitecture)

Sandy Bridge is the codename for Intel's 32 nm microarchitecture used in the second generation of the Intel Core processors (Core i7, i5, i3). The Sandy Bridge microarchitecture is the successor to the Nehalem and Westmere microarchitectures. Intel demonstrated an A1 stepping Sandy Bridge processor in 2009 during Intel Developer Forum (IDF), and released first products based on the architecture in January 2011 under the Core brand. Sandy Bridge is manufactured in the 32 nm process and has a soldered contact with the die and IHS (Integrated Heat Spreader), while Intel's subsequent generation Ivy Bridge uses a 22 nm die shrink and a TIM (Thermal Interface Material) between the die and the IHS.

Technology

Intel demonstrated a Sandy Bridge processor with A1 stepping at 2 GHz during the Intel Developer Forum in September 2009. Upgraded features from Nehalem include:

CPU

* Intel Turbo Boost 2.0
* 32 KB data + 32 KB instruction L1 cache and 256 KB L2 c ...

XOP Instruction Set

The XOP (''eXtended Operations'') instruction set, announced by AMD on May 1, 2009, is an extension to the 128-bit SSE core instructions in the x86 and AMD64 instruction set for the Bulldozer processor core, which was released on October 12, 2011. However, AMD removed support for XOP from the Zen microarchitecture onward. The XOP instruction set contains several different types of vector instructions since it was originally intended as a major upgrade to SSE. Most of the instructions are integer instructions, but it also contains floating-point permutation and floating-point fraction extraction instructions. See the index for a list of instruction types.

History

XOP is a revised subset of what was originally intended as SSE5. It was changed to be similar to, but not overlapping with, AVX; parts that overlapped with AVX were removed or moved to separate standards such as FMA4 (floating-point vector multiply–accumulate) and CVT16 (half-precision floating-point conversion imp ...

Ars Technica

''Ars Technica'' is a website covering news and opinions in technology, science, politics, and society, created by Ken Fisher and Jon Stokes in 1998. It publishes news, reviews, and guides on issues such as computer hardware and software, science, technology policy, and video games. ''Ars Technica'' was privately owned until May 2008, when it was sold to Condé Nast Digital, the online division of Condé Nast Publications. Condé Nast purchased the site, along with two others, for $25 million and added it to the company's ''Wired'' Digital group, which also includes ''Wired'' and, formerly, Reddit. The staff mostly works from home and has offices in Boston, Chicago, London, New York City, and San Francisco. The operations of ''Ars Technica'' are funded primarily by advertising, and it has offered a paid subscription service since 2001.

History

Ken Fisher, who serves as the website's current editor-in-chief, and Jon Stokes created ''Ars Technica'' in 1998. Its purpose was t ...

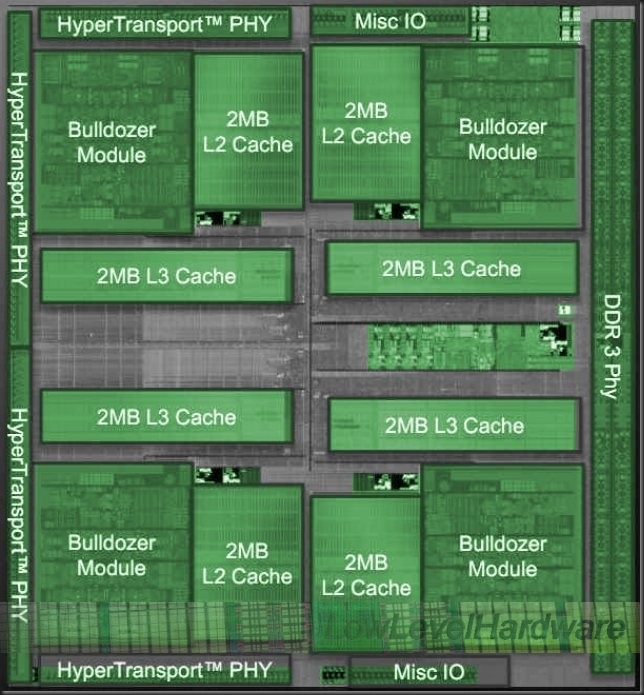

Bulldozer (processor)

The AMD Bulldozer Family 15h is a microprocessor microarchitecture for the FX and Opteron line of processors, developed by AMD for the desktop and server markets. Bulldozer is the codename for this family of microarchitectures. It was released on October 12, 2011, as the successor to the K10 microarchitecture. Bulldozer is designed from scratch, not a development of earlier processors. The core is specifically aimed at computing products with TDPs of 10 to 125 watts. AMD claims dramatic performance-per-watt efficiency improvements in high-performance computing (HPC) applications with Bulldozer cores. The ''Bulldozer'' cores support most of the instruction sets implemented by Intel processors (Sandy Bridge) available at its introduction (including SSSE3, SSE4.1, SSE4.2, AES, CLMUL, and AVX) as well as new instruction sets proposed by AMD: ABM, XOP, FMA4 and F16C. Only Bulldozer GEN4 (Excavator) supports the AVX2 instruction set.

Overview

According to AMD, Bull ...

SSE5

SSE5 (short for Streaming SIMD Extensions version 5) was a SIMD instruction set extension proposed by AMD on August 30, 2007 as a supplement to the 128-bit SSE core instructions in the AMD64 architecture. AMD chose not to implement SSE5 as originally proposed. In May 2009, AMD replaced SSE5 with three smaller instruction set extensions named XOP, FMA4, and F16C, which retain the proposed functionality of SSE5 but encode the instructions differently for better compatibility with Intel's proposed AVX instruction set. The three SSE5-derived instruction sets were introduced in the Bulldozer processor core, released in October 2011 on a 32 nm process.

Compatibility

AMD's SSE5 extension bundle does not include the full set of Intel's SSE4 instructions, making it a competitor to SSE4 rather than a successor.

SSE5 enhancements

The proposed SSE5 instruction set consisted of 170 instructions (including 46 base instructions), many of which are designed to improve single-th ...

Real Mode

Real mode, also called real address mode, is an operating mode of all x86-compatible CPUs. The mode gets its name from the fact that addresses in real mode always correspond to real locations in memory. Real mode is characterized by a 20-bit segmented memory address space (giving 1 MB of addressable memory) and unlimited direct software access to all addressable memory, I/O addresses and peripheral hardware. Real mode provides no support for memory protection, multitasking, or code privilege levels. Before the introduction of protected mode with the release of the 80286, real mode was the only available mode for x86 CPUs; and for backward compatibility, all x86 CPUs start in real mode when reset, though it is possible to emulate real mode on other systems when starting in other modes.

History

The 80286 architecture introduced protected mode, allowing for (among other things) hardware-level memory protection. Using these new features, however, required a new operating system ...
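The 20-bit segmented address space can be made concrete with a small sketch. The summary above does not spell out the address calculation; the code assumes the conventional rule that a 16-bit segment is shifted left by four bits and added to a 16-bit offset, and it models the wrap-around at 1 MB of the original 20-bit address bus as an optional behaviour. The function name `real_mode_address` and its `wrap_20_bits` parameter are hypothetical.

```python
def real_mode_address(segment: int, offset: int, wrap_20_bits: bool = True) -> int:
    """Translate a segment:offset pair into a physical address.

    Assumes the conventional real-mode rule: physical = segment * 16 + offset.
    With wrap_20_bits=True the result is truncated to 20 bits, as on a CPU
    with only 20 address lines.
    """
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset must be 16-bit values")
    linear = (segment << 4) + offset
    return linear & 0xFFFFF if wrap_20_bits else linear


# Different segment:offset pairs can name the same physical byte.
print(hex(real_mode_address(0xB800, 0x0000)))  # 0xb8000
print(hex(real_mode_address(0xB000, 0x8000)))  # 0xb8000
```

Because the segment contributes only a multiple of 16, many segment:offset pairs alias the same physical location, which is one reason real mode cannot offer memory protection.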

Memory Alignment

Data structure alignment is the way data is arranged and accessed in computer memory. It consists of three separate but related issues: data alignment, data structure padding, and packing. The CPU in modern computer hardware performs reads and writes to memory most efficiently when the data is ''naturally aligned'', which generally means that the data's memory address is a multiple of the data size. For instance, in a 32-bit architecture, the data may be aligned if the data is stored in four consecutive bytes and the first byte lies on a 4-byte boundary. ''Data alignment'' is the aligning of elements according to their natural alignment. To ensure natural alignment, it may be necessary to insert some ''padding'' between structure elements or after the last element of a structure. For example, on a 32-bit machine, a data structure containing a 16-bit value followed by a 32-bit value could have 16 bits of ''padding'' between the 16-bit value and the 32-bit value to align the 32 ...
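The padding example above (a 16-bit value followed by a 32-bit value) can be observed from Python with the standard `ctypes` and `struct` modules. This is a minimal sketch: the `Sample` structure and the `align_up` helper are hypothetical names, and the numbers in the comments assume a typical platform where a 32-bit integer has 4-byte alignment.

```python
import ctypes
import struct


def align_up(address: int, alignment: int) -> int:
    """Round address up to the next multiple of alignment (a power of two)."""
    if alignment <= 0 or alignment & (alignment - 1):
        raise ValueError("alignment must be a positive power of two")
    return (address + alignment - 1) & ~(alignment - 1)


class Sample(ctypes.Structure):
    # A 16-bit field followed by a 32-bit field, laid out with native alignment.
    _fields_ = [("small", ctypes.c_uint16), ("large", ctypes.c_uint32)]


# Bytes the compiler-style layout inserts between the two fields.
padding_bytes = Sample.large.offset - ctypes.sizeof(ctypes.c_uint16)

print(Sample.large.offset)       # offset of the 32-bit field (4 on typical platforms)
print(padding_bytes * 8)         # padding in bits (16 on typical platforms)
print(ctypes.sizeof(Sample))     # padded size (8 on typical platforms)
print(struct.calcsize("=HI"))    # same fields packed without padding: 6 bytes
```

The `"="` prefix in the `struct` format string requests standard sizes with no alignment, which corresponds to the "packing" case mentioned above.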

Advanced Matrix Extensions

Advanced Matrix Extensions (AMX), also known as Intel Advanced Matrix Extensions (Intel AMX), are extensions to the x86 instruction set architecture (ISA) for microprocessors from Intel designed to work on matrices to accelerate artificial intelligence (AI) and machine learning (ML) workloads.

Extensions

AMX was introduced by Intel in June 2020 and first supported by Intel with the Sapphire Rapids microarchitecture for Xeon servers, released in January 2023. It introduced 2-dimensional registers called tiles upon which accelerators can perform operations. It is intended as an extensible architecture; the first accelerator implemented is called tile matrix multiply unit (TMUL). In Intel Architecture Instruction Set Extensions and Future Features revision 46, published in September 2022, a new AMX-FP16 extension was documented. This extension adds support for half-precision floating-point numbers. In revision 48 from March 2023, AMX-COMPLEX was documented, adding support for half-p ...

AVX-512

AVX-512 are 512-bit extensions to the 256-bit Advanced Vector Extensions SIMD instructions for x86 instruction set architecture (ISA) proposed by Intel in July 2013, and first implemented in the 2016 Intel Xeon Phi x200 (Knights Landing), and then later in a number of AMD and other Intel CPUs (see list below). AVX-512 consists of multiple extensions that may be implemented independently. This policy is a departure from the historical requirement of implementing the entire instruction block. Only the core extension AVX-512F (AVX-512 Foundation) is required by all AVX-512 implementations. Besides widening most 256-bit instructions, the extensions introduce various new operations, such as new data conversions, scatter operations, and permutations. The number of AVX registers is increased from 16 to 32, and eight new "mask registers" are added, which allow for variable selection and blending of the results of instructions. In CPUs with the vector length (VL) extension, included in m ...
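The role of the mask registers can be sketched in plain Python. This is only a scalar model of merge-style masking, not real AVX-512 intrinsics: each bit of the mask decides whether a lane receives the new result or keeps the value already in the destination. The function name `masked_add` is hypothetical.

```python
def masked_add(dst: list, a: list, b: list, mask: int) -> list:
    """Model of a merge-masked vector add.

    Lane i of the result is a[i] + b[i] when bit i of mask is set,
    otherwise the previous destination value dst[i] is kept.
    """
    if not (len(dst) == len(a) == len(b)):
        raise ValueError("all vectors must have the same number of lanes")
    return [
        x + y if (mask >> lane) & 1 else old
        for lane, (old, x, y) in enumerate(zip(dst, a, b))
    ]


# Only lanes 0 and 2 are selected by the mask 0b0101.
print(masked_add([0, 0, 0, 0], [1, 2, 3, 4], [10, 20, 30, 40], 0b0101))
# [11, 0, 33, 0]
```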

Bit Manipulation

Bit manipulation is the act of algorithmically manipulating bits or other pieces of data shorter than a word. Computer programming tasks that require bit manipulation include low-level device control, error detection and correction algorithms, data compression, encryption algorithms, and optimization. For most other tasks, modern programming languages allow the programmer to work directly with abstractions instead of bits that represent those abstractions. Source code that does bit manipulation makes use of the bitwise operations: AND, OR, XOR, NOT, and possibly other operations analogous to the boolean operators; there are also bit shifts and operations to count ones and zeros, find high and low one or zero, set, reset and test bits, extract and insert fields, mask and zero fields, gather and scatter bits to and from specified bit positions or fields. Integer arithm ...
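Several of the operations listed above can be sketched with Python's built-in bitwise operators. The helper names are hypothetical, and the functions assume non-negative integers.

```python
def set_bit(value: int, n: int) -> int:
    """Return value with bit n set to 1 (OR with a one-bit mask)."""
    return value | (1 << n)


def clear_bit(value: int, n: int) -> int:
    """Return value with bit n reset to 0 (AND with the inverted mask)."""
    return value & ~(1 << n)


def is_bit_set(value: int, n: int) -> bool:
    """Test whether bit n of value is 1."""
    return (value >> n) & 1 == 1


def extract_field(value: int, start: int, width: int) -> int:
    """Extract width bits of value, beginning at bit position start."""
    return (value >> start) & ((1 << width) - 1)


def insert_field(value: int, start: int, width: int, field: int) -> int:
    """Replace width bits of value at position start with field."""
    mask = ((1 << width) - 1) << start
    return (value & ~mask) | ((field << start) & mask)


def count_ones(value: int) -> int:
    """Count the 1 bits in value (population count)."""
    return bin(value).count("1")


def lowest_set_bit(value: int) -> int:
    """Index of the lowest 1 bit, or -1 if value is 0."""
    return (value & -value).bit_length() - 1


print(hex(extract_field(0xABCD, 4, 8)))       # 0xbc
print(hex(insert_field(0xABCD, 4, 8, 0x12)))  # 0xa12d
```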