VA-API

Video Acceleration API (VA-API) is an open-source application programming interface that allows applications such as VLC media player or GStreamer to use hardware video acceleration capabilities, usually provided by the graphics processing unit (GPU). It is implemented by a free and open-source library, combined with a hardware-specific driver, usually provided together with the GPU driver. The VA-API video decode/encode interface is platform and window system independent but is primarily targeted at the Direct Rendering Infrastructure (DRI) in the X Window System on Unix-like operating systems (including Linux, FreeBSD, and Solaris) and Android; however, it can potentially also be used with direct framebuffer and graphics sub-systems for video output. Accelerated processing includes support for video decoding, video encoding, subpicture blending, and rendering. The VA-API specification wa ...

Intel GMA

The Intel Graphics Media Accelerator (GMA) is a series of integrated graphics processors introduced in 2004 by Intel, replacing the earlier Intel Extreme Graphics series and succeeded by the Intel HD and Iris Graphics series. This series targets the market of low-cost graphics solutions. The products in this series are integrated onto the motherboard, have limited graphics processing power, and use the computer's main memory for storage instead of dedicated video memory. They are commonly found in netbooks, low-priced laptops and desktop computers, as well as business computers that do not need high levels of graphics capability. In early 2007, about 90% of all PC motherboards sold had an integrated GPU. History: The GMA line of GPUs replaces the earlier Intel Extreme Graphics and the Intel740 line, the latter of which was a discrete unit in the form of AGP and PCI cards with technology that evolved from companies Real3D and Lockheed Martin. Later, Intel integrated ...

Intel

Intel Corporation is an American multinational corporation and technology company headquartered in Santa Clara, California. It is the world's largest semiconductor chip manufacturer by revenue, and is one of the developers of the x86 series of instruction sets, the instruction sets found in most personal computers (PCs). Incorporated in Delaware, Intel ranked No. 45 in the 2020 Fortune 500 list of the largest United States corporations by total revenue for nearly a decade, from 2007 to 2016 fiscal years. Intel supplies microprocessors for computer system manufacturers such as Acer, Lenovo, HP, and Dell. Intel also manufactures motherboard chipsets, network interface controllers and integrated circuits, flash memory, graphics chips, embedded processors and other devices related to communications and computing. Intel (integrated and electronics) was founded on July 18, 1968, by semiconductor pioneers Gordon Moore (of Moore's law) and Robert Noyce ( ...

Framebuffer

A framebuffer (frame buffer, or sometimes framestore) is a portion of random-access memory (RAM) containing a bitmap that drives a video display. It is a memory buffer containing data representing all the pixels in a complete video frame. Modern video cards contain framebuffer circuitry in their cores. This circuitry converts an in-memory bitmap into a video signal that can be displayed on a computer monitor. In computing, a screen buffer is a part of computer memory used by a computer application for the representation of the content to be shown on the computer display. The screen buffer may also be called the video buffer, the regeneration buffer, or regen buffer for short. Screen buffers should be distinguished from video memory. To this end, the term off-screen buffer is also used. The information in the buffer typically consists of color values for every pixel to be shown on the display. Color values are commonly stored in 1-bit binary (monochrome), 4-bit palettized, 8-bit ...
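As a rough illustration of the idea that a framebuffer is a block of memory holding one color value per pixel, the following minimal Python sketch computes how many bytes a frame needs at a given color depth and models an 8-bit-per-pixel buffer as a flat `bytearray`. The names `framebuffer_size` and `Framebuffer` are hypothetical, and the row-major layout with rows padded to whole bytes is an assumption made for the example, not a description of any particular hardware.

```python
def framebuffer_size(width, height, bits_per_pixel):
    """Bytes needed for one full frame, assuming each row is padded to whole bytes."""
    row_bytes = (width * bits_per_pixel + 7) // 8
    return row_bytes * height


class Framebuffer:
    """A toy 8-bit-per-pixel framebuffer stored row by row in a flat bytearray."""

    def __init__(self, width, height):
        self.width = width
        self.height = height
        self.data = bytearray(width * height)  # every pixel starts at value 0

    def set_pixel(self, x, y, value):
        # Row-major addressing: skip y full rows, then move x pixels along the row.
        self.data[y * self.width + x] = value

    def get_pixel(self, x, y):
        return self.data[y * self.width + x]


if __name__ == "__main__":
    for depth in (1, 4, 8):
        print(depth, "bpp:", framebuffer_size(640, 480, depth), "bytes")
    fb = Framebuffer(4, 3)
    fb.set_pixel(2, 1, 255)
    print(list(fb.data))
```

The size calculation shows why lower color depths were attractive when memory was scarce: halving the bits per pixel halves the memory needed for the same resolution.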

High Efficiency Video Coding

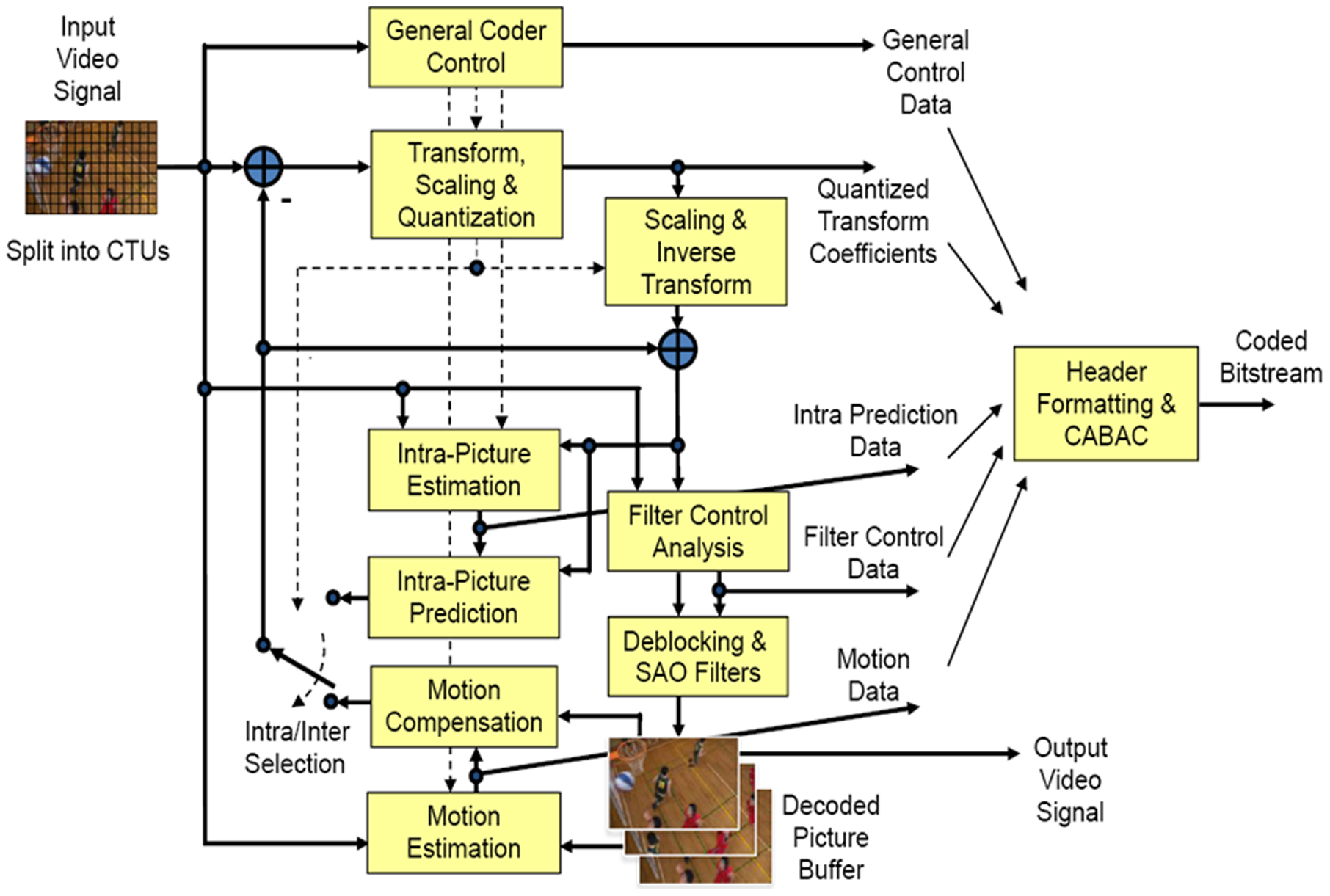

High Efficiency Video Coding (HEVC), also known as H.265 and MPEG-H Part 2, is a video compression standard designed as part of the MPEG-H project as a successor to the widely used Advanced Video Coding (AVC, H.264, or MPEG-4 Part 10). In comparison to AVC, HEVC offers from 25% to 50% better data compression at the same level of video quality, or substantially improved video quality at the same bit rate. It supports resolutions up to 8192×4320, including 8K UHD, and unlike the primarily 8-bit AVC, HEVC's higher fidelity Main 10 profile has been incorporated into nearly all supporting hardware. While AVC uses the integer discrete cosine transform (DCT) with 4×4 and 8×8 block sizes, HEVC uses integer DCT and DST transforms with varied block sizes between 4×4 and 32×32. The High Efficiency Image Format (HEIF) is based on HEVC. HEVC is used by 43% of video developers, and is the second most widely used video coding format after AVC. Concept: In most ways, HEVC is an extensi ...

MPEG-4 Part 2

MPEG-4 Part 2, or MPEG-4 Visual (formally ISO/IEC 14496-2), is a video compression format developed by the Moving Picture Experts Group (MPEG). It belongs to the MPEG-4 ISO/IEC standards. It uses block-wise motion compensation and a discrete cosine transform (DCT), similar to previous standards such as MPEG-1 Part 2 and H.262/MPEG-2 Part 2. Several popular codecs including DivX, Xvid, and Nero Digital implement this standard. Note that MPEG-4 Part 10 defines a different format from MPEG-4 Part 2 and should not be confused with it. MPEG-4 Part 10 is commonly referred to as H.264 or AVC, and was jointly developed by ITU-T and MPEG. MPEG-4 Part 2 is H.263 compatible in the sense that a basic H.263 bitstream is correctly decoded by an MPEG-4 Video decoder. (An MPEG-4 Video decoder is natively capable of decoding a basic form of H.263.) In MPEG-4 Visual, there are two types of video object layers: the video object layer that provides full MPEG-4 functionality, and a reduced functionality ...

Deblocking Filter (video)

A deblocking filter is a video filter applied to decoded compressed video to improve visual quality and prediction performance by smoothing the sharp edges which can form between macroblocks when block coding techniques are used. The filter aims to improve the appearance of decoded pictures. It is a part of the specification for both the SMPTE VC-1 codec and the ITU H.264 (ISO MPEG-4 AVC) codec. H.264 deblocking filter: In contrast with the older MPEG-1/2/4 standards, the H.264 deblocking filter is not an optional additional feature in the decoder. It is a feature on both the decoding path and the encoding path, so that the in-loop effects of the filter are taken into account in reference macroblocks used for prediction. When a stream is encoded, the filter strength can be selected, or the filter can be switched off entirely. Otherwise, the filter strength is determined by coding modes of adjacent blocks, quantization step size, and the steepness of the luminance gradient betw ...

Motion Compensation

Motion compensation, in computing, is an algorithmic technique used to predict a frame in a video, given the previous and/or future frames, by accounting for motion of the camera and/or objects in the video. It is employed in the encoding of video data for video compression, for example in the generation of MPEG-2 files. Motion compensation describes a picture in terms of the transformation of a reference picture to the current picture. The reference picture may be previous in time or even from the future. When images can be accurately synthesized from previously transmitted/stored images, the compression efficiency can be improved. Motion compensation is one of the two key video compression techniques used in video coding standards, along with the discrete cosine transform (DCT). Most video coding standards, such as the H.26x and MPEG formats, typically use motion-compensated DCT hybrid coding, known as block motion compensation (BMC) or motion-compensated DCT (MC DCT). Function ...
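Block motion compensation can be sketched in a few lines of Python. The example below is a simplified illustration, not the procedure of any specific standard: it assumes grayscale frames stored as lists of rows, an exhaustive search over a small window, and the sum of absolute differences (SAD) as the matching cost. The function names `sad`, `find_motion_vector`, and `predict_block` are hypothetical.

```python
def sad(ref, cur, rx, ry, cx, cy, size):
    """Sum of absolute differences between a reference block and a current block."""
    total = 0
    for dy in range(size):
        for dx in range(size):
            total += abs(ref[ry + dy][rx + dx] - cur[cy + dy][cx + dx])
    return total


def find_motion_vector(ref, cur, cx, cy, size, search):
    """Exhaustively search +/- `search` pixels for the best-matching reference block.

    Returns (mx, my, cost), where (mx, my) is the offset into the reference frame.
    """
    height, width = len(ref), len(ref[0])
    best = None
    for my in range(-search, search + 1):
        for mx in range(-search, search + 1):
            rx, ry = cx + mx, cy + my
            # Skip candidate blocks that fall outside the reference frame.
            if rx < 0 or ry < 0 or rx + size > width or ry + size > height:
                continue
            cost = sad(ref, cur, rx, ry, cx, cy, size)
            if best is None or cost < best[0]:
                best = (cost, mx, my)
    return best[1], best[2], best[0]


def predict_block(ref, cx, cy, size, mv):
    """Build the predicted block by copying the reference block the vector points at."""
    mx, my = mv
    return [row[cx + mx:cx + mx + size] for row in ref[cy + my:cy + my + size]]


if __name__ == "__main__":
    # Reference frame with distinct values; the current frame is the same
    # content shifted one pixel to the right.
    ref = [[y * 8 + x for x in range(8)] for y in range(8)]
    cur = [[ref[y][x - 1] if x > 0 else 0 for x in range(8)] for y in range(8)]
    mx, my, cost = find_motion_vector(ref, cur, 3, 2, 2, 2)
    print("motion vector:", (mx, my), "cost:", cost)
```

An encoder built along these lines would transmit the motion vector plus the (ideally small) difference between the predicted and actual block, rather than the block's pixels themselves.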

Variable-length Code

In coding theory, a variable-length code is a code which maps source symbols to a variable number of bits. Variable-length codes can allow sources to be compressed and decompressed with zero error (lossless data compression) and still be read back symbol by symbol. With the right coding strategy, an independent and identically-distributed source may be compressed almost arbitrarily close to its entropy. This is in contrast to fixed-length coding methods, for which data compression is only possible for large blocks of data, and any compression beyond the logarithm of the total number of possibilities comes with a finite (though perhaps arbitrarily small) probability of failure. Some examples of well-known variable-length coding strategies are Huffman coding, Lempel–Ziv coding, arithmetic coding, and context-adaptive variable-length coding. Codes and their extensions: The extension of a code is the mapping of finite length source sequences to finite length bit strings ...
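Huffman coding, one of the strategies named above, gives a compact illustration of a variable-length code that can be read back symbol by symbol. The following Python sketch is a minimal version built on the standard `heapq` and `collections` modules; the helper names `huffman_code`, `encode`, and `decode` are hypothetical, and the single-symbol and empty-input handling are assumptions made to keep the example self-contained.

```python
import heapq
from collections import Counter


def huffman_code(text):
    """Return a dict mapping each symbol in `text` to a prefix-free bit string."""
    freq = Counter(text)
    if not freq:
        return {}
    if len(freq) == 1:
        # A lone symbol still needs at least one bit per occurrence.
        return {next(iter(freq)): "0"}
    # Heap entries: (weight, tie-breaker id, partial code table for that subtree).
    heap = [(count, i, {sym: ""}) for i, (sym, count) in enumerate(sorted(freq.items()))]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        w1, _, t1 = heapq.heappop(heap)
        w2, _, t2 = heapq.heappop(heap)
        # Merge the two lightest subtrees, prefixing their codes with 0 and 1.
        merged = {sym: "0" + code for sym, code in t1.items()}
        merged.update({sym: "1" + code for sym, code in t2.items()})
        heapq.heappush(heap, (w1 + w2, next_id, merged))
        next_id += 1
    return heap[0][2]


def encode(text, code):
    return "".join(code[sym] for sym in text)


def decode(bits, code):
    """Read the bit string back symbol by symbol; works because no code is a prefix of another."""
    inverse = {bits_: sym for sym, bits_ in code.items()}
    out, current = [], ""
    for bit in bits:
        current += bit
        if current in inverse:
            out.append(inverse[current])
            current = ""
    return "".join(out)


if __name__ == "__main__":
    text = "abracadabra"
    code = huffman_code(text)
    bits = encode(text, code)
    print(code)
    print(len(bits), "bits versus", 8 * len(text), "bits at a fixed 8 bits per symbol")
    print(decode(bits, code) == text)
```

Frequent symbols receive shorter bit strings than rare ones, which is where the compression comes from, and the prefix-free property is what lets the decoder recover symbols without any separators.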

DirectX Video Acceleration

DirectX Video Acceleration (DXVA) is a Microsoft API specification for the Microsoft Windows and Xbox 360 platforms that allows video decoding to be hardware-accelerated. The pipeline allows certain CPU-intensive operations such as iDCT, motion compensation and deinterlacing to be offloaded to the GPU. DXVA 2.0 allows more operations, including video capturing and processing operations, to be hardware-accelerated as well. DXVA works in conjunction with the video rendering model used by the video card. DXVA 1.0, which was introduced as a standardized API with Windows 2000 and is currently available on Windows 98 or later, can use either the overlay rendering mode or VMR 7/9. DXVA 2.0, available only on Windows Vista, Windows 7, Windows 8 and later OSs, integrates with Media Foundation (MF) and uses the Enhanced Video Renderer (EVR) present in MF. Overview: DXVA is used by software video decoders to define a codec-specific pipeline for hardware-accelerated decoding and ren ...

XvMC

X-Video Motion Compensation (XvMC) is an extension of the X video extension (Xv) for the X Window System. The XvMC API allows video programs to offload portions of the video decoding process to the GPU video hardware. In theory, this process should also reduce bus bandwidth requirements. Currently, the portions that XvMC can offload onto the GPU are motion compensation (mo comp) and inverse discrete cosine transform (iDCT) for MPEG-2 video. XvMC also supports offloading decoding of mo comp, iDCT, and VLD ("Variable-Length Decoding", more commonly known as "slice level acceleration") for not only MPEG-2 but also MPEG-4 ASP video on VIA Unichrome (S3 Graphics Chrome Series) hardware. XvMC was the first UNIX equivalent of the Microsoft Windows DirectX Video Acceleration (DxVA) API. Popular software applications known to take advantage of XvMC include MPlayer, MythTV, and xine. Device drivers: Each hardware video GPU capable of XvMC video acceleration requires an X11 ...