
Variable Length Code

In coding theory, a variable-length code is a code that maps source symbols to a ''variable'' number of bits. Variable-length codes allow sources to be compressed and decompressed with ''zero'' error (lossless data compression) and still be read back symbol by symbol. With the right coding strategy, an independent and identically distributed source may be compressed almost arbitrarily close to its entropy. This is in contrast to fixed-length coding methods, for which data compression is only possible for large blocks of data, and any compression beyond the logarithm of the total number of possibilities comes with a finite (though perhaps arbitrarily small) probability of failure. Some well-known variable-length coding strategies are Huffman coding, Lempel–Ziv coding, arithmetic coding, and context-adaptive variable-length coding. Codes and their extensions: The extension of a code is the mapping of finite length source sequences to finite length bit stri ...
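As a rough illustration of the claim that a variable-length code can be read back symbol by symbol and can approach the source entropy, here is a minimal Python sketch. The four-symbol distribution `PROBS` and the prefix code `CODE` are hypothetical values chosen for this example, not taken from the article; they are picked so that the expected codeword length equals the entropy exactly.

```python
import math

# Hypothetical source distribution and prefix code, chosen for illustration only.
PROBS = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}
CODE = {"a": "0", "b": "10", "c": "110", "d": "111"}


def encode(symbols):
    """Concatenate the codeword of each source symbol."""
    return "".join(CODE[s] for s in symbols)


def decode(bits):
    """Decode symbol by symbol; works because no codeword is a prefix of another."""
    inverse = {word: sym for sym, word in CODE.items()}
    out, buf = [], ""
    for bit in bits:
        buf += bit
        if buf in inverse:
            out.append(inverse[buf])
            buf = ""
    if buf:
        raise ValueError("trailing bits do not form a codeword")
    return "".join(out)


def expected_length():
    """Average number of bits per source symbol under PROBS."""
    return sum(p * len(CODE[s]) for s, p in PROBS.items())


def entropy():
    """Shannon entropy of PROBS in bits per symbol."""
    return -sum(p * math.log2(p) for p in PROBS.values())
```

For this particular distribution, `expected_length()` and `entropy()` both evaluate to 1.75 bits per symbol, whereas a fixed-length code for four symbols would need 2 bits per symbol.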

Coding Theory

Coding theory is the study of the properties of codes and their respective fitness for specific applications. Codes are used for data compression, cryptography, error detection and correction, data transmission and data storage. Codes are studied by various scientific disciplines (such as information theory, electrical engineering, mathematics, linguistics, and computer science) for the purpose of designing efficient and reliable data transmission methods. This typically involves the removal of redundancy and the correction or detection of errors in the transmitted data. There are four types of coding: (1) data compression (or ''source coding''), (2) error control (or ''channel coding''), (3) cryptographic coding, and (4) line coding. Data compression attempts to remove unwanted redundancy from the data from a source in order to transmit it more efficiently. For example, ZIP data compression makes data files smaller, for purposes such as reducing Internet traffic. Data compressio ...

Injective

In mathematics, an injective function (also known as injection, or one-to-one function) is a function f that maps distinct elements of its domain to distinct elements; that is, x_1 ≠ x_2 implies f(x_1) ≠ f(x_2). (Equivalently, f(x_1) = f(x_2) implies x_1 = x_2 in the equivalent contrapositive statement.) In other words, every element of the function's codomain is the image of at most one element of its domain. The term one-to-one function must not be confused with one-to-one correspondence, which refers to bijective functions, that is, functions such that each element in the codomain is an image of exactly one element in the domain. A homomorphism between algebraic structures is a function that is compatible with the operations of the structures. For all common algebraic structures, and, in particular, for vector spaces, an injective homomorphism is also called a monomorphism. However, in the more general context of category theory, the definition of a monomorphism differs from that of an injective homomorphism. This is thus a theorem that they are equivalent for algebraic structures; see for more detail ...

Cambridge University Press

Cambridge University Press is the university press of the University of Cambridge. Granted letters patent by King Henry VIII in 1534, it is the oldest university press in the world. It is also the King's Printer. Cambridge University Press is a department of the University of Cambridge and is both an academic and educational publisher. It became part of Cambridge University Press & Assessment, following a merger with Cambridge Assessment in 2021. With a global sales presence, publishing hubs, and offices in more than 40 countries, it publishes over 50,000 titles by authors from over 100 countries. Its publishing includes more than 380 academic journals, monographs, reference works, school and university textbooks, and English language teaching and learning publications. It also publishes Bibles, runs a bookshop in Cambridge, sells through Amazon, and has a conference venues business in Cambridge at the Pitt Building and the Sir Geoffrey Cass Spo ...

Variable-length Instruction Set

In computer science, an instruction set architecture (ISA), also called computer architecture, is an abstract model of a computer. A device that executes instructions described by that ISA, such as a central processing unit (CPU), is called an ''implementation''. In general, an ISA defines the supported instructions, data types, registers, the hardware support for managing main memory, fundamental features (such as the memory consistency, addressing modes, virtual memory), and the input/output model of a family of implementations of the ISA. An ISA specifies the behavior of machine code running on implementations of that ISA in a fashion that does not depend on the characteristics of that implementation, providing binary compatibility between implementations. This enables multiple implementations of an ISA that differ in characteristics such as performance, physical size, and monetary cost (among other things), but that are capable of running the same machine code, so th ...

Expected Value

In probability theory, the expected value (also called expectation, expectancy, mathematical expectation, mean, average, or first moment) is a generalization of the weighted average. Informally, the expected value is the arithmetic mean of a large number of independently selected outcomes of a random variable. The expected value of a random variable with a finite number of outcomes is a weighted average of all possible outcomes. In the case of a continuum of possible outcomes, the expectation is defined by integration. In the axiomatic foundation for probability provided by measure theory, the expectation is given by Lebesgue integration. The expected value of a random variable X is often denoted by E(X), E[X], or EX, with E also often stylized as \mathrm{E} or \mathbb{E}. History: The idea of the expected value originated in the middle of the 17th century from the study of the so-called problem of points, which seeks to divide the stakes ''in a fair way'' between two players, who have to e ...
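The statement that the expected value of a random variable with finitely many outcomes is a weighted average can be sketched in a few lines of Python. The function name `expected_value` and the fair-die distribution are assumptions made for this illustration; exact fractions are used to avoid floating-point rounding.

```python
from fractions import Fraction


def expected_value(dist):
    """Weighted average of outcomes; dist maps each outcome to its probability."""
    if sum(dist.values()) != 1:
        raise ValueError("probabilities must sum to 1")
    return sum(outcome * prob for outcome, prob in dist.items())


# Hypothetical example: a fair six-sided die.
fair_die = {face: Fraction(1, 6) for face in range(1, 7)}
```

With this distribution, `expected_value(fair_die)` is `Fraction(7, 2)`, i.e. 3.5, which is not itself a possible outcome of a single roll.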

Variable-length Quantity

A variable-length quantity (VLQ) is a universal code that uses an arbitrary number of binary octets (eight-bit bytes) to represent an arbitrarily large integer. A VLQ is essentially a base-128 representation of an unsigned integer with the addition of the eighth bit to mark continuation of bytes. VLQ is identical to LEB128 except in endianness. See the example below. Applications and history: Base-128 compression is known by many names: VB (Variable Byte), VByte, Varint, VInt, EncInt, etc. (Jianguo Wang; Chunbin Lin; Yannis Papakonstantinou; Steven Swanson, "An Experimental Study of Bitmap Compression vs. Inverted List Compression", 2017.) A variable-length quantity (VLQ) was defined for use in the standard MIDI file format (MIDI File Format: Variable Quantities).
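The base-128 scheme described above can be sketched as follows. This is a minimal illustration, assuming the big-endian byte order used by the MIDI file format (most significant 7-bit group first) and the convention that the eighth bit is set on every byte except the last; the function names `vlq_encode` and `vlq_decode` are hypothetical.

```python
def vlq_encode(n):
    """Encode a non-negative integer as a big-endian VLQ byte string."""
    if n < 0:
        raise ValueError("VLQ encodes unsigned integers only")
    groups = [n & 0x7F]              # last byte: continuation bit clear
    n >>= 7
    while n:
        groups.append((n & 0x7F) | 0x80)  # earlier bytes: continuation bit set
        n >>= 7
    return bytes(reversed(groups))


def vlq_decode(data):
    """Decode a byte string holding exactly one VLQ value."""
    value = 0
    for index, byte in enumerate(data):
        value = (value << 7) | (byte & 0x7F)
        if not byte & 0x80:          # continuation bit clear: final byte
            if index != len(data) - 1:
                raise ValueError("unexpected bytes after the final VLQ byte")
            return value
    raise ValueError("truncated VLQ: last byte has the continuation bit set")
```

For example, 127 fits in the single byte `0x7F`, while 128 needs two bytes, `0x81 0x00`. Reversing the order of the 7-bit groups would give the little-endian layout that LEB128 uses.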

Channel Coding

In computing, telecommunication, information theory, and coding theory, an error correction code, sometimes error correcting code, (ECC) is used for controlling errors in data over unreliable or noisy communication channels. The central idea is the sender encodes the message with redundant information in the form of an ECC. The redundancy allows the receiver to detect a limited number of errors that may occur anywhere in the message, and often to correct these errors without retransmission. The American mathematician Richard Hamming pioneered this field in the 1940s and invented the first error-correcting code in 1950: the Hamming (7,4) code. ECC contrasts with error detection in that errors that are encountered can be corrected, not simply detected. The advantage is that a system using ECC does not require a reverse channel to request retransmission of data when an error occurs. The downside is that there is a fixed overhead that is added to the message, thereby requiring ...

Forward Error Correction

In computing, telecommunication, information theory, and coding theory, an error correction code, sometimes error correcting code, (ECC) is used for controlling errors in data over unreliable or noisy communication channels. The central idea is the sender encodes the message with redundant information in the form of an ECC. The redundancy allows the receiver to detect a limited number of errors that may occur anywhere in the message, and often to correct these errors without retransmission. The American mathematician Richard Hamming pioneered this field in the 1940s and invented the first error-correcting code in 1950: the Hamming (7,4) code. ECC contrasts with error detection in that errors that are encountered can be corrected, not simply detected. The advantage is that a system using ECC does not require a reverse channel to request retransmission of data when an error occurs. The downside is that there is a fixed overhead that is added to the message, thereby requiring a hi ...

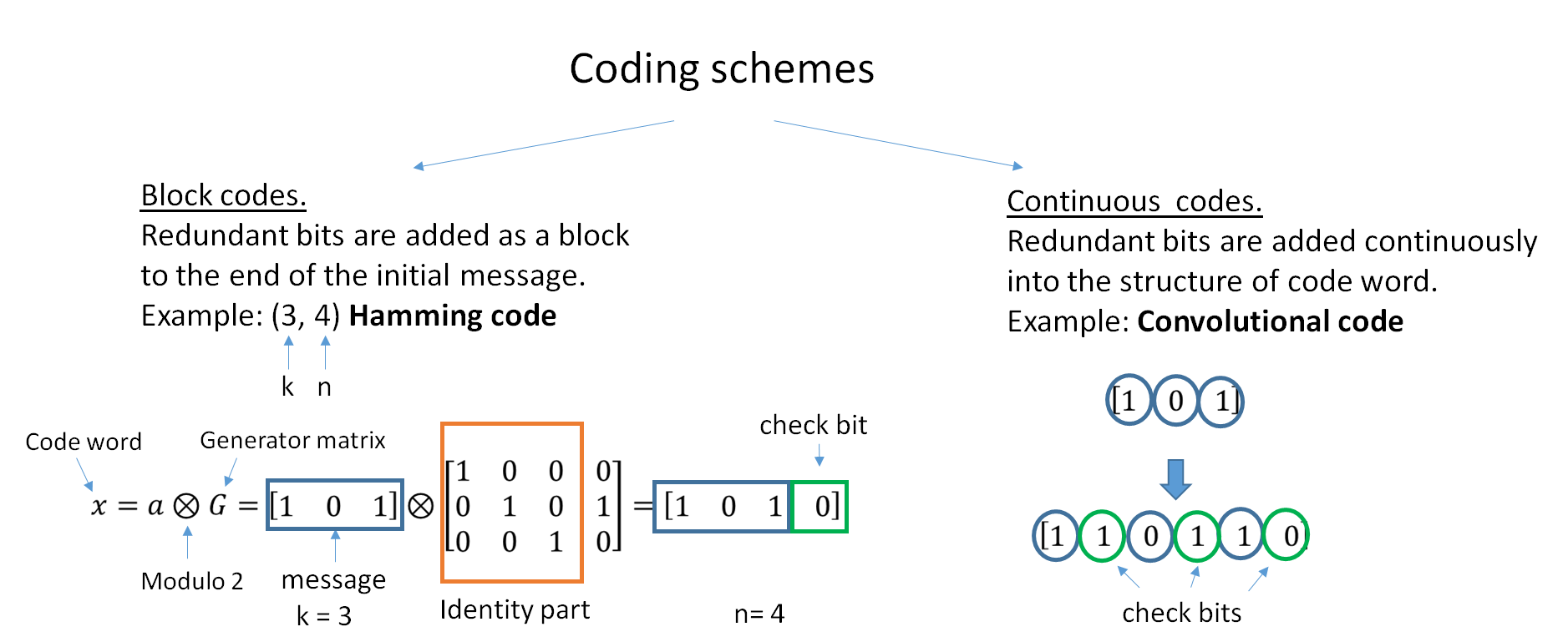

Block Code

In coding theory, block codes are a large and important family of error-correcting codes that encode data in blocks. There is a vast number of examples for block codes, many of which have a wide range of practical applications. The abstract definition of block codes is conceptually useful because it allows coding theorists, mathematicians, and computer scientists to study the limitations of ''all'' block codes in a unified way. Such limitations often take the form of ''bounds'' that relate different parameters of the block code to each other, such as its rate and its ability to detect and correct errors. Examples of block codes are Reed–Solomon codes, Hamming codes, Hadamard codes, Expander codes, Golay codes, and Reed–Muller codes. These examples also belong to the class of linear codes, and hence they are called linear block codes. More particularly, these codes are known as algebraic block codes, or cyclic block codes, because they can be generated using boolean polyno ...

Sardinas–Patterson Algorithm

In coding theory, the Sardinas–Patterson algorithm is a classical algorithm for determining in polynomial time whether a given variable-length code is uniquely decodable, named after August Albert Sardinas and George W. Patterson, who published it in 1953. The algorithm carries out a systematic search for a string which admits two different decompositions into codewords. As Knuth reports, the algorithm was rediscovered about ten years later in 1963 by Floyd, despite the fact that it was at the time already well known in coding theory. Idea of the algorithm: Consider the code \{a \mapsto 1, b \mapsto 011, c \mapsto 01110, d \mapsto 1110, e \mapsto 10011\}. This code, which is based on an example by Berstel, is an example of a code which is not uniquely decodable, since the string :011101110011 can be interpreted as the sequence of codewords :01110 – 1110 – 011, but also as the sequence of codewords :011 – 1 – 011 – 10011. Two possible decodings of this encoded string are thus given by ''cdb'' and ''babe''. In general, a codeword can be foun ...
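The search for a doubly decomposable string can be sketched in Python using the usual dangling-suffix formulation of the Sardinas–Patterson test. This is an illustrative sketch, not the original 1953 presentation: the helper names `left_quotient` and `is_uniquely_decodable` are hypothetical, and the codewords 1, 011, 01110, 1110, 10011 are the ones implied by the two decodings ''cdb'' and ''babe'' quoted above.

```python
def left_quotient(prefixes, words):
    """Return every suffix w such that p + w is in words for some p in prefixes."""
    return {w[len(p):] for p in prefixes for w in words if w.startswith(p)}


def is_uniquely_decodable(codewords):
    """Dangling-suffix test: True if no string has two codeword decompositions."""
    code = set(codewords)
    if "" in code:
        return False  # an empty codeword can be inserted anywhere
    # Initial dangling suffixes: what remains when one codeword prefixes another.
    current = left_quotient(code, code) - {""}
    seen = set()
    while current:
        if current & code:
            return False  # a dangling suffix is itself a codeword: ambiguity
        key = frozenset(current)
        if key in seen:
            return True   # the sets of suffixes repeat; no ambiguity can appear
        seen.add(key)
        current = left_quotient(code, current) | left_quotient(current, code)
    return True


# Codewords for a, b, c, d, e in the example above.
example_code = ["1", "011", "01110", "1110", "10011"]
```

On `example_code` the test returns `False`: the first round yields the dangling suffixes 110, 0011 and 10, and the second round yields 011, which is the codeword for b. The loop terminates because every dangling suffix is a suffix of some codeword, so only finitely many sets can occur.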

Non-singular Codes

In the mathematical field of algebraic geometry, a singular point of an algebraic variety is a point that is 'special' (so, singular), in the geometric sense that at this point the tangent space at the variety may not be regularly defined. In case of varieties defined over the reals, this notion generalizes the notion of local non-flatness. A point of an algebraic variety which is not singular is said to be regular. An algebraic variety which has no singular point is said to be non-singular or smooth. Definition: A plane curve defined by an implicit equation :F(x,y)=0, where F is a smooth function, is said to be ''singular'' at a point if the Taylor series of F has order at least 2 at this point. The reason for this is that, in differential calculus, the tangent at the point (x_0, y_0) of such a curve is defined by the equation :(x-x_0)F'_x(x_0,y_0) + (y-y_0)F'_y(x_0,y_0)=0, whose left-hand side is the term of degree one of the Taylor expansion. Thus, if this term is zero, the tangent may ...

Quantization (Signal Processing)

Quantization, in mathematics and digital signal processing, is the process of mapping input values from a large set (often a continuous set) to output values in a (countable) smaller set, often with a finite number of elements. Rounding and truncation are typical examples of quantization processes. Quantization is involved to some degree in nearly all digital signal processing, as the process of representing a signal in digital form ordinarily involves rounding. Quantization also forms the core of essentially all lossy compression algorithms. The difference between an input value and its quantized value (such as round-off error) is referred to as quantization error. A device or algorithmic function that performs quantization is called a quantizer. An analog-to-digital converter is an example of a quantizer. Example For example, rounding a real number x to the nearest integer value forms a very basic type of quantizer – a ''uniform'' one. A typical (''mid-tread'') uni ...
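The rounding example can be sketched as a small Python function. The snippet above is cut off before it gives a formula, so the rule used here, Q(x) = step * floor(x / step + 1/2), is the common textbook form of a mid-tread uniform quantizer and is an assumption of this sketch; the names `quantize_mid_tread` and `quantization_error` are hypothetical.

```python
import math


def quantize_mid_tread(x, step=1.0):
    """Map x to the nearest multiple of step (ties go to the upper level)."""
    if step <= 0:
        raise ValueError("step must be positive")
    return step * math.floor(x / step + 0.5)


def quantization_error(x, step=1.0):
    """Difference between the quantized value and the input value."""
    return quantize_mid_tread(x, step) - x
```

With `step=1.0` this is rounding to the nearest integer, and the magnitude of the quantization error never exceeds half a step. Zero is one of the output levels, which is what the name mid-tread refers to.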