Vapnik

Vladimir Naumovich Vapnik (Russian: Владимир Наумович Вапник; born 6 December 1936) is one of the main developers of the Vapnik–Chervonenkis theory of statistical learning and the co-inventor of the support-vector machine method and the support-vector clustering algorithm.

Early life and education: Vladimir Vapnik was born to a Jewish family in the Soviet Union. He received his master's degree in mathematics from the Uzbek State University, Samarkand, Uzbek SSR, in 1958 and his Ph.D. in statistics from the Institute of Control Sciences, Moscow, in 1964. He worked at this institute from 1961 to 1990 and became Head of the Computer Science Research Department.

Academic career: At the end of 1990, Vladimir Vapnik moved to the USA and joined the Adaptive Systems Research Department at AT&T Bell Labs in Holmdel, New Jersey. While at AT&T, Vapnik and his colleagues worked on the support-vector machine, which he had also worked on much earlier, before moving to the USA. The ...

Support-vector Machine

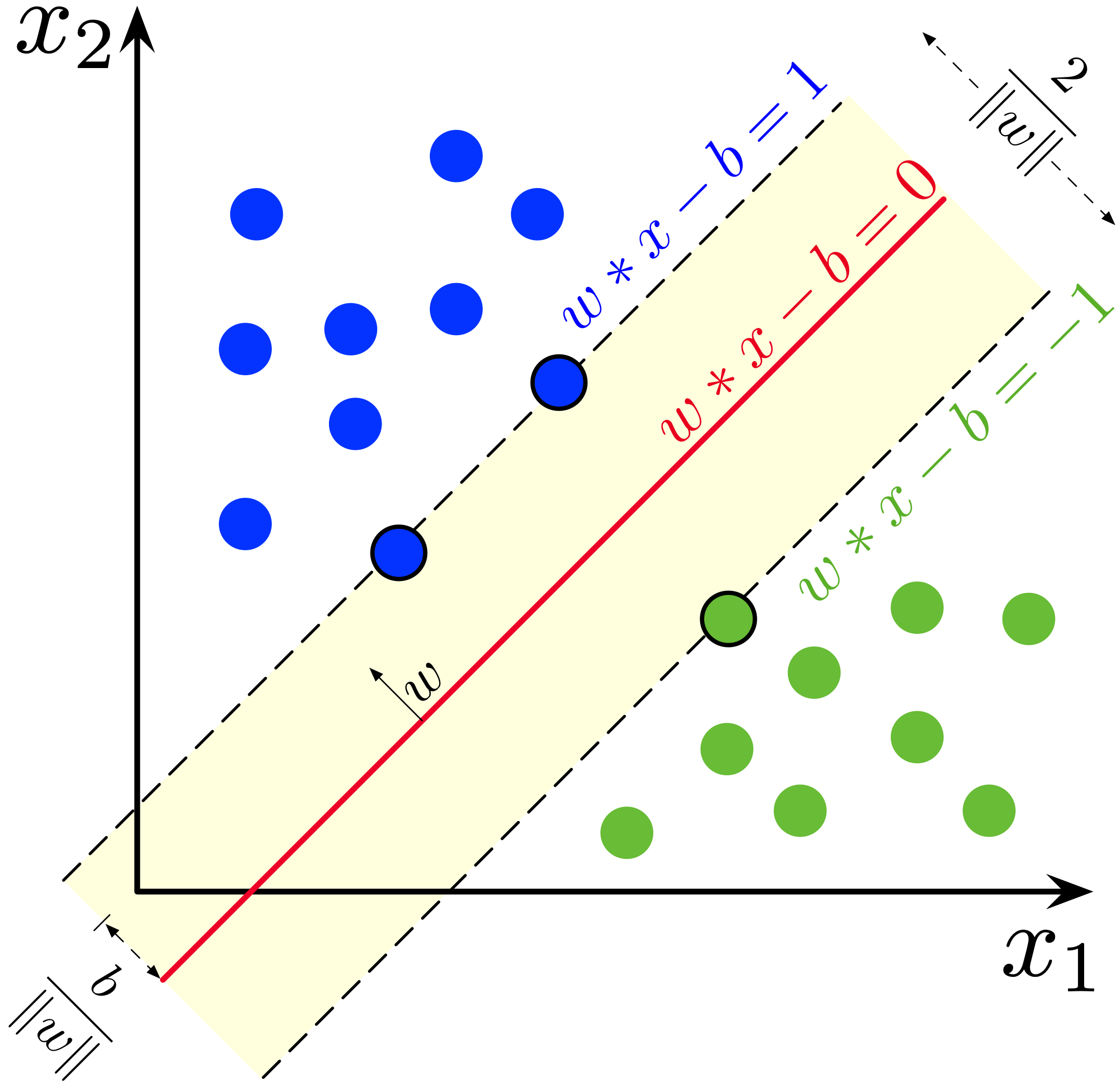

In machine learning, support vector machines (SVMs, also support vector networks) are supervised learning models with associated learning algorithms that analyze data for classification and regression analysis. Developed at AT&T Bell Laboratories by Vladimir Vapnik with colleagues (Boser et al., 1992; Guyon et al., 1993; Cortes and Vapnik, 1995; Vapnik et al., 1997), SVMs are one of the most robust prediction methods, being based on statistical learning frameworks or VC theory proposed by Vapnik (1982, 1995) and Chervonenkis (1974). Given a set of training examples, each marked as belonging to one of two categories, an SVM training algorithm builds a model that assigns new examples to one category or the other, making it a non-probabilistic binary linear classifier (although methods such as Platt scaling exist to use SVM in a probabilistic classification setting). SVM maps training examples to points in space so as to maximise the width of the gap between the two categories. New ...
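
The idea of maximising the width of the gap can be illustrated in the simplest possible setting. The sketch below is not a general SVM solver: it assumes one-dimensional, separable data with all negative examples to the left of all positive ones, in which case the maximum-margin boundary is the midpoint between the two closest opposite-class points. The function names and the sample data are hypothetical.

```python
def max_margin_threshold(xs, labels):
    """Maximum-margin boundary for separable 1-D data.

    Assumes labels are +1/-1 and every negative point lies to the
    left of every positive point (an assumption of this sketch).
    """
    negatives = [x for x, y in zip(xs, labels) if y == -1]
    positives = [x for x, y in zip(xs, labels) if y == 1]
    left, right = max(negatives), min(positives)  # the two closest opposite-class points
    if left >= right:
        raise ValueError("classes are not separable in this orientation")
    threshold = (left + right) / 2  # boundary placed in the middle of the gap
    margin = (right - left) / 2     # distance from the boundary to either class
    return threshold, margin


def classify(x, threshold):
    """Assign a new example to one of the two categories."""
    return 1 if x > threshold else -1


xs = [1.0, 2.0, 3.0, 7.0, 8.0, 9.5]
labels = [-1, -1, -1, 1, 1, 1]
threshold, margin = max_margin_threshold(xs, labels)
print(threshold, margin)  # 5.0 2.0
```

Only the points 3.0 and 7.0 determine the boundary here; moving any other point without crossing them leaves the result unchanged, which is the role support vectors play in the general method.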

Vapnik–Chervonenkis Dimension

In Vapnik–Chervonenkis theory, the Vapnik–Chervonenkis (VC) dimension is a measure of the capacity (complexity, expressive power, richness, or flexibility) of a set of functions that can be learned by a statistical binary classification algorithm. It is defined as the cardinality of the largest set of points that the algorithm can shatter, which means the algorithm can always learn a perfect classifier for any labeling of at least one configuration of those data points. It was originally defined by Vladimir Vapnik and Alexey Chervonenkis. Informally, the capacity of a classification model is related to how complicated it can be. For example, consider the thresholding of a high-degree polynomial: if the polynomial evaluates above zero, that point is classified as positive, otherwise as negative. A high-degree polynomial can be wiggly, so it can fit a given set of training points well. But one can expect that the classifier will make errors on other points, because it is too wiggl ...
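
Shattering can be checked by brute force for small point sets. The sketch below uses two hypothesis classes chosen purely for illustration (one-sided thresholds and closed intervals on the real line; neither appears in the text above) and tests whether every one of the 2^n labelings of a point set is realised by some hypothesis. All function names are hypothetical.

```python
def shatters(points, hypotheses):
    """True if the hypotheses realise all 2^n labelings of the points."""
    realised = {tuple(h(x) for x in points) for h in hypotheses}
    return len(realised) == 2 ** len(points)


def _cuts(points):
    """Representative cut positions: below, between, and above the points."""
    xs = sorted(points)
    between = [(a + b) / 2 for a, b in zip(xs, xs[1:])]
    return [xs[0] - 1] + between + [xs[-1] + 1]


def threshold_classifiers(points):
    """One-sided thresholds: h_t(x) = 1 if x >= t else 0."""
    return [lambda x, t=t: int(x >= t) for t in _cuts(points)]


def interval_classifiers(points):
    """Closed intervals: h_{a,b}(x) = 1 if a <= x <= b else 0."""
    cuts = _cuts(points)
    return [lambda x, a=a, b=b: int(a <= x <= b) for a in cuts for b in cuts]


one, two, three = [0.0], [0.0, 1.0], [0.0, 1.0, 2.0]
print(shatters(one, threshold_classifiers(one)))      # True
print(shatters(two, threshold_classifiers(two)))      # False
print(shatters(two, interval_classifiers(two)))       # True
print(shatters(three, interval_classifiers(three)))   # False
```

Under these assumptions, thresholds shatter one point but not two, and intervals shatter two points but not three (the labeling 1, 0, 1 is unreachable), which matches VC dimensions of 1 and 2 for these two classes.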

Vapnik–Chervonenkis Theory

Vapnik–Chervonenkis theory (also known as VC theory) was developed during 1960–1990 by Vladimir Vapnik and Alexey Chervonenkis. The theory is a form of computational learning theory, which attempts to explain the learning process from a statistical point of view.

Introduction: VC theory covers at least four parts (as explained in ''The Nature of Statistical Learning Theory''):
* Theory of consistency of learning processes
** What are (necessary and sufficient) conditions for consistency of a learning process based on the empirical risk minimization principle?
* Nonasymptotic theory of the rate of convergence of learning processes
** How fast is the rate of convergence of the learning process?
* Theory of controlling the generalization ability of learning processes
** How can one control the rate of convergence (the generalization ability) of the learning process?
* Theory of constructing learning machines
** How can one construct algorithms that can control the generalization abilit ...

Statistical Learning Theory

Statistical learning theory is a framework for machine learning drawing from the fields of statistics and functional analysis. Statistical learning theory deals with the statistical inference problem of finding a predictive function based on data. Statistical learning theory has led to successful applications in fields such as computer vision, speech recognition, and bioinformatics.

Introduction: The goals of learning are understanding and prediction. Learning falls into many categories, including supervised learning, unsupervised learning, online learning, and reinforcement learning. From the perspective of statistical learning theory, supervised learning is best understood. Supervised learning involves learning from a training set of data. Every point in the training set is an input-output pair, where the input maps to an output. The learning problem consists of inferring the function that maps between the input and the output, such that the learned function can be used to predict t ...
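
As a rough illustration of inferring a function from input-output pairs, the sketch below picks, from a small set of candidate functions, the one with the lowest average loss on a training set. The squared loss, the candidate set (lines through the origin), and the data are all assumptions made for the example, not part of the text above.

```python
def empirical_risk(f, data):
    """Average squared loss of predictor f over (input, output) pairs."""
    return sum((f(x) - y) ** 2 for x, y in data) / len(data)


# Hypothetical training set, roughly following y = 2x.
training_set = [(0.0, 0.1), (1.0, 2.1), (2.0, 3.9), (3.0, 6.0)]

# Hypothetical hypothesis space: lines through the origin with a few fixed slopes.
candidates = {slope: (lambda x, s=slope: s * x) for slope in (1.0, 1.5, 2.0, 2.5)}

# Choose the candidate that fits the training pairs best.
best_slope = min(candidates, key=lambda s: empirical_risk(candidates[s], training_set))
print(best_slope)  # 2.0
```

The selected function can then be applied to inputs outside the training set, for example `candidates[best_slope](4.0)`.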

Kolmogorov Medal

The Kolmogorov Medal is a prize awarded to distinguished researchers with life-long contributions to one of the fields initiated by Andrey Kolmogorov. The Kolmogorov Medal was first awarded in 2003 to celebrate 100 years since the birth of Kolmogorov. The recipient is invited to deliver a lecture. Early lectures were published in The Computer Journal.

Structural Risk Minimization

Structural risk minimization (SRM) is an inductive principle used in machine learning. Commonly in machine learning, a generalized model must be selected from a finite data set, with the consequent problem of overfitting – the model becoming too strongly tailored to the particularities of the training set and generalizing poorly to new data. The SRM principle addresses this problem by balancing the model's complexity against its success at fitting the training data. This principle was first set out in a 1974 paper by Vladimir Vapnik and Alexey Chervonenkis and uses the VC dimension. In practical terms, structural risk minimization is implemented by minimizing E_{train} + \beta H(W), where E_{train} is the train error, the function H(W) is called a regularization function, and \beta is a constant. H(W) is chosen such that it takes large values on parameters W that belong to high-capacity subsets of the parameter space. Minimizing H(W) in effect limits the capacity of the accessible subset ...
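
The penalised objective can be made concrete with a one-parameter model. The sketch below assumes a model y = w·x, mean squared error as the train error, and H(w) = w² as the regularization function; these choices, the function names, and the data are illustrative assumptions rather than anything prescribed by the text. Under them the minimiser has a closed form.

```python
def objective(w, data, beta):
    """Train error plus beta times the regularization function H(w) = w**2."""
    train_error = sum((w * x - y) ** 2 for x, y in data) / len(data)
    return train_error + beta * w ** 2


def fit_penalised_slope(data, beta):
    """Closed-form minimiser of objective() for the model y = w * x.

    Setting the derivative to zero gives w = sum(x*y) / (sum(x*x) + n*beta).
    """
    n = len(data)
    sxy = sum(x * y for x, y in data)
    sxx = sum(x * x for x, _ in data)
    return sxy / (sxx + n * beta)


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]   # hypothetical data, exactly y = 2x
print(fit_penalised_slope(data, 0.0))          # 2.0 (no penalty: fits the data exactly)
print(fit_penalised_slope(data, 1.0))          # smaller slope: the penalty shrinks w
```

Raising beta trades a higher train error for a smaller value of H(w), which is the balance between fit and complexity that the principle describes.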

Machine Learning

Machine learning (ML) is a field of inquiry devoted to understanding and building methods that 'learn', that is, methods that leverage data to improve performance on some set of tasks. It is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. Machine learning algorithms are used in a wide variety of applications, such as in medicine, email filtering, speech recognition, agriculture, and computer vision, where it is difficult or unfeasible to develop conventional algorithms to perform the needed tasks. A subset of machine learning is closely related to computational statistics, which focuses on making predicti ...

Paris Kanellakis Award

The Paris Kanellakis Theory and Practice Award is granted yearly by the Association for Computing Machinery (ACM) to honor "specific theoretical accomplishments that have had a significant and demonstrable effect on the practice of computing". It was instituted in 1996, in memory of Paris C. Kanellakis, a computer scientist who died with his immediate family in an airplane crash in South America in 1995 (American Airlines Flight 965). The award is accompanied by a prize of $10,000 and is endowed by contributions from Kanellakis's parents, with additional financial support provided by four ACM Special Interest Groups (SIGACT, SIGDA, SIGMOD, and SIGPLAN), the ACM SIG Projects Fund, and individual contributions.

IEEE Frank Rosenblatt Award

The IEEE Frank Rosenblatt Award is a Technical Field Award established by the Institute of Electrical and Electronics Engineers Board of Directors in 2004. This award is presented for outstanding contributions to the advancement of the design, practice, techniques, or theory in biologically and linguistically motivated computational paradigms, including neural networks, connectionist systems, evolutionary computation, fuzzy systems, and hybrid intelligent systems in which these paradigms are contained. The award may be presented to an individual, multiple recipients, or a team of up to three people. It is named for Frank Rosenblatt, creator of the perceptron. Recipients of this award receive a bronze medal, certificate, and honorarium.

Recipients:
* 2021: James M. Keller
* 2020: Xin Yao
* 2019: Erkki Oja
* 2018: Enrique H. Ruspini
* 2017: Stephen Grossberg
* 2016: Ronald R. Yager
* 2015: Marco Dorigo
* 2014: Geoffrey E. Hinton
* 2013: Terrence Sejnowski
* 2012: Vladimi ...

Benjamin Franklin Medal (Franklin Institute)

The Franklin Institute Awards (or Benjamin Franklin Medal) is an American science and engineering award presented by the Franklin Institute, a science museum in Philadelphia. The Franklin Institute Awards comprise the Benjamin Franklin Medals in seven areas of science and engineering, the Bower Award and Prize for Achievement in Science, and the Bower Award for Business Leadership. Since 1824, the institute has recognized "world-changing scientists, engineers, inventors, and industrialists—all of whom reflect Benjamin Franklin’s spirit of curiosity, ingenuity, and innovation". Noted past laureates include Nikola Tesla, Thomas Edison, Marie Curie, Max Planck, Albert Einstein, and Stephen Hawking. Among the 21st-century laureates are Bill Gates, James P. Allison, Indra Nooyi, Jane Goodall, Elizabeth Blackburn, George Church, Robert S. Langer, and Alex Gorsky.

Benjamin Franklin Medals: In 1998, the Benjamin Franklin Medals were created b ...