
Uncertain Inference

Uncertain inference was first described by C. J. van Rijsbergen as a way to formally define the relationship between a query and a document in information retrieval. This formalization is a logical implication with an attached measure of uncertainty.

Definitions: van Rijsbergen proposes that the measure of uncertainty of a document ''d'' with respect to a query ''q'' be the probability of its logical implication, i.e. P(d \to q). A user's query can be interpreted as a set of assertions about the desired document. It is the system's task to infer, given a particular document, whether the query assertions are true. If they are, the document is retrieved. In many cases the contents of documents are not sufficient to assert the queries. A knowledge base of facts and rules is needed, but some of them may be uncertain because there may be a probability associated with using them for inference. Therefore, we can also refer to this as ''plausible inference''. The plausibility of an inference d \to q is a function of the pla ...

Information Retrieval

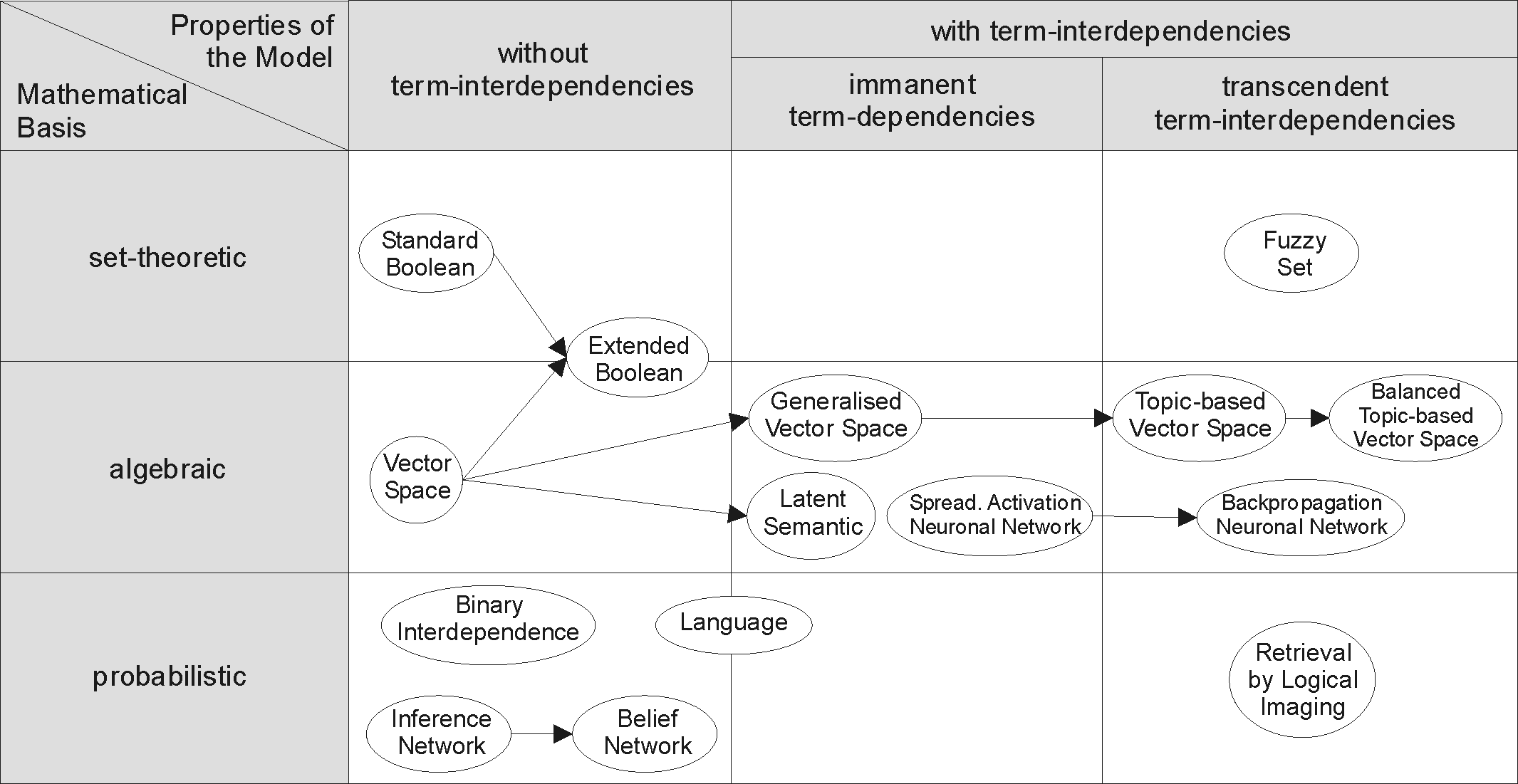

Information retrieval (IR) in computing and information science is the task of identifying and retrieving information system resources that are relevant to an information need. The information need can be specified in the form of a search query. In the case of document retrieval, queries can be based on full-text or other content-based indexing. Information retrieval is the science of searching for information in a document, searching for documents themselves, and also searching for the metadata that describes data, and for databases of texts, images or sounds. Automated information retrieval systems are used to reduce what has been called information overload. An IR system is a software system that provides access to books, journals and other documents; it also stores and manages those documents. Web search engines are the most visible IR applications.

Overview: An information retrieval process begins when a user enters a query into the sys ...

Markov Logic Network

A Markov logic network (MLN) is a probabilistic logic which applies the ideas of a Markov network to first-order logic, defining probability distributions on possible worlds on any given domain.

History: In 2002, Ben Taskar, Pieter Abbeel and Daphne Koller introduced relational Markov networks as templates to specify Markov networks abstractly and without reference to a specific domain. Pedro Domingos and Matt Richardson began work on Markov logic networks in 2003. Markov logic networks are a popular formalism for statistical relational learning.

Syntax: A Markov logic network consists of a collection of formulas from first-order logic, to each of which is assigned a real number, the weight. The underlying idea is that an interpretation is more likely if it satisfies formulas with positive weights and less likely if it satisfies formulas with negative weights. For instance, the following Markov logic network codifies how smokers are more likely to be friends with other sm ...
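The weighting idea can be illustrated with a heavily simplified, propositional sketch. It assumes the usual log-linear form, in which a world's unnormalized weight is the exponential of the summed weights of the formulas it satisfies; a real MLN counts the groundings of first-order formulas instead. The atoms, the single formula, and the weight 1.5 are hypothetical and chosen only for illustration.

```python
import math
from itertools import product

# Hypothetical ground atoms for a toy domain with a single person.
ATOMS = ["smokes", "cancer"]

# Hypothetical (weight, formula) pairs. The one formula encodes
# "smokes implies cancer" as a Python predicate over a world.
FORMULAS = [
    (1.5, lambda world: (not world["smokes"]) or world["cancer"]),
]


def unnormalized_weight(world):
    """exp of the summed weights of all formulas that the world satisfies."""
    return math.exp(sum(weight for weight, formula in FORMULAS if formula(world)))


def world_probabilities():
    """Enumerate every truth assignment and normalize the weights to probabilities."""
    worlds = [dict(zip(ATOMS, values))
              for values in product([False, True], repeat=len(ATOMS))]
    scores = [unnormalized_weight(world) for world in worlds]
    total = sum(scores)
    return [(world, score / total) for world, score in zip(worlds, scores)]


if __name__ == "__main__":
    for world, probability in world_probabilities():
        print(world, round(probability, 3))
```

Under these assumptions the world that violates the positively weighted formula (smoker without cancer) remains possible but receives the lowest probability, which is the sense in which weights soften logical constraints.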

Imprecise Probability

Imprecise probability generalizes probability theory to allow for partial probability specifications, and is applicable when information is scarce, vague, or conflicting, in which case a unique probability distribution may be hard to identify. Thereby, the theory aims to represent the available knowledge more accurately. Imprecision is useful for dealing with expert elicitation, because:

* People have a limited ability to determine their own subjective probabilities and might find that they can only provide an interval.
* As an interval is compatible with a range of opinions, the analysis ought to be more convincing to a range of different people.

Introduction: Uncertainty is traditionally modelled by a probability distribution, as developed by Kolmogorov, Laplace, de Finetti, Ramsey, Cox, Lindley, and many others. However, this has not been unani ...

Plausible Reasoning

Plausible reasoning is a method of deriving new conclusions from given known premises, a method different from the classical syllogistic argumentation methods of Aristotelian two-valued logic. The syllogistic style of argumentation is illustrated by the oft-quoted argument "All men are mortal, Socrates is a man, and therefore, Socrates is mortal." In contrast, consider the statement "if it is raining then it is cloudy." The only logical inference that one can draw from this is that "if it is not cloudy then it is not raining." But ordinary people in their everyday lives would conclude that "if it is not raining then being cloudy is less plausible," or "if it is cloudy then rain is more plausible." The unstated and unconsciously applied reasoning, arguably incorrect, that made people come to their conclusions is typical of plausible reasoning. As another example, "Suppose some dark night a policeman walks down a street, apparently deserted; but suddenly he hears a burglar alarm, look ...

Probabilistic Logic

Probabilistic logic (also probability logic and probabilistic reasoning) involves the use of probability and logic to deal with uncertain situations. Probabilistic logic extends traditional logic truth tables with probabilistic expressions. A difficulty of probabilistic logics is their tendency to multiply the computational complexities of their probabilistic and logical components. Other difficulties include the possibility of counter-intuitive results, such as in the case of belief fusion in Dempster–Shafer theory. Source trust and epistemic uncertainty about the probabilities they provide, such as defined in subjective logic, are additional elements to consider. The need to deal with a broad variety of contexts and issues has led to many different proposals.

Logical background: There are numerous proposals for probabilistic logics. Very roughly, they can be categorized into two different classes: those logics that attempt to make a probabilistic extension to logical entailment, s ...

Fuzzy Logic

Fuzzy logic is a form of many-valued logic in which the truth value of variables may be any real number between 0 and 1. It is employed to handle the concept of partial truth, where the truth value may range between completely true and completely false. By contrast, in Boolean logic, the truth values of variables may only be the integer values 0 or 1. The term ''fuzzy logic'' was introduced with the 1965 proposal of fuzzy set theory by mathematician Lotfi Zadeh. Fuzzy logic had, however, been studied since the 1920s as infinite-valued logic, notably by Łukasiewicz and Tarski. Fuzzy logic is based on the observation that people make decisions based on imprecise and non-numerical information. Fuzzy models or fuzzy sets are mathematical means of representing vagueness and imprecise information (hence the term fuzzy). These models have the capability of recognising, representing, manipulating, in ...
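A minimal sketch of truth values in the interval from 0 to 1, assuming the common min/max/complement connectives. The passage above does not say which operators are meant, so this choice and the function names are assumptions made for illustration.

```python
def _check(value):
    """Reject truth values outside the closed interval [0, 1]."""
    if not 0.0 <= value <= 1.0:
        raise ValueError(f"truth value {value} is outside [0, 1]")
    return value


def fuzzy_and(a, b):
    """Conjunction as the minimum of the two truth values (an assumed choice)."""
    return min(_check(a), _check(b))


def fuzzy_or(a, b):
    """Disjunction as the maximum of the two truth values (an assumed choice)."""
    return max(_check(a), _check(b))


def fuzzy_not(a):
    """Negation as the complement with respect to 1."""
    return 1.0 - _check(a)


if __name__ == "__main__":
    warm, humid = 0.7, 0.4  # hypothetical degrees of truth
    print(fuzzy_and(warm, humid), fuzzy_or(warm, humid), fuzzy_not(warm))
```

When the inputs are restricted to 0 and 1, these connectives reduce to the ordinary Boolean truth tables, which matches the contrast drawn in the text.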

Finite-state Machine

A finite-state machine (FSM) or finite-state automaton (FSA, plural: ''automata''), finite automaton, or simply a state machine, is a mathematical model of computation. It is an abstract machine that can be in exactly one of a finite number of ''states'' at any given time. The FSM can change from one state to another in response to some inputs; the change from one state to another is called a ''transition''. An FSM is defined by a list of its states, its initial state, and the inputs that trigger each transition. Finite-state machines are of two types: deterministic finite-state machines and non-deterministic finite-state machines. For any non-deterministic finite-state machine, an equivalent deterministic one can be constructed. The behavior of state machines can be observed in many devices in modern society that perform a predetermined sequence of actions d ...
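The definition in terms of states, an initial state, and input-triggered transitions can be sketched as a small deterministic machine. The example machine (tracking whether a binary string contains an even or odd number of 1s) and all names are hypothetical, chosen only to illustrate the idea.

```python
# Transition table of a hypothetical deterministic machine:
# (current state, input symbol) -> next state.
TRANSITIONS = {
    ("even", "0"): "even",
    ("even", "1"): "odd",
    ("odd", "0"): "odd",
    ("odd", "1"): "even",
}

INITIAL_STATE = "even"
ACCEPTING_STATES = {"even"}


def run_fsm(inputs, initial=INITIAL_STATE):
    """Feed the input symbols one at a time and return the final state."""
    state = initial
    for symbol in inputs:
        # Exactly one transition applies per (state, symbol) pair,
        # which is what makes this machine deterministic.
        state = TRANSITIONS[(state, symbol)]
    return state


def accepts(inputs):
    """True if the machine ends in an accepting state."""
    return run_fsm(inputs) in ACCEPTING_STATES


if __name__ == "__main__":
    print(run_fsm("1101"), accepts("1001"))
```

A dictionary keyed by (state, symbol) keeps the sketch close to the textual definition; an input symbol with no entry raises `KeyError`, which is one simple way to reject symbols outside the alphabet.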

Markov Chain

In probability theory and statistics, a Markov chain or Markov process is a stochastic process describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event. Informally, this may be thought of as, "What happens next depends only on the state of affairs ''now''." A countably infinite sequence, in which the chain moves state at discrete time steps, gives a discrete-time Markov chain (DTMC). A continuous-time process is called a continuous-time Markov chain (CTMC). Markov processes are named in honor of the Russian mathematician Andrey Markov. Markov chains have many applications as statistical models of real-world processes. They provide the basis for general stochastic simulation methods known as Markov chain Monte Carlo, which are used for simulating sampling from complex probability distributions, and have found application in areas including Bayesian statistics, biology, chemistry, economics, fin ...
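The dependence on the current state alone can be made concrete with a small discrete-time sketch. The two states and the transition probabilities below are invented for illustration; the only assumption is that each row of the matrix gives the distribution of the next state given the current one.

```python
# Hypothetical two-state chain: state 0 = "sunny", state 1 = "rainy".
# TRANSITION[i][j] is the probability of moving from state i to state j.
TRANSITION = [
    [0.9, 0.1],
    [0.5, 0.5],
]


def step(distribution, transition=TRANSITION):
    """Advance a distribution over states by one time step."""
    n = len(distribution)
    return [
        sum(distribution[i] * transition[i][j] for i in range(n))
        for j in range(n)
    ]


def evolve(distribution, steps, transition=TRANSITION):
    """Apply `step` repeatedly; only the current distribution is ever needed."""
    for _ in range(steps):
        distribution = step(distribution, transition)
    return distribution


if __name__ == "__main__":
    start = [1.0, 0.0]  # certainly sunny today
    print(evolve(start, 1), evolve(start, 2))
```

Note that `evolve` never looks at earlier distributions, which mirrors the informal statement that what happens next depends only on the state of affairs now.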

Maximum Entropy Principle

The principle of maximum entropy states that the probability distribution which best represents the current state of knowledge about a system is the one with largest entropy, in the context of precisely stated prior data (such as a proposition that expresses testable information). Another way of stating this: Take precisely stated prior data or testable information about a probability distribution function. Consider the set of all trial probability distributions that would encode the prior data. According to this principle, the distribution with maximal information entropy is the best choice.

History: The principle was first expounded by E. T. Jaynes in two papers in 1957, where he emphasized a natural correspondence between statistical mechanics and information theory. In particular, Jaynes argued that the Gibbsian method of statistical mechanics is sound by also arguing that the entropy of statistical mechanics and the information entropy of information theory are the same ...
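As a small illustration of comparing trial distributions by entropy, the sketch below assumes the simplest possible setting: four outcomes and no testable information beyond the probabilities summing to one. The candidate distributions are hypothetical.

```python
import math


def shannon_entropy(distribution):
    """Information entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in distribution if p > 0)


def max_entropy_choice(candidates):
    """Pick the candidate distribution with the largest entropy."""
    return max(candidates, key=shannon_entropy)


if __name__ == "__main__":
    # Hypothetical trial distributions over four outcomes, each summing to one.
    candidates = [
        [0.25, 0.25, 0.25, 0.25],
        [0.70, 0.10, 0.10, 0.10],
        [1.00, 0.00, 0.00, 0.00],
    ]
    best = max_entropy_choice(candidates)
    print(best, shannon_entropy(best))
```

With no further constraints the uniform distribution wins, since it commits to nothing beyond what is known; additional testable information would restrict the candidate set and generally change the result.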

Probabilistic Logic Network

A probabilistic logic network (PLN) is a conceptual, mathematical and computational approach to uncertain inference. It was inspired by logic programming and it uses probabilities in place of crisp (true/false) truth values, and fractional uncertainty in place of crisp known/unknown values. In order to carry out effective reasoning in real-world circumstances, artificial intelligence software handles uncertainty. Previous approaches to uncertain inference do not have the breadth of scope required to provide an integrated treatment of the disparate forms of cognitively critical uncertainty as they manifest themselves within the various forms of pragmatic inference. Going beyond prior probabilistic approaches to uncertain inference, PLN encompasses uncertain logic with such ideas as induction, abduction, analogy, fuzziness and speculation, and reasoning about time and causality. PLN was developed by Ben Goertzel, Matt Ikle, Izabela Lyon Freire Goertzel, and Ari Heljakka for use ...

Logical Consequence

Logical consequence (also entailment or logical implication) is a fundamental concept in logic which describes the relationship between statements that hold true when one statement logically ''follows from'' one or more statements. A valid logical argument is one in which the conclusion is entailed by the premises, because the conclusion is the consequence of the premises. The philosophical analysis of logical consequence involves the questions: In what sense does a conclusion follow from its premises? and What does it mean for a conclusion to be a consequence of premises? (Beall, JC and Restall, Greg, "Logical Consequence", The Stanford Encyclopedia of Philosophy (Fall 2009 Edition), Edward N. Zalta (ed.).) All of philosophical logic is meant to provide accounts of the nature of logical consequence and the nature of logical truth. Logical consequence is necessary and formal, by wa ...

Conditional Probability

In probability theory, conditional probability is a measure of the probability of an event occurring, given that another event (by assumption, presumption, assertion or evidence) is already known to have occurred. This particular method relies on event A occurring with some sort of relationship with another event B. In this situation, the event A can be analyzed by a conditional probability with respect to B. If the event of interest is A and the event B is known or assumed to have occurred, "the conditional probability of A given B", or "the probability of A under the condition B", is usually written as P(A \mid B) or occasionally P_B(A). This can also be understood as the fraction of probability B that intersects with A, or the ratio of the probabilities of both events happening to the "given" one happening (how many times A occurs rather than not assuming B has occurred): P(A \mid B) = \frac{P(A \cap B)}{P(B)}. For example, the probability that any given person has a cough on any given day ma ...
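The ratio of the probability of both events to the probability of the given event can be checked by counting over a small sample space. The sketch assumes equally likely outcomes; the fair die and the two events are hypothetical examples.

```python
from fractions import Fraction

# Hypothetical sample space: one roll of a fair six-sided die.
DIE = [1, 2, 3, 4, 5, 6]


def prob(event, outcomes):
    """Probability of an event when all outcomes are equally likely."""
    favourable = sum(1 for outcome in outcomes if event(outcome))
    return Fraction(favourable, len(outcomes))


def conditional(a, b, outcomes):
    """P(A | B) as P(A and B) / P(B); undefined when P(B) is zero."""
    p_b = prob(b, outcomes)
    if p_b == 0:
        raise ValueError("P(B) is zero, so P(A | B) is undefined")
    p_a_and_b = prob(lambda outcome: a(outcome) and b(outcome), outcomes)
    return p_a_and_b / p_b


def is_even(roll):
    return roll % 2 == 0


def greater_than_three(roll):
    return roll > 3


if __name__ == "__main__":
    print(prob(is_even, DIE), conditional(is_even, greater_than_three, DIE))
```

Using `Fraction` keeps the arithmetic exact, so the result can be compared directly with the hand calculation: of the three rolls above 3, two are even.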