
UBY

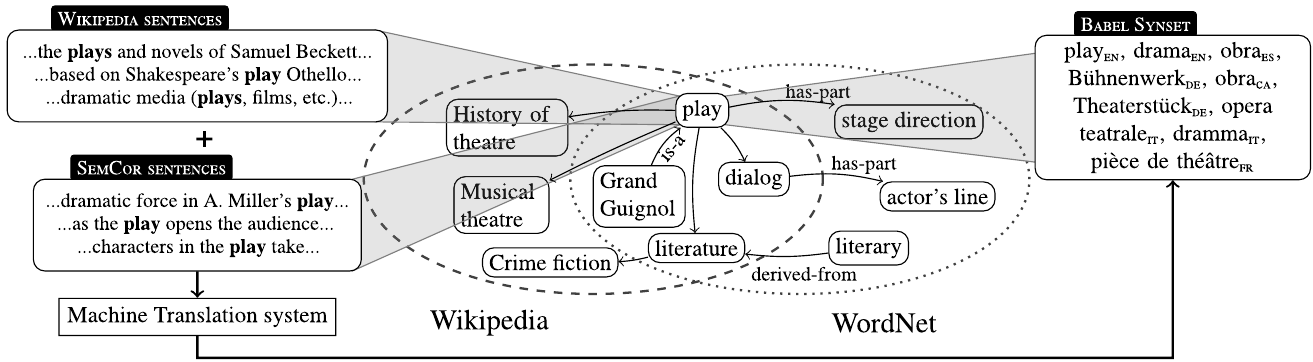

UBY is a large-scale lexical-semantic resource for natural language processing (NLP) developed at the Ubiquitous Knowledge Processing Lab (UKP) in the Department of Computer Science of the Technische Universität Darmstadt. UBY is based on the ISO standard Lexical Markup Framework (LMF) and combines information from several expert-constructed and collaboratively constructed resources for English and German. UBY applies a word sense alignment approach (a subfield of word sense disambiguation) to combine information about nouns and verbs. Currently, UBY contains 12 integrated resources in English and German.

Included resources
* English resources: WordNet, Wiktionary, Wikipedia, FrameNet, VerbNet, OmegaWiki
* German resources: German Wikipedia, German Wiktionary, OntoWiktionary, GermaNet and IMSLex-Subcat
* Multilingual resources: OmegaWiki

Format
UBY-LMF is a format for standardizing lexical resources for Natural Language Processing (NLP). UBY-LMF conforms to the IS ...

UBY-LMF

UBY-LMF is a format for standardizing lexical resources for Natural Language Processing (NLP). UBY-LMF conforms to the ISO standard for lexicons, LMF, designed within the ISO-TC37, and constitutes a so-called serialization of this abstract standard. In accordance with the LMF, all attributes and other linguistic terms introduced in UBY-LMF refer to standardized descriptions of their meaning in ISOCat. UBY-LMF has been implemented in Java and is actively developed as an open-source project on Google Code. Based on this Java implementation, the large-scale electronic lexicon UBY was created automatically; it is the result of using UBY-LMF to standardize a range of diverse lexical resources frequently used for NLP applications. As of 2013, UBY contained 10 lexicons which are pairwise interlinked at the sense level:
* English: WordNet, Wiktionary, Wikipedia, FrameNet, VerbNet, OmegaWiki
* German: Wiktionary, Wikipedia, GermaNet ...

Lexical Markup Framework

Language resource management - Lexical markup framework (LMF; ISO 24613:2008) is the International Organization for Standardization ISO/TC37 standard for natural language processing (NLP) and machine-readable dictionary (MRD) lexicons. The scope is standardization of principles and methods relating to language resources in the contexts of multilingual communication.

Objectives
The goals of LMF are to provide a common model for the creation and use of lexical resources, to manage the exchange of data between and among these resources, and to enable the merging of a large number of individual electronic resources to form extensive global electronic resources. Types of individual instantiations of LMF can include monolingual, bilingual or multilingual lexical resources. The same specifications are to be used for both small and large lexicons, for both simple and complex lexicons, and for both written and spoken lexical representations. The descriptions range from morphology, syntax, c ...

GermaNet

GermaNet is a semantic network for the German language. It relates nouns, verbs, and adjectives semantically by grouping lexical units that express the same concept into synsets and by defining semantic relations between these synsets. GermaNet is free for academic use, after signing a license. GermaNet has much in common with the English WordNet and can be viewed as an on-line thesaurus or a light-weight ontology. GermaNet has been developed and maintained at the University of Tübingen since 1997 within the research group for General and Computational Linguistics. It has been integrated into the EuroWordNet, a multilingual lexical-semantic database.

Database Contents
GermaNet partitions the lexical space into a set of concepts that are interlinked by semantic relations. A semantic concept is modeled by a synset. A synset is a set of words (called lexical units) where all the words are taken to have the same or almost the same meaning. Thus, a synset is a set of synonyms ...
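The synset model described above can be illustrated with a minimal Python sketch. This is not GermaNet's actual data format or API; the `Synset` class, the `synonyms_of` helper, the ids, and the toy German words are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Synset:
    """A concept: a set of lexical units plus typed links to other synsets."""
    synset_id: str
    lexical_units: frozenset
    # Relation name -> list of target synset ids (e.g. "hypernym").
    relations: dict = field(default_factory=dict)

    def add_relation(self, name, target_id):
        self.relations.setdefault(name, []).append(target_id)


def synonyms_of(word, synsets):
    """Return all words that share at least one synset with `word`."""
    found = set()
    for synset in synsets:
        if word in synset.lexical_units:
            found |= synset.lexical_units
    found.discard(word)
    return found


# Hypothetical toy data, not taken from the GermaNet database.
SYNSETS = [
    Synset("s1", frozenset({"Auto", "Wagen", "PKW"})),
    Synset("s2", frozenset({"Fahrzeug"})),
    Synset("s3", frozenset({"Wagen", "Waggon"})),
]
SYNSETS[0].add_relation("hypernym", "s2")
```

A word that belongs to several synsets, such as "Wagen" in this toy data, is ambiguous between the concepts those synsets model, which is why semantic relations are defined between synsets rather than between bare words.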

WordNet

WordNet is a lexical database of semantic relations between words in more than 200 languages. WordNet links words into semantic relations including synonyms, hyponyms, and meronyms. The synonyms are grouped into synsets with short definitions and usage examples. WordNet can thus be seen as a combination and extension of a dictionary and thesaurus. While it is accessible to human users via a web browser, its primary use is in automatic text analysis and artificial intelligence applications. WordNet was first created in the English language, and the English WordNet database and software tools have been released under a BSD-style license and are freely available for download from the WordNet website.

History and team members
WordNet was first created in English only in the Cognitive Science Laboratory of Princeton University under the direction of psychology professor George Armitage Miller starting in 1985 and was later directed by Christiane Fellbaum. The project was ini ...

FrameNet

FrameNet is a research and resource development project based at the International Computer Science Institute (ICSI) in Berkeley, California, which has produced an electronic resource based on a theory of meaning called frame semantics. The data that FrameNet has analyzed show that the sentence "John sold a car to Mary" essentially describes the same basic situation (semantic frame) as "Mary bought a car from John", just from a different perspective. A semantic frame is a conceptual structure describing an event, relation, or object along with its participants. The FrameNet lexical database contains over 1,200 semantic frames, 13,000 lexical units (a pairing of a word with a meaning; polysemous words are represented by several lexical units) and 202,000 example sentences. Charles J. Fillmore, who developed the theory of frame semantics which serves as the theoretical basis of FrameNet, founded the project in 1997 and continued to lead the effort until he died in 2 ...
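The "sold" and "bought" example above can be made concrete with a small Python sketch. It is a simplification under stated assumptions: the role names (`seller`, `buyer`, `goods`), the slot names, and the `to_frame` helper are hypothetical and do not reproduce FrameNet's actual frame inventory or file format.

```python
# Hypothetical, simplified lexical units for one commerce-like frame.
# Each verb maps its grammatical slots onto the same set of frame roles.
LEXICAL_UNITS = {
    "sell": {"subject": "seller", "object": "goods", "to": "buyer"},
    "buy": {"subject": "buyer", "object": "goods", "from": "seller"},
}


def to_frame(verb, slots):
    """Translate a verb's grammatical slots into frame roles."""
    mapping = LEXICAL_UNITS[verb]
    return {mapping[slot]: filler for slot, filler in slots.items()}


# "John sold a car to Mary"
sold = to_frame("sell", {"subject": "John", "object": "a car", "to": "Mary"})
# "Mary bought a car from John"
bought = to_frame("buy", {"subject": "Mary", "object": "a car", "from": "John"})
```

Both calls yield the same role assignment, which is the sense in which the two sentences describe one situation from different perspectives.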

VerbNet

The VerbNet project maps PropBank verb types to their corresponding Levin classes. It is a lexical resource that incorporates both semantic and syntactic information about its contents. VerbNet is part of the SemLink project in development at the University of Colorado.

Related projects
* UBY, a database of 10 resources including VerbNet.

External links
* Karin Kipper's dissertation, VerbNet: a broad-coverage, comprehensive verb lexicon; contains download of the VerbNet XML files and web interface to the database
* Unified Verb Index: unified index to three lexical semantic resources, VerbNet, PropBank, and FrameNet

BabelNet

BabelNet is a multilingual lexicalized semantic network and ontology developed at the NLP group of the Sapienza University of Rome (R. Navigli and S. P. Ponzetto. 2012. BabelNet: The Automatic Construction, Evaluation and Application of a Wide-Coverage Multilingual Semantic Network. Artificial Intelligence, 193, Elsevier, pp. 217-250). BabelNet was automatically created by linking Wikipedia to the most popular computational lexicon of the English language, WordNet. The integration is done using an automatic mapping and by filling in lexical gaps in resource-poor languages by using statistical machine translation. The result is an encyclopedic dictionary that provides concepts and named entities lexicalized in many languages and connected with large amounts of semantic relations. Additional lexicalizations and definitions are added by linking to free-license wordnets, OmegaWiki, the English Wiktionary, Wikidata, FrameNet, VerbNet and others. Similarly to WordNet, BabelNet groups words i ...

EuroWordNet

EuroWordNet is a system of semantic networks for European languages, based on WordNet. Each language develops its own wordnet but they are interconnected with interlingual links stored in the Interlingual Index (ILI). Unlike the original Princeton WordNet, most of the other wordnets are not freely available.

Languages
The original EuroWordNet project dealt with Dutch, Italian, Spanish, German, French, Czech, and Estonian. These wordnets are now frozen, but wordnets for other languages have been developed to varying degrees.

License
Some examples of EuroWordNet are available for free. Access to the full database, however, is charged. In some cases, OpenThesaurus and BabelNet may serve as a free alternative.

See also
* vidby
* Babbel
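The idea of connecting separate wordnets through a shared Interlingual Index can be sketched in Python as follows. The record layout, the ids, and the `equivalents` function are assumptions made for illustration and do not reflect the actual EuroWordNet database format.

```python
# Hypothetical ILI records: each language-specific synset points to one
# language-neutral index entry. Ids and words are made up for illustration.
ILI_LINKS = {
    ("nl", "hond-1"): "ili-0001",
    ("es", "perro-1"): "ili-0001",
    ("de", "Hund-1"): "ili-0001",
    ("nl", "kat-1"): "ili-0002",
    ("es", "gato-1"): "ili-0002",
}


def equivalents(language, synset_id, target_language):
    """Find synsets in `target_language` that share an ILI entry with the source."""
    ili_id = ILI_LINKS.get((language, synset_id))
    if ili_id is None:
        return []  # the source synset has no interlingual link
    return sorted(
        sid
        for (lang, sid), linked in ILI_LINKS.items()
        if linked == ili_id and lang == target_language
    )
```

Because every wordnet links only to the index, adding a language in this sketch requires links to the index entries rather than to each of the other wordnets.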

Machine Translation

Machine translation, sometimes referred to by the abbreviation MT (not to be confused with computer-aided translation, machine-aided human translation or interactive translation), is a sub-field of computational linguistics that investigates the use of software to translate text or speech from one language to another. On a basic level, MT performs mechanical substitution of words in one language for words in another, but that alone rarely produces a good translation because recognition of whole phrases and their closest counterparts in the target language is needed. Not all words in one language have equivalent words in another language, and many words have more than one meaning. Solving this problem with corpus statistical and neural techniques is a rapidly growing field that is leading to better translations, handling differences in linguistic typology, translation of idioms, and the isolation of anomalies. Current machine translation software often allows for customizat ...
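A toy Python sketch shows why word-for-word substitution falls short and why whole phrases need to be recognized. The German-to-English tables and both function names are hypothetical, and the phrase lookup is a deliberately naive stand-in, not how statistical or neural systems work.

```python
# Hypothetical toy German-to-English tables, for illustration only.
WORD_TABLE = {"ich": "I", "habe": "have", "hunger": "hunger"}
PHRASE_TABLE = {"ich habe hunger": "I am hungry"}


def substitute_words(sentence, table):
    """Replace each word independently; unknown words pass through unchanged."""
    return " ".join(table.get(word.lower(), word) for word in sentence.split())


def translate(sentence, phrases, words):
    """Prefer a whole-phrase match, then fall back to word substitution."""
    key = sentence.lower()
    if key in phrases:
        return phrases[key]
    return substitute_words(sentence, words)
```

For the input "Ich habe Hunger", `substitute_words` produces the literal "I have hunger", while `translate` finds the phrase-level counterpart "I am hungry".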

Text Classification

Document classification or document categorization is a problem in library science, information science and computer science. The task is to assign a document to one or more classes or categories. This may be done "manually" (or "intellectually") or algorithmically. The intellectual classification of documents has mostly been the province of library science, while the algorithmic classification of documents is mainly in information science and computer science. The problems are overlapping, however, and there is therefore interdisciplinary research on document classification. The documents to be classified may be texts, images, music, etc. Each kind of document possesses its special classification problems. When not otherwise specified, text classification is implied. Documents may be classified according to their subjects or according to other attributes (such as document type, author, printing year etc.). In the rest of this article only subject classification is considered. T ...

Word Sense Disambiguation

Word-sense disambiguation (WSD) is the process of identifying which sense of a word is meant in a sentence or other segment of context. In human language processing and cognition, it is usually subconscious/automatic but can often come to conscious attention when ambiguity impairs clarity of communication, given the pervasive polysemy in natural language. In computational linguistics, it is an open problem that affects other computer-related writing, such as discourse, improving relevance of search engines, anaphora resolution, coherence, and inference. Given that natural language requires reflection of neurological reality, as shaped by the abilities provided by the brain's neural networks, computer science has had a long-term challenge in developing the ability in computers to do natural language processing and machine learning. Many techniques have been researched, including dictionary-based methods that use the knowledge encoded in lexical resources, supervised machine le ...
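As one illustration of a dictionary-based method, the following Python sketch implements a simplified gloss-overlap heuristic in the spirit of the Lesk algorithm. The sense labels, glosses, and stopword list are hypothetical toy data, and the text does not say that any particular system works this way.

```python
def simplified_lesk(word, context, sense_glosses, stopwords=frozenset()):
    """Pick the sense whose gloss shares the most words with the context."""
    context_words = {w.lower() for w in context.split()} - stopwords
    best_sense, best_overlap = None, -1
    for sense, gloss in sense_glosses[word].items():
        gloss_words = {w.lower() for w in gloss.split()} - stopwords
        overlap = len(context_words & gloss_words)
        # Ties keep the sense listed first in the dictionary.
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense


# Hypothetical glosses, not copied from any real lexical resource.
GLOSSES = {
    "bank": {
        "bank.finance": "an institution that accepts deposits and lends money",
        "bank.river": "sloping land beside a body of water such as a river",
    }
}
STOP = frozenset({"a", "an", "the", "of", "and", "that", "as", "such", "to", "at"})
```

The sketch matches exact word forms only, so "deposit" in a context does not match "deposits" in a gloss; practical systems typically normalize word forms before comparing.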