
Trimmed Estimator

In statistics, a trimmed estimator is an estimator derived from another estimator by excluding some of the extreme values, a process called truncation. This is generally done to obtain a more robust statistic, and the extreme values are considered outliers. Trimmed estimators also often have higher efficiency for mixture distributions and heavy-tailed distributions than the corresponding untrimmed estimator, at the cost of lower efficiency for other distributions, such as the normal distribution. Given an estimator, the x% trimmed version is obtained by discarding the x% lowest observations, the x% highest observations, or both: it is a statistic on the ''middle'' of the data. For instance, the 5% trimmed mean is obtained by taking the mean of the 5% to 95% range. In some cases a trimmed estimator discards a fixed number of points (such as maximum and minimum) instead of a percentage. Examples: The median is the most trimmed statistic (nominally 50%), as it discards all but the most central data ...
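
The two-sided trimming described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `trimmed_mean` is hypothetical, the proportion is trimmed from each end, and the number of discarded points is rounded down, which is one of several possible conventions.

```python
def trimmed_mean(values, proportion):
    """Mean of the data after discarding `proportion` of the points from each end.

    Assumption: the count of discarded points per tail is floored.
    """
    if not 0 <= proportion < 0.5:
        raise ValueError("proportion must be in [0, 0.5)")
    data = sorted(values)
    k = int(len(data) * proportion)      # points cut from each tail
    kept = data[k:len(data) - k]         # the "middle" of the data
    return sum(kept) / len(kept)


# Twenty observations with one gross outlier.
sample = list(range(1, 20)) + [1000]
plain_mean = sum(sample) / len(sample)   # pulled upward by the outlier
robust_mean = trimmed_mean(sample, 0.05) # drops the smallest and largest value
```

With this sample the untrimmed mean is 59.5, while the 5% trimmed mean is 10.5, which shows how a single extreme value stops dominating the estimate once it is discarded.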

Statistics

Statistics (from German: ''Statistik'', "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006) ''The Oxford Dictionary of Statistical Terms'', Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling as ...

Interquartile Mean

The interquartile mean (IQM) (or midmean) is a statistical measure of central tendency based on the truncated mean of the interquartile range. The IQM is very similar to the scoring method used in sports that are evaluated by a panel of judges: ''discard the lowest and the highest scores; calculate the mean value of the remaining scores''. Calculation: In calculation of the IQM, only the data between the first and third quartiles is used, and the lowest 25% and the highest 25% of the data are discarded. : x_\mathrm{IQM} = \frac{2}{n} \sum_{i=\frac{n}{4}+1}^{\frac{3n}{4}} x_i assuming the values have been ordered. Examples: Dataset size divisible by four. The method is best explained with an example. Consider the following dataset: :5, 8, 4, 38, 8, 6, 9, 7, 7, 3, 1, 6 First sort the list from lowest to highest: :1, 3, 4, 5, 6, 6, 7, 7, 8, 8, 9, 38 There are 12 observations (datapoints) in the dataset, thus we have 4 quartiles of 3 numbers. Discard the lowest and the highest 3 values (1, 3, 4 and 8, 9, 38), leaving: :5, 6, 6, 7, 7, 8 We now ...
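
The worked example can be reproduced with a short Python sketch. It assumes the dataset size is divisible by four, as in the example; the function name `interquartile_mean` is hypothetical, and other sizes would need a weighting rule that this sketch does not cover.

```python
def interquartile_mean(values):
    """IQM for a dataset whose size is divisible by four (sketch only)."""
    data = sorted(values)
    n = len(data)
    if n == 0 or n % 4 != 0:
        raise ValueError("this sketch only handles non-empty sizes divisible by four")
    q = n // 4
    middle = data[q:3 * q]          # drop the lowest and highest quarter
    return 2 * sum(middle) / n      # same as the mean of the middle half


dataset = [5, 8, 4, 38, 8, 6, 9, 7, 7, 3, 1, 6]
iqm = interquartile_mean(dataset)   # middle half is 5, 6, 6, 7, 7, 8
```

For this dataset the retained values sum to 39, so the IQM is 39 / 6 = 6.5, and the outlier 38 has no influence on it.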

Pearson's Skewness Coefficients

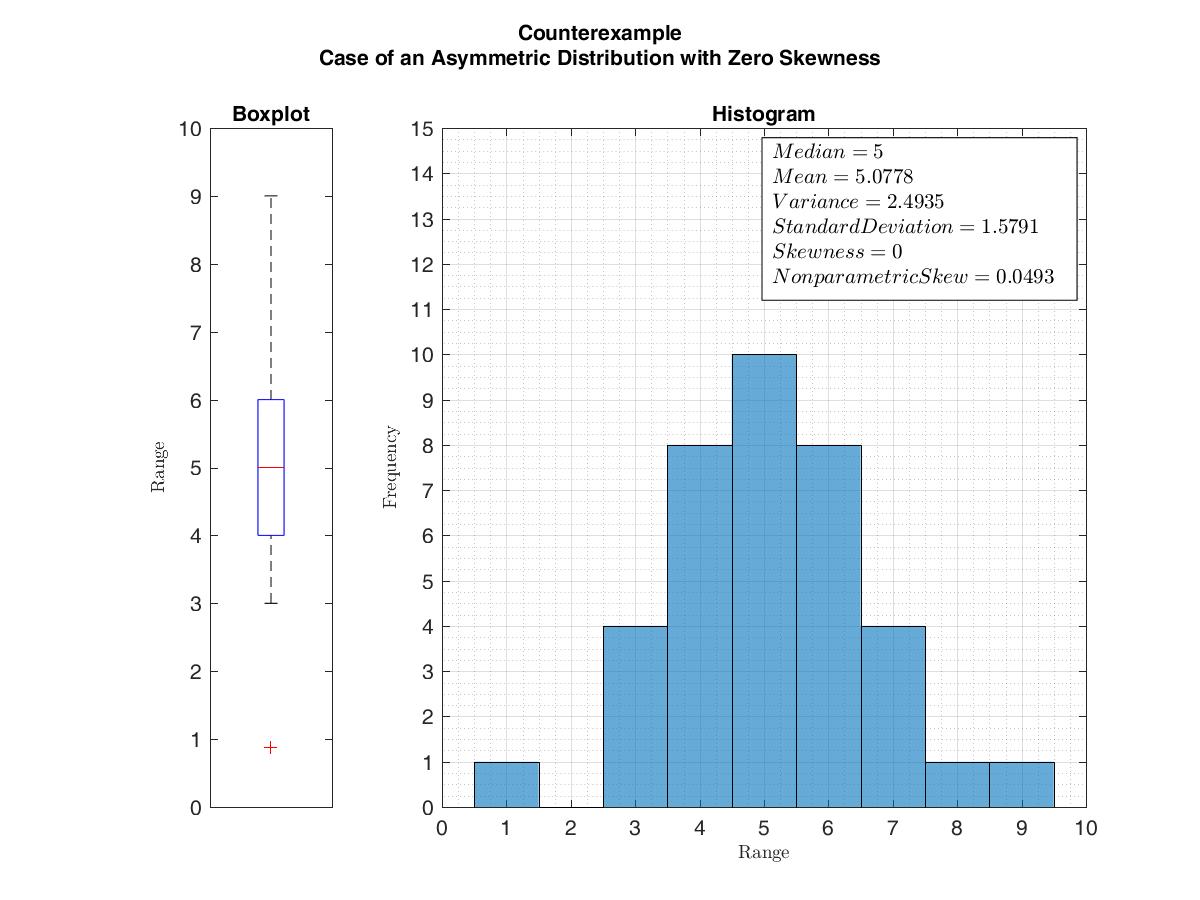

In probability theory and statistics, skewness is a measure of the asymmetry of the probability distribution of a real-valued random variable about its mean. The skewness value can be positive, zero, negative, or undefined. For a unimodal distribution, negative skew commonly indicates that the ''tail'' is on the left side of the distribution, and positive skew indicates that the tail is on the right. In cases where one tail is long but the other tail is fat, skewness does not obey a simple rule. For example, a zero value means that the tails on both sides of the mean balance out overall; this is the case for a symmetric distribution, but can also be true for an asymmetric distribution where one tail is long and thin, and the other is short but fat. Introduction: Consider the two distributions in the figure just below. Within each graph, the values on the right side of the distribution taper differently from the values on the left side. These tapering sides are ...

Nonparametric Skew

In statistics and probability theory, the nonparametric skew is a statistic occasionally used with random variables that take real values (Arnold BC, Groeneveld RA (1995) Measuring skewness with respect to the mode. The American Statistician 49 (1) 34–38, DOI:10.1080/00031305.1995.10476109; Rubio F.J.; Steel M.F.J. (2012) "On the Marshall–Olkin transformation as a skewing mechanism". ''Computational Statistics & Data Analysis''). It is a measure of the skewness of a random variable's distribution, that is, the distribution's tendency to "lean" to one side or the other of the mean. Its calculation does not require any knowledge of the form of the underlying distribution, hence the name nonparametric. It has some desirable properties: it is zero for any symmetric distribution; it is unaffected by a scale shift; and it reveals either left- or right-skewness equally well. In some statistical samples it has been shown to be less powerful (Tabor J (2010) Investiga ...

Skewness

In probability theory and statistics, skewness is a measure of the asymmetry of the probability distribution of a real-valued random variable about its mean. The skewness value can be positive, zero, negative, or undefined. For a unimodal distribution, negative skew commonly indicates that the ''tail'' is on the left side of the distribution, and positive skew indicates that the tail is on the right. In cases where one tail is long but the other tail is fat, skewness does not obey a simple rule. For example, a zero value means that the tails on both sides of the mean balance out overall; this is the case for a symmetric distribution, but can also be true for an asymmetric distribution where one tail is long and thin, and the other is short but fat. Introduction: Consider the two distributions in the figure just below. Within each graph, the values on the right side of the distribution taper differently from the values on the left side. These tapering sides are called ''tail ...

Consistent Estimator

In statistics, a consistent estimator or asymptotically consistent estimator is an estimator (a rule for computing estimates of a parameter ''θ''0) having the property that as the number of data points used increases indefinitely, the resulting sequence of estimates converges in probability to ''θ''0. This means that the distributions of the estimates become more and more concentrated near the true value of the parameter being estimated, so that the probability of the estimator being arbitrarily close to ''θ''0 converges to one. In practice one constructs an estimator as a function of an available sample of size ''n'', and then imagines being able to keep collecting data and expanding the sample ''ad infinitum''. In this way one would obtain a sequence of estimates indexed by ''n'', and consistency is a property of what occurs as the sample size “grows to infinity”. If the sequence of estimates can be mathematically shown to converge in probability to the true value ''θ''0 ...

Unbiased Estimator

In statistics, the bias of an estimator (or bias function) is the difference between this estimator's expected value and the true value of the parameter being estimated. An estimator or decision rule with zero bias is called ''unbiased''. In statistics, "bias" is a property of an estimator. Bias is a distinct concept from consistency: consistent estimators converge in probability to the true value of the parameter, but may be biased or unbiased; see bias versus consistency for more. All else being equal, an unbiased estimator is preferable to a biased estimator, although in practice, biased estimators (with generally small bias) are frequently used. When a biased estimator is used, bounds of the bias are calculated. A biased estimator may be used for various reasons: because an unbiased estimator does not exist without further assumptions about a population; because an estimator is difficult to compute (as in unbiased estimation of standard deviation); because a biased estimato ...

Parameter Estimation

Estimation theory is a branch of statistics that deals with estimating the values of parameters based on measured empirical data that has a random component. The parameters describe an underlying physical setting in such a way that their value affects the distribution of the measured data. An ''estimator'' attempts to approximate the unknown parameters using the measurements. In estimation theory, two approaches are generally considered: the probabilistic approach (described in this article), which assumes that the measured data is random with a probability distribution dependent on the parameters of interest; and the set-membership approach, which assumes that the measured data vector belongs to a set which depends on the parameter vector. Examples: For example, it is desired to estimate the proportion of a population of voters who will vote for a particular candidate. That proportion is the parameter sought; the estimate is based on a small random sample of voters. Alternatively, it is ...

L-estimator

In statistics, an L-estimator is an estimator which is a linear combination of order statistics of the measurements (which is also called an L-statistic). This can be as little as a single point, as in the median (of an odd number of values), or as many as all points, as in the mean. The main benefits of L-estimators are that they are often extremely simple and often robust: assuming sorted data, they are very easy to calculate and interpret, and are often resistant to outliers. They thus are useful in robust statistics, as descriptive statistics, in statistics education, and when computation is difficult. However, they are inefficient, and in modern robust statistics M-estimators are preferred, though these are much more difficult computationally. In many circumstances L-estimators are reasonably efficient, and thus adequate for initial estimation. Examples: A basic example is the median. Given ''n'' values x_1, \ldots, x_n, if n=2k+1 is odd, the median equals x ...
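
To make the "linear combination of order statistics" concrete, here is a minimal Python sketch. All names (`l_estimate`, `median_weights`, `mean_weights`) are hypothetical, and averaging the two central order statistics for an even number of values is an assumed convention, not something stated in the excerpt.

```python
def l_estimate(values, weights):
    """Weighted sum of the sorted values (the order statistics)."""
    ordered = sorted(values)
    if len(weights) != len(ordered):
        raise ValueError("need exactly one weight per observation")
    return sum(w * x for w, x in zip(weights, ordered))


def median_weights(n):
    """All weight on the central order statistic(s)."""
    w = [0.0] * n
    if n % 2 == 1:
        w[n // 2] = 1.0                   # single middle point
    else:
        w[n // 2 - 1] = w[n // 2] = 0.5   # assumed convention for even n
    return w


def mean_weights(n):
    """Equal weight on every order statistic."""
    return [1.0 / n] * n


data = [7, 1, 3, 9, 5]
median = l_estimate(data, median_weights(len(data)))
mean = l_estimate(data, mean_weights(len(data)))
```

The only difference between the two estimators is the weight vector: the median uses a single point of the sorted data, while the mean uses all of them equally.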

Interdecile Range

In statistics, the interdecile range is the difference between the first and the ninth deciles (10% and 90%). The interdecile range is a measure of statistical dispersion of the values in a set of data, similar to the range and the interquartile range, and can be computed from the (non-parametric) seven-number summary. Despite its simplicity, the interdecile range of a sample drawn from a normal distribution can be divided by 2.56 to give a reasonably efficient estimator of the standard deviation of a normal distribution. This is derived from the fact that the lower (respectively upper) decile of a normal distribution with arbitrary variance is equal to the mean minus (respectively, plus) 1.28 times the standard deviation. A more efficient estimator is given by instead taking the 7% trimmed range (the difference between the 7th and 93rd percentiles) and dividing by 3 (corresponding to 86% of the data falling within ±1.5 standard devi ...
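
The divide-by-2.56 rule can be checked with Python's standard `statistics` module. This is a sketch under assumptions: the helper names are hypothetical, `statistics.quantiles` is used with its default interpolation method (other quantile definitions give slightly different deciles), and the test data is an evenly spaced grid of normal quantiles rather than a random sample.

```python
from statistics import NormalDist, quantiles


def interdecile_range(values):
    """Difference between the ninth and first deciles."""
    deciles = quantiles(values, n=10)   # nine cut points: 10%, 20%, ..., 90%
    return deciles[-1] - deciles[0]


def sd_from_interdecile_range(values):
    """Estimate of the standard deviation, assuming normally distributed data."""
    return interdecile_range(values) / 2.56


# The upper decile of a standard normal lies about 1.28 standard deviations
# above the mean, hence the factor 2 * 1.28 = 2.56.
upper_decile_z = NormalDist().inv_cdf(0.9)

# Deterministic stand-in for a normal sample with standard deviation 2.
grid = [NormalDist(mu=0, sigma=2).inv_cdf((i + 0.5) / 1000) for i in range(1000)]
estimated_sd = sd_from_interdecile_range(grid)
```

On this grid the estimate comes out close to the true value of 2; on real samples it will fluctuate, and the estimator is only meaningful when the normality assumption is reasonable.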

Range (statistics)

In statistics, the range of a set of data is the difference between the largest and smallest values, the result of subtracting the sample minimum from the sample maximum. It is expressed in the same units as the data. In descriptive statistics, range is the size of the smallest interval which contains all the data and provides an indication of statistical dispersion. Since it only depends on two of the observations, it is most useful in representing the dispersion of small data sets. For continuous IID random variables: For ''n'' independent and identically distributed continuous random variables X_1, X_2, \ldots, X_n with the cumulative distribution function G(x) and a probability density function g(x), let T denote their range, that is, T = \max(X_1, X_2, \ldots, X_n) - \min(X_1, X_2, \ldots, X_n). Distribution: The range, T, has the cumulative distribution function ::F(t) = n \int_{-\infty}^{\infty} g(x) [G(x+t) - G(x)]^{n-1} \, \text{d}x. Gumbel notes that the "beauty ...
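
As a numerical sanity check, the standard formula for the distribution of the range, F(t) = n ∫ g(x) [G(x+t) − G(x)]^(n−1) dx, can be evaluated for the uniform distribution on [0, 1], where it reduces to the closed form n t^(n−1) − (n−1) t^n. The sketch below is illustrative only: the function names are hypothetical, the uniform distribution is an assumed test case, and the integral is approximated with a simple midpoint rule.

```python
def sample_range(values):
    """Largest value minus smallest value."""
    return max(values) - min(values)


def uniform_cdf(x):
    """CDF G(x) of the uniform distribution on [0, 1]."""
    return min(max(x, 0.0), 1.0)


def range_cdf_uniform(t, n, steps=10_000):
    """Midpoint-rule evaluation of n * integral of g(x) [G(x+t) - G(x)]^(n-1).

    For the uniform case g(x) = 1 on [0, 1] and 0 elsewhere, so the
    integral only runs over [0, 1].
    """
    h = 1.0 / steps
    total = 0.0
    for i in range(steps):
        x = (i + 0.5) * h
        total += (uniform_cdf(x + t) - uniform_cdf(x)) ** (n - 1)
    return n * total * h


def range_cdf_uniform_closed(t, n):
    """Closed form of the same CDF for 0 <= t <= 1."""
    return n * t ** (n - 1) - (n - 1) * t ** n


numeric = range_cdf_uniform(0.5, 5)
exact = range_cdf_uniform_closed(0.5, 5)   # 5 * 0.5**4 - 4 * 0.5**5 = 0.1875
```

The two values agree to within the accuracy of the numerical integration, which supports the reading that the bracketed difference is raised to the power n − 1.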

Interquartile Range

In descriptive statistics, the interquartile range (IQR) is a measure of statistical dispersion, which is the spread of the data. The IQR may also be called the midspread, middle 50%, fourth spread, or H‑spread. It is defined as the difference between the 75th and 25th percentiles of the data. To calculate the IQR, the data set is divided into quartiles, or four rank-ordered even parts via linear interpolation. These quartiles are denoted by ''Q''1 (also called the lower quartile), ''Q''2 (the median), and ''Q''3 (also called the upper quartile). The lower quartile corresponds with the 25th percentile and the upper quartile corresponds with the 75th percentile, so IQR = ''Q''3 − ''Q''1. The IQR is an example of a trimmed estimator, defined as the 25% trimmed range, which enhances the accuracy of dataset statistics by dropping lower-contribution, outlying points. It is also used as a robust measure of scale. It can be clearly visualized by the box on a box plot. Use: Unlike tota ...
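
A short Python sketch of IQR = Q3 − Q1 follows. It assumes the `inclusive` interpolation method of `statistics.quantiles`; quartile conventions differ between tools, so other software may report slightly different values for the same data. The helper name `interquartile_range` is hypothetical.

```python
from statistics import quantiles


def interquartile_range(values):
    """Q3 - Q1, with quartiles found by linear interpolation."""
    q1, _q2, q3 = quantiles(values, n=4, method="inclusive")
    return q3 - q1


data = [5, 8, 4, 38, 8, 6, 9, 7, 7, 3, 1, 6]
iqr = interquartile_range(data)
full_range = max(data) - min(data)

# Making the outlier ten times larger changes the range but not the IQR.
inflated = [380 if x == 38 else x for x in data]
iqr_inflated = interquartile_range(inflated)
```

Under this convention the quartiles of the example data are 4.75 and 8.0, so the IQR is 3.25 while the full range is 37, and inflating the single outlier leaves the IQR unchanged.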