
TurboVNC

VirtualGL is an open-source software package that redirects the 3D rendering commands from Unix and Linux OpenGL applications to 3D accelerator hardware in a dedicated server and sends the rendered output to a (thin) client located elsewhere on the network. On the server side, VirtualGL consists of a library that handles the redirection and a wrapper program that instructs applications to use this library. Clients can connect to the server either using a remote X11 connection or using an X11 proxy such as a VNC server. In the case of an X11 connection, some client-side VirtualGL software is also needed to receive the rendered graphics output separately from the X11 stream. In the case of a VNC connection, no specific client-side software is needed other than the VNC client itself.

Problem: The performance of OpenGL applications can be greatly improved by rendering the graphics on dedicated hardware accelerators that are typically present in a GPU. GPUs have become so commonplace that ap ...

TightVNC

TightVNC is a free and open-source remote desktop software server and client application for Linux and Windows. A server for macOS is available under a commercial source code license only, without SDK or binary version provided. Constantin Kaplinsky developed TightVNC, using and extending the RFB protocol of Virtual Network Computing (VNC) to allow end-users to control another computer's screen remotely.

Encodings: TightVNC uses so-called "tight encoding" of areas, which improves performance over low-bandwidth connections. It is effectively a combination of the JPEG and zlib compression mechanisms. It is possible to watch videos and play DirectX games through TightVNC over a broadband connection, albeit at a low frame rate. TightVNC includes many other common features of VNC derivatives, such as file transfer capability.

Compatibility: TightVNC is cross-compatible with other client and server implementations of VNC; however, tight encoding is not supported by most other implementa ...

Sun Visualization System

Sun Visualization System was a sharable visualization product introduced by Sun Microsystems in January 2007. It used other Sun technologies, including Sun servers, Solaris, Sun Ray Ultra-Thin Clients, and Sun Grid Engine. The Sun Visualization System software was based on several open source technologies: Chromium to perform distributed 3D rendering, VirtualGL to re-route 3D rendering jobs to arbitrary graphics devices, and TurboVNC to deliver the rendered 3D images to a client or clients. Sun sponsored and/or contributed changes back to these projects throughout the life of the Sun Visualization System. In January 2009, the VirtualGL project reported that it was no longer being sponsored by Sun Microsystems, and in April 2009, Sun announced that it was discontinuing the Sun Shared Visualization and Sun Scalable Visualization products. Customers were able to order the products through July 31, 2009, and service and support were provided until October 2014. Main hardware comp ...

Virtual Network Computing

Virtual Network Computing (VNC) is a graphical desktop-sharing system that uses the Remote Frame Buffer protocol (RFB) to remotely control another computer. It transmits the keyboard and mouse input from one computer to another, relaying the graphical-screen updates, over a network. VNC is platform-independent – there are clients and servers for many GUI-based operating systems and for Java. Multiple clients may connect to a VNC server at the same time. Popular uses for this technology include remote technical support and accessing files on one's work computer from one's home computer, or vice versa. VNC was originally developed at the Olivetti & Oracle Research Lab in Cambridge, United Kingdom. The original VNC source code and many modern derivatives are open source under the GNU General Public License. There are a number of variants of VNC which offer their own particular functionality; e.g., some optimised for Microsoft Windows, or offering file transfer (not par ...

TigerVNC

TigerVNC is an open source Virtual Network Computing (VNC) server and client software, started as a fork of TightVNC in 2009. The client supports Windows, Linux and macOS. The server supports Linux. There is no server for macOS, and the Windows server is no longer maintained as of release 1.11.0. Red Hat, Cendio AB, and TurboVNC maintainers started this fork because RealVNC had focused on their enterprise non-open VNC and no TightVNC update had appeared since 2006. In the past few years, however, Cendio AB, which uses it for its product ThinLinc, has been the main contributor to the project. TigerVNC is fully open-source, with development and discussion done via publicly accessible mailing lists and repositories. TigerVNC has a different feature set than TightVNC, despite its origins. For example, TigerVNC adds encryption for all supported operating systems and not just Linux. Conversely, TightVNC has features that TigerVNC doesn't have, such as file transfers. TigerVNC focuses on performan ...

Library (computing)

In computer science, a library is a collection of non-volatile resources used by computer programs, often for software development. These may include configuration data, documentation, help data, message templates, pre-written code and subroutines, classes, values or type specifications. In IBM's OS/360 and its successors they are referred to as partitioned data sets. A library is also a collection of implementations of behavior, written in terms of a language, that has a well-defined interface by which the behavior is invoked. For instance, people who want to write a higher-level program can use a library to make system calls instead of implementing those system calls over and over again. In addition, the behavior is provided for reuse by multiple independent programs. A program invokes the library-provided behavior via a mechanism of the language. For example, in a simple imperative language such as C, the behavior in a library is invoked by using C's normal func ...

Quad Buffering

In computer science, multiple buffering is the use of more than one buffer to hold a block of data, so that a "reader" will see a complete (though perhaps old) version of the data, rather than a partially updated version of the data being created by a "writer". It is very commonly used for computer display images. It is also used to avoid the need to use dual-ported RAM (DPRAM) when the readers and writers are different devices.

Description: An easy way to explain how multiple buffering works is to take a real-world example. It is a nice sunny day and you have decided to get the paddling pool out, only you cannot find your garden hose. You'll have to fill the pool with buckets. So you fill one bucket (or buffer) from the tap, turn the tap off, walk over to the pool, pour the water in, and walk back to the tap to repeat the exercise. This is analogous to single buffering. The tap has to be turned off while you "process" the bucket of water. Now consider how you would do it if you ...

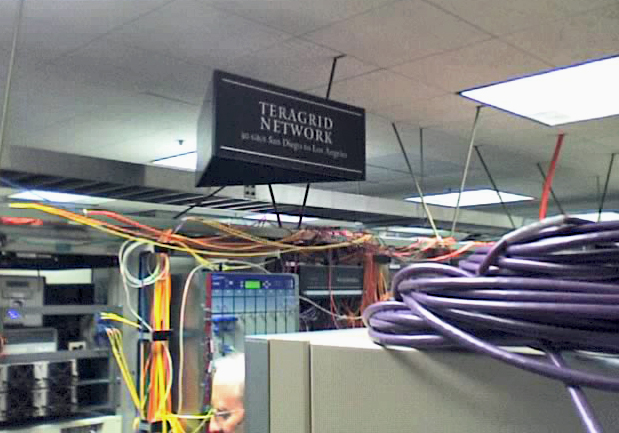

TeraGrid

TeraGrid was an e-Science grid computing infrastructure combining resources at eleven partner sites. The project started in 2001 and operated from 2004 through 2011. The TeraGrid integrated high-performance computers, data resources and tools, and experimental facilities. Resources included more than a petaflops of computing capability and more than 30 petabytes of online and archival data storage, with rapid access and retrieval over high-performance computer network connections. Researchers could also access more than 100 discipline-specific databases. TeraGrid was coordinated through the Grid Infrastructure Group (GIG) at the University of Chicago, working in partnership with the resource provider sites in the United States.

History: The US National Science Foundation (NSF) issued a solicitation asking for a "distributed terascale facility" from program director Richard L. Hilderbrandt. The TeraGrid project was launched in August 2001 with $53 million in funding to four sites: ...

University Of Texas At Austin

The University of Texas at Austin (UT Austin, UT, or Texas) is a public research university in Austin, Texas. It was founded in 1883 and is the oldest institution in the University of Texas System. With 40,916 undergraduate students, 11,075 graduate students and 3,133 teaching faculty as of Fall 2021, it is also the largest institution in the system. It is ranked among the top universities in the world by major college and university rankings, and admission to its programs is considered highly selective. UT Austin is considered one of the United States's Public Ivies. The university is a major center for academic research, with research expenditures totaling $679.8 million for fiscal year 2018. It joined the Association of American Universities in 1929. The university houses seven museums and seventeen libraries, including the LBJ Presidential Library and the Blanton Museum of Art, and operates various auxiliary research facilities, such as the J. J. Pickle Researc ...

Texas Advanced Computing Center

The Texas Advanced Computing Center (TACC) at the University of Texas at Austin, United States, is an advanced computing research center that provides comprehensive advanced computing resources and support services to researchers in Texas and across the USA. The mission of TACC is to enable discoveries that advance science and society through the application of advanced computing technologies. Specializing in high performance computing, scientific visualization, data analysis & storage systems, software, research & development and portal interfaces, TACC deploys and operates advanced computational infrastructure to enable computational research activities of faculty, staff, and students of UT Austin. TACC also provides consulting, technical documentation, and training to support researchers who use these resources. TACC staff members conduct research and development in applications and algorithms, computing systems design/architecture, and programming tools and environments. Foun ...

Double Buffering

In computer science, multiple buffering is the use of more than one buffer to hold a block of data, so that a "reader" will see a complete (though perhaps old) version of the data, rather than a partially updated version of the data being created by a "writer". It is very commonly used for computer display images. It is also used to avoid the need to use dual-ported RAM (DPRAM) when the readers and writers are different devices.

Description: An easy way to explain how multiple buffering works is to take a real-world example. It is a nice sunny day and you have decided to get the paddling pool out, only you cannot find your garden hose. You'll have to fill the pool with buckets. So you fill one bucket (or buffer) from the tap, turn the tap off, walk over to the pool, pour the water in, and walk back to the tap to repeat the exercise. This is analogous to single buffering. The tap has to be turned off while you "process" the bucket of water. Now consider how you would do it if yo ...
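
The reader/writer idea in the first sentence of this entry can be illustrated with a minimal Python sketch of double buffering. The class `DoubleBuffer` and its method names are hypothetical, chosen only for illustration; the sketch is single-threaded and ignores synchronization, which a real display pipeline would need.

```python
class DoubleBuffer:
    """Two equally sized buffers: the reader sees `front`, the writer fills `back`."""

    def __init__(self, size):
        self.front = [0] * size  # complete (possibly old) data visible to the reader
        self.back = [0] * size   # data currently being built by the writer

    def write(self, index, value):
        # Writes go to the back buffer only, so the reader never sees them half-done.
        self.back[index] = value

    def swap(self):
        # Publish the finished data; the old front buffer becomes the new scratch area.
        self.front, self.back = self.back, self.front

    def read(self):
        # Return a copy of the complete front buffer.
        return list(self.front)


buf = DoubleBuffer(3)
buf.write(0, 7)
partial = buf.read()   # writer is mid-update; reader still gets the old, complete data
buf.write(1, 8)
buf.write(2, 9)
buf.swap()
complete = buf.read()  # after the swap the reader gets the whole new data at once
```

Here `partial` holds the old contents even though one element has already been written, which is the "complete though perhaps old" property the entry describes.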

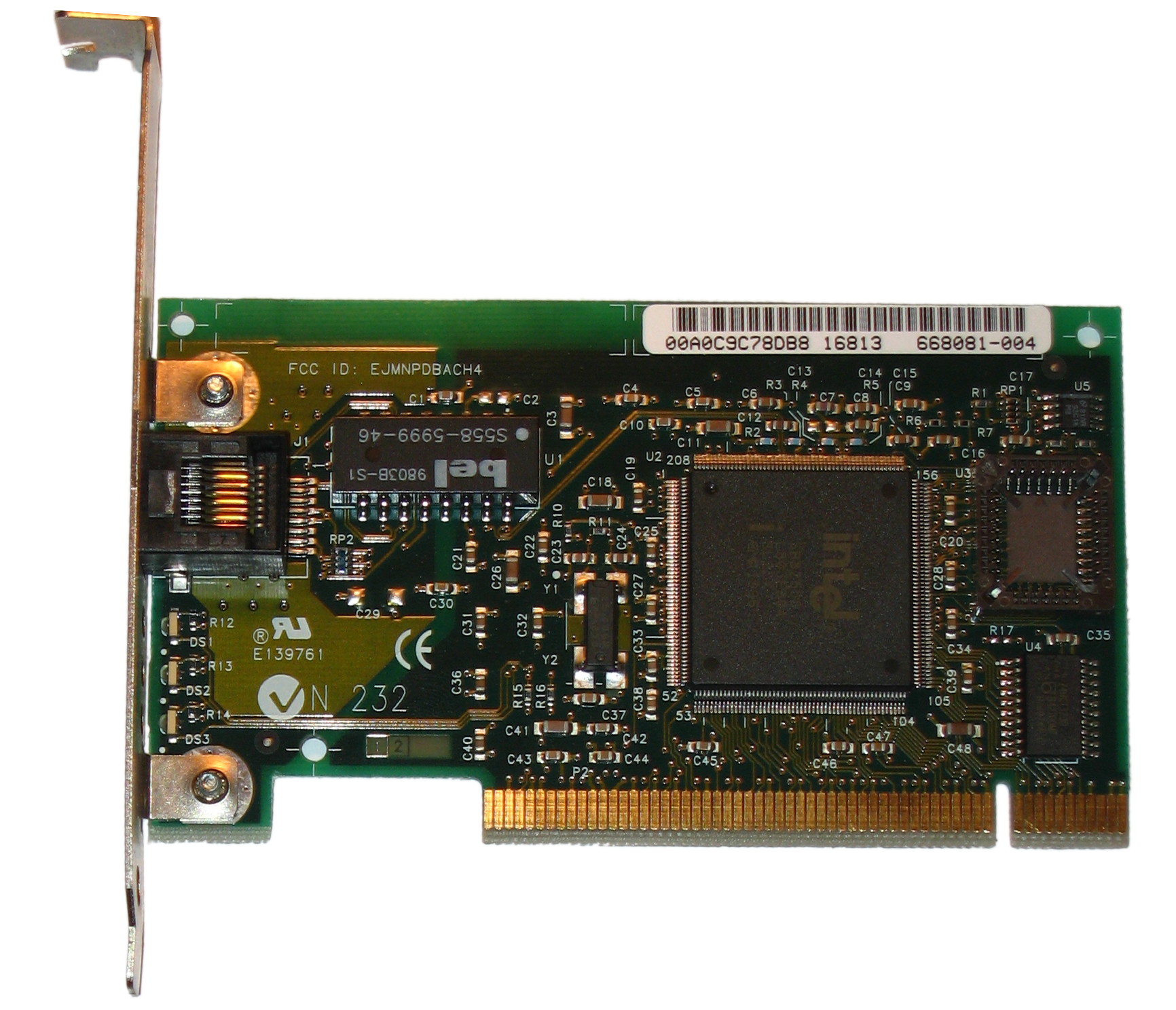

Fast Ethernet

In computer networking, Fast Ethernet physical layers carry traffic at the nominal rate of 100 Mbit/s. The prior Ethernet speed was 10 Mbit/s. Of the Fast Ethernet physical layers, 100BASE-TX is by far the most common. Fast Ethernet was introduced in 1995 as the IEEE 802.3u standard and remained the fastest version of Ethernet for three years before the introduction of Gigabit Ethernet. The acronym "GE/FE" is sometimes used for devices supporting both standards.

Nomenclature: The "100" in the media type designation refers to the transmission speed of 100 Mbit/s, while the "BASE" refers to baseband signaling. The letter following the dash ("T" or "F") refers to the physical medium that carries the signal (twisted pair or fiber, respectively), while the last character ("X", "4", etc.) refers to the line code method used. Fast Ethernet is sometimes referred to as 100BASE-X, where "X" is a placeholder for the FX and TX variants.

General design: Fast Ethernet ...
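
The naming scheme described in this entry can be sketched as a small Python parser. The function `parse_media_type` and the dictionary keys it returns are hypothetical names used for illustration; the sketch covers only the parts of the designation named in the text (speed, "BASE", the medium letter "T" or "F", and the trailing line-code character) and is not a complete model of IEEE 802.3 media types.

```python
import re

# Medium letters named in the text: "T" for twisted pair, "F" for fiber.
MEDIA = {"T": "twisted pair", "F": "fiber"}


def parse_media_type(name):
    """Split a designation such as '100BASE-TX' into the parts described above."""
    match = re.fullmatch(r"(\d+)BASE-([TF])(\w*)", name)
    if match is None:
        raise ValueError(f"unrecognized media type: {name!r}")
    speed, medium, line_code = match.groups()
    return {
        "speed_mbit_s": int(speed),  # "100" means 100 Mbit/s
        "signaling": "baseband",     # "BASE" means baseband signaling
        "medium": MEDIA[medium],     # letter following the dash
        "line_code": line_code,      # last character, e.g. "X" or "4"
    }


tx = parse_media_type("100BASE-TX")
fx = parse_media_type("100BASE-FX")
t4 = parse_media_type("100BASE-T4")
```

For `100BASE-TX` the sketch reports a speed of 100 Mbit/s, baseband signaling, a twisted-pair medium, and line code "X".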
