
Titan (supercomputer)

Titan or OLCF-3 was a supercomputer built by Cray at Oak Ridge National Laboratory for use in a variety of science projects. Titan was an upgrade of Jaguar, a previous supercomputer at Oak Ridge, that used graphics processing units (GPUs) in addition to conventional central processing units (CPUs). Titan was the first such hybrid to perform over 10 petaFLOPS. The upgrade began in October 2011, stability testing commenced in October 2012, and the system became available to researchers in early 2013. The initial cost of the upgrade was US$60 million, funded primarily by the United States Department of Energy. In 2019, Titan was eclipsed at Oak Ridge by Summit, which was built by IBM and featured fewer nodes with much greater GPU capability per node as well as local per-node non-volatile caching of file data from the system's parallel file system. Titan employed AMD Opteron CPUs in conjunction with Nvidia Tesla GPUs to improve energy efficiency while providing an order of magnitu ...

Cray Inc

Cray Inc., a subsidiary of Hewlett Packard Enterprise, is an American supercomputer manufacturer headquartered in Seattle, Washington. It also manufactures systems for data storage and analytics. Several Cray supercomputer systems are listed in the TOP500, which ranks the most powerful supercomputers in the world. The company was founded in 1972 by computer designer Seymour Cray as Cray Research, Inc., and it continues to manufacture parts in Chippewa Falls, Wisconsin, where Cray was born and raised. Cray Research was acquired by Silicon Graphics in 1996; the modern company was formed in 2000, when the business was purchased by Tera Computer Company, which adopted the name Cray Inc. In 2019, the company was acquired by Hewlett Packard Enterprise for $1.3 billion. History. Background: 1950–1972. In 1950, Seymour Cray began working in the computing field when he joined Engineering Research Associates (ERA) in Saint Paul, Minnesota. There, he helped to create the ERA 1103. ERA eventually became ...

Graphics Processing Unit

A graphics processing unit (GPU) is a specialized electronic circuit designed for digital image processing and to accelerate computer graphics, being present either as a discrete video card or embedded on motherboards, mobile phones, personal computers, workstations, and game consoles. GPUs were later found to be useful for non-graphic calculations involving embarrassingly parallel problems due to their parallel structure. The ability of GPUs to rapidly perform vast numbers of calculations has led to their adoption in diverse fields including artificial intelligence (AI), where they excel at handling data-intensive and computationally demanding tasks. Other non-graphical uses include the training of neural networks and cryptocurrency mining. History. 1970s: Arcade system boards have used specialized graphics circuits since the 1970s. In early video game hardware, RAM for frame buffers was expensive, so video chips composited data together as the display was being scann ...

Climate Model

Numerical climate models (or climate system models) are mathematical models that can simulate the interactions of important drivers of climate. These drivers are the atmosphere, oceans, land surface and ice. Scientists use climate models to study the dynamics of the climate system and to make projections of future climate and of climate change. Climate models can also be qualitative (i.e. not numerical) models and contain narratives, largely descriptive, of possible futures. Climate models take account of incoming energy from the Sun as well as outgoing energy from Earth. An imbalance results in a change in temperature. The incoming energy from the Sun is in the form of short wave electromagnetic radiation, chiefly visible and short-wave (near) infrared. The outgoing energy is in the form of long wave (far) infrared electromagnetic energy. These processes are part of the greenhouse effect. Climate models vary in complexity. For example, a simple radiant heat transfer model ...
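
The energy balance described above (incoming short-wave energy against outgoing long-wave energy, with any imbalance changing the temperature) can be illustrated with a zero-dimensional sketch. This is a minimal toy model, not a method taken from the excerpt: the solar irradiance, albedo, emissivity, heat capacity, and time step below are all assumed illustrative values, and the function names are hypothetical.

```python
# Zero-dimensional energy balance sketch. All numeric values are assumptions
# chosen for illustration; none of them come from the excerpt above.
SIGMA = 5.670374419e-8    # Stefan-Boltzmann constant, W m^-2 K^-4
SOLAR_IRRADIANCE = 1361.0  # assumed incoming solar irradiance, W m^-2
ALBEDO = 0.3               # assumed fraction of sunlight reflected to space


def absorbed_shortwave(irradiance=SOLAR_IRRADIANCE, albedo=ALBEDO):
    """Incoming short-wave energy absorbed per square metre, sphere-averaged."""
    return (1.0 - albedo) * irradiance / 4.0


def emitted_longwave(temperature_k, emissivity=1.0):
    """Outgoing long-wave energy per square metre at the given temperature."""
    return emissivity * SIGMA * temperature_k ** 4


def equilibrium_temperature(irradiance=SOLAR_IRRADIANCE, albedo=ALBEDO,
                            emissivity=1.0):
    """Temperature at which absorbed and emitted energy balance exactly."""
    return (absorbed_shortwave(irradiance, albedo) / (emissivity * SIGMA)) ** 0.25


def step_temperature(temperature_k, heat_capacity=4.0e8, dt=30 * 86400.0):
    """One explicit Euler step: an energy imbalance changes the temperature.

    heat_capacity (J m^-2 K^-1) and dt (seconds) are assumed values.
    """
    imbalance = absorbed_shortwave() - emitted_longwave(temperature_k)
    return temperature_k + dt * imbalance / heat_capacity


if __name__ == "__main__":
    temperature = 288.0
    for _ in range(2000):
        temperature = step_temperature(temperature)
    print(f"relaxed temperature: {temperature:.1f} K")
    print(f"analytic equilibrium: {equilibrium_temperature():.1f} K")
```

With an emissivity of 1 the model has no greenhouse effect, so it settles at roughly 255 K, well below observed surface temperatures; lowering the assumed emissivity raises the equilibrium temperature.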

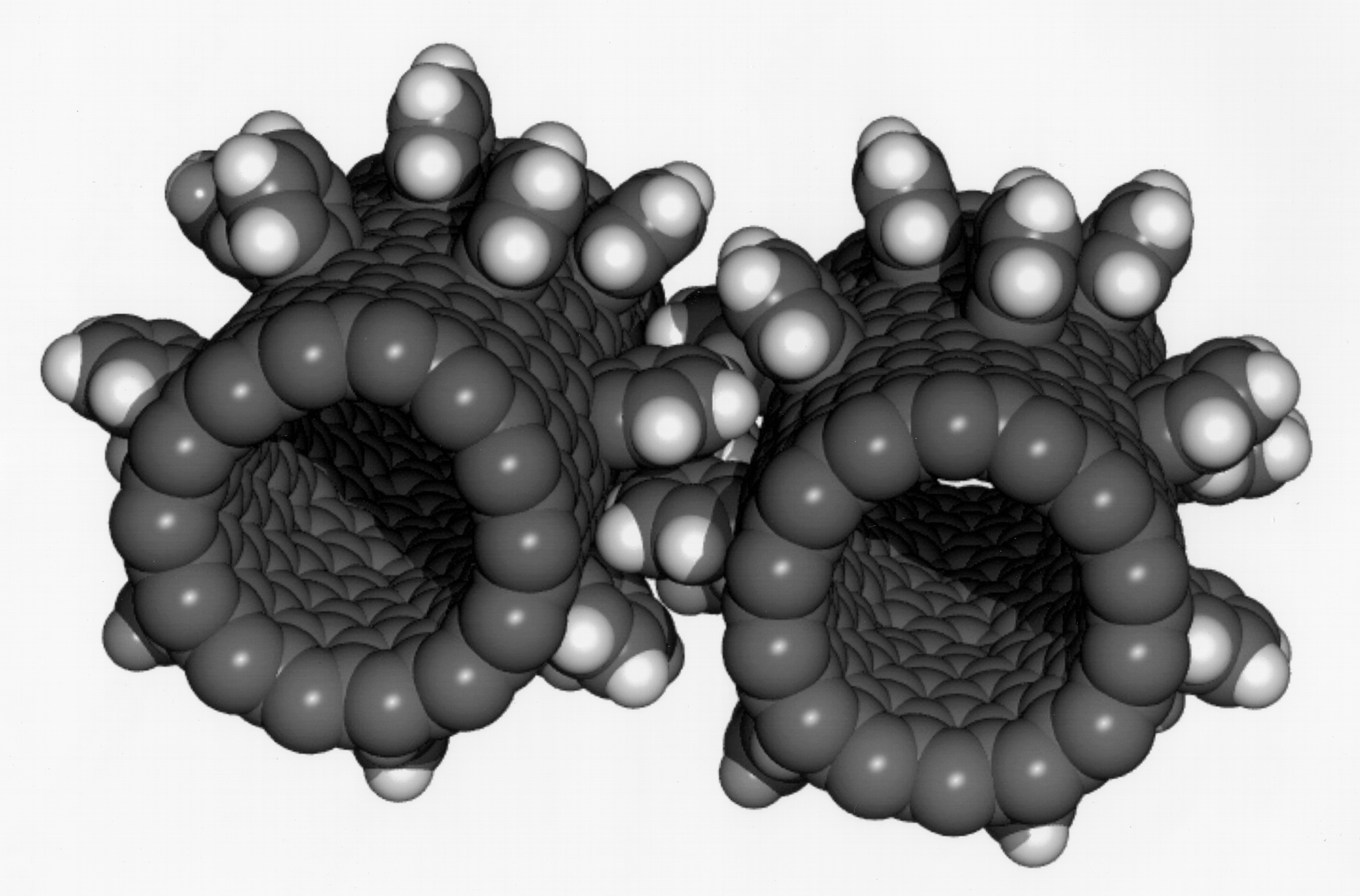

Nanoscopic Scale

Nanotechnology is the manipulation of matter with at least one dimension sized from 1 to 100 nanometers (nm). At this scale, commonly known as the nanoscale, surface area and quantum mechanical effects become important in describing properties of matter. This definition of nanotechnology includes all types of research and technologies that deal with these special properties. It is common to see the plural form "nanotechnologies" as well as "nanoscale technologies" to refer to research and applications whose common trait is scale. An earlier understanding of nanotechnology referred to the particular technological goal of precisely manipulating atoms and molecules for fabricating macroscale products, now referred to as molecular nanotechnology. Nanotechnology defined by scale includes fields of science such as surface science, organic chemistry, molecular biology, semiconductor physics, energy storage, engineering, microfabrication, and molecular engineering. The associated rese ...

Computer Program

A computer program is a sequence or set of instructions in a programming language for a computer to execute. It is one component of software, which also includes documentation and other intangible components. A computer program in its human-readable form is called source code. Source code needs another computer program to execute because computers can only execute their native machine instructions. Therefore, source code may be translated to machine instructions using a compiler written for the language. (Assembly language programs are translated using an assembler.) The resulting file is called an executable. Alternatively, source code may execute within an interpreter written for the language. If the executable is requested for execution, then the operating system loads it into memory and ...
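
The distinction above between human-readable source code and a translated form that is then executed can be sketched with Python's built-in `compile()` and `exec()`. Note the assumption in this illustration: Python translates source to bytecode for its own virtual machine rather than to native machine instructions, so this is an analogy for the compile-then-run step, not an example of producing an executable file.

```python
import types

# Human-readable source code, held as ordinary text.
source = "def add(a, b):\n    return a + b\n"

# Translation step: compile the text into a code object (Python bytecode).
# "<example>" is just a placeholder file name used in error messages.
code_object = compile(source, "<example>", "exec")

# Execution step: the interpreter runs the code object, which defines `add`
# inside the namespace dictionary supplied here.
namespace = {}
exec(code_object, namespace)

result = namespace["add"](2, 3)
is_code = isinstance(code_object, types.CodeType)
```

The source string cannot be called directly; only after translation and execution does `add` exist as a callable object.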

Tianhe-2

Tianhe-2 or TH-2 (i.e. 'Milky Way 2') is a 33.86-petaflop supercomputer located in the National Supercomputer Center in Guangzhou, China. It was developed by a team of 1,300 scientists and engineers. It was the world's fastest supercomputer according to the TOP500 lists for June 2013, November 2013, June 2014, November 2014, June 2015, and November 2015. The record was surpassed in June 2016 by the Sunway TaihuLight. In 2015, plans by Sun Yat-sen University in collaboration with Guangzhou district and city administration to double its computing capacities were stopped by a U.S. government rejection of Intel's application for an export license for the CPUs and coprocessor boards. In response to the U.S. sanctions, China introduced the Sunway TaihuLight supercomputer in 2016, which substantially outperforms the Tianhe-2 (and also affected the update of Tianhe-2 to Tianhe-2A, replacing U.S. tech), and in November 2022 ranks eighth in the TOP500 list while using completely do ...

LINPACK Benchmark

The LINPACK benchmarks are a measure of a system's floating-point computing power. Introduced by Jack Dongarra, they measure how fast a computer solves a dense n × n system of linear equations Ax = b, which is a common task in engineering. The latest version of these benchmarks is used to build the TOP500 list, ranking the world's most powerful supercomputers. The aim is to approximate how fast a computer will perform when solving real problems. It is a simplification, since no single computational task can reflect the overall performance of a computer system. Nevertheless, the LINPACK benchmark performance can provide a good correction over the peak performance provided by the manufacturer. The peak performance is the maximal theoretical performance a computer can achieve, calculated as the machine's frequency, in cycles per second, times the number of operations per cycle it can perform. The actual performance will always be lower than the ...
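
Both quantities described above, the theoretical peak and a measured rate from solving a dense system Ax = b, can be sketched in a few lines. This is a minimal pure-Python illustration, not the actual LINPACK code: the function names are hypothetical, the `cores` multiplier is an added assumption for multi-core machines, and the operation count 2n³/3 + 2n² is the figure conventionally used for this kind of solve.

```python
import random
import time


def theoretical_peak_flops(frequency_hz, flops_per_cycle, cores=1):
    """Peak rate: cycles per second times operations per cycle (times cores)."""
    return frequency_hz * flops_per_cycle * cores


def solve_dense(a, b):
    """Solve Ax = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    # Work on an augmented copy [A | b] so the caller's data is untouched.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Pick the row with the largest pivot to limit rounding error.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if m[pivot][col] == 0:
            raise ValueError("matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(col + 1, n):
            factor = m[row][col] / m[col][col]
            for k in range(col, n + 1):
                m[row][k] -= factor * m[col][k]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for row in range(n - 1, -1, -1):
        known = sum(m[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (m[row][n] - known) / m[row][row]
    return x


def linpack_flop_count(n):
    """Conventional operation count for an n x n dense solve."""
    return (2.0 / 3.0) * n ** 3 + 2.0 * n ** 2


def measure_flops(n=60, seed=0):
    """Time one random n x n solve; return (operations per second, solution)."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    start = time.perf_counter()
    x = solve_dense(a, b)
    elapsed = time.perf_counter() - start
    return linpack_flop_count(n) / elapsed, x


if __name__ == "__main__":
    rate, _ = measure_flops()
    print(f"measured: {rate:.3e} FLOPS (interpreted Python, far below peak)")
```

Comparing the measured rate with `theoretical_peak_flops` for the same machine shows the gap between achieved and peak performance that the benchmark is meant to expose.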

Energy Efficiency (physics)

Energy conversion efficiency (η) is the ratio between the useful output of an energy conversion machine and the input, in energy terms. The input, as well as the useful output, may be chemical, electric power, mechanical work, light (radiation), or heat. The resulting value, η (eta), ranges between 0 and 1. Overview. Energy conversion efficiency depends on the usefulness of the output. All or part of the heat produced from burning a fuel may become rejected waste heat if, for example, work is the desired output from a thermodynamic cycle. An energy converter performs an energy transformation; a light bulb, for example, falls into the category of energy converters. $\eta = \frac{P_\mathrm{out}}{P_\mathrm{in}}$, where $P_\mathrm{out}$ is the useful output and $P_\mathrm{in}$ is the input. Even though the definition includes the notion of usefulness, efficiency is considered a technical or physical term. Goal or mission oriented terms include effectiveness and efficacy. Generally, energy conversion efficiency is a dimensionless number between 0 and 1.0, or 0% to 100%. ...
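
The ratio defined above is simple enough to sketch as a function. The function name and the lamp figures in the example are hypothetical, chosen only to show the arithmetic.

```python
def conversion_efficiency(useful_output, energy_input):
    """Return useful output divided by input, a dimensionless value in [0, 1].

    Both arguments must use the same unit (for example watts or joules).
    """
    if energy_input <= 0:
        raise ValueError("energy_input must be positive")
    if not 0 <= useful_output <= energy_input:
        raise ValueError("useful_output must lie between 0 and energy_input")
    return useful_output / energy_input


# Hypothetical lamp: 10 W of electrical input, 2 W emitted as visible light.
lamp_efficiency = conversion_efficiency(2.0, 10.0)
lamp_percent = lamp_efficiency * 100.0
```

What counts as "useful" is a choice made by the caller: if the same lamp were used as a heater, the heat would be the useful output and the computed efficiency would differ.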

Parallel File System

A clustered file system (CFS) is a file system which is shared by being simultaneously mounted on multiple servers. There are several approaches to clustering, most of which do not employ a clustered file system (only direct attached storage for each node). Clustered file systems can provide features like location-independent addressing and redundancy which improve reliability or reduce the complexity of the other parts of the cluster. Parallel file systems are a type of clustered file system that spread data across multiple storage nodes, usually for redundancy or performance. Shared-disk file system. A shared-disk file system uses a storage area network (SAN), a computer network which provides access to consolidated, block-level data storage, to allow multiple computers to gain direct disk access at the ...
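
The idea of spreading data across multiple storage nodes can be sketched as round-robin striping. This is a toy in-memory illustration under stated assumptions: the function names, the fixed stripe size, and the representation of each storage node as a Python list are all hypothetical, and no real parallel file system's layout or API is being reproduced.

```python
def stripe(data, node_count, stripe_size):
    """Split data into fixed-size chunks and deal them to nodes round-robin.

    Returns one list of chunks per node; each list stands in for a node.
    """
    if node_count < 1 or stripe_size < 1:
        raise ValueError("node_count and stripe_size must be at least 1")
    nodes = [[] for _ in range(node_count)]
    for index, offset in enumerate(range(0, len(data), stripe_size)):
        nodes[index % node_count].append(data[offset:offset + stripe_size])
    return nodes


def reassemble(nodes):
    """Rebuild the original bytes by reading one chunk per node per round."""
    chunks = []
    rounds = max((len(node) for node in nodes), default=0)
    for round_index in range(rounds):
        for node in nodes:
            if round_index < len(node):
                chunks.append(node[round_index])
    return b"".join(chunks)


payload = bytes(range(10))
layout = stripe(payload, node_count=3, stripe_size=2)
restored = reassemble(layout)
```

Because consecutive chunks land on different nodes, a large sequential read can be served by several nodes at once, which is the performance motivation mentioned above. This sketch provides no redundancy; losing one node loses its chunks.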

Cache (computing)

In computing, a cache is a hardware or software component that stores data so that future requests for that data can be served faster; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere. A cache hit occurs when the requested data can be found in a cache, while a cache miss occurs when it cannot. Cache hits are served by reading data from the cache, which is faster than recomputing a result or reading from a slower data store; thus, the more requests that can be served from the cache, the faster the system performs. To be cost-effective, caches must be relatively small. Nevertheless, caches are effective in many areas of computing because typical computer applications access data with a high degree of locality of reference. Such access patterns exhibit temporal locality, where data is requested that has been recently requested, and spatial locality, where data is requested that is stored near dat ...
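
Cache hits and misses, and the effect of a small cache size, can be observed with Python's standard `functools.lru_cache`. This is a minimal sketch: the function `slow_square` and the request sequence are hypothetical, and least-recently-used eviction is only one possible replacement policy, chosen here because the standard library provides it.

```python
from functools import lru_cache


@lru_cache(maxsize=2)  # a deliberately tiny cache holding two results
def slow_square(n):
    """Stand-in for an expensive computation whose results are worth caching."""
    return n * n


# Repeated requests for 3 show temporal locality; 5 forces an eviction.
requests = [3, 3, 4, 3, 5, 4]
results = [slow_square(value) for value in requests]

# cache_info() reports how many requests were hits and how many were misses.
info = slow_square.cache_info()
hit_ratio = info.hits / (info.hits + info.misses)
```

The two repeated requests for 3 are hits because it was requested recently. The final request for 4 is a miss even though 4 was computed earlier, because the two-entry cache had already evicted it to make room for 5.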

Non-volatile Memory

Non-volatile memory (NVM) or non-volatile storage is a type of computer memory that can retain stored information even after power is removed. In contrast, volatile memory needs constant power in order to retain data. Non-volatile memory typically refers to storage in memory chips, which store data in floating-gate memory cells consisting of floating-gate MOSFETs (metal–oxide–semiconductor field-effect transistors), including flash memory storage such as NAND flash and solid-state drives (SSD). Other examples of non-volatile memory include read-only memory (ROM), EPROM (erasable programmable ROM) and EEPROM (electrically erasable programmable ROM), ferroelectric RAM, most types of computer data storage devices (e.g. disk storage, hard disk drives, optical discs, floppy disks, and magnetic tape), and early computer storage methods such as punched tape and cards. Overview. Non-volatile memory is typically used for the task of secondary storage or long-term per ...

Summit (supercomputer)

Summit or OLCF-4 was a supercomputer developed by IBM for use at Oak Ridge Leadership Computing Facility (OLCF), a facility at the Oak Ridge National Laboratory, United States of America. It held the number 1 position on the TOP500 list from June 2018 to June 2020. As of June 2024, its LINPACK benchmark was clocked at 148.6 petaFLOPS. Summit was decommissioned on November 15, 2024. As of November 2019, the supercomputer had ranked as the 5th most energy efficient in the world with a measured power efficiency of 14.668 gigaFLOPS/watt. Summit was the first supercomputer to reach exaflop (a quintillion operations per second) speed, on a non-standard metric, achieving 1.88 exaflops during a genomic analysis, and was expected to reach 3.3 exaflops using mixed-precision calculations. History. The United States Department of Energy awarded a $325 million contract in November 2014 to IBM, Nvidia and Mellanox. The effort resulted in construction of Summit ...