
Strong Duality

Strong duality is a condition in mathematical optimization in which the primal optimal objective and the dual optimal objective are equal. By contrast, weak duality only guarantees that one optimal value bounds the other (for a primal minimization problem, the primal optimal value is greater than or equal to the dual optimal value), so that the duality gap is greater than or equal to zero.

Characterizations: Strong duality holds if and only if the duality gap is equal to 0.

Sufficient conditions: Each of the following is sufficient for strong duality:

* F = F^{**}, where F is the perturbation function relating the primal and dual problems and F^{**} is the biconjugate of F (this follows by construction of the duality gap)
* F is convex and lower semi-continuous (equivalent to the first point by the Fenchel–Moreau theorem)
* the primal problem is a linear optimization problem
* Slater's condition holds for a convex optimization problem

See also: Convex optimization
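
The linear-programming case can be checked by hand on a toy problem. The sketch below is only an illustration: the LP data and the helper name `optimize_over_polygon` are assumptions, not part of the summary above. It solves a two-variable primal (a maximization) and its dual (a minimization) by enumerating vertices with exact fractions, then compares the two optimal values. Because the primal here is a maximization, the gap is computed as dual minus primal.

```python
from fractions import Fraction
from itertools import combinations


def optimize_over_polygon(G, h, obj, maximize):
    """Optimize obj . z over {z in R^2 : G z <= h} by enumerating vertices.

    Assumes an optimum exists and is attained at a vertex of the region.
    """
    rows = list(zip(G, h))
    best = None
    for (g1, h1), (g2, h2) in combinations(rows, 2):
        det = g1[0] * g2[1] - g1[1] * g2[0]
        if det == 0:
            continue  # parallel boundary lines never meet in a vertex
        # Cramer's rule for the intersection of the two boundary lines.
        z = (Fraction(h1 * g2[1] - g1[1] * h2, det),
             Fraction(g1[0] * h2 - h1 * g2[0], det))
        if all(g[0] * z[0] + g[1] * z[1] <= bound for g, bound in rows):
            value = obj[0] * z[0] + obj[1] * z[1]
            if best is None or (value > best if maximize else value < best):
                best = value
    return best


# Primal: maximize x1 + x2  s.t.  2*x1 + x2 <= 4,  x1 + 2*x2 <= 4,  x >= 0.
primal_value = optimize_over_polygon(
    G=[[2, 1], [1, 2], [-1, 0], [0, -1]], h=[4, 4, 0, 0],
    obj=[1, 1], maximize=True)

# Dual: minimize 4*y1 + 4*y2  s.t.  2*y1 + y2 >= 1,  y1 + 2*y2 >= 1,  y >= 0,
# written with "<=" constraints by negating both sides.
dual_value = optimize_over_polygon(
    G=[[-2, -1], [-1, -2], [-1, 0], [0, -1]], h=[-1, -1, 0, 0],
    obj=[4, 4], maximize=False)

duality_gap = dual_value - primal_value
print(primal_value, dual_value, duality_gap)  # 8/3 8/3 0
```

Vertex enumeration is used only because it is transparent for two variables; it is not a practical LP algorithm.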

Optimization

Mathematical optimization (alternatively spelled *optimisation*) or mathematical programming is the selection of a best element, with regard to some criterion, from some set of available alternatives. It is generally divided into two subfields: discrete optimization and continuous optimization. Optimization problems arise in all quantitative disciplines, from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries. In the more general approach, an optimization problem consists of maximizing or minimizing a real function by systematically choosing input values from within an allowed set and computing the value of the function. The generalization of optimization theory and techniques to other formulations constitutes a large area of applied mathematics. More generally, optimization includes finding "best available" values of some objective function given a defin ...

Duality (optimization)

In mathematical optimization theory, duality or the duality principle is the principle that optimization problems may be viewed from either of two perspectives, the primal problem or the dual problem. If the primal is a minimization problem then the dual is a maximization problem (and vice versa). The objective value of any feasible solution to the primal (minimization) problem is at least as large as the objective value of any feasible solution to the dual (maximization) problem. Therefore, the optimal value of the primal is an upper bound on the optimal value of the dual, and the optimal value of the dual is a lower bound on the optimal value of the primal. This fact is called weak duality. In general, the optimal values of the primal and dual problems need not be equal. Their difference is called the duality gap. For convex optimization problems, the duality gap is zero under a constraint qualification condition. This fact is called strong duality.

Dual problem: Usually the term "dual problem" refers to the *Lagrangian dual problem* but other ...

Weak Duality

In applied mathematics, weak duality is a concept in optimization which states that the duality gap is always greater than or equal to 0. That means the solution to the dual (minimization) problem is *always* greater than or equal to the solution to an associated primal (maximization) problem. This is opposed to strong duality, which only holds in certain cases.

Uses: Many primal-dual approximation algorithms are based on the principle of weak duality.

Weak duality theorem: The *primal* problem is: maximize c^\top x subject to A x \leq b, x \geq 0. The *dual* problem is: minimize b^\top y subject to A^\top y \geq c, y \geq 0. The weak duality theorem states that c^\top x \leq b^\top y for every primal-feasible x and dual-feasible y. Namely, if (x_1, x_2, \ldots, x_n) is a feasible solution for the primal maximization linear program and (y_1, y_2, \ldots, y_m) is a feasible solution for the dual minimization linear program, then the weak duality theorem can be stated as \sum_{j=1}^n c_j x_j \leq \sum_{i=1}^m b_i y_i, where c_j and b_i are the coefficients of the respective objective functions.

Proof: c^\top x = x^\top c \leq x^\top A^\top y \leq b^\top y, where the first inequality uses A^\top y \geq c together with x \geq 0, and the second uses A x \leq b together with y \geq 0.

Generalizations: More generally, i ...
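
As a rough numerical illustration of the weak duality inequality for linear programs, the sketch below samples feasible points of a small primal/dual pair on a grid and checks that the primal objective never exceeds the dual objective. The data `A`, `b`, `c` and the grid resolution are arbitrary choices made for this example, not values from the text.

```python
from fractions import Fraction
from itertools import product

# Illustrative data (an assumption): maximize c.x s.t. A x <= b, x >= 0.
A = [[2, 1], [1, 2]]
b = [4, 4]
c = [1, 1]


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def primal_feasible(x):
    # A x <= b and x >= 0
    return all(xj >= 0 for xj in x) and all(
        dot(row, x) <= bi for row, bi in zip(A, b))


def dual_feasible(y):
    # A^T y >= c and y >= 0
    columns = list(zip(*A))
    return all(yi >= 0 for yi in y) and all(
        dot(col, y) >= cj for col, cj in zip(columns, c))


grid = [Fraction(k, 4) for k in range(13)]  # 0, 1/4, ..., 3
primal_points = [x for x in product(grid, repeat=2) if primal_feasible(x)]
dual_points = [y for y in product(grid, repeat=2) if dual_feasible(y)]

# Weak duality predicts b.y - c.x >= 0 for every feasible pair (x, y).
smallest_gap = min(dot(b, y) - dot(c, x)
                   for x in primal_points for y in dual_points)
print(smallest_gap >= 0)
```

A grid search only samples the feasible sets, so this is a sanity check of the inequality rather than a proof.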

Duality Gap

In optimization problems in applied mathematics, the duality gap is the difference between the primal and dual solutions. If d^* is the optimal dual value and p^* is the optimal primal value, then the duality gap is equal to p^* - d^*. This value is always greater than or equal to 0 (for minimization problems). The duality gap is zero if and only if strong duality holds. Otherwise the gap is strictly positive and only weak duality holds.

In general, let \left(X,X^*\right) and \left(Y,Y^*\right) be two dual pairs of separated locally convex spaces. Given a function f: X \to \mathbb{R} \cup \{+\infty\}, we can define the primal problem by \inf_{x \in X} f(x). If there are constraint conditions, these can be built into the function f by letting f = f + I_{\text{constraints}}, where I is the indicator function. Then let F: X \times Y \to \mathbb{R} \cup \{+\infty\} be a perturbation function such that F(x,0) = f(x). The *duality gap* is the difference given by \inf_{x \in X} F(x,0) - \sup_{y^* \in Y^*} \left[-F^*(0,y^*)\right], where F^* is the convex c ...
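
A strictly positive gap is easiest to see on a non-convex problem. The worked example below is an illustration chosen for this purpose (the problem itself is an assumption, not from the text): minimize x over x \in \{0,1\} subject to x \ge 1/2, using the Lagrangian dual with multiplier \lambda \ge 0.

```latex
\begin{aligned}
p^* &= \min_{x \in \{0,1\}} \bigl\{\, x : x \ge \tfrac12 \,\bigr\} = 1, \\
g(\lambda) &= \min_{x \in \{0,1\}} \bigl[\, x + \lambda\bigl(\tfrac12 - x\bigr) \bigr]
            = \tfrac{\lambda}{2} + \min(0,\; 1 - \lambda), \\
d^* &= \max_{\lambda \ge 0} g(\lambda) = g(1) = \tfrac12, \\
p^* - d^* &= \tfrac12 > 0 .
\end{aligned}
```

Relaxing the feasible set to the interval [0,1] makes the problem convex, and the gap then closes, since the relaxed primal optimum is 1/2.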

Perturbation Function

In mathematical optimization, the perturbation function is any function which relates to primal and dual problems. The name comes from the fact that any such function defines a perturbation of the initial problem. In many cases this takes the form of shifting the constraints. In some texts the value function is called the perturbation function, and the perturbation function is called the bifunction.

Definition: Let \left(X,X^*\right) and \left(Y,Y^*\right) be two dual pairs of separated locally convex spaces. Given a function f: X \to \mathbb{R} \cup \{+\infty\}, we can define the primal problem by \inf_{x \in X} f(x). If there are constraint conditions, these can be built into the function f by letting f \leftarrow f + I_{\mathrm{constraints}}, where I is the characteristic function. Then F: X \times Y \to \mathbb{R} \cup \{+\infty\} is a *perturbation function* if and only if F(x,0) = f(x).

Use in duality: The duality gap is the difference of the right and left hand side of the inequality \sup_{y^* \in Y^*} -F^*(0,y^*) ...
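
To make "shifting the constraints" concrete, here is a sketch for a single inequality constraint, with Y = \mathbb{R}. The symbols f_0 (objective) and g (constraint function) are introduced only for this illustration. Perturbing the constraint g(x) \le 0 to g(x) \le y gives a perturbation function whose conjugate recovers the usual Lagrangian dual function.

```latex
\begin{aligned}
F(x, y) &=
  \begin{cases}
    f_0(x) & \text{if } g(x) \le y, \\
    +\infty & \text{otherwise},
  \end{cases}
  \qquad F(x, 0) = f_0(x) + I_{\{g \le 0\}}(x), \\[4pt]
-F^*(0, y^*) &= \inf_{x,\; y \ge g(x)} \bigl[\, f_0(x) - y^* y \,\bigr]
  =
  \begin{cases}
    \inf_{x} \bigl[\, f_0(x) - y^* g(x) \,\bigr] & \text{if } y^* \le 0, \\
    -\infty & \text{if } y^* > 0 .
  \end{cases}
\end{aligned}
```

Writing \lambda = -y^* \ge 0, the first case is \inf_x [ f_0(x) + \lambda g(x) ], which is the Lagrangian dual function.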

Convex Conjugate

In mathematics and mathematical optimization, the convex conjugate of a function is a generalization of the Legendre transformation which applies to non-convex functions. It is also known as the Legendre–Fenchel transformation, Fenchel transformation, or Fenchel conjugate (after Adrien-Marie Legendre and Werner Fenchel). It allows in particular for a far-reaching generalization of Lagrangian duality.

Definition: Let X be a real topological vector space and let X^{*} be the dual space to X. Denote by \langle \cdot , \cdot \rangle : X^{*} \times X \to \mathbb{R} the canonical dual pairing, which is defined by \left( x^*, x \right) \mapsto x^* (x). For a function f : X \to \mathbb{R} \cup \{-\infty, +\infty\} taking values on the extended real number line, its convex conjugate is the function f^{*} : X^{*} \to \mathbb{R} \cup \{-\infty, +\infty\} whose value at x^* \in X^{*} is defined to be the supremum f^{*}\left( x^{*} \right) := \sup \left\{ \left\langle x^*, x \right\rangle - f(x) : x \in X \right\}, or, equivalently, in terms of the infimum, f^{*}\left( x^{*} \right) := - \inf \left\{ f(x) - \left\langle x^*, x \right\rangle : x \in X \right\}. This definition can b ...
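
Two standard one-dimensional cases (X = \mathbb{R}, with the pairing given by ordinary multiplication) may help fix the definition. They are included here as illustrations rather than taken from the summary above.

```latex
\begin{aligned}
f(x) = \tfrac12 x^2
  &\;\Longrightarrow\;
  f^*(x^*) = \sup_{x \in \mathbb{R}} \bigl( x^* x - \tfrac12 x^2 \bigr)
           = \tfrac12 (x^*)^2
  \quad (\text{supremum attained at } x = x^*), \\[4pt]
f(x) = |x|
  &\;\Longrightarrow\;
  f^*(x^*) = \sup_{x \in \mathbb{R}} \bigl( x^* x - |x| \bigr)
           =
  \begin{cases}
    0 & \text{if } |x^*| \le 1, \\
    +\infty & \text{if } |x^*| > 1 .
  \end{cases}
\end{aligned}
```

The first function is its own conjugate; the second shows that a finite function can have a conjugate that takes the value +\infty.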

Semi-continuity

In mathematical analysis, semicontinuity (or semi-continuity) is a property of extended real-valued functions that is weaker than continuity. An extended real-valued function f is upper (respectively, lower) semicontinuous at a point x_0 if, roughly speaking, the function values for arguments near x_0 are not much higher (respectively, lower) than f\left(x_0\right). A function is continuous if and only if it is both upper and lower semicontinuous. If we take a continuous function and increase its value at a certain point x_0 to f\left(x_0\right) + c for some c>0, then the result is upper semicontinuous; if we decrease its value to f\left(x_0\right) - c then the result is lower semicontinuous. The notion of upper and lower semicontinuous functions was first introduced and studied by René Baire in his thesis in 1899.

Definitions: Assume throughout that X is a topological space and f : X \to \overline{\R} is a function with values in the extended real numbers \overline{\R} = \R \cup \{-\infty, \infty\} ...

Fenchel–Moreau Theorem

In convex analysis, the Fenchel–Moreau theorem (named after Werner Fenchel and Jean Jacques Moreau) or Fenchel biconjugation theorem (or just biconjugation theorem) is a theorem which gives necessary and sufficient conditions for a function to be equal to its biconjugate. This is in contrast to the general property that f^{**} \leq f holds for any function f. The theorem can be seen as a generalization of the bipolar theorem. It is used in duality theory to prove strong duality (via the perturbation function).

Statement: Let (X,\tau) be a Hausdorff locally convex space. For any extended real-valued function f: X \to \mathbb{R} \cup \{\pm\infty\}, it follows that f = f^{**} if and only if one of the following is true:

1. f is a proper, lower semi-continuous, and c ...
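
The inequality f^{**} \leq f, and its failure to be an equality for a non-convex function, can be seen numerically. The following is a minimal sketch that approximates both conjugations by maximizing over finite grids; the "double well" function, the grid ranges, and the helper names are assumptions made for this example.

```python
def conjugate(f, grid):
    """Return s -> max over the grid of (s*x - f(x)), a discretized f^*."""
    return lambda s: max(s * x - f(x) for x in grid)


def f(x):
    # Non-convex "double well": zero at x = -1 and x = 1, equal to 1 at x = 0.
    return min((x - 1.0) ** 2, (x + 1.0) ** 2)


xs = [k / 100 for k in range(-500, 501)]  # primal grid on [-5, 5]
ss = [k / 100 for k in range(-400, 401)]  # dual grid on [-4, 4]

f_star = conjugate(f, xs)
f_star_values = {s: f_star(s) for s in ss}  # cache f^* on the dual grid


def f_bistar(x):
    # Discretized biconjugate: max over s of (x*s - f^*(s)).
    return max(x * s - f_star_values[s] for s in ss)


# At x = 0 the biconjugate sits strictly below f; at x = 2 the two agree.
print(f(0.0), f_bistar(0.0))
print(f(2.0), f_bistar(2.0))
```

In this example the biconjugate behaves like the convex envelope of f: it flattens the bump between the two wells and matches f where f is already locally convex. The grids are coarse, so the values are approximations.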

Linear Optimization

Linear programming (LP), also called linear optimization, is a method to achieve the best outcome (such as maximum profit or lowest cost) in a mathematical model whose requirements are represented by linear relationships. Linear programming is a special case of mathematical programming (also known as mathematical optimization). More formally, linear programming is a technique for the optimization of a linear objective function, subject to linear equality and linear inequality constraints. Its feasible region is a convex polytope, which is a set defined as the intersection of finitely many half spaces, each of which is defined by a linear inequality. Its objective function is a real-valued affine (linear) function defined on this polyhedron. A linear programming algorithm finds a point in the polytope where ...

Slater's Condition

In mathematics, Slater's condition (or Slater condition) is a sufficient condition for strong duality to hold for a convex optimization problem, named after Morton L. Slater. Informally, Slater's condition states that the feasible region must have an interior point (see technical details below). Slater's condition is a specific example of a constraint qualification. In particular, if Slater's condition holds for the primal problem, then the duality gap is 0, and if the dual value is finite then it is attained.

Formulation: Consider the optimization problem \text{Minimize}\; f_0(x) \text{ subject to } f_i(x) \le 0, \; i = 1,\ldots,m, and Ax = b, where f_0,\ldots,f_m are convex functions. This is an instance of convex programming. In words, Sla ...
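
A one-variable worked example, chosen here purely for illustration, shows the conclusion in action: minimize f_0(x) = x^2 subject to f_1(x) = 1 - x \le 0. The point x = 2 is strictly feasible since f_1(2) = -1 < 0, so the problem has a point satisfying the constraint with strict inequality.

```latex
\begin{aligned}
p^* &= \min \{\, x^2 : x \ge 1 \,\} = 1 \quad (\text{at } x = 1), \\
L(x, \lambda) &= x^2 + \lambda (1 - x), \qquad \lambda \ge 0, \\
g(\lambda) &= \min_{x \in \mathbb{R}} L(x, \lambda)
            = \lambda - \tfrac{\lambda^2}{4}
            \quad (\text{at } x = \tfrac{\lambda}{2}), \\
d^* &= \max_{\lambda \ge 0} g(\lambda) = g(2) = 1 = p^* .
\end{aligned}
```

The duality gap is zero and the dual optimum is attained at \lambda = 2, matching both parts of the statement above.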

Convex Optimization

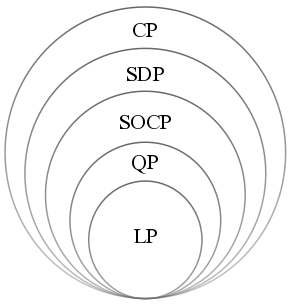

Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex functions over convex sets (or, equivalently, maximizing concave functions over convex sets). Many classes of convex optimization problems admit polynomial-time algorithms, whereas mathematical optimization is in general NP-hard. Convex optimization has applications in a wide range of disciplines, such as automatic control systems, estimation and signal processing, communications and networks, electronic circuit design, data analysis and modeling, finance, statistics (optimal experimental design), and structural optimization, where the approximation concept has proven to be efficient. With recent advancements in computing and optimization algorithms, convex programming is nearly as straightforward as linear programming.

Definition: A convex optimization problem is an optimization problem in which the objective function is a convex function and the feasibl ...
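
As a small illustration of minimizing a convex function over a convex set, the sketch below runs projected gradient descent on a quadratic over a box. The objective, the box, the step size, and the function names are all assumptions chosen for the example; projected gradient descent is just one of many applicable methods.

```python
def project_onto_box(point, lower, upper):
    """Euclidean projection onto the box [lower, upper]^n (a convex set)."""
    return tuple(min(max(p, lower), upper) for p in point)


def gradient(point):
    # Gradient of the convex objective (x - 3)^2 + (y + 1)^2.
    x, y = point
    return (2.0 * (x - 3.0), 2.0 * (y + 1.0))


def projected_gradient_descent(start, step=0.25, iterations=50):
    point = start
    for _ in range(iterations):
        g = gradient(point)
        moved = tuple(p - step * gi for p, gi in zip(point, g))
        # Pull the iterate back into the feasible box [0, 2] x [0, 2].
        point = project_onto_box(moved, 0.0, 2.0)
    return point


minimizer = projected_gradient_descent((0.0, 0.0))
print(minimizer)
```

The unconstrained minimizer (3, -1) lies outside the box, so the iterates settle on the boundary point of the box closest to it along each coordinate.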
