Solomonoff's Theory Of Inductive Inference

Solomonoff's theory of inductive inference is a mathematical theory of induction introduced by Ray Solomonoff, based on probability theory and theoretical computer science. In essence, Solomonoff's induction derives the posterior probability of any computable theory, given a sequence of observed data. This posterior probability is derived from Bayes' rule and some universal prior, that is, a prior that assigns a positive probability to any computable theory. Solomonoff's induction naturally formalizes Occam's razor. J. J. McCall. Induction: From Kolmogorov and Solomonoff to De Finetti and Back to Kolmogorov. Metroeconomica (Wiley), 2004. D. Stork. Foundations of Occam's razor and parsimony in learning. NIPS 2001 Workshop, 2001. A. N. Soklakov. Occam's razor as a formal basis for a physical theory. Foundations of Physics Letters (Springer), 2002. M. Hutter. On the exis ...
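The real universal prior sums over all programs of a universal prefix machine and is incomputable, so the following is only a toy sketch of the same Bayesian mechanism. It assumes a hypothetical hypothesis class of repeating bit patterns, treats a pattern of n bits as a "program" with an assumed code length of 2n bits (prior weight 2**-(2n)), and uses a 0/1 likelihood: a hypothesis either reproduces the observed bits or is discarded. The names `hypotheses`, `consistent`, `posterior` and `prob_next_is_one` are illustrative, not from any library.

```python
from fractions import Fraction
from itertools import product


def hypotheses(max_len):
    """Yield every repeating bit pattern of length 1..max_len (the toy 'programs')."""
    for n in range(1, max_len + 1):
        for bits in product("01", repeat=n):
            yield "".join(bits)


def consistent(pattern, data):
    """True if repeating `pattern` forever reproduces the observed bits `data`."""
    return all(bit == pattern[i % len(pattern)] for i, bit in enumerate(data))


def posterior(data, max_len=4):
    """Bayes' rule: assumed prior 2**-(2*len) times a 0/1 likelihood, renormalised."""
    weights = {
        h: Fraction(1, 2 ** (2 * len(h)))
        for h in hypotheses(max_len)
        if consistent(h, data)
    }
    total = sum(weights.values())
    return {h: w / total for h, w in weights.items()}


def prob_next_is_one(data, max_len=4):
    """Mix the next-bit predictions of all surviving hypotheses by posterior weight."""
    post = posterior(data, max_len)
    return sum(p for h, p in post.items() if h[len(data) % len(h)] == "1")


print(posterior("010101"))         # {'01': Fraction(16, 17), '0101': Fraction(1, 17)}
print(prob_next_is_one("010101"))  # 0
```

The shorter pattern "01" ends up with most of the posterior mass even though "0101" explains the data equally well, which is the Occam's-razor effect of a length-based prior.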

Jargon

Jargon is the specialized terminology associated with a particular field or area of activity. Jargon is normally employed in a particular communicative context and may not be well understood outside that context. The context is usually a particular occupation (that is, a certain trade, profession, vernacular or academic field), but any ingroup can have jargon. The main trait that distinguishes jargon from the rest of a language is special vocabulary, including some words specific to it and often different senses or meanings of words that outgroups would tend to take in another sense, so that they misunderstand the communication attempt. Jargon is sometimes understood as a form of technical slang and then distinguished from the official terminology used in a particular field of activity. The terms "jargon", "slang", and "argot" are not consistently differentiated in the literature; different authors interpret the ...

Countable Set

In mathematics, a set is countable if either it is finite or it can be put in one-to-one correspondence with the set of natural numbers. Equivalently, a set is countable if there exists an injective function from it into the natural numbers; this means that each element in the set may be associated with a unique natural number, or that the elements of the set can be counted one at a time, although the counting may never finish if the set has infinitely many elements. In more technical terms, assuming the axiom of countable choice, a set is countable if its cardinality (its number of elements) is not greater than that of the natural numbers. A countable set that is not finite is said to be countably infinite. The concept is attributed to Georg Cantor, who proved the existence of uncountable sets, that is, sets that are not countable; an example is the set of the real numbers. A note on terminology: Although the terms "countable" and "countably infinite" as defined here are quite co ...
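As an illustration of the injective-function definition (this example is not in the text above), the set of pairs of natural numbers N x N is countable: the Cantor pairing function sends each pair (a, b) to a unique natural number, and it can be inverted. The sketch below is one standard way to compute it; `pair` and `unpair` are illustrative names.

```python
import math


def pair(a: int, b: int) -> int:
    """Cantor pairing function: a one-to-one map from N x N into N."""
    s = a + b
    return s * (s + 1) // 2 + b


def unpair(n: int) -> tuple[int, int]:
    """Inverse of pair(): recover the unique (a, b) with pair(a, b) == n."""
    w = (math.isqrt(8 * n + 1) - 1) // 2  # largest w with w*(w+1)/2 <= n
    b = n - w * (w + 1) // 2
    return w - b, b


# Counting N x N "one at a time": the first pairs in the order pair() assigns.
print([unpair(n) for n in range(6)])  # [(0, 0), (1, 0), (0, 1), (2, 0), (1, 1), (0, 2)]
```

The pairs are counted diagonal by diagonal, so every pair receives a number after finitely many steps even though the counting as a whole never finishes.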

Turing Machine

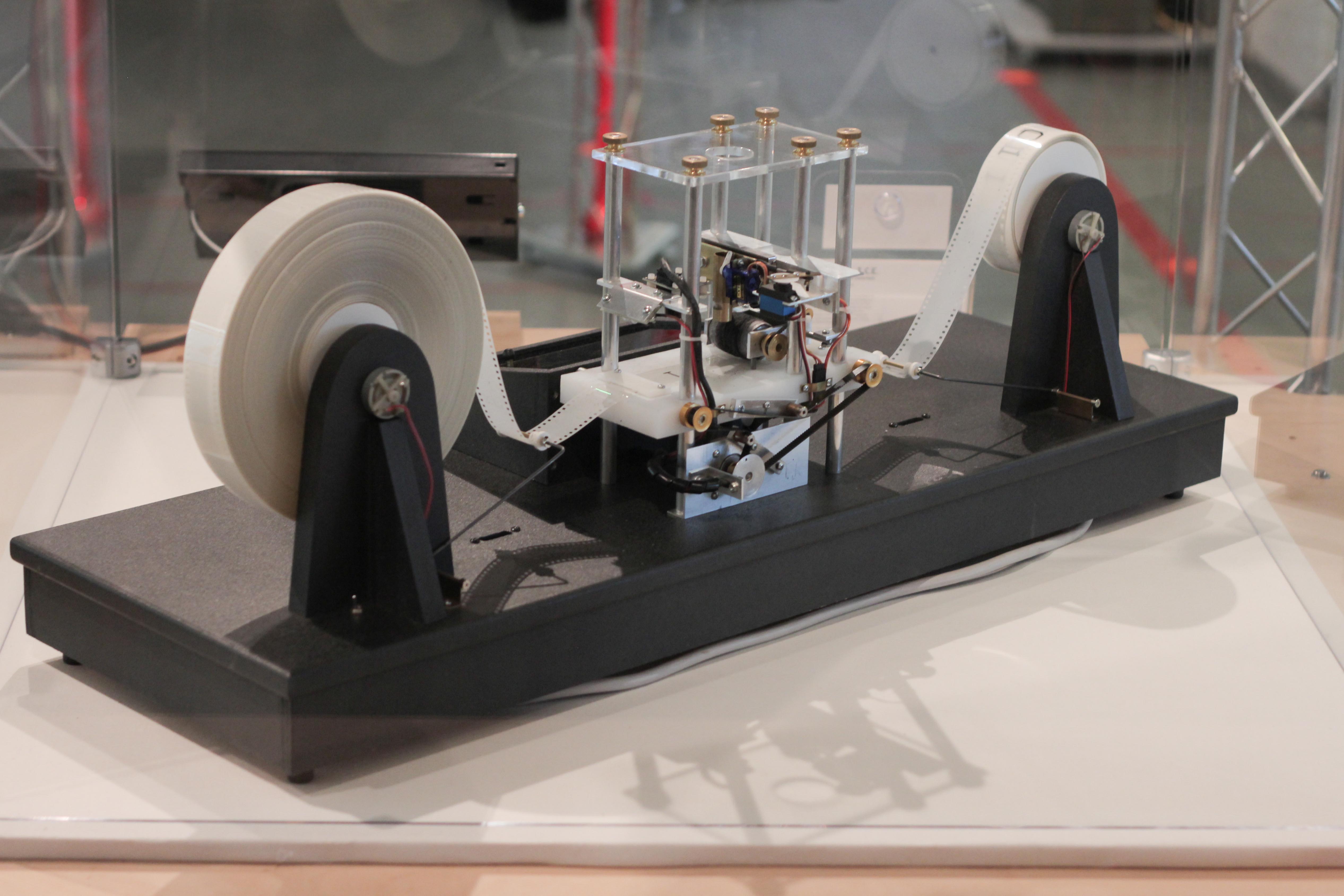

A Turing machine is a mathematical model of computation describing an abstract machine that manipulates symbols on a strip of tape according to a table of rules. Despite the model's simplicity, it is capable of implementing any computer algorithm. The machine operates on an infinite memory tape divided into discrete cells, each of which can hold a single symbol drawn from a finite set of symbols called the alphabet of the machine. It has a "head" that, at any point in the machine's operation, is positioned over one of these cells, and a "state" selected from a finite set of states. At each step of its operation, the head reads the symbol in its cell. Then, based on the symbol and the machine's own present state, the machine writes a symbol into the same cell, and moves the head one step to the left or the right, or halts the computation. The choice of which replacement symbol to write and which direction to move is based on a finite table that specifies what to do for each comb ...
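The read-write-move cycle described above can be sketched as a small simulator. The rule table, the state names "carry" and "halt", and the blank symbol "_" are assumptions made for this example: the table increments a binary number, starting with the head on its rightmost digit, and the halting transitions write a symbol and leave the head in place.

```python
from collections import defaultdict

BLANK = "_"

# Transition table: (state, symbol read) -> (symbol to write, head move, next state).
# Moves: -1 = left, +1 = right, 0 = stay (used here only on the halting step).
INCREMENT = {
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, carry moves left
    ("carry", "0"): ("1", 0, "halt"),    # 0 + carry = 1, done
    ("carry", BLANK): ("1", 0, "halt"),  # ran off the left end: new leading 1
}


def run(rules, tape_str, head, state="carry", max_steps=10_000):
    """Simulate a one-tape Turing machine; return the non-blank part of the tape."""
    tape = defaultdict(lambda: BLANK, enumerate(tape_str))  # unbounded tape of blanks
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("no halt within max_steps")
        write, move, state = rules[(state, tape[head])]  # look up the rule table
        tape[head] = write
        head += move
        steps += 1
    written = [i for i, s in tape.items() if s != BLANK]
    if not written:
        return ""
    return "".join(tape[i] for i in range(min(written), max(written) + 1))


print(run(INCREMENT, "1011", head=3))  # 1100
```

Using a `defaultdict` keyed by integer cell positions models the unbounded tape: any cell the head visits for the first time reads as blank, including cells to the left of the input.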

Super-recursive Algorithm

In computability theory, super-recursive algorithms are a generalization of ordinary algorithms that are more powerful, that is, compute more than Turing machines. The term was introduced by Mark Burgin, whose book "Super-recursive algorithms" develops their theory and presents several mathematical models. Turing machines and other mathematical models of conventional algorithms allow researchers to find properties of recursive algorithms and their computations. In a similar way, mathematical models of super-recursive algorithms, such as inductive Turing machines, allow researchers to find properties of super-recursive algorithms and their computations. Burgin, as well as other researchers (including Selim Akl, Eugene Eberbach, Peter Kugel, Jan van Leeuwen, Hava Siegelmann, Peter Wegner, and Jiří Wiedermann) who studied different kinds of super-recursive algorithms and contributed to the theory of super-recursive algorithms, have argued that super-recursive algorithms can be used ...

Language Identification In The Limit

Language identification in the limit is a formal model for inductive inference of formal languages, mainly by computers (see machine learning and induction of regular languages). It was introduced by E. Mark Gold in a technical report and a journal article with the same title. In this model, a teacher provides a learner with some presentation (i.e. a sequence of strings) of some formal language. Learning is seen as an infinite process. Each time the learner reads an element of the presentation, it should provide a representation (e.g. a formal grammar) for the language. Gold defines a learner as able to identify a class of languages in the limit if, given any presentation of any language in the class, the learner produces only a finite number of wrong representations and then sticks with the correct representation. However, the learner need not be able to announce its correctness; and the teacher might present a counterexample to any representation arbitrari ...
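A classic positive case is the class of all finite languages, presented as text in which every string of the target language eventually appears. The learner below simply conjectures "the language is exactly the strings seen so far", using the finite set itself as the representation (an assumption for this sketch, in place of a grammar). After the last new string has appeared it never changes its guess again, yet nothing in its behaviour tells it that this moment has passed. The target language and the helper `mind_changes` are hypothetical.

```python
from typing import FrozenSet, Iterable, Iterator


def finite_language_learner(presentation: Iterable[str]) -> Iterator[FrozenSet[str]]:
    """After each string, conjecture that the language is exactly the strings seen so far."""
    seen: set[str] = set()
    for s in presentation:
        seen.add(s)
        yield frozenset(seen)


def mind_changes(guesses):
    """Count how often the learner's conjecture differs from its previous one."""
    return sum(1 for prev, cur in zip(guesses, guesses[1:]) if prev != cur)


# A presentation of the hypothetical target language {"a", "ab", "b"}; strings may repeat.
presentation = ["a", "a", "ab", "b", "a", "b", "ab", "a"]
guesses = list(finite_language_learner(presentation))
print(guesses[-1] == frozenset({"a", "ab", "b"}), mind_changes(guesses))  # True 2
```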

Limit (math)

In mathematics, a limit is the value that a function (or sequence) approaches as the input (or index) approaches some value. Limits are essential to calculus and mathematical analysis, and are used to define continuity, derivatives, and integrals. The concept of a limit of a sequence is further generalized to the concept of a limit of a topological net, and is closely related to limit and direct limit in category theory. In formulas, a limit of a function is usually written as \lim_{x \to c} f(x) = L (although a few authors may use "Lt" instead of "lim") and is read as "the limit of f of x as x approaches c equals L". The fact that a function f approaches the limit L as x approaches c is sometimes denoted by a right arrow (→ or \rightarrow), as in f(x) \to L \text{ as } x \to c, which reads "f of x tends to L as x tends to c". History: Grégoire de Saint-Vincent gave the first definition of limit (terminus) of a geometric series in his work Opus Geometricum (1647): "The terminus of a pro ...
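A numerical table can make "approaches" concrete, although it is evidence rather than a proof. The sketch below uses sin(x)/x, which is undefined at c = 0 but has limit L = 1 there, and evaluates it at points c ± h for shrinking h. The function choice and the helper name `approach` are assumptions for this example.

```python
import math


def f(x: float) -> float:
    """sin(x)/x is undefined at x = 0, but its limit as x -> 0 is 1."""
    return math.sin(x) / x


def approach(func, c, steps=6):
    """Evaluate func at c + h and c - h for h = 10**-1, ..., 10**-steps."""
    return [(10.0 ** -k, func(c + 10.0 ** -k), func(c - 10.0 ** -k))
            for k in range(1, steps + 1)]


for h, right, left in approach(f, 0.0):
    print(f"h={h:.0e}  f(c+h)={right:.12f}  f(c-h)={left:.12f}")
```

Both columns close in on 1 from the same side, since sin(x)/x is an even function; the value f(0) itself is never computed, matching the idea that a limit depends only on values near c.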

AIXI

AIXI is a theoretical mathematical formalism for artificial general intelligence. It combines Solomonoff induction with sequential decision theory. AIXI was first proposed by Marcus Hutter in 2000, and several results regarding AIXI are proved in Hutter's 2005 book Universal Artificial Intelligence. AIXI is a reinforcement learning (RL) agent. It maximizes the expected total reward received from the environment. Intuitively, it simultaneously considers every computable hypothesis (or environment). In each time step, it looks at every possible program and evaluates how much reward that program generates depending on the next action taken. The promised rewards are then weighted by the subjective belief that this program constitutes the true environment. This belief is computed from the length of the program: longer programs are considered less likely, in line with Occam's razor. AIXI then selects the action that has the highest expected total reward in the weighted sum of al ...
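AIXI itself is incomputable and plans over the whole future, so the following is only a one-step toy of the weighting idea described above. It assumes a hypothetical, finite list of deterministic environments, each with an assumed program length in bits; environments that contradict the observed history are dropped, and the action with the highest 2**-length weighted reward is chosen. The weights are left unnormalised because rescaling does not change which action wins.

```python
from fractions import Fraction

# Hypothetical toy environments: (name, assumed program length in bits, reward for an action).
ENVIRONMENTS = [
    ("left_pays", 3, lambda a: 1 if a == "left" else 0),
    ("right_pays", 5, lambda a: 1 if a == "right" else 0),
    ("both_pay_half", 8, lambda a: Fraction(1, 2)),
]


def choose_action(envs, history, actions=("left", "right")):
    """One-step analogue of AIXI's choice: maximise 2**-length weighted expected reward."""
    # Discard environments that contradict any (action, reward) pair observed so far.
    alive = [(length, reward) for _, length, reward in envs
             if all(reward(a) == r for a, r in history)]

    def expected(action):
        return sum(Fraction(1, 2 ** length) * reward(action) for length, reward in alive)

    return max(actions, key=expected)


print(choose_action(ENVIRONMENTS, []))              # left
print(choose_action(ENVIRONMENTS, [("left", 0)]))   # right
```

With no history the shortest environment dominates the weighted sum, so the agent goes left; once going left is observed to pay nothing, only "right_pays" survives and the choice flips.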

No Free Lunch Theorem

In mathematical folklore, the "no free lunch" (NFL) theorem (sometimes pluralized) of David Wolpert and William Macready appears in the 1997 paper "No Free Lunch Theorems for Optimization". Wolpert, D.H., Macready, W.G. (1997), No Free Lunch Theorems for Optimization, IEEE Transactions on Evolutionary Computation 1, 67. Wolpert had previously derived no free lunch theorems for machine learning (statistical inference). Wolpert, David (1996), The Lack of A Priori Distinctions between Learning Algorithms, Neural Computation, pp. 1341–1390. The name alludes to the saying "there ain't no such thing as a free lunch", that is, there are no easy shortcuts to success. In 2005, Wolpert and Macready themselves indicated that the first theorem in their paper "state that any two optimization algorithms are equivalent when their performance is averaged across all possible problems". Wolpert, D.H., and Macready, W.G. (2005) "Coevolutionary free lunches", IEEE Transactions on Evolutionary C ...
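The averaging statement can be checked exhaustively on a tiny case. The sketch assumes a domain of three points, all 2**3 functions into {0, 1}, two hypothetical search algorithms that never revisit a point (one fixed-order, one adaptive), and "best value found after m evaluations" as the performance measure. This is a sanity check of one instance, not a proof of the theorem.

```python
from fractions import Fraction
from itertools import product

DOMAIN = (0, 1, 2)


def fixed_order(f, m):
    """Non-revisiting search that evaluates points 0, 1, 2 in order."""
    return [f[x] for x in DOMAIN[:m]]


def adaptive(f, m):
    """Non-revisiting search that starts at 1 and picks its next point from what it saw."""
    order = [1, 0 if f[1] == 1 else 2]
    order += [x for x in DOMAIN if x not in order]
    return [f[x] for x in order[:m]]


def average_best(search, m):
    """Best value found after m evaluations, averaged over all functions f: X -> {0, 1}."""
    functions = list(product((0, 1), repeat=len(DOMAIN)))  # f[x] is the value at x
    return Fraction(sum(max(search(f, m)) for f in functions), len(functions))


for m in (1, 2, 3):
    print(m, average_best(fixed_order, m), average_best(adaptive, m))
```

For every budget m the two columns agree: averaged over all problems, adapting to observed values buys nothing, although on particular functions either algorithm can be the better one.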

Computability

Computability is the ability to solve a problem in an effective manner. It is a key topic of the field of computability theory within mathematical logic and the theory of computation within computer science. The computability of a problem is closely linked to the existence of an algorithm to solve the problem. The most widely studied models of computability are the Turing-computable and μ-recursive functions, and the lambda calculus, all of which have computationally equivalent power. Other forms of computability are studied as well: computability notions weaker than Turing machines are studied in automata theory, while computability notions stronger than Turing machines are studied in the field of hypercomputation. Problems: A central idea in computability is that of a (computational) problem, which is a task whose computability can be explored. There are two key types of problems:
* A decision problem fixes a set S, which may be a set of strings, natural numbers, or oth ...
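In a decision problem the question for each input x is whether x belongs to the fixed set S, and the problem is decidable when some algorithm halts on every input with the correct yes/no answer. As a hypothetical example, take S to be the set of prime numbers; trial division is one such algorithm, since its loop is bounded by the square root of the input.

```python
def is_prime(n: int) -> bool:
    """Decide membership in S = {primes}: halts with True/False on every n >= 0."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:  # at most about sqrt(n) iterations, so it always halts
        if n % d == 0:
            return False
        d += 1
    return True


print([n for n in range(20) if is_prime(n)])  # [2, 3, 5, 7, 11, 13, 17, 19]
```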

Kullback–Leibler Divergence

In mathematical statistics, the Kullback–Leibler divergence (also called relative entropy and I-divergence), denoted D_\text{KL}(P \parallel Q), is a type of statistical distance: a measure of how one probability distribution P is different from a second, reference probability distribution Q. A simple interpretation of the KL divergence of P from Q is the expected excess surprise from using Q as a model when the actual distribution is P. While it is a distance, it is not a metric, the most familiar type of distance: it is not symmetric in the two distributions (in contrast to variation of information), and does not satisfy the triangle inequality. Instead, in terms of information geometry, it is a type of divergence, a generalization of squared distance, and for certain classes of distributions (notably an exponential family), it satisfies a generalized Pythagorean theorem (which applies to squared distances). In the simple case, a relative entropy of 0 ...
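For discrete distributions the divergence is D_\text{KL}(P \parallel Q) = \sum_i p_i \log(p_i / q_i). The sketch below computes it in nats (natural logarithm), using the usual conventions that terms with p_i = 0 contribute nothing and that the divergence is infinite when Q gives zero probability to an outcome P can produce. The two example distributions are hypothetical and show the asymmetry mentioned above.

```python
import math


def kl_divergence(p, q):
    """D_KL(P || Q) in nats for two discrete distributions over the same outcomes."""
    if len(p) != len(q):
        raise ValueError("p and q must list probabilities for the same outcomes")
    total = 0.0
    for p_i, q_i in zip(p, q):
        if p_i == 0:
            continue  # convention: 0 * log(0 / q_i) = 0
        if q_i == 0:
            return math.inf  # P assigns mass to an outcome that Q rules out
        total += p_i * math.log(p_i / q_i)
    return total


p = [0.5, 0.5]
q = [0.9, 0.1]
print(kl_divergence(p, q))  # about 0.511
print(kl_divergence(q, p))  # about 0.368: swapping the arguments changes the value
```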

Prior Probability

In Bayesian statistical inference, a prior probability distribution, often simply called the prior, of an uncertain quantity is the probability distribution that would express one's beliefs about this quantity before some evidence is taken into account. For example, the prior could be the probability distribution representing the relative proportions of voters who will vote for a particular politician in a future election. The unknown quantity may be a parameter of the model or a latent variable rather than an observable variable. Bayes' theorem calculates the renormalized pointwise product of the prior and the likelihood function, to produce the posterior probability distribution, which is the conditional distribution of the uncertain quantity given the data. Similarly, the prior probability of a random event or an uncertain proposition is the unconditional probability that is assigned before any relevant evidence is taken into account. Priors can be created using a num ...
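The "renormalized pointwise product" can be written out directly for a finite set of hypotheses. The sketch assumes a hypothetical coin whose probability of heads is one of three values, a uniform prior over them, and observed data of heads, heads, tails, so the likelihood of a value t is t · t · (1 − t). Exact fractions keep the arithmetic easy to check by hand.

```python
from fractions import Fraction


def bayes_update(prior, likelihood):
    """Posterior = prior x likelihood at each hypothesis, renormalised to sum to 1."""
    unnormalised = {h: prior[h] * likelihood(h) for h in prior}
    evidence = sum(unnormalised.values())  # the normalising constant P(data)
    return {h: w / evidence for h, w in unnormalised.items()}


# Hypothetical example: three candidate heads-probabilities with a uniform prior.
thetas = [Fraction(1, 4), Fraction(1, 2), Fraction(3, 4)]
prior = {t: Fraction(1, 3) for t in thetas}
posterior = bayes_update(prior, lambda t: t * t * (1 - t))  # heads, heads, tails
print({str(t): str(p) for t, p in posterior.items()})
# {'1/4': '3/20', '1/2': '2/5', '3/4': '9/20'}
```

The prior is uniform, but after two heads and one tail the posterior favours the higher heads-probabilities, with 3/4 now the most probable value.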