|

Software Pipelining

In computer science, software pipelining is a technique used to optimize loops, in a manner that parallels hardware pipelining. Software pipelining is a type of out-of-order execution, except that the reordering is done by a compiler (or in the case of hand written assembly code, by the programmer) instead of the processor. Some computer architectures have explicit support for software pipelining, notably Intel's IA-64 architecture. It is important to distinguish ''software pipelining'', which is a target code technique for overlapping loop iterations, from ''modulo scheduling'', the currently most effective known compiler technique for generating software pipelined loops. Software pipelining has been known to assembly language programmers of machines with instruction-level parallelism since such architectures existed. Effective compiler generation of such code dates to the invention of modulo scheduling by Rau and Glaeser. Lam showed that special hardware is unnecessary for ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Computer Science

Computer science is the study of computation, information, and automation. Computer science spans Theoretical computer science, theoretical disciplines (such as algorithms, theory of computation, and information theory) to Applied science, applied disciplines (including the design and implementation of Computer architecture, hardware and Software engineering, software). Algorithms and data structures are central to computer science. The theory of computation concerns abstract models of computation and general classes of computational problem, problems that can be solved using them. The fields of cryptography and computer security involve studying the means for secure communication and preventing security vulnerabilities. Computer graphics (computer science), Computer graphics and computational geometry address the generation of images. Programming language theory considers different ways to describe computational processes, and database theory concerns the management of re ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Random Access Memory

Random-access memory (RAM; ) is a form of electronic computer memory that can be read and changed in any order, typically used to store working data and machine code. A random-access memory device allows data items to be read or written in almost the same amount of time irrespective of the physical location of data inside the memory, in contrast with other direct-access data storage media (such as hard disks and magnetic tape), where the time required to read and write data items varies significantly depending on their physical locations on the recording medium, due to mechanical limitations such as media rotation speeds and arm movement. In today's technology, random-access memory takes the form of integrated circuit (IC) chips with MOS (metal–oxide–semiconductor) memory cells. RAM is normally associated with volatile types of memory where stored information is lost if power is removed. The two main types of volatile random-access semiconductor memory are static ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Predicate (computer Programming)

In logic, a predicate is a symbol that represents a property or a relation. For instance, in the first-order formula P(a), the symbol P is a predicate that applies to the individual constant a. Similarly, in the formula R(a,b), the symbol R is a predicate that applies to the individual constants a and b. According to Gottlob Frege, the meaning of a predicate is exactly a function from the domain of objects to the truth values "true" and "false". In the semantics of logic, predicates are interpreted as relations. For instance, in a standard semantics for first-order logic, the formula R(a,b) would be true on an interpretation if the entities denoted by a and b stand in the relation denoted by R. Since predicates are non-logical symbols, they can denote different relations depending on the interpretation given to them. While first-order logic only includes predicates that apply to individual objects, other logics may allow predicates that apply to collections of objects defined ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Monica Lam

Monica Sin-Ling Lam is an American computer scientist. She is a professor in the Computer Science Department at Stanford University. Education Monica Lam received a B.Sc. from University of British Columbia in 1980 and a Ph.D. in computer science from Carnegie Mellon University in 1987. Career Lam joined the faculty of Computer Science at Stanford University in 1988. She has contributed to the research of a wide range of computer systems topics including compilers, program analysis, operating systems, security, computer architecture, and high-performance computing. More recently, she is working in natural language processing, and virtual assistants with an emphasis on privacy protection. She is the faculty director of the Open Virtual Assistant Lab, which organized the first workshop for the World Wide Voice Web. The lab developed the open-source Almond voice assistant, which is sponsored by the National Science Foundation. Almond received Popular Science's Best of What's New a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Code Bloat

In computer programming, code bloat is the production of program code (source code or machine code) that is unnecessarily long, slow, or otherwise wasteful of resources. Code bloat can be caused by inadequacies in the programming language in which the code is written, the compiler used to compile it, or the programmer writing it. Thus, while code bloat generally refers to source code size (as produced by the programmer), it can be used to refer instead to the ''generated'' code size or even the binary file size. Examples The following JavaScript algorithm has a large number of redundant variables, unnecessary logic and inefficient string concatenation. // Complex function TK2getImageHTML(size, zoom, sensor, markers) ; The same logic can be stated more efficiently as follows: // Simplified const TK2getImageHTML = (size, zoom, sensor, markers) => ; Code density of different languages The difference in code density between various computer languages is so great that o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Cache (computing)

In computing, a cache ( ) is a hardware or software component that stores data so that future requests for that data can be served faster; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere. A cache hit occurs when the requested data can be found in a cache, while a cache miss occurs when it cannot. Cache hits are served by reading data from the cache, which is faster than recomputing a result or reading from a slower data store; thus, the more requests that can be served from the cache, the faster the system performs. To be cost-effective, caches must be relatively small. Nevertheless, caches are effective in many areas of computing because typical Application software, computer applications access data with a high degree of locality of reference. Such access patterns exhibit temporal locality, where data is requested that has been recently requested, and spatial locality, where data is requested that is stored near dat ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Latency (engineering)

Latency, from a general point of view, is a time delay between the Causality, cause and the effect of some physical change in the system being observed. Lag (video games), Lag, as it is known in Gaming culture, gaming circles, refers to the latency between the input to a simulation and the visual or auditory response, often occurring because of network delay in online games. The original meaning of “latency”, as used widely in psychology, medicine and most other disciplines, derives from “latent”, a word of Latin origin meaning “hidden”. Its different and relatively recent meaning (this topic) of “lateness” or “delay” appears to derive from its superficial similarity to the word “late”, from the old English “laet”. Latency is physically a consequence of the limited velocity at which any Event (relativity), physical interaction can propagate. The magnitude of this velocity is always less than or equal to the speed of light. Therefore, every physical s ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Duff's Device

In the C programming language, Duff's device is a way of manually implementing loop unrolling by interleaving two syntactic constructs of C: the - loop and a switch statement. Its discovery is credited to Tom Duff in November 1983, when Duff was working for Lucasfilm and used it to speed up a real-time animation program. Loop unrolling attempts to reduce the overhead of conditional branching needed to check whether a loop is done, by executing a batch of loop bodies per iteration. To handle cases where the number of iterations is not divisible by the unrolled-loop increments, a common technique among assembly language programmers is to jump directly into the middle of the unrolled loop body to handle the remainder. Duff implemented this technique in C by using C's case label fall-through feature to jump into the unrolled body. Original version Duff's problem was to copy 16-bit unsigned integers ("shorts" in most C implementations) from an array into a memory-mapped output regist ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Loop Unrolling

Loop unrolling, also known as loop unwinding, is a loop transformation technique that attempts to optimize a program's execution speed at the expense of its binary size, which is an approach known as space–time tradeoff. The transformation can be undertaken manually by the programmer or by an optimizing compiler. On modern processors, loop unrolling is often counterproductive, as the increased code size can cause more cache misses; ''cf.'' Duff's device. The goal of loop unwinding is to increase a program's speed by reducing or eliminating instructions that control the loop, such as pointer arithmetic and "end of loop" tests on each iteration; reducing branch penalties; as well as hiding latencies, including the delay in reading data from memory. To eliminate this computational overhead, loops can be re-written as a repeated sequence of similar independent statements. Loop unrolling is also part of certain formal verification techniques, in particular bounded model chec ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

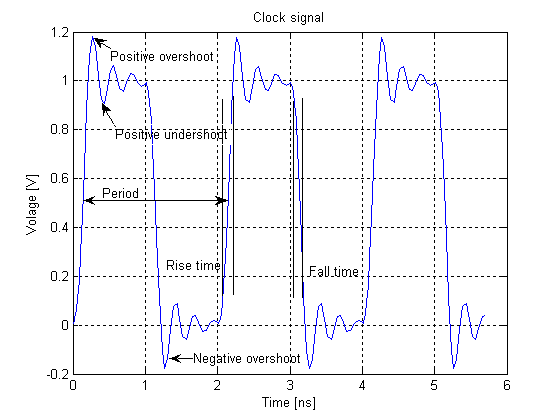

Clock Cycle

In electronics and especially synchronous digital circuits, a clock signal (historically also known as ''logic beat'') is an electronic logic signal (voltage or current) which oscillates between a high and a low state at a constant frequency and is used like a metronome to synchronize actions of digital circuits. In a synchronous logic circuit, the most common type of digital circuit, the clock signal is applied to all storage devices, flip-flops and latches, and causes them all to change state simultaneously, preventing race conditions. A clock signal is produced by an electronic oscillator called a clock generator. The most common clock signal is in the form of a square wave with a 50% duty cycle. Circuits using the clock signal for synchronization may become active at either the rising edge, falling edge, or, in the case of double data rate, both in the rising and in the falling edges of the clock cycle. Digital circuits Most integrated circuits (ICs) of suffi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Processor Register

A processor register is a quickly accessible location available to a computer's processor. Registers usually consist of a small amount of fast storage, although some registers have specific hardware functions, and may be read-only or write-only. In computer architecture, registers are typically addressed by mechanisms other than main memory, but may in some cases be assigned a memory address e.g. DEC PDP-10, ICT 1900. Almost all computers, whether load/store architecture or not, load items of data from a larger memory into registers where they are used for arithmetic operations, bitwise operations, and other operations, and are manipulated or tested by machine instructions. Manipulated items are then often stored back to main memory, either by the same instruction or by a subsequent one. Modern processors use either static or dynamic random-access memory (RAM) as main memory, with the latter usually accessed via one or more cache levels. Processor registers are normal ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Instruction-level Parallelism

Instruction-level parallelism (ILP) is the Parallel computing, parallel or simultaneous execution of a sequence of Instruction set, instructions in a computer program. More specifically, ILP refers to the average number of instructions run per step of this parallel execution. Discussion ILP must not be confused with Concurrency (computer science), concurrency. In ILP, there is a single specific Thread (computing), thread of execution of a Process (computing), process. On the other hand, concurrency involves the assignment of multiple threads to a Central processing unit, CPU's core in a strict alternation, or in true parallelism if there are enough CPU cores, ideally one core for each runnable thread. There are two approaches to instruction-level parallelism: Computer hardware, hardware and software. Hardware-level ILP works upon dynamic parallelism, whereas software-level ILP works on static parallelism. Dynamic parallelism means that the processor decides at run time whic ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |