Single Instruction, Multiple Data

Single instruction, multiple data (SIMD) is a type of parallel processing in Flynn's taxonomy. SIMD can be internal (part of the hardware design) and it can be directly accessible through an instruction set architecture (ISA), but it should not be confused with an ISA. SIMD describes computers with multiple processing elements that perform the same operation on multiple data points simultaneously. Such machines exploit data-level parallelism, but not concurrency: there are simultaneous (parallel) computations, but each unit performs the exact same instruction at any given moment (just with different data). SIMD is particularly applicable to common tasks such as adjusting the contrast in a digital image or adjusting the volume of digital audio. Most modern CPU designs include SIMD instructions to improve the performance of multimedia workloads. SIMD has three different subcategories in Flynn's 1972 taxonomy, one of which is SIMT. SIMT should not be confused with software th ...
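
The volume-adjustment case mentioned above can be modeled in a few lines. This is only a conceptual sketch in plain Python, not real hardware SIMD: the lane count `LANES` and the helper names `simd_apply` and `halve_volume` are assumptions made for illustration.

```python
from typing import Callable, List

LANES = 4  # hypothetical vector width; real hardware widths vary


def simd_apply(op: Callable[[int], int], samples: List[int]) -> List[int]:
    """Apply one operation to all samples, LANES elements per step."""
    out: List[int] = []
    for start in range(0, len(samples), LANES):
        chunk = samples[start:start + LANES]
        # One conceptual "instruction": the same op on every lane of the chunk.
        out.extend(op(x) for x in chunk)
    return out


def halve_volume(sample: int) -> int:
    # An arithmetic right shift halves a signed audio sample.
    return sample >> 1


audio = [1000, -2000, 300, 4, -6, 8]
quieter = simd_apply(halve_volume, audio)
print(quieter)
```

Every lane in a chunk receives the same operation and differs only in its data, which is the property the description above attributes to SIMD machines.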

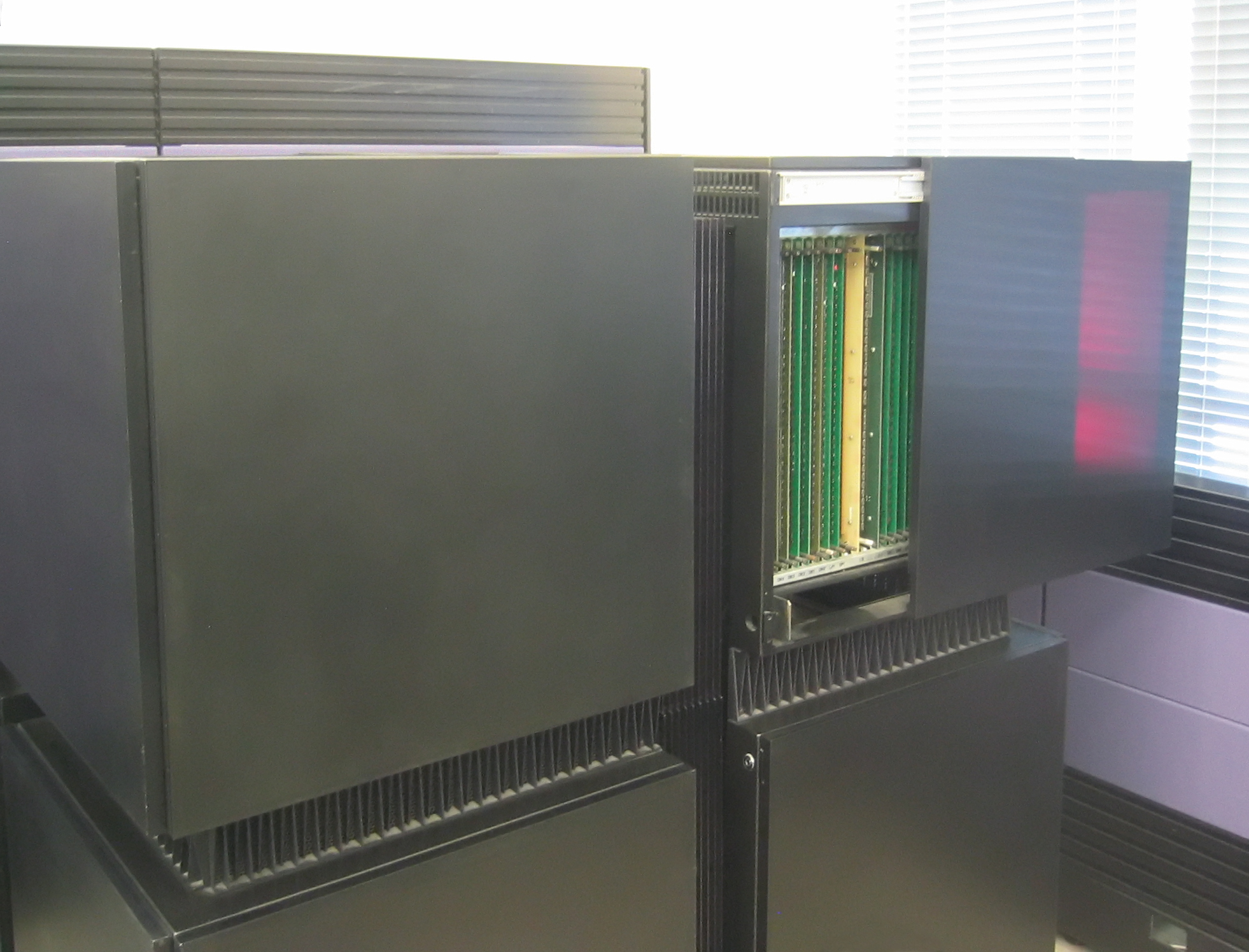

TI Advanced Scientific Computer

The Advanced Scientific Computer (ASC) is a supercomputer designed and manufactured by Texas Instruments (TI) between 1966 and 1973. The ASC's central processing unit (CPU) supported vector processing, a performance-enhancing technique that was key to its high performance. The ASC and the Control Data Corporation STAR-100 supercomputer (which was introduced in the same year) were the first computers to feature vector processing. However, this technique's potential was not fully realized by either the ASC or the STAR-100 due to an insufficient understanding of the technique; it was the Cray Research Cray-1 supercomputer, announced in 1975, that would fully realize and popularize vector processing. The more successful implementation of vector processing in the Cray-1 would demarcate the ASC (and STAR-100) as first-generation vector processors, with the Cray-1 belonging to the second. History: TI began as a division of Geophysical Service Incorporated (GSI), a company that per ...

Visual Instruction Set

Visual Instruction Set, or VIS, is a SIMD instruction set extension for SPARC V9 microprocessors developed by Sun Microsystems. There are five versions of VIS: VIS 1, VIS 2, VIS 2+, VIS 3 and VIS 4. History: VIS 1 was introduced in 1994 and was first implemented by Sun in their UltraSPARC microprocessor (1995) and by Fujitsu in their SPARC64 GP microprocessors (2000). VIS 2 was first implemented by the UltraSPARC III. All subsequent UltraSPARC and SPARC64 microprocessors implement the instruction set. VIS 3 was first implemented in the SPARC T4 microprocessor. VIS 4 was first implemented in the SPARC M7 microprocessor. Differences vs x86: VIS is not an instruction toolkit like Intel's MMX and SSE. MMX has only 8 registers shared with the FPU stack, while SPARC processors have 32 registers, also aliased to the double-precision (64-bit) floating point registers. As with the SIMD instruction set extensions on other RISC processors, VIS strictly conforms to the main principl ...

PA-RISC

PA-RISC is an instruction set architecture (ISA) developed by Hewlett-Packard. As the name implies, it is a reduced instruction set computer (RISC) architecture, where the PA stands for Precision Architecture. The design is also referred to as HP/PA for Hewlett Packard Precision Architecture. The architecture was introduced on 26 February 1986, when the HP 3000 Series 930 and HP 9000 Model 840 computers were launched featuring the first implementation, the TS1. PA-RISC has been succeeded by the Itanium (originally IA-64) ISA, jointly developed by HP and Intel. HP stopped selling PA-RISC-based HP 9000 systems at the end of 2008 but supported servers running PA-RISC chips until 2013. History: In the late 1980s, HP was building four series of computers, all based on CISC CPUs. One line was the IBM PC-compatible Intel i286-based Vectra Series, started in 1986. All others were non-Intel systems. One of them was the HP Series 300 of Motorola 68000-based workstations, another Serie ...

Multimedia Acceleration eXtensions

The Multimedia Acceleration eXtensions or MAX are instruction set extensions to the Hewlett-Packard PA-RISC instruction set architecture (ISA). MAX was developed to improve the performance of multimedia applications that were becoming more prevalent during the 1990s. MAX instructions operate on 32- or 64-bit SIMD data types consisting of multiple 16-bit integers packed in general-purpose registers. The available functionality includes additions, subtractions and shifts. The first version, MAX-1, was for the 32-bit PA-RISC 1.1 ISA. The second version, MAX-2, was for the 64-bit PA-RISC 2.0 ISA. Notability: The approach is notable because the set of instructions is much smaller than in other multimedia CPUs, and also more general-purpose. The small set and simplicity of the instructions reduce the recurring costs of the electronics, as well as the costs and difficulty of the design. The general-purpose nature of the instructions increases their overall value. These instructions req ...
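
The idea of several 16-bit integers packed into one general-purpose register can be illustrated with a small software model. The sketch below assumes a 64-bit word holding four lanes and wraparound (modulo 2^16) addition; the names `pack4`, `unpack4` and `packed_add16` are hypothetical, and the code does not claim to reproduce the actual semantics of any MAX instruction.

```python
HIGH_BITS = 0x8000_8000_8000_8000  # top bit of each 16-bit lane
LOW_BITS = 0x7FFF_7FFF_7FFF_7FFF   # lower 15 bits of each 16-bit lane


def pack4(lanes):
    """Pack four 16-bit values into one 64-bit word, lane 0 lowest."""
    word = 0
    for i, value in enumerate(lanes):
        word |= (value & 0xFFFF) << (16 * i)
    return word


def unpack4(word):
    """Split a 64-bit word back into its four 16-bit lanes."""
    return [(word >> (16 * i)) & 0xFFFF for i in range(4)]


def packed_add16(a, b):
    """Add four 16-bit lanes at once with wraparound in each lane."""
    # Adding only the low 15 bits cannot carry into the next lane.
    low_sum = (a & LOW_BITS) + (b & LOW_BITS)
    # Restore each lane's top bit with XOR, discarding its carry-out.
    return low_sum ^ ((a ^ b) & HIGH_BITS)


x = pack4([1, 2, 3, 0xFFFF])
y = pack4([10, 20, 30, 1])
print(unpack4(packed_add16(x, y)))
```

The last lane wraps from 0xFFFF to 0 without disturbing its neighbours, which is what distinguishes a packed addition from an ordinary 64-bit addition of the same two words.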

Intel i860

The Intel i860 (also known as 80860) is a RISC microprocessor design introduced by Intel in 1989. It is one of Intel's first attempts at an entirely new, high-end instruction set architecture since the failed Intel iAPX 432 from the beginning of the 1980s. It was the world's first million-transistor chip. It was released with considerable fanfare, slightly obscuring the earlier Intel i960, which was successful in some niches of embedded systems, and which many considered to be a better design. The i860 never achieved commercial success and the project was terminated in the mid-1990s. Implementations: The first implementation of the i860 architecture is the i860 XR microprocessor (code-named N10), which ran at 25, 33, or 40 MHz. The second-generation i860 XP microprocessor (code-named N11) added 4-Mbyte pages, larger on-chip caches, second-level cache support, faster buses, and hardware support for bus snooping, for cache consistency in multiprocessor systems. A process ...

Multiple Instruction, Multiple Data

In computing, multiple instruction, multiple data (MIMD) is a technique employed to achieve parallelism. Machines using MIMD have a number of processors that function asynchronously and independently. At any time, different processors may be executing different instructions on different pieces of data. MIMD architectures may be used in a number of application areas such as computer-aided design/computer-aided manufacturing, simulation, modeling, and as communication switches. MIMD machines can be of either shared memory or distributed memory categories. These classifications are based on how MIMD processors access memory. Shared memory machines may be of the bus-based, extended, or hierarchical type. Distributed memory machines may have hypercube or mesh interconnection schemes. Examples: An example of a MIMD system is Intel Xeon Phi, descended from the Larrabee microarchitecture. These processors have multiple processing cores (up to 61 as of 2015) that can execute different instr ...
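
The "different instructions on different data" idea can be sketched with two worker threads running unrelated functions. This is a loose software analogy only, assuming Python's standard `concurrent.futures` thread pool stands in for independent processors; the functions `total` and `longest` are hypothetical, and CPython threads do not necessarily execute in true hardware parallel.

```python
from concurrent.futures import ThreadPoolExecutor


def total(values):
    # First instruction stream: numeric reduction.
    return sum(values)


def longest(words):
    # Second instruction stream: string comparison on unrelated data.
    return max(words, key=len)


with ThreadPoolExecutor(max_workers=2) as pool:
    # Each worker runs a different function on a different data set.
    numeric_job = pool.submit(total, [1, 2, 3, 4])
    text_job = pool.submit(longest, ["bus", "mesh", "hypercube"])
    results = (numeric_job.result(), text_job.result())

print(results)
```

In contrast with a SIMD model, neither worker is constrained to follow the other's instruction sequence or timing.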

Connection Machine

A Connection Machine (CM) is a member of a series of massively parallel supercomputers that grew out of doctoral research on alternatives to the traditional von Neumann architecture of computers by Danny Hillis at Massachusetts Institute of Technology (MIT) in the early 1980s. Starting with CM-1, the machines were intended originally for applications in artificial intelligence (AI) and symbolic processing, but later versions found greater success in the field of computational science. Origin of idea: Danny Hillis and Sheryl Handler founded Thinking Machines Corporation (TMC) in Waltham, Massachusetts, in 1983, moving in 1984 to Cambridge, MA. At TMC, Hillis assembled a team to develop what would become the CM-1 Connection Machine, a design for a massively parallel hypercube-based arrangement of thousands of microprocessors, springing from his PhD thesis work at MIT in Electrical Engineering and Computer Science (1985). The dissertation won the ACM Distinguished Dissertation prize ...

Thinking Machines Corporation

Thinking Machines Corporation was a supercomputer manufacturer and artificial intelligence (AI) company, founded in Waltham, Massachusetts, in 1983 by Sheryl Handler and W. Daniel "Danny" Hillis to turn Hillis's doctoral work at the Massachusetts Institute of Technology (MIT) on massively parallel computing architectures into a commercial product named the Connection Machine. The company moved in 1984 from Waltham to Kendall Square in Cambridge, Massachusetts, close to the MIT AI Lab. Thinking Machines made some of the most powerful supercomputers of the time, and by 1993 the four fastest computers in the world were Connection Machines. The firm filed for bankruptcy in 1994; its hardware and parallel computing software divisions were acquired in time by Sun Microsystems. Supercomputer products: On the hardware side, Thinking Machines produced several Connection Machine models (in chronological order): the CM-1, CM-2, CM-200, CM-5, and CM-5E. The CM-1 and 2 came first ...

Supercomputer

A supercomputer is a computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, there have existed supercomputers which can perform over 10^17 FLOPS (a hundred quadrillion FLOPS, 100 petaFLOPS or 100 PFLOPS). For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (10^11) to tens of teraFLOPS (10^13). Since November 2017, all of the world's fastest 500 supercomputers run on Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers. Supercomputers play an important role in the field of computational science, and are used for a wide range of computationally intensive tasks in var ...
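
The unit conversions in the comparison above can be checked with plain integer arithmetic. The snippet is only a worked example of the figures quoted in the text; the variable names are illustrative.

```python
GIGA = 10**9
TERA = 10**12
PETA = 10**15

supercomputer_flops = 10**17   # figure quoted for supercomputers since 2017
desktop_low_flops = 10**11     # hundreds of gigaFLOPS
desktop_high_flops = 10**13    # tens of teraFLOPS

# Express each figure in the unit used in the prose.
in_petaflops = supercomputer_flops // PETA
low_in_gigaflops = desktop_low_flops // GIGA
high_in_teraflops = desktop_high_flops // TERA

# How many times faster the quoted supercomputer figure is than each desktop bound.
ratio_vs_fast_desktop = supercomputer_flops // desktop_high_flops
ratio_vs_slow_desktop = supercomputer_flops // desktop_low_flops

print(in_petaflops, low_in_gigaflops, high_in_teraflops)
print(ratio_vs_fast_desktop, ratio_vs_slow_desktop)
```

On these figures the gap between the quoted supercomputer performance and a desktop spans four to six orders of magnitude.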

Massive Parallel Processing

Massively parallel is the term for using a large number of computer processors (or separate computers) to simultaneously perform a set of coordinated computations in parallel. GPUs are massively parallel architectures with tens of thousands of threads. One approach is grid computing, where the processing power of many computers in distributed, diverse administrative domains is opportunistically used whenever a computer is available (Radu Prodan and Thomas Fahringer, Grid Computing: Experiment Management, Tool Integration, and Scientific Workflows, 2007, pages 1–4). An example is BOINC, a volunteer-based, opportunistic grid system, whereby the grid provides power only on a best-effort basis (Francisco Fernández de Vega, Parallel and Distributed Computational Intelligence, 2010, pages 65–68). Another approach is grouping many processors in close proximity to each other, as in a computer cluster. In such a centralized system the speed and flexibility of the interconnect b ...

Cray-1

The Cray-1 was a supercomputer designed, manufactured and marketed by Cray Research. Announced in 1975, the first Cray-1 system was installed at Los Alamos National Laboratory in 1976. Eventually, over 100 Cray-1s were sold, making it one of the most successful supercomputers in history. It is perhaps best known for its unique shape, a relatively small C-shaped cabinet with a ring of benches around the outside covering the power supplies and the cooling system. The Cray-1 was the first supercomputer to successfully implement the vector processor design. These systems improve the performance of math operations by arranging memory and registers to quickly perform a single operation on a large set of data. Previous systems like the CDC STAR-100 and ASC had implemented these concepts but did so in a way that seriously limited their performance. The Cray-1 addressed these problems and produced a machine that ran several times faster than any similar design. The Cray-1's architect w ...