
Signalling

In signal processing, a signal is a function that conveys information about a phenomenon. Any quantity that can vary over space or time can be used as a signal to share messages between observers. The ''IEEE Transactions on Signal Processing'' includes audio, video, speech, image, sonar, and radar as examples of signals. A signal may also be defined as an observable change in a quantity over space or time (a time series), even if it does not carry information. In nature, signals can be actions done by an organism to alert other organisms, ranging from the release of plant chemicals to warn nearby plants of a predator, to sounds or motions made by animals to alert other animals of food. Signaling occurs in all organisms even at cellular levels, with cell signaling. Signaling theory, in evolutionary biology, proposes that a substantial driver for evolution is the ability of animals to communicate with each other by developing ways of signaling. In human engineering, signals are typi ...

Signaling Theory

Within evolutionary biology, signalling theory is a body of theoretical work examining communication between individuals, both within species and across species. The central question is when organisms with conflicting interests, such as in sexual selection, should be expected to provide honest signals (no presumption being made of conscious intention) rather than cheating. Mathematical models describe how signalling can contribute to an evolutionarily stable strategy. Signals are given in contexts such as mate selection by females, which subjects the advertising males' signals to selective pressure. Signals thus evolve because they modify the behaviour of the receiver to benefit the signaller. Signals may be honest, conveying information which usefully increases the fitness of the receiver, or dishonest. An individual can cheat by giving a dishonest signal, which might briefly benefit that signaller, at the risk of undermining the signalling system for the whole population. Th ...

Signal Processing

Signal processing is an electrical engineering subfield that focuses on analyzing, modifying and synthesizing ''signals'', such as sound, images, and scientific measurements. Signal processing techniques are used to optimize transmissions, improve digital storage efficiency, correct distorted signals, improve subjective video quality, and detect or pinpoint components of interest in a measured signal. History: According to Alan V. Oppenheim and Ronald W. Schafer, the principles of signal processing can be found in the classical numerical analysis techniques of the 17th century. They further state that the digital refinement of these techniques can be found in the digital control systems of the 1940s and 1950s. In 1948, Claude Shannon wrote the influential paper "A Mathematical Theory of Communication", which was published in the ''Bell System Technical Journal''. The paper laid the groundwork for later development of information c ...

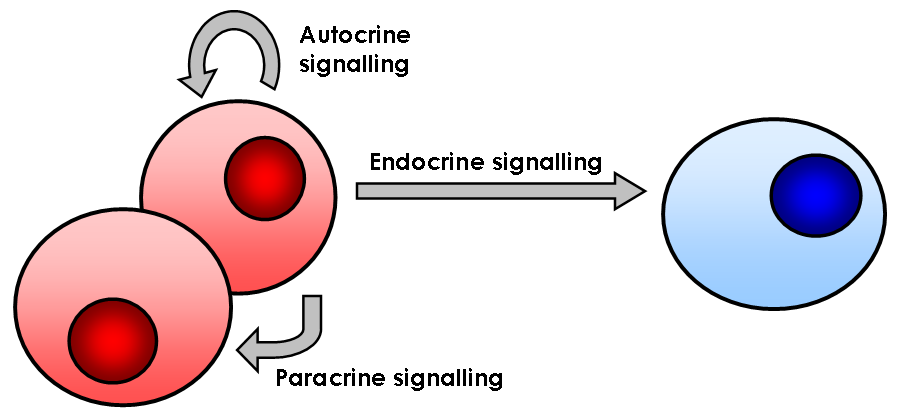

Cell Signaling

In biology, cell signaling (cell signalling in British English) or cell communication is the ability of a cell to receive, process, and transmit signals with its environment and with itself. Cell signaling is a fundamental property of all cellular life in prokaryotes and eukaryotes. Signals that originate from outside a cell (or extracellular signals) can be physical agents like mechanical pressure, voltage, temperature, light, or chemical signals (e.g., small molecules, peptides, or gas). Cell signaling can occur over short or long distances, and as a result can be classified as autocrine, juxtacrine, intracrine, paracrine, or endocrine. Signaling molecules can be synthesized from various biosynthetic pathways and released through passive or active transports, or even from cell damage. Receptors play a key role in cell signaling as they are able to detect chemical signals or physical stimuli. Receptors are generally proteins located on the cell surface or within the interio ...

Signal Integrity

Signal integrity or SI is a set of measures of the quality of an electrical signal. In digital electronics, a stream of binary values is represented by a voltage (or current) waveform. However, digital signals are fundamentally analog in nature, and all signals are subject to effects such as noise, distortion, and loss. Over short distances and at low bit rates, a simple conductor can transmit this with sufficient fidelity. At high bit rates and over longer distances or through various media, various effects can degrade the electrical signal to the point where errors occur and the system or device fails. Signal integrity engineering is the task of analyzing and mitigating these effects. It is an important activity at all levels of electronics packaging and assembly, from internal connections of an integrated circuit (IC), ...

Information Content

In information theory, the information content, self-information, surprisal, or Shannon information is a basic quantity derived from the probability of a particular event occurring from a random variable. It can be thought of as an alternative way of expressing probability, much like odds or log-odds, but which has particular mathematical advantages in the setting of information theory. The Shannon information can be interpreted as quantifying the level of "surprise" of a particular outcome. As it is such a basic quantity, it also appears in several other settings, such as the length of a message needed to transmit the event given an optimal source coding of the random variable. The Shannon information is closely related to ''entropy'', which is the expected value of the self-information of a random variable, quantifying how surprising the random variable is "on average". This is the average amount of self-information an observer would expect to gain about a random variable when ...
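
As a rough illustration of the "surprise" interpretation, the self-information of an outcome with probability p is commonly written as log(1/p). The sketch below assumes base 2 (so the result is in bits) unless told otherwise; the function name `self_information` and the sample probabilities are hypothetical and chosen only for this example.

```python
import math

def self_information(p: float, base: float = 2.0) -> float:
    """Return the surprisal log_base(1/p) of an outcome with probability p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must lie in the interval (0, 1]")
    return math.log(1.0 / p) / math.log(base)

# A certain outcome carries no surprise; rarer outcomes carry more.
for p in (1.0, 0.5, 0.125):
    print(f"p = {p}: {self_information(p):.3f} bits")
```

Passing `base=math.e` or `base=10` would report the same quantity in nats or hartleys instead of bits.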

Information Theory

Information theory is the scientific study of the quantification, storage, and communication of information. The field was originally established by the works of Harry Nyquist and Ralph Hartley in the 1920s, and Claude Shannon in the 1940s. The field is at the intersection of probability theory, statistics, computer science, statistical mechanics, information engineering, and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (with two equally likely outcomes) provides less information (lower entropy) than specifying the outcome from a roll of a die (with six equally likely outcomes). Some other important measures in information theory are mutual informat ...
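
The coin and die comparison can be checked by hand. The worked computation below assumes the standard Shannon entropy formula with a base-2 logarithm, so both results are in bits; for n equally likely outcomes the sum collapses to log2 n.

```latex
\begin{aligned}
H(\text{coin}) &= -\sum_{i=1}^{2} \tfrac{1}{2}\log_2 \tfrac{1}{2} = \log_2 2 = 1 \text{ bit}, \\
H(\text{die})  &= -\sum_{i=1}^{6} \tfrac{1}{6}\log_2 \tfrac{1}{6} = \log_2 6 \approx 2.585 \text{ bits}.
\end{aligned}
```

The die outcome therefore carries roughly 1.6 bits more information than the coin flip.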

Noise (electronics)

In electronics, noise is an unwanted disturbance in an electrical signal. Noise generated by electronic devices varies greatly as it is produced by several different effects. In particular, noise is inherent in physics, and central to thermodynamics. Any conductor with electrical resistance will generate thermal noise inherently. The final elimination of thermal noise in electronics can only be achieved cryogenically, and even then quantum noise would remain inherent. Electronic noise is a common component of noise in signal processing. In communication systems, noise is an error or undesired random disturbance of a useful information signal in a communication channel. The noise is a summation of unwanted or disturbing energy from natural and sometimes man-made sources. Noise is, however, typically distinguished from interference, for example in the signal-to-noise ratio (SNR), signal-to-interference ratio (SIR) and signal-to-noise plus interference ratio (SNIR) measu ...
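
To make the signal-to-noise ratio concrete, here is a minimal sketch that assumes the usual decibel convention, SNR = 10 log10(P_signal / P_noise), with both powers in the same unit. The helper names `mean_power` and `snr_db` and the sample values are hypothetical, not taken from the excerpt above.

```python
import math

def mean_power(samples):
    """Average power of a sampled waveform (mean of the squared samples)."""
    samples = list(samples)
    if not samples:
        raise ValueError("need at least one sample")
    return sum(s * s for s in samples) / len(samples)

def snr_db(signal_power: float, noise_power: float) -> float:
    """Signal-to-noise ratio in decibels from two average powers."""
    if signal_power <= 0 or noise_power <= 0:
        raise ValueError("powers must be positive")
    return 10.0 * math.log10(signal_power / noise_power)

# Hypothetical example: a unit-amplitude square wave and a much weaker disturbance.
signal = [1.0, -1.0, 1.0, -1.0]
noise = [0.1, -0.1, -0.1, 0.1]
print(f"SNR = {snr_db(mean_power(signal), mean_power(noise)):.1f} dB")
```

In practice the noise power is usually estimated from a signal-free measurement, since the signal and noise components of a received waveform cannot be observed separately.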

William Powell Frith The Signal 1858

William is a male given name of Germanic origin (Hanks, Hardcastle and Hodges, ''Oxford Dictionary of First Names'', Oxford University Press, 2nd edition, p. 276). It became very popular in the English language after the Norman conquest of England in 1066 (All Things William, "Meaning & Origin of the Name"), and remained so throughout the Middle Ages and into the modern era. It is sometimes abbreviated "Wm." Shortened familiar versions in English include Will, Wills, Willy, Willie, Bill, and Billy. A common Irish form is Liam. Scottish diminutives include Wull, Willie or Wullie (as in Oor Wullie or the play ''Douglas''). Female forms are Willa, Willemina, Wilma and Wilhelmina. Etymology: William is related to the given name ''Wilhelm'' (cf. Proto-Germanic ᚹᛁᛚᛃᚨᚺᛖᛚᛗᚨᛉ, ''*Wiljahelmaz'' > German ''Wilhelm'' and Old Norse ᚢᛁᛚᛋᛅᚼᛅᛚᛘᛅᛋ, ''Vilhjálmr''). By regular sound changes, the native, inherited English form of the name should b ...

Entropy (information Theory)

In information theory, the entropy of a random variable is the average level of "information", "surprise", or "uncertainty" inherent to the variable's possible outcomes. Given a discrete random variable $X$, which takes values in the alphabet $\mathcal{X}$ and is distributed according to $p: \mathcal{X} \to [0, 1]$:

$$\mathrm{H}(X) := -\sum_{x \in \mathcal{X}} p(x) \log p(x) = \mathbb{E}[-\log p(X)],$$

where $\Sigma$ denotes the sum over the variable's possible values. The choice of base for $\log$, the logarithm, varies for different applications. Base 2 gives the unit of bits (or "shannons"), while base ''e'' gives "natural units" (nats), and base 10 gives units of "dits", "bans", or "hartleys". An equivalent definition of entropy is the expected value of the self-information of a variable. The concept of information entropy was introduced by Claude Shannon in his 1948 paper "A Mathematical Theory of Communication", and is also referred to as Shannon entropy. Shannon's theory defi ...
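
The effect of the logarithm base can be seen in a small sketch. It assumes a finite distribution given as a list of probabilities and uses the usual convention that outcomes with zero probability contribute nothing; the function name `entropy` and the biased-coin distribution are hypothetical.

```python
import math

def entropy(probs, base: float = 2.0) -> float:
    """Shannon entropy of a discrete distribution, in units set by `base`."""
    probs = list(probs)
    if any(p < 0 for p in probs) or not math.isclose(sum(probs), 1.0):
        raise ValueError("probs must be non-negative and sum to 1")
    # Outcomes with p == 0 are skipped (0 * log(1/0) is taken to be 0).
    total = sum(p * math.log(1.0 / p) for p in probs if p > 0)
    return total / math.log(base)

# Hypothetical biased coin, reported in the three units named above.
biased_coin = [0.9, 0.1]
for name, base in (("bits", 2.0), ("nats", math.e), ("hartleys", 10.0)):
    print(f"H = {entropy(biased_coin, base):.4f} {name}")
```

Changing the base only rescales the result by a constant factor, so comparisons between distributions are unaffected by the choice of unit.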

Estimation Theory

Estimation theory is a branch of statistics that deals with estimating the values of parameters based on measured empirical data that has a random component. The parameters describe an underlying physical setting in such a way that their value affects the distribution of the measured data. An ''estimator'' attempts to approximate the unknown parameters using the measurements. In estimation theory, two approaches are generally considered:

* The probabilistic approach (described in this article) assumes that the measured data is random with probability distribution dependent on the parameters of interest
* The set-membership approach assumes that the measured data vector belongs to a set which depends on the parameter vector.

Examples: For example, it is desired to estimate the proportion of a population of voters who will vote for a particular candidate. That proportion is the parameter sought; the estimate is based on a small random sample of voters. Alternatively, it ...
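
The voter example can be sketched in code under the probabilistic approach. The sketch assumes each sampled voter is an independent draw answering 1 (will vote for the candidate) or 0, and uses the sample proportion as the estimator, which is one common choice rather than the only one. The function name `estimate_proportion` and the sample data are hypothetical.

```python
import math

def estimate_proportion(votes):
    """Estimate a population proportion from a 0/1 sample.

    Returns the sample proportion and its estimated standard error.
    """
    votes = list(votes)
    n = len(votes)
    if n == 0:
        raise ValueError("need at least one observation")
    if any(v not in (0, 1) for v in votes):
        raise ValueError("votes must be 0 or 1")
    p_hat = sum(votes) / n                        # sample proportion
    std_err = math.sqrt(p_hat * (1 - p_hat) / n)  # plug-in standard error
    return p_hat, std_err

# Hypothetical small random sample of ten voters.
sample = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
p_hat, std_err = estimate_proportion(sample)
print(f"estimated proportion: {p_hat:.2f} (standard error {std_err:.2f})")
```

The standard error shrinks in proportion to one over the square root of the sample size, which is why a small sample gives only a coarse estimate of the parameter.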

Data Transmission

Data transmission and data reception or, more broadly, data communication or digital communications is the transfer and reception of data in the form of a digital bitstream or a digitized analog signal transmitted over a point-to-point or point-to-multipoint communication channel. Examples of such channels are copper wires, optical fibers, wireless communication using radio spectrum, storage media and computer buses. The data are represented as an electromagnetic signal, such as an electrical voltage, radio wave, microwave, or infrared signal. Analog transmission is a method of conveying voice, data, image, signal or video information using a continuous signal which varies in amplitude, phase, or some other property in proportion to that of a variable. The messages are either represented by a sequence of pulses by means of a line code (''baseband transmission''), or by a limited set of continuously varying waveforms (''passband transmission''), using a digital modulat ...