
Shannon–Fano Coding

In the field of data compression, Shannon–Fano coding, named after Claude Shannon and Robert Fano, is a name given to two different but related techniques for constructing a prefix code based on a set of symbols and their probabilities (estimated or measured).

* Shannon's method chooses a prefix code where a source symbol i is given the codeword length l_i = \lceil -\log_2 p_i \rceil. One common way of choosing the codewords uses the binary expansion of the cumulative probabilities. This method was proposed in Shannon's "A Mathematical Theory of Communication" (1948), his article introducing the field of information theory.
* Fano's method divides the source symbols into two sets ("0" and "1") with probabilities as close to 1/2 as possible. Then those sets are themselves divided in two, and so on, until each set contains only one symbol. The codeword for that symbol is the string of "0"s and "1"s that records which half of each division it fell in. This method was proposed in a la ...
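
Both techniques can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names `shannon_lengths` and `fano_code` are hypothetical, the symbols are assumed to be sorted by decreasing probability before splitting, and ties between equally good split points are resolved in favor of the earliest one.

```python
from math import ceil, log2


def shannon_lengths(probs):
    """Shannon's method: codeword length l_i = ceil(-log2 p_i) per symbol."""
    return {symbol: ceil(-log2(p)) for symbol, p in probs.items()}


def fano_code(probs):
    """Fano's method: recursively split the symbols into two groups whose
    total probabilities are as close as possible, appending "0" or "1"."""
    # Assumption: symbols are processed in order of decreasing probability.
    symbols = sorted(probs, key=probs.get, reverse=True)
    codes = {symbol: "" for symbol in symbols}

    def split(group):
        if len(group) < 2:
            return
        total = sum(probs[s] for s in group)
        running, best_cut, best_diff = 0.0, 1, float("inf")
        # Try every cut point and keep the most balanced one.
        for i in range(1, len(group)):
            running += probs[group[i - 1]]
            diff = abs(total - 2 * running)
            if diff < best_diff:
                best_cut, best_diff = i, diff
        left, right = group[:best_cut], group[best_cut:]
        for s in left:
            codes[s] += "0"
        for s in right:
            codes[s] += "1"
        split(left)
        split(right)

    split(symbols)
    return codes


example = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}
lengths = shannon_lengths(example)
codes = fano_code(example)
```

For this dyadic example distribution the two methods agree: each Fano codeword has exactly the length that Shannon's formula assigns. For other distributions the lengths can differ.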

Data Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, which provides error detection and correction, or with line coding, the means for mapping data onto a signal. ...

Entropy (Information Theory)

In information theory, the entropy of a random variable is the average level of "information", "surprise", or "uncertainty" inherent to the variable's possible outcomes. Given a discrete random variable X, which takes values in the alphabet \mathcal{X} and is distributed according to p: \mathcal{X} \to [0, 1]: \mathrm{H}(X) := -\sum_{x \in \mathcal{X}} p(x) \log p(x) = \mathbb{E}[-\log p(X)], where \Sigma denotes the sum over the variable's possible values. The choice of base for \log, the logarithm, varies for different applications. Base 2 gives the unit of bits (or "shannons"), while base ''e'' gives "natural units" (nats), and base 10 gives units of "dits", "bans", or "hartleys". An equivalent definition of entropy is the expected value of the self-information of a variable. The concept of information entropy was introduced by Claude Shannon in his 1948 paper "A Mathematical Theory of Communication", and is also referred to as Shannon entropy. Shannon's theory defi ...
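
As a small numerical illustration of the definition, the sketch below computes the entropy of a finite distribution. The function name `entropy` and its `base` parameter are hypothetical, and zero-probability outcomes are skipped under the usual convention that 0 log 0 = 0.

```python
from math import log2


def entropy(probs, base=2.0):
    """Entropy -sum p(x) log p(x) of a discrete distribution.

    base=2 gives bits (shannons), base=e gives nats, base=10 gives hartleys.
    Outcomes with p(x) = 0 contribute nothing (convention: 0 log 0 = 0).
    """
    bits = -sum(p * log2(p) for p in probs if p > 0)
    return bits / log2(base)  # change of base from bits to the requested unit


fair_coin = entropy([0.5, 0.5])
skewed = entropy([0.5, 0.25, 0.125, 0.125])
```

Here `fair_coin` is 1 bit and `skewed` is 1.75 bits: the less uniform distribution over four outcomes carries less than the 2 bits a uniform one would.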

Bell System Technical Journal

The ''Bell Labs Technical Journal'' is the in-house scientific journal for scientists of Nokia Bell Labs, published yearly by the IEEE society. The managing editor is Charles Bahr. The journal was originally established as the ''Bell System Technical Journal'' (BSTJ) in New York by the American Telephone and Telegraph Company (AT&T) in 1922, published under this name until 1983, when the breakup of the Bell System placed various parts of the system into separate companies. The journal was devoted to the scientific fields and engineering disciplines practiced in the Bell System for improvements in the wide field of electrical communication. After the restructuring of Bell Labs in 1984, the journal was renamed to ''AT&T Bell Laboratories Technical Journal''. In 1985, it was published as the ''AT&T Technical Journal'' until 1996, when it was renamed to ''Bell Labs Technical Journal''. History The ''Bell System Technical Journal'' was published by AT&T in New York City through its I ...

Priority Queue

In computer science, a priority queue is an abstract data type similar to a regular queue or stack data structure in which each element additionally has a ''priority'' associated with it. In a priority queue, an element with high priority is served before an element with low priority. In some implementations, if two elements have the same priority, they are served according to the order in which they were enqueued; in other implementations, the ordering of elements with the same priority remains undefined. While coders often implement priority queues with heaps, they are conceptually distinct from heaps. A priority queue is a concept like a list or a map; just as a list can be implemented with a linked list or with an array, a priority queue can be implemented with a heap or with a variety of other methods such as an unordered array.

Operations

A priority queue must at least support the following operations:

* ''is_empty'': check whether the queue has no elements.
* ''insert_wi ...
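
The heap-backed variant mentioned above can be sketched with Python's standard `heapq` module. The class and the method names `push` and `pop` are hypothetical; only `is_empty` is taken from the operation list. This sketch assumes the first-in, first-out policy for equal priorities, enforced with an insertion counter.

```python
import heapq
from itertools import count


class PriorityQueue:
    """Max-priority queue on top of a binary min-heap."""

    def __init__(self):
        self._heap = []
        self._order = count()  # insertion counter used as a tie-breaker

    def is_empty(self):
        """Check whether the queue has no elements."""
        return not self._heap

    def push(self, item, priority):
        """Add an item; the priority is negated because heapq is a min-heap."""
        heapq.heappush(self._heap, (-priority, next(self._order), item))

    def pop(self):
        """Remove and return the item with the highest priority."""
        if not self._heap:
            raise IndexError("pop from an empty priority queue")
        return heapq.heappop(self._heap)[2]


queue = PriorityQueue()
queue.push("low", 1)
queue.push("high", 5)
queue.push("also high", 5)
served = [queue.pop(), queue.pop(), queue.pop()]
```

The counter also keeps the heap from ever comparing two items directly, so items do not need to be orderable themselves.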

Proceedings of the IRE

The ''Proceedings of the IEEE'' is a monthly peer-reviewed scientific journal published by the Institute of Electrical and Electronics Engineers (IEEE). The journal focuses on electrical engineering and computer science. According to the ''Journal Citation Reports'', the journal has a 2017 impact factor of 9.107, ranking it sixth in the category "Engineering, Electrical & Electronic." In 2018, it became fifth with an enhanced impact factor of 10.694. History of the Proceedings The journal was established in 1909, known as the ''Proceedings of the Wireless Institute''. Six issues were published under this banner by Greenleaf Pickard and Alfred Goldsmith. Then in 1911, a merger between the Wireless Institute (New York) and the Society of Wireless Telegraph Engineers (Boston) resulted in a society named the Institute of Radio Engineers (IRE). In January 1913, the newly formed IRE published the first issue of the ''Proceedings of the IRE''. Later, a 1000-page special issue commemorated ...

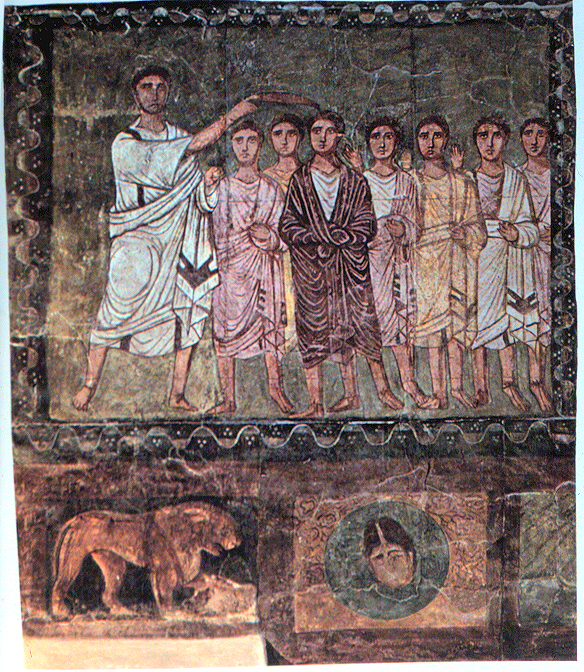

David A

David (Arabic: ''Dāwūd''; Koine Greek: Δαυΐδ, ''Dauíd''; Latin: ''Davidus'', ''David''; Ge'ez: ዳዊት, ''Dawit''; Classical Armenian: Դաւիթ, ''Dawitʿ''; Church Slavonic: Давíдъ, ''Davidŭ''; possibly meaning "beloved one") was, according to the Hebrew Bible, the third king of the United Kingdom of Israel. In the Books of Samuel, he is described as a young shepherd and harpist who gains fame by slaying Goliath, a champion of the Philistines, in southern Canaan. David becomes a favourite of Saul, the first king of Israel; he also forges a notably close friendship with Jonathan, a son of Saul. However, under the paranoia that David is seeking to usurp the throne, Saul attempts to kill David, forcing the latter to go into hiding and effectively operate as a fugitive for several years. After Saul and Jonathan are both killed in battle against the Philistines, a 30-year-old David is anointed king over all of Israel and Judah. Following his rise to power, David ...

Statistical Independence

Independence is a fundamental notion in probability theory, as in statistics and the theory of stochastic processes. Two events are independent, statistically independent, or stochastically independent if, informally speaking, the occurrence of one does not affect the probability of occurrence of the other or, equivalently, does not affect the odds. Similarly, two random variables are independent if the realization of one does not affect the probability distribution of the other. When dealing with collections of more than two events, two notions of independence need to be distinguished. The events are called pairwise independent if any two events in the collection are independent of each other, while mutual independence (or collective independence) of events means, informally speaking, that each event is independent of any combination of other events in the collection. A similar notion exists for collections of random variables. Mutual independence implies pairwise independence ...
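
The difference between the two notions shows up in a classic example with two fair coin tosses, checked here by exhaustive enumeration. The event names `A`, `B`, `C` and the helpers `prob` and `both` are illustrative choices, and exact fractions are used to avoid rounding.

```python
from fractions import Fraction
from itertools import product

# Sample space: two fair coin tosses, all four outcomes equally likely.
outcomes = list(product("HT", repeat=2))


def prob(event):
    """Probability of an event given as a predicate on outcomes."""
    return Fraction(sum(1 for o in outcomes if event(o)), len(outcomes))


def both(*events):
    """Intersection of several events."""
    return lambda o: all(e(o) for e in events)


A = lambda o: o[0] == "H"   # first toss is heads
B = lambda o: o[1] == "H"   # second toss is heads
C = lambda o: o[0] == o[1]  # both tosses show the same face

# Pairwise: P(X and Y) = P(X) P(Y) for every pair of events.
pairwise = all(
    prob(both(x, y)) == prob(x) * prob(y)
    for x, y in [(A, B), (A, C), (B, C)]
)
# Mutual independence additionally needs the product rule for all three.
mutual = pairwise and prob(both(A, B, C)) == prob(A) * prob(B) * prob(C)
```

Every pair satisfies the product rule, so `pairwise` is true, but the three events occur together with probability 1/4 rather than 1/8, so `mutual` is false. Pairwise independence therefore does not imply mutual independence.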

Probabilities

Probability is the branch of mathematics concerning numerical descriptions of how likely an event is to occur, or how likely it is that a proposition is true. The probability of an event is a number between 0 and 1, where, roughly speaking, 0 indicates impossibility of the event and 1 indicates certainty (Alan Stuart and Keith Ord, ''Kendall's Advanced Theory of Statistics, Volume 1: Distribution Theory'', 6th ed., 2009; William Feller, ''An Introduction to Probability Theory and Its Applications'', Vol. 1, 3rd ed., Wiley, 1968). The higher the probability of an event, the more likely it is that the event will occur. A simple example is the tossing of a fair (unbiased) coin. Since the coin is fair, the two outcomes ("heads" and "tails") are both equally probable; the probability of "heads" equals the probability of "tails"; and since no other outcomes are possible, the probability of either "heads" or "tails" is 1/2 (which could also be written as 0.5 or 50%). These conce ...

ZIP (File Format)

ZIP is an archive file format that supports lossless data compression. A ZIP file may contain one or more files or directories that may have been compressed. The ZIP file format permits a number of compression algorithms, though DEFLATE is the most common. This format was originally created in 1989 and was first implemented in PKWARE, Inc.'s PKZIP utility, as a replacement for the previous ARC compression format by Thom Henderson. The ZIP format was then quickly supported by many software utilities other than PKZIP. Microsoft has included built-in ZIP support (under the name "compressed folders") in versions of Microsoft Windows since 1998 via the "Windows Plus!" addon for Windows 98. Native support was added as of the year 2000 in Windows ME. Apple has included built-in ZIP support in Mac OS X 10.3 (via BOMArchiveHelper, now Archive Utility) and later. Most free operating systems have built-in support for ZIP in a manner similar to Windows and Mac OS X. ZIP files ge ...
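
A brief sketch of writing and reading a DEFLATE-compressed ZIP archive with Python's standard `zipfile` module. The archive is kept in memory, and the member name `example.txt` and the repetitive payload are made up for illustration.

```python
import io
import zipfile

# Highly repetitive data, chosen so that DEFLATE has redundancy to remove.
payload = b"abracadabra " * 200

# Write a one-member archive into an in-memory buffer.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
    archive.writestr("example.txt", payload)

# Reopen the archive and inspect the stored member.
with zipfile.ZipFile(buffer) as archive:
    info = archive.getinfo("example.txt")
    restored = archive.read("example.txt")
```

Because the compression is lossless, `restored` equals `payload` byte for byte, while `info.compress_size` is smaller than `info.file_size` for input this redundant.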

Shannon's Source Coding Theorem

In information theory, Shannon's source coding theorem (or noiseless coding theorem) establishes the limits to possible data compression, and the operational meaning of the Shannon entropy. Named after Claude Shannon, the source coding theorem shows that (in the limit, as the length of a stream of independent and identically distributed (i.i.d.) random variable data tends to infinity) it is impossible to compress the data such that the code rate (average number of bits per symbol) is less than the Shannon entropy of the source, without it being virtually certain that information will be lost. However, it is possible to get the code rate arbitrarily close to the Shannon entropy, with negligible probability of loss. The source coding theorem for symbol codes places an upper and a lower bound on the minimal possible expected length of codewords as a function of the entropy of the input word (which is viewed as a random variable) and of the size of the target alphabet. Statements ...
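
For a binary target alphabet, the symbol-code bounds can be checked numerically: codeword lengths of \lceil -\log_2 p_i \rceil satisfy the Kraft inequality and give an expected length L with H(X) \le L < H(X) + 1. The sketch below verifies this for one example distribution; the function names and the probabilities are illustrative assumptions.

```python
from math import ceil, log2


def entropy_bits(probs):
    """Shannon entropy in bits per symbol."""
    return -sum(p * log2(p) for p in probs if p > 0)


def shannon_code_stats(probs):
    """Lengths ceil(-log2 p), their expected value, and the Kraft sum."""
    lengths = [ceil(-log2(p)) for p in probs]
    expected_length = sum(p * n for p, n in zip(probs, lengths))
    # A prefix code with these lengths exists if and only if this sum is <= 1.
    kraft_sum = sum(2.0 ** -n for n in lengths)
    return lengths, expected_length, kraft_sum


probs = [0.4, 0.3, 0.2, 0.1]
H = entropy_bits(probs)
lengths, L, kraft = shannon_code_stats(probs)
within_bounds = H <= L < H + 1
```

For these probabilities the entropy is roughly 1.85 bits and the expected codeword length is 2.4 bits, so the code stays within one bit of the entropy per symbol, as the bound requires.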