
Semantic Folding

Semantic folding theory describes a procedure for encoding the semantics of natural language text in a semantically grounded binary representation. This approach provides a framework for modelling how language data is processed by the neocortex.

Theory: Semantic folding theory draws inspiration from Douglas R. Hofstadter's ''Analogy as the Core of Cognition'', which suggests that the brain makes sense of the world by identifying and applying analogies. The theory hypothesises that semantic data must therefore be introduced to the neocortex in such a form as to allow the application of a similarity measure and offers, as a solution, the sparse binary vector employing a two-dimensional topographic semantic space as a distributional reference frame. The theory builds on the computational theory of the human cortex known as hierarchical temporal memory (HTM), and positions itself as a complementary theory for the representation of language semantics. A particular strength claimed by thi ...

Semantics

Semantics (from Ancient Greek σημαντικός ''sēmantikós'', "significant") is the study of reference, meaning, or truth. The term can be used to refer to subfields of several distinct disciplines, including philosophy, linguistics and computer science.

History: In English, the study of meaning in language has been known by many names that involve the Ancient Greek word σῆμα (''sema'', "sign, mark, token"). In 1690, a Greek rendering of the term ''semiotics'', the interpretation of signs and symbols, finds an early allusion in John Locke's ''An Essay Concerning Human Understanding'': The third Branch may be called [''simeiotikí'', "semiotics"], or the Doctrine of Signs, the most usual whereof being words, it is aptly enough ter ...

Boolean Algebra

In mathematics and mathematical logic, Boolean algebra is a branch of algebra. It differs from elementary algebra in two ways. First, the values of the variables are the truth values ''true'' and ''false'', usually denoted 1 and 0, whereas in elementary algebra the values of the variables are numbers. Second, Boolean algebra uses logical operators such as conjunction (''and'') denoted as ∧, disjunction (''or'') denoted as ∨, and negation (''not'') denoted as ¬. Elementary algebra, on the other hand, uses arithmetic operators such as addition, multiplication, subtraction and division. Boolean algebra is thus a formal way of describing logical operations, in the same way that elementary algebra describes numerical operations. Boolean algebra was introduced by George Boole in his first book ''The Mathematical Analysis of Logic'' (1847), and set forth more fully in his ''An Investigation of the Laws of Thought'' (1854). According to Huntington, the term "Boolean algebra" wa ...

Keyword Research

Keyword research is a practice that search engine optimization (SEO) professionals use to find and research the search terms that users enter into search engines when looking for products, services or general information. Keywords are related to the queries that users ask in search engines. There are four types of queries:

1. Navigational Search Queries
2. Informational Search Queries
3. Transactional Search Queries
4. Commercial Search Queries

Search engine optimization professionals first research keywords, and then align web pages with these keywords to achieve better rankings in search engines. Once they find a niche keyword, they expand on it to find similar keywords. Keyword suggestion tools usually aid the process, like the Google Ads Keyword Planner, which offers a thesaurus and alternative keyword suggestions. Google's first-party data also aids this research through the likes of Google autocomplete, related searches or People Also Ask.

Importance of research ...

Rule-based System

In computer science, a rule-based system is used to store and manipulate knowledge to interpret information in a useful way. It is often used in artificial intelligence applications and research. Normally, the term ''rule-based system'' is applied to systems involving human-crafted or curated rule sets. Rule-based systems constructed using automatic rule inference, such as rule-based machine learning, are normally excluded from this system type.

Applications: A classic example of a rule-based system is the domain-specific expert system that uses rules to make deductions or choices. For example, an expert system might help a doctor choose the correct diagnosis based on a cluster of symptoms, or select tactical moves to play a game. Rule-based systems can be used to perform lexical analysis to compile or interpret computer programs, or in natural language processing. Rule-based programming attempts to derive execution instructions from a starting set of data and rules. This is ...

Natural Language Processing

Natural language processing (NLP) is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves. Challenges in natural language processing frequently involve speech recognition, natural-language understanding, and natural-language generation.

History: Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, t ...

Ambiguity

Ambiguity is the type of meaning in which a phrase, statement or resolution is not explicitly defined, making several interpretations plausible. A common aspect of ambiguity is uncertainty. It is thus an attribute of any idea or statement whose intended meaning cannot be definitively resolved according to a rule or process with a finite number of steps. (The ''ambi-'' part of the term reflects an idea of "two", as in "two meanings".) The concept of ambiguity is generally contrasted with vagueness. In ambiguity, specific and distinct interpretations are permitted (although some may not be immediately obvious), whereas with information that is vague, it is difficult to form any interpretation at the desired level of specificity.

Linguistic forms: Lexical ambiguity is contrasted with semantic ambiguity. The former represents a choice between a finite number of known and meaningful context-dependent interpretations. The latter represents a choice between any number of possi ...

Vocabulary Mismatch

Vocabulary mismatch is a common phenomenon in the usage of natural languages, occurring when different people name the same thing or concept differently. Furnas et al. (1987) were perhaps the first to quantitatively study the vocabulary mismatch problem. Their results show that on average 80% of the time different people (experts in the same field) will name the same thing differently. There are usually tens of possible names that can be attributed to the same thing. This research motivated the work on latent semantic indexing. The vocabulary mismatch between user-created queries and relevant documents in a corpus causes the term mismatch problem in information retrieval. Zhao and Callan (2010) [Zhao, L. and Callan, J., "Term Necessity Prediction", Proceedings of the 19th ACM Conference on Information and Knowledge Management (CIKM 2010), Toronto, Canada, 2010] were perhaps the first to quantitatively study the vocabulary mismatch problem in a retrieval setting. Their results sho ...

Sørensen–Dice Coefficient

The Sørensen–Dice coefficient (see below for other names) is a statistic used to gauge the similarity of two samples. It was independently developed by the botanists Thorvald Sørensen and Lee Raymond Dice, who published in 1948 and 1945 respectively.

Name: The index is known by several other names, especially Sørensen–Dice index, Sørensen index and Dice's coefficient. Other variations include the "similarity coefficient" or "index", such as Dice similarity coefficient (DSC). Common alternate spellings for Sørensen are ''Sorenson'', ''Soerenson'' and ''Sörenson'', and all three can also be seen with the ''–sen'' ending. Other names include:

* F1 score
* Czekanowski's binary (non-quantitative) index
* Measure of genetic similarity
* Zijdenbos similarity index, referring to a 1994 paper of Zijdenbos et al.

Formula: Sørensen's original formula was intended to be applied to discrete data. Given two sets, X and Y, it is defined as

: DSC = \frac{2 |X \cap Y|}{|X| + |Y|}

where |''X''| and |''Y''| ...
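The set form of the coefficient (twice the size of the intersection, divided by the sum of the two set sizes) can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation; the function name `dice_coefficient`, the helper `bigrams`, and the convention of returning 1.0 for two empty sets are assumptions made here, not part of the excerpt.

```python
def dice_coefficient(x: set, y: set) -> float:
    """Sørensen–Dice coefficient 2|X ∩ Y| / (|X| + |Y|) of two finite sets."""
    total = len(x) + len(y)
    if total == 0:
        # Assumption: two empty sets are treated as identical.
        return 1.0
    return 2 * len(x & y) / total


def bigrams(word: str) -> set:
    """Hypothetical helper: the set of adjacent character pairs in a word."""
    return {word[i:i + 2] for i in range(len(word) - 1)}


if __name__ == "__main__":
    # "night" and "nacht" share only the bigram "ht" out of 4 + 4 bigrams.
    print(dice_coefficient(bigrams("night"), bigrams("nacht")))
```

Comparing character bigrams, as in the usage lines, is one common way to apply the coefficient to strings; any other way of turning the inputs into sets works equally well.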

Levenshtein Distance

In information theory, linguistics, and computer science, the Levenshtein distance is a string metric for measuring the difference between two sequences. Informally, the Levenshtein distance between two words is the minimum number of single-character edits (insertions, deletions or substitutions) required to change one word into the other. It is named after the Soviet mathematician Vladimir Levenshtein, who considered this distance in 1965. Levenshtein distance may also be referred to as ''edit distance'', although that term may also denote a larger family of distance metrics known collectively as edit distance. It is closely related to pairwise string alignments.

Definition: The Levenshtein distance between two strings a, b (of length |a| and |b| respectively) is given by \operatorname{lev}(a, b) where

: \operatorname{lev}(a, b) = \begin{cases} |a| & \text{if } |b| = 0, \\ |b| & \text{if } |a| = 0, \\ \operatorname{lev}\big(\operatorname{tail}(a), \operatorname{tail}(b)\big) & \text{if } a[0] = b[0], \\ 1 + \min \dots \end{cases} ...
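The informal definition (the minimum number of single-character insertions, deletions or substitutions) can be computed with the standard dynamic-programming table. The sketch below is one common formulation, not taken from the truncated definition in the excerpt; the function name `levenshtein` and the two-row memory layout are choices made here for illustration.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character edits turning a into b."""
    # Keep the shorter string in the inner loop so the rows stay small.
    if len(a) < len(b):
        a, b = b, a
    # previous[j] holds the distance between the processed prefix of a and b[:j].
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]  # distance from a[:i] to the empty prefix of b
        for j, char_b in enumerate(b, start=1):
            substitution_cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,                      # delete char_a
                current[j - 1] + 1,                   # insert char_b
                previous[j - 1] + substitution_cost,  # substitute or match
            ))
        previous = current
    return previous[-1]


if __name__ == "__main__":
    print(levenshtein("kitten", "sitting"))
```

Only two rows of the table are kept at any time, so memory use grows with the length of the shorter string while running time remains proportional to the product of the two lengths.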

Cosine Similarity

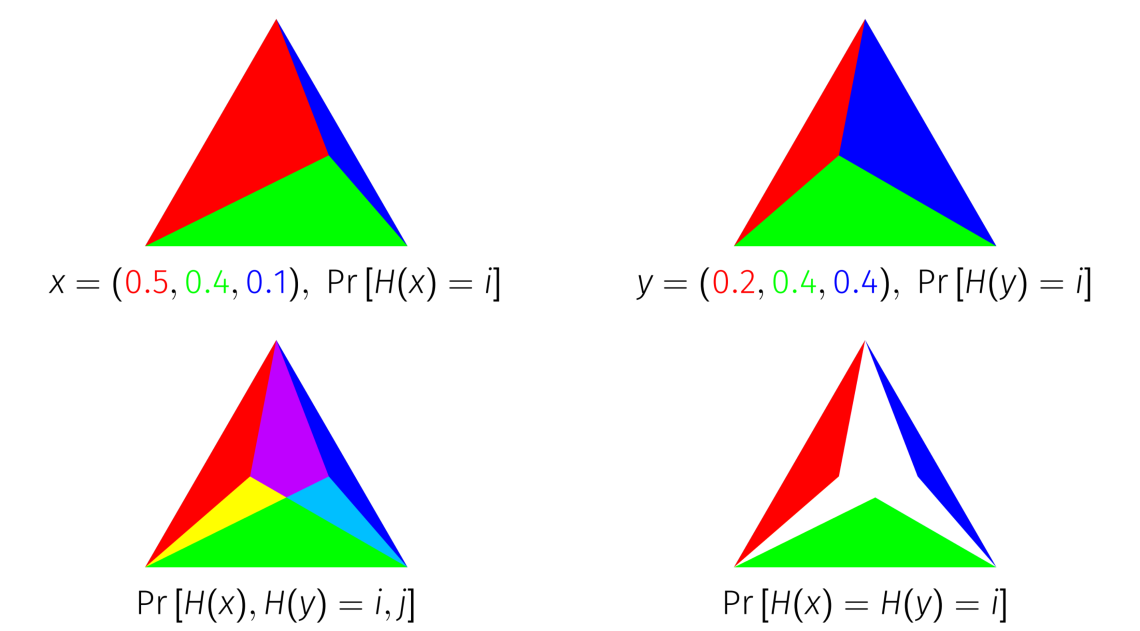

In data analysis, cosine similarity is a measure of similarity between two sequences of numbers. To define it, the sequences are viewed as vectors in an inner product space, and the cosine similarity is defined as the cosine of the angle between them, that is, the dot product of the vectors divided by the product of their lengths. It follows that the cosine similarity does not depend on the magnitudes of the vectors, but only on their angle. The cosine similarity always belongs to the interval [-1, 1]. For example, two proportional vectors have a cosine similarity of 1, two orthogonal vectors have a similarity of 0, and two opposite vectors have a similarity of -1. The cosine similarity is particularly used in positive space, where the outcome is neatly bounded in [0, 1]. For example, in information retrieval and text mining, each word is assigned a different coordinate and a document is represented by the vector of the numbers of occurrences of each word in the document. Cosi ...
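As a minimal sketch of the definition (dot product divided by the product of the vector lengths), the following Python function works on plain sequences of numbers. The name `cosine_similarity` and the decision to raise an error for zero vectors, for which the angle is undefined, are assumptions made for this illustration.

```python
import math
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length numeric vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same number of components")
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    if norm_u == 0 or norm_v == 0:
        # Assumption: treat the zero vector as an error rather than pick a value.
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


if __name__ == "__main__":
    print(cosine_similarity([1, 2, 3], [2, 4, 6]))  # proportional vectors
    print(cosine_similarity([1, 0], [0, 1]))        # orthogonal vectors
    print(cosine_similarity([1, 2], [-1, -2]))      # opposite vectors
```

The three usage lines correspond to the proportional, orthogonal and opposite cases described in the text; with floating-point arithmetic the results may differ from 1 and -1 by a rounding error.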

Jaccard Index

The Jaccard index, also known as the Jaccard similarity coefficient, is a statistic used for gauging the similarity and diversity of sample sets. It was developed by Grove Karl Gilbert in 1884 as his ratio of verification (v) and now is frequently referred to as the Critical Success Index in meteorology. It was later developed independently by Paul Jaccard, originally giving the French name ''coefficient de communauté'', and independently formulated again by T. Tanimoto. Thus, the names Tanimoto index or Tanimoto coefficient are also used in some fields. However, they are identical in generally taking the ratio of Intersection over Union. The Jaccard coefficient measures similarity between finite sample sets, and is defined as the size of the intersection divided by the size of the union of the sample sets:

: J(A,B) = \frac{|A \cap B|}{|A \cup B|} = \frac{|A \cap B|}{|A| + |B| - |A \cap B|}.

Note that by design, 0\le J(A,B)\le 1. If ''A'' intersection ''B'' is empty, then ''J''(''A'',''B'') = 0. The Jaccard coefficient is widely used in c ...
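The intersection-over-union definition maps directly onto Python's built-in set operations. The sketch below is illustrative only; the function name `jaccard_index` and the convention of returning 1.0 when both sets are empty are assumptions, since the excerpt does not cover that case.

```python
def jaccard_index(a: set, b: set) -> float:
    """Size of the intersection divided by the size of the union."""
    union = a | b
    if not union:
        # Assumption: two empty sets are treated as identical.
        return 1.0
    return len(a & b) / len(union)


if __name__ == "__main__":
    doc_a = {"semantic", "folding", "theory"}
    doc_b = {"semantic", "similarity", "theory"}
    # Two shared words out of four distinct words overall.
    print(jaccard_index(doc_a, doc_b))
```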

Hamming Distance

In information theory, the Hamming distance between two strings of equal length is the number of positions at which the corresponding symbols are different. In other words, it measures the minimum number of ''substitutions'' required to change one string into the other, or the minimum number of ''errors'' that could have transformed one string into the other. In a more general context, the Hamming distance is one of several string metrics for measuring the edit distance between two sequences. It is named after the American mathematician Richard Hamming. A major application is in coding theory, more specifically to block codes, in which the equal-length strings are vectors over a finite field.

Definition: The Hamming distance between two equal-length strings of symbols is the number of positions at which the corresponding symbols are different.

Examples: The symbols may be letters, bits, or decimal digits, among other possibilities. For example, the Hamming distance between: ...
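Counting the positions at which two equal-length sequences differ takes a single pass. The following Python sketch is a minimal illustration; the function name `hamming_distance` and the sample inputs are chosen here and are not taken from the truncated example list in the excerpt.

```python
from typing import Sequence


def hamming_distance(s: Sequence, t: Sequence) -> int:
    """Number of positions at which two equal-length sequences differ."""
    if len(s) != len(t):
        raise ValueError("Hamming distance requires sequences of equal length")
    return sum(1 for a, b in zip(s, t) if a != b)


if __name__ == "__main__":
    print(hamming_distance("karolin", "kathrin"))  # letters
    print(hamming_distance("1011101", "1001001"))  # bits
```

Because the function only compares elements pairwise, it accepts strings, lists of bits, or tuples of digits alike.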