Semantic Decomposition (Natural Language Processing)

A semantic decomposition is an algorithm that breaks down the meanings of phrases or concepts into less complex concepts. The result of a semantic decomposition is a representation of meaning. This representation can be used for tasks such as those related to artificial intelligence or machine learning. Semantic decomposition is common in natural language processing applications. The basic idea of a semantic decomposition is taken from the learning skills of adult humans, where words are explained using other words. It is based on Meaning-text theory, which is used as a theoretical linguistic framework to describe the meaning of concepts with other concepts.

Background: Given that an AI does not inherently have language, it is unable to think about the meanings behind the words of a language. An artificial notion of meaning needs to be created for a strong AI to emerge. AI today is able to capture the syntax of language for many specific problems, but never establ ...
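The idea of explaining words using other words can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the toy `DEFINITIONS` dictionary, the `PRIMITIVES` set, and the `decompose` and `leaves` helpers are hypothetical and are not taken from any particular semantic decomposition system.

```python
# Toy dictionary: each word is "explained using other words".
# All entries and the set of primitives are hypothetical examples.
DEFINITIONS = {
    "bachelor": ["unmarried", "man"],
    "unmarried": ["not", "married"],
    "man": ["adult", "male", "person"],
}
PRIMITIVES = {"not", "married", "adult", "male", "person"}


def decompose(word, depth=3):
    """Return a nested (word, parts) tree of less complex concepts."""
    # Stop at primitives, unknown words, or when the depth budget is spent.
    if word in PRIMITIVES or word not in DEFINITIONS or depth == 0:
        return (word, [])
    return (word, [decompose(part, depth - 1) for part in DEFINITIONS[word]])


def leaves(tree):
    """Flatten a decomposition tree into its leaf concepts, left to right."""
    word, parts = tree
    if not parts:
        return [word]
    return [leaf for part in parts for leaf in leaves(part)]


if __name__ == "__main__":
    print(leaves(decompose("bachelor")))
```

The `depth` parameter is a design choice of this sketch: real dictionaries contain circular definitions, so some bound on the decomposition is needed to guarantee termination.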

Artificial Intelligence

Artificial intelligence (AI) is intelligence (perceiving, synthesizing, and inferring information) demonstrated by machines, as opposed to intelligence displayed by animals and humans. Example tasks in which this is done include speech recognition, computer vision, translation between (natural) languages, as well as other mappings of inputs. The Oxford English Dictionary of Oxford University Press defines artificial intelligence as: the theory and development of computer systems able to perform tasks that normally require human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages. AI applications include advanced web search engines (e.g., Google), recommendation systems (used by YouTube, Amazon and Netflix), understanding human speech (such as Siri and Alexa), self-driving cars (e.g., Tesla), automated decision-making and competing at the highest level in strategic game systems (such as chess and Go). ...

Recursion

Recursion (adjective: recursive) occurs when a thing is defined in terms of itself or of its type. Recursion is used in a variety of disciplines ranging from linguistics to logic. The most common application of recursion is in mathematics and computer science, where a function being defined is applied within its own definition. While this apparently defines an infinite number of instances (function values), it is often done in such a way that no infinite loop or infinite chain of references ("crock recursion") can occur.

Formal definitions: In mathematics and computer science, a class of objects or methods exhibits recursive behavior when it can be defined by two properties:

* A simple base case (or cases): a terminating scenario that does not use recursion to produce an answer
* A recursive step: a set of rules that reduces all successive cases toward the base case.

For example, the following is a recursive definition of a person's ancestor. One's ances ...
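The two properties of a recursive definition can be seen in a short sketch. The factorial function is chosen here only as a familiar illustration and does not come from the text above.

```python
def factorial(n):
    """Compute n! for a non-negative integer n using recursion."""
    if n < 0:
        raise ValueError("n must be non-negative")
    # Base case: a terminating scenario that does not use recursion.
    if n == 0:
        return 1
    # Recursive step: reduce the problem toward the base case.
    return n * factorial(n - 1)


if __name__ == "__main__":
    print(factorial(5))
```

Because every call decreases `n` by one and the base case is `n == 0`, the chain of calls is finite for any non-negative integer input.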

Principle Of Compositionality

In semantics, mathematical logic and related disciplines, the principle of compositionality is the principle that the meaning of a complex expression is determined by the meanings of its constituent expressions and the rules used to combine them. This principle is also called Frege's principle, because Gottlob Frege is widely credited for the first modern formulation of it. The principle was never explicitly stated by Frege, and it was arguably already assumed by George Boole decades before Frege's work. The principle of compositionality is highly debated in linguistics, and its most challenging problems include contextuality, the non-compositionality of idiomatic expressions, and the non-compositionality of quotations.

History: Discussion of compositionality started to appear at the beginning of the 19th century, during which it was debated whether what was most fundamental in language was compositionality or contextuality, and compositionality was usuall ...
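A toy evaluator can make the principle concrete: the meaning of a complex expression is computed only from the meanings of its parts and the rule that combines them. The `LEXICON`, `RULES`, and `meaning` names below are hypothetical, and arithmetic is used as a stand-in for natural-language meaning purely for illustration.

```python
# Meanings of atomic expressions (a hypothetical toy lexicon).
LEXICON = {"two": 2, "three": 3, "four": 4}

# Rules of combination: each maps the constituents' meanings to a new meaning.
RULES = {
    "plus": lambda a, b: a + b,
    "times": lambda a, b: a * b,
}


def meaning(expr):
    """Return the meaning of an atomic word or a (rule, left, right) tuple."""
    if isinstance(expr, str):
        # Atomic expression: look its meaning up directly.
        return LEXICON[expr]
    rule, left, right = expr
    # Complex expression: combine the meanings of the constituents.
    return RULES[rule](meaning(left), meaning(right))


if __name__ == "__main__":
    print(meaning(("plus", "two", ("times", "three", "four"))))
```

Idioms and quotations, mentioned above as problem cases, are exactly the expressions that such a purely bottom-up evaluator cannot handle without extra machinery.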

Lexical Semantics

Lexical semantics (also known as lexicosemantics), as a subfield of linguistic semantics, is the study of word meanings (Pustejovsky, J. (2005), 'Lexical Semantics: Overview', in Encyclopedia of Language and Linguistics, second edition, Volumes 1-14; Taylor, J. (2017), 'Lexical Semantics', in B. Dancygier (Ed.), The Cambridge Handbook of Cognitive Linguistics (Cambridge Handbooks in Language and Linguistics, pp. 246-261), Cambridge: Cambridge University Press). It includes the study of how words structure their meaning, how they act in grammar and compositionality, and the relationships between the distinct senses and uses of a word. The units of analysis in lexical semantics are lexical units which include not only words but also sub-words or sub-units such as affixes and even compound words and phrases. Lexical units include the catalogue of words in a language, the lexicon. Lexical semantics looks at how the meaning of the lexical units correlates with the structure of the language or s ...

Latent Semantic Analysis

Latent semantic analysis (LSA) is a technique in natural language processing, in particular distributional semantics, of analyzing relationships between a set of documents and the terms they contain by producing a set of concepts related to the documents and terms. LSA assumes that words that are close in meaning will occur in similar pieces of text (the distributional hypothesis). A matrix containing word counts per document (rows represent unique words and columns represent each document) is constructed from a large piece of text and a mathematical technique called singular value decomposition (SVD) is used to reduce the number of rows while preserving the similarity structure among columns. Documents are then compared by cosine similarity between any two columns. Values close to 1 represent very similar documents while values close to 0 represent very dissimilar documents. An information retrieval technique using latent semantic structure was patented in 1988. US Patent 4,83 ...
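The matrix construction and the cosine comparison described above can be sketched in a few lines. This is a simplified illustration, not full LSA: the SVD reduction step is deliberately omitted, so the columns compared here are raw word-count vectors. The helper names and the sample documents are assumptions of this sketch.

```python
import math
from collections import Counter


def term_document_matrix(docs):
    """Build a count matrix: rows are unique words, columns are documents."""
    counts = [Counter(doc.lower().split()) for doc in docs]
    vocab = sorted(set().union(*counts))
    matrix = [[c[term] for c in counts] for term in vocab]
    return vocab, matrix


def column(matrix, j):
    """Extract document j as a vector of word counts."""
    return [row[j] for row in matrix]


def cosine(u, v):
    """Cosine similarity of two vectors; 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


if __name__ == "__main__":
    docs = ["cat sat mat", "cat sat hat", "stocks fell sharply"]
    vocab, matrix = term_document_matrix(docs)
    print(cosine(column(matrix, 0), column(matrix, 1)))  # shared words
    print(cosine(column(matrix, 0), column(matrix, 2)))  # no shared words
```

In a fuller treatment, an SVD of the count matrix would be truncated to a small number of dimensions before the cosine step, so that documents sharing related but not identical words could still score as similar.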

Natural Language Understanding

Natural-language understanding (NLU) or natural-language interpretation (NLI) is a subtopic of natural-language processing in artificial intelligence that deals with machine reading comprehension. Natural-language understanding is considered an AI-hard problem. There is considerable commercial interest in the field because of its application to automated reasoning, machine translation, question answering, news-gathering, text categorization, voice-activation, archiving, and large-scale content analysis.

History: The program STUDENT, written in 1964 by Daniel Bobrow for his PhD dissertation at MIT, is one of the earliest known attempts at natural-language understanding by a computer. Eight years after John McCarthy coined the term artificial intelligence, Bobrow's dissertation (titled Natural Language Input for a Computer Problem Solving System) showed how a computer could understand simple natural language input to solve algebra word problems. A year later, in 1965, J ...

Chatbot

A chatbot or chatterbot is a software application used to conduct an on-line chat conversation via text or text-to-speech, in lieu of providing direct contact with a live human agent. Designed to convincingly simulate the way a human would behave as a conversational partner, chatbot systems typically require continuous tuning and testing, and many in production remain unable to adequately converse, while none of them can pass the standard Turing test. The term "ChatterBot" was originally coined by Michael Mauldin (creator of the first Verbot) in 1994 to describe these conversational programs. Chatbots are used in dialog systems for various purposes including customer service, request routing, or information gathering. While some chatbot applications use extensive word-classification processes, natural-language processors, and sophisticated AI, others simply scan for gene ...

Natural Semantic Metalanguage

The natural semantic metalanguage (NSM) is a linguistic theory that reduces lexicons down to a set of semantic primitives. It is based on the conception of Polish professor Andrzej Bogusławski. The theory was formally developed by Anna Wierzbicka at Warsaw University and later at the Australian National University in the early 1970s, and Cliff Goddard at Australia's Griffith University.

Approach: The Natural Semantic Metalanguage (NSM) theory attempts to reduce the semantics of all lexicons down to a restricted set of semantic primitives, or primes. Primes are universal in that they have the same translation in every language, and they are primitive in that they cannot be defined using other words. Primes are ordered together to form explications, which are descriptions of semantic representations consisting solely of primes. Research in the NSM approach deals extensively with language and cognition, and language and culture. Key areas of research include lexical semantics, gra ...

Semantic Primes

Semantic primes or semantic primitives are a set of semantic concepts that are argued to be innately understood by all people but impossible to express in simpler terms. They represent words or phrases that are learned through practice but cannot be defined concretely. For example, although the meaning of "touching" is readily understood, a dictionary might define "touch" as "to make contact" and "contact" as "touching", providing no information if neither of these words is understood. The concept of universal semantic primes was largely introduced by Anna Wierzbicka's book, Semantics: Primes and Universals.

List of semantic primes: Table adapted from Levisen and Waters 2017 and Goddard and Wierzbicka 2014.

A universal syntax of meaning: Semantic primes represent universally meaningful concepts, but to have meaningful messages, or statements, such concepts must combine in a way that they themselves convey meaning. Such meaningful combinations, in their simple ...

Wiktionary

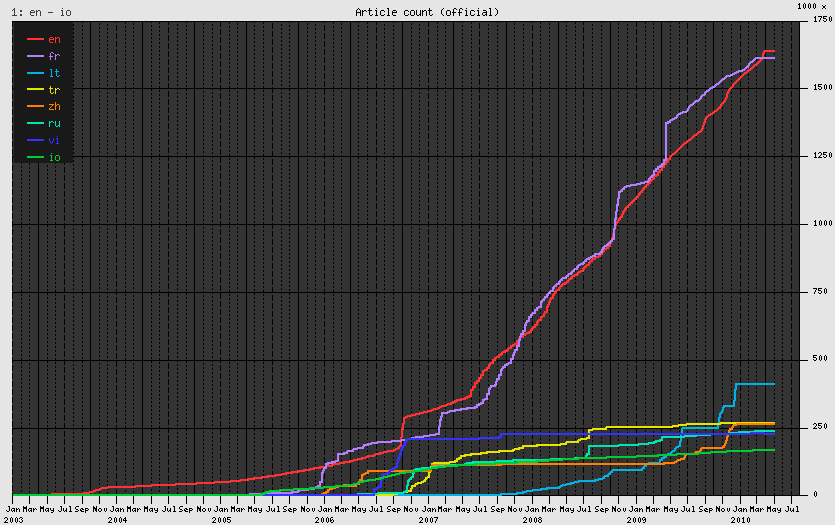

Wiktionary (rhyming with "dictionary") is a multilingual, web-based project to create a free content dictionary of terms (including words, phrases, proverbs, linguistic reconstructions, etc.) in all natural languages and in a number of artificial languages. These entries may contain definitions, images for illustration, pronunciations, etymologies, inflections, usage examples, quotations, related terms, and translations of terms into other languages, among other features. It is collaboratively edited via a wiki. Its name is a portmanteau of the words wiki and dictionary. It is available in multiple languages and in Simple English. Like its sister project Wikipedia, Wiktionary is run by the Wikimedia Foundation, and is written collaboratively by volunteers, dubbed "Wiktionarians". Its wiki software, MediaWiki, allows almost anyone with access to the website to create and edit entries. Because Wiktionary is not limited by print space considerations, most of Wiktio ...

Machine Learning

Machine learning (ML) is a field of inquiry devoted to understanding and building methods that 'learn', that is, methods that leverage data to improve performance on some set of tasks. It is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. Machine learning algorithms are used in a wide variety of applications, such as in medicine, email filtering, speech recognition, agriculture, and computer vision, where it is difficult or unfeasible to develop conventional algorithms to perform the needed tasks (Hu, J.; Niu, H.; Carrasco, J.; Lennox, B.; Arvin, F., "Voronoi-Based Multi-Robot Autonomous Exploration in Unknown Environments via Deep Reinforcement Learning", IEEE Transactions on Vehicular Technology, 2020). A subset of machine learning is closely related to computational statistics, which focuses on making predicti ...

WordNet

WordNet is a lexical database of semantic relations between words in more than 200 languages. WordNet links words into semantic relations including synonyms, hyponyms, and meronyms. The synonyms are grouped into synsets with short definitions and usage examples. WordNet can thus be seen as a combination and extension of a dictionary and thesaurus. While it is accessible to human users via a web browser, its primary use is in automatic text analysis and artificial intelligence applications. WordNet was first created in the English language, and the English WordNet database and software tools have been released under a BSD-style license and are freely available for download from the WordNet website.

History and team members: WordNet was first created in English only in the Cognitive Science Laboratory of Princeton University under the direction of psychology professor George Armitage Miller starting in 1985 and was later directed by Christiane Fellbaum. The project was ini ...