
Self-tuning

In control theory, a self-tuning system is capable of optimizing its own internal running parameters in order to maximize or minimize an objective function, typically maximizing efficiency or minimizing error. Self-tuning and auto-tuning often refer to the same concept; many software research groups consider auto-tuning the proper nomenclature. Self-tuning systems typically exhibit non-linear adaptive control. They have been a hallmark of the aerospace industry for decades, as this sort of feedback is necessary to generate optimal multi-variable control for non-linear processes. In the telecommunications industry, adaptive communications are often used to dynamically modify operational system parameters to maximize efficiency and robustness.

Examples

Examples of self-tuning systems in computing include:

* TCP (Transmission Control Protocol)
* Microsoft SQL Server (newer implementations only)
* FFTW (Fastest Fourier Transform in the ...
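The notion of a system adjusting one of its own running parameters against an objective function can be illustrated with a minimal sketch. The names `self_tune` and `error`, the target value 3.2, and the choice of a simple hill-climbing search are all assumptions made for illustration; real self-tuning systems use a wide range of techniques.

```python
def self_tune(objective, param, step=1.0, min_step=1e-6, max_iters=10_000):
    """Adjust `param` to minimize `objective` by trial steps (hill climbing)."""
    best = objective(param)
    for _ in range(max_iters):
        if step < min_step:
            break
        improved = False
        # Probe one step up and one step down from the current setting.
        for candidate in (param + step, param - step):
            cost = objective(candidate)
            if cost < best:
                param, best = candidate, cost
                improved = True
                break
        if not improved:
            step /= 2.0  # no improvement at this scale: refine the search
    return param, best


def error(gain):
    # Hypothetical objective: squared distance from an (unknown to the tuner) ideal gain.
    return (gain - 3.2) ** 2


tuned_gain, final_error = self_tune(error, param=0.0)
print(round(tuned_gain, 3))
```

The tuner only observes the value of the objective, never its formula, which is the sense in which the adjustment is driven by feedback.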

MILEPOST GCC

* Developer: cTuning foundation / MILEPOST consortium
* Released: 2009
* Latest release version: 4.4.x
* Latest release date: 2010-05-21
* Operating system: Cross-platform
* Genre: Compiler
* License: GNU General Public License (version 3 or later)
* Website: GitHub

MILEPOST GCC is a free, community-driven, open-source, adaptive, self-tuning compiler that combines stable produ ...

Adaptive Control

Adaptive control is the control method used by a controller which must adapt to a controlled system with parameters which vary, or are initially uncertain. For example, as an aircraft flies, its mass will slowly decrease as a result of fuel consumption; a control law is needed that adapts itself to such changing conditions. Adaptive control is different from robust control in that it does not need a priori information about the bounds on these uncertain or time-varying parameters; robust control guarantees that if the changes are within given bounds the control law need not be changed, while adaptive control is concerned with the control law changing itself.

Parameter estimation

The foundation of adaptive control is parameter estimation, which is a branch of system identification. Common methods of estimation include recursive least squares and gradient descent. Both of these methods provide update laws that are used to modify estimates in real time (i.e., as the system operates). L ...
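An update law of the recursive least squares kind can be sketched for the simplest case of a single unknown parameter. The scalar model y ≈ theta * x, the function name `rls_update`, the initial covariance of 1000, and the noise-free data are assumptions chosen for illustration.

```python
def rls_update(theta, p, x, y, forgetting=1.0):
    """One scalar recursive-least-squares step for the model y ≈ theta * x."""
    gain = p * x / (forgetting + x * p * x)   # how strongly to trust the new sample
    theta = theta + gain * (y - theta * x)    # correct the estimate by the prediction error
    p = (p - gain * x * p) / forgetting       # shrink the estimate's uncertainty
    return theta, p


theta, p = 0.0, 1000.0   # initial guess and a large initial uncertainty
true_theta = 2.5         # hypothetical plant parameter, unknown to the estimator
for k in range(1, 21):
    x = float(k)
    y = true_theta * x   # noise-free measurement, for simplicity
    theta, p = rls_update(theta, p, x, y)

print(round(theta, 4))
```

Each call uses only the newest sample and the previous estimate, so the estimate can be refreshed while the system operates. Setting `forgetting` slightly below 1 would weight recent samples more heavily, which is one common way to follow a slowly varying parameter.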

Control Theory

Control theory is a field of mathematics that deals with the control of dynamical systems in engineered processes and machines. The objective is to develop a model or algorithm governing the application of system inputs to drive the system to a desired state, while minimizing any delay, overshoot, or steady-state error and ensuring a level of control stability, often with the aim of achieving a degree of optimality. To do this, a controller with the requisite corrective behavior is required. This controller monitors the controlled process variable (PV), and compares it with the reference or set point (SP). The difference between the actual and desired values of the process variable, called the "error" signal, or SP-PV error, is applied as feedback to generate a control action to bring the controlled process variable to the same value as the set point. Other aspects which are also studied are controllability and observability. Control theory is used in control system eng ...
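The feedback loop described above, in which the SP-PV error drives a control action, can be sketched with a proportional controller. The function name `simulate_p_control`, the gain `kp`, and the toy process that simply accumulates the control action are assumptions for illustration, not a model of any particular plant.

```python
def simulate_p_control(set_point, kp=0.5, steps=50, pv=0.0):
    """Drive a toy process variable toward the set point with proportional feedback."""
    history = []
    for _ in range(steps):
        error = set_point - pv        # SP-PV error
        control_action = kp * error   # proportional control law
        pv += control_action          # hypothetical process: accumulates the action
        history.append(pv)
    return history


trajectory = simulate_p_control(set_point=10.0)
print(round(trajectory[-1], 6))
```

In this toy model the error shrinks by a factor of (1 - kp) per step, so any gain between 0 and 1 approaches the set point without overshoot, while a gain between 1 and 2 would overshoot and oscillate before settling.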

Fourier Transform

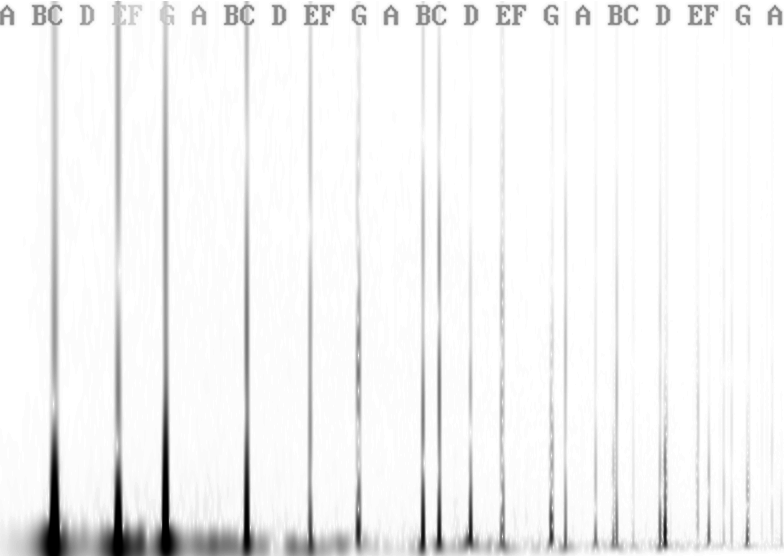

A Fourier transform (FT) is a mathematical transform that decomposes functions into frequency components, which are represented by the output of the transform as a function of frequency. Most commonly, functions of time or space are transformed, which will output a function depending on temporal frequency or spatial frequency respectively. That process is also called "analysis". An example application would be decomposing the waveform of a musical chord into the intensities of its constituent pitches. The term "Fourier transform" refers to both the frequency domain representation and the mathematical operation that associates the frequency domain representation with a function of space or time. The Fourier transform of a function is a complex-valued function representing the complex sinusoids that comprise the original function. For each frequency, the magnitude (absolute value) of the complex value represents the amplitude of a constituent complex sinusoid with that ...
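The chord example can be illustrated with the discrete analogue of the transform applied to sampled data. The sketch below is a direct (slow) discrete Fourier transform; the two-tone test signal, its amplitudes, and the name `dft` are assumptions for illustration.

```python
import cmath
import math


def dft(samples):
    """Direct discrete Fourier transform, O(n^2); for illustration only."""
    n = len(samples)
    return [
        sum(samples[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


n = 64
# Hypothetical "chord": two sinusoids completing 4 and 9 cycles over the window.
signal = [
    1.0 * math.sin(2 * math.pi * 4 * t / n) + 0.5 * math.sin(2 * math.pi * 9 * t / n)
    for t in range(n)
]

spectrum = dft(signal)
# For a real signal, scaling the magnitude by 2/n recovers each sinusoid's amplitude.
amplitudes = [2 * abs(c) / n for c in spectrum[: n // 2]]
strongest = sorted(range(n // 2), key=lambda k: amplitudes[k], reverse=True)[:2]
print(strongest)
```

The two largest entries of `amplitudes` sit at the frequency indices of the two tones, and their values match the amplitudes used to build the signal.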

Control Engineering

Control engineering or control systems engineering is an engineering discipline that deals with control systems, applying control theory to design equipment and systems with desired behaviors in control environments. The discipline of controls overlaps and is usually taught along with electrical engineering and mechanical engineering at many institutions around the world. The practice uses sensors and detectors to measure the output performance of the process being controlled; these measurements are used to provide corrective feedback helping to achieve the desired performance. Systems designed to perform without requiring human input are called automatic control systems (such as cruise control for regulating the speed of a car). Multi-disciplinary in nature, control systems engineering activities focus on implementation of control systems mainly derived by mathematical modeling of a diverse range of systems.

Overview

Modern day control engineering is a relatively new field of s ...

Jack Dongarra

Jack Joseph Dongarra (born July 18, 1950) is an American computer scientist and mathematician. He is the American University Distinguished Professor of Computer Science in the Electrical Engineering and Computer Science Department at the University of Tennessee. He is a Distinguished Research Staff member in the Computer Science and Mathematics Division at Oak Ridge National Laboratory, holds a Turing Fellowship in the School of Mathematics at the University of Manchester, and is an adjunct professor in the Computer Science Department at Rice University. He served as a faculty fellow at the Texas A&M University Institute for Advanced Study (2014–2018). Dongarra is the founding director of the Innovative Computing Laboratory at the University of Tennessee.

Education

Dongarra received a BSc degree in Mathematics from Chicago State University in 1972 and an MSc degree in Computer Science from the Illinois Institute of Technology in 1973. In 1980, he received a PhD in Ap ...

RISC

In computer engineering, a reduced instruction set computer (RISC) is a computer designed to simplify the individual instructions given to the computer to accomplish tasks. Compared to the instructions given to a complex instruction set computer (CISC), a RISC computer might require more instructions (more code) in order to accomplish a task because the individual instructions are written in simpler code. The goal is to offset the need to process more instructions by increasing the speed of each instruction, in particular by implementing an instruction pipeline, which may be simpler given simpler instructions. The key operational concept of the RISC computer is that each instruction performs only one function (e.g. copy a value from memory to a register). The RISC computer usually has many (16 or 32) high-speed, general-purpose registers with a load/store architecture in which the code for the register-register instructions (for performing arithmetic and tests) are separate fr ...

Linux

Linux is a family of open-source Unix-like operating systems based on the Linux kernel, an operating system kernel first released on September 17, 1991, by Linus Torvalds. Linux is typically packaged as a Linux distribution, which includes the kernel and supporting system software and libraries, many of which are provided by the GNU Project. Many Linux distributions use the word "Linux" in their name, but the Free Software Foundation uses the name "GNU/Linux" to emphasize the importance of GNU software, causing some controversy. Popular Linux distributions include Debian, Fedora Linux, and Ubuntu, the latter of which itself consists of many different distributions and modifications, including Lubuntu and Xubuntu. Commercial distributions include Red Hat Enterprise Linux and SUSE Linux Enterprise. Desktop Linux distributions include a windowing system such as X11 or Wayland, and a desktop environment such as GNOME or KDE Plasma. Distributions intended for ser ...

Automatically Tuned Linear Algebra Software

Automatically Tuned Linear Algebra Software (ATLAS) is a software library for linear algebra. It provides a mature open source implementation of BLAS APIs for C and Fortran77. ATLAS is often recommended as a way to automatically generate an optimized BLAS library. While its performance often trails that of specialized libraries written for one specific hardware platform, it is often the first or even only optimized BLAS implementation available on new systems and is a large improvement over the generic BLAS available at Netlib. For this reason, ATLAS is sometimes used as a performance baseline for comparison with other products. ATLAS runs on most Unix-like operating systems and on Microsoft Windows (using Cygwin). It is released under a BSD-style license without advertising clause, and many well-known mathematics applications including MATLAB, Mathematica, Scilab, SageMath, and some builds of GNU Octave may use it.

Functionality

ATLAS provides a full implementation of the ...

Microsoft SQL Server

Microsoft SQL Server is a relational database management system developed by Microsoft. As a database server, it is a software product with the primary function of storing and retrieving data as requested by other software applications, which may run either on the same computer or on another computer across a network (including the Internet). Microsoft markets at least a dozen different editions of Microsoft SQL Server, aimed at different audiences and for workloads ranging from small single-machine applications to large Internet-facing applications with many concurrent users.

History

The history of Microsoft SQL Server begins with the first Microsoft SQL Server product (SQL Server 1.0, a 16-bit server for the OS/2 operating system, in 1989) and extends to the current day. Its name is entirely descriptive, it being server software that responds to queries in the SQL language.

Milestones

* MS SQL Server for OS/2 began as a project to port Sybase SQL Server onto O ...

FFTW

The Fastest Fourier Transform in the West (FFTW) is a software library for computing discrete Fourier transforms (DFTs) developed by Matteo Frigo and Steven G. Johnson at the Massachusetts Institute of Technology. FFTW is one of the fastest free software implementations of the fast Fourier transform (FFT). It implements the FFT algorithm for real and complex-valued arrays of arbitrary size and dimension.

Library

FFTW expeditiously transforms data by supporting a variety of algorithms and choosing the one (a particular decomposition of the transform into smaller transforms) it estimates or measures to be preferable in the particular circumstances. It works best on arrays of sizes with small prime factors, with powers of two being optimal and large primes being worst case (but still O(n log n)). To decompose transforms of composite sizes into smaller transforms, it chooses among several variants of the Cooley–Tukey FFT algorithm (corresponding to different factorizations and/o ...
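The general idea of measuring several candidate algorithms and keeping the one that runs fastest can be sketched as follows. This is not FFTW's API or its planner; the function names `naive_dft`, `radix2_fft`, and `plan`, the two candidates, and the timing strategy are assumptions chosen for illustration.

```python
import cmath
import time


def naive_dft(x):
    """Direct O(n^2) discrete Fourier transform."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def radix2_fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; the length must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even = radix2_fft(x[0::2])
    odd = radix2_fft(x[1::2])
    twiddled = [cmath.exp(-2j * cmath.pi * k / n) * odd[k] for k in range(n // 2)]
    return ([even[k] + twiddled[k] for k in range(n // 2)]
            + [even[k] - twiddled[k] for k in range(n // 2)])


def plan(candidates, sample, repeats=3):
    """Time each candidate on `sample` and return the fastest one."""
    def best_time(fn):
        times = []
        for _ in range(repeats):
            start = time.perf_counter()
            fn(sample)
            times.append(time.perf_counter() - start)
        return min(times)
    return min(candidates, key=best_time)


sample = [complex(t % 7, 0) for t in range(256)]
chosen = plan([naive_dft, radix2_fft], sample)
result = chosen(sample)
print(chosen.__name__)
```

Because the choice is made by measurement on the machine at hand, the selected function may differ between systems and input sizes, which is the self-tuning aspect of the approach.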

Objective Function

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its opposite (in specific domains, variously called a reward function, a profit function, a utility function, a fitness function, etc.), in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy. In statistics, typically a loss function is used for parameter estimation, and the event in question is some function of the difference between estimated and true values for an instance of data. The concept, as old as Laplace, was reintroduced in statistics by Abraham Wald in the middle of the 20th century. In the context of economics, for example, this ...
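The relationship between a loss function and its opposite can be made concrete with a small sketch. Squared error is used here as one common choice of loss; the function names, the observations, and the grid of candidate estimates are assumptions for illustration.

```python
def squared_error_loss(estimate, observations):
    """Cost of an estimate: sum of squared differences from the observed values."""
    return sum((estimate - y) ** 2 for y in observations)


def reward(estimate, observations):
    """The opposite of the loss: larger is better."""
    return -squared_error_loss(estimate, observations)


observations = [2.0, 3.0, 7.0]
candidates = [i / 10 for i in range(0, 101)]  # hypothetical search grid: 0.0 to 10.0

best_by_loss = min(candidates, key=lambda c: squared_error_loss(c, observations))
best_by_reward = max(candidates, key=lambda c: reward(c, observations))
print(best_by_loss, best_by_reward)
```

Minimizing the loss and maximizing the reward select the same estimate, which for squared error is the mean of the observations.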
