
Structure From Motion

Structure from motion (SfM) is a photogrammetric range imaging technique for estimating three-dimensional structures from two-dimensional image sequences that may be coupled with local motion signals. It is studied in the fields of computer vision and visual perception. In biological vision, SfM refers to the phenomenon by which humans (and other living creatures) can recover 3D structure from the projected 2D (retinal) motion field of a moving object or scene.

Principle: Humans perceive a great deal of information about the three-dimensional structure of their environment by moving around it. When the observer moves, objects around them appear to move by different amounts depending on their distance from the observer. This is known as motion parallax, and the depth information it provides can be used to generate an accurate 3D representation of the world around them. Finding structure from motion presents a similar problem to finding structure from stereo vision. In both instances, the correspo ...

Photogrammetry

Photogrammetry is the science and technology of obtaining reliable information about physical objects and the environment through the process of recording, measuring and interpreting photographic images and patterns of electromagnetic radiant imagery and other phenomena. The term photogrammetry was coined by the Prussian architect Albrecht Meydenbauer and appeared in his 1867 article "Die Photometrographie." There are many variants of photogrammetry. One example is the extraction of three-dimensional measurements from two-dimensional data (i.e. images); for example, the distance between two points that lie on a plane parallel to the photographic image plane can be determined by measuring their distance on the image, if the scale of the image is known. Another is the extraction of accurate color ranges and values representing such quantities as albedo, specular reflection, metallicity, or ambient occlusion from photographs of mater ...
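The scale-based measurement described above can be sketched in a few lines. This is only an illustration: the function name `ground_distance`, the millimetre and metre units, and the 1:5000 scale are assumptions chosen for the example, not part of the source text.

```python
def ground_distance(image_distance_mm: float, scale_denominator: float) -> float:
    """Real-world distance in metres for a length measured on an image.

    Assumes both points lie on a plane parallel to the image plane and that
    the image scale is 1:scale_denominator (hypothetical parameter name).
    """
    # Scale the image measurement up, then convert millimetres to metres.
    return image_distance_mm * scale_denominator / 1000.0


# Two points 25 mm apart on a 1:5000 image.
distance_m = ground_distance(25.0, 5000.0)
print(f"{distance_m:.1f} m")
```

The relation only holds for points on a plane parallel to the image plane; points at other depths are imaged at a different scale.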

RANSAC

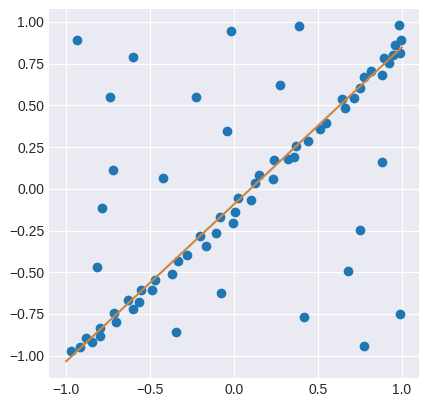

Random sample consensus (RANSAC) is an iterative method to estimate parameters of a mathematical model from a set of observed data that contains outliers, when outliers are to be accorded no influence on the values of the estimates. It can therefore also be interpreted as an outlier detection method. It is a non-deterministic algorithm in the sense that it produces a reasonable result only with a certain probability, with this probability increasing as more iterations are allowed. The algorithm was first published by Fischler and Bolles at SRI International in 1981. They used RANSAC to solve the Location Determination Problem (LDP), where the goal is to determine the points in space that project onto an image as a set of landmarks with known locations. RANSAC uses repeated random sub-sampling. A basic assumption is that the data consists of "inliers", i.e., data whose distribution can be explained by some set of model parameters, though they may be subject to noise, and "outlier ...
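A minimal sketch of the sample-and-score loop, applied to fitting a 2D line, may make the idea concrete. The function names, the inlier threshold, the iteration count and the synthetic data are all assumptions made for illustration, and the refitting step that many implementations run on the final inlier set is left out.

```python
import random


def fit_line(p, q):
    """Slope and intercept of the line through two points (requires p[0] != q[0])."""
    (x1, y1), (x2, y2) = p, q
    slope = (y2 - y1) / (x2 - x1)
    return slope, y1 - slope * x1


def ransac_line(points, iterations=200, threshold=0.5, seed=0):
    """Return the (slope, intercept) supported by the most inliers, plus those inliers."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    best_model, best_inliers = None, []
    for _ in range(iterations):
        # 1. Draw a minimal random sample: two points define a line.
        p, q = rng.sample(points, 2)
        if p[0] == q[0]:
            continue  # vertical pair cannot be written as y = m*x + b
        m, b = fit_line(p, q)
        # 2. Collect the points whose vertical residual is within the threshold.
        inliers = [(x, y) for x, y in points if abs(y - (m * x + b)) <= threshold]
        # 3. Keep the model with the largest consensus set seen so far.
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = (m, b), inliers
    return best_model, best_inliers


# Ten points on y = 2x + 1 plus three gross outliers.
points = [(float(x), 2.0 * x + 1.0) for x in range(10)]
points += [(2.0, 30.0), (5.0, -12.0), (7.0, 40.0)]
model, inliers = ransac_line(points)
print(model, len(inliers))
```

Because the outliers never enter the winning sample, they have no influence on the estimated slope and intercept, which is the property the paragraph above describes.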

Semi-global Matching

Semi-global matching (SGM) is a computer vision algorithm for the estimation of a dense disparity map from a rectified stereo image pair, introduced in 2005 by Heiko Hirschmüller while working at the German Aerospace Center (Hirschmüller 2005, pp. 807–814). Given its predictable run time, its favourable trade-off between quality of the results and computing time, and its suitability for fast parallel implementation in ASIC or FPGA, it has been widely adopted in real-time stereo vision applications such as robotics and advanced driver assistance systems (Hirschmüller 2011, pp. 178–184).

Problem: Pixelwise stereo matching allows real-time calculation of disparity maps by measuring the similarity of each pixel in one stereo image to each pixel within a subset in the other stereo image. Given a rectified stereo image pair, for a pixel with coordinates $(x, y)$ the set of pixels in the other image is usually selected as $\{(\hat{x}, y) \mid x \le \hat{x} \le x + D\}$, where $D$ is a maximum allowed disparity shi ...
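The pixelwise matching step can be sketched for a single scanline as follows. This is plain winner-takes-all matching with an absolute-difference cost, not SGM itself (there is no smoothness term), and it assumes that candidates lie on the same row at offsets 0 to D to the right; the function name and the toy intensity rows are hypothetical.

```python
def pixelwise_disparity(row_a, row_b, max_disparity):
    """Winner-takes-all disparity for one scanline of a rectified pair.

    For each pixel x in row_a, the candidates in row_b are x, x+1, ..., x+D
    (assumed search direction), scored by absolute intensity difference.
    """
    disparities = []
    for x, value in enumerate(row_a):
        best_d, best_cost = 0, float("inf")
        for d in range(max_disparity + 1):
            if x + d >= len(row_b):
                break  # candidate would fall outside the image
            cost = abs(value - row_b[x + d])
            if cost < best_cost:
                best_d, best_cost = d, cost
        disparities.append(best_d)
    return disparities


# row_b is row_a shifted right by two pixels (padding with zeros).
row_a = [10, 50, 90, 130, 170, 0, 0]
row_b = [0, 0, 10, 50, 90, 130, 170]
print(pixelwise_disparity(row_a, row_b, max_disparity=3))
```

Each pixel is decided independently here, which is why purely pixelwise results tend to be noisy on real images.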

Motion Parallax

Parallax is a displacement or difference in the apparent position of an object viewed along two different lines of sight and is measured by the angle or semi-angle of inclination between those two lines. Due to foreshortening, nearby objects show a larger parallax than farther objects when observed from different positions, so parallax can be used to determine distances. To measure large distances, such as the distance of a planet or a star from Earth, astronomers use the principle of parallax. Here, the term parallax is the semi-angle of inclination between two sight-lines to the star, as observed when Earth is on opposite sides of the Sun in its orbit. These distances form the lowest rung of what is called "the cosmic distance ladder", the first in a succession of methods by which astronomers determine the distances to celestial objects, serving as a basis for other distance measurements in astronomy forming the higher rungs of the ladder. Parallax also affects optical ...

Motion Field

In computer vision, the motion field is an ideal representation of 3D motion as it is projected onto a camera image. Given a simplified camera model, each point $(y_1, y_2)$ in the image is the projection of some point in the 3D scene, but the position of the projection of a fixed point in space can vary with time. The motion field can formally be defined as the time derivative of the image position of all image points, given that they correspond to fixed 3D points. This means that the motion field can be represented as a function that maps image coordinates to a 2-dimensional vector. The motion field is an ideal description of the projected 3D motion in the sense that it can be formally defined, but in practice it is normally only possible to determine an approximation of the motion field from the image data.

Introduction: A camera model maps each point $(x_1, x_2, x_3)$ in 3D space to a 2D image point $(y_1, y_2)$ according to some mapping functions $m_1, m_2$: $\begin{pmatrix} y_1 \\ y_2 \end{pmatrix}$ ...
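As an illustration of the time-derivative definition, the sketch below assumes one particular camera model, the pinhole projection $y_k = f x_k / x_3$, which the text above does not specify. Differentiating that projection gives $\dot{y}_k = f (\dot{x}_k x_3 - x_k \dot{x}_3) / x_3^2$; the function name and the example points are hypothetical.

```python
def motion_field_pinhole(point, velocity, focal_length=1.0):
    """Image-plane velocity of a 3D point under an assumed pinhole camera.

    point    = (x1, x2, x3), with x3 > 0 the depth along the optical axis
    velocity = time derivative of the point, in the same coordinates
    """
    x1, x2, x3 = point
    v1, v2, v3 = velocity
    # Quotient rule applied to y_k = f * x_k / x3.
    u1 = focal_length * (v1 * x3 - x1 * v3) / (x3 * x3)
    u2 = focal_length * (v2 * x3 - x2 * v3) / (x3 * x3)
    return u1, u2


# Same sideways 3D motion, two different depths.
far = motion_field_pinhole((1.0, 2.0, 4.0), (1.0, 0.0, 0.0))
near = motion_field_pinhole((1.0, 2.0, 2.0), (1.0, 0.0, 0.0))
print(far, near)
```

Under this model the nearer point moves faster in the image than the farther one for the same 3D velocity.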

Match Moving

In visual effects, match moving is a technique that allows the insertion of computer graphics into live-action footage with correct position, scale, orientation, and motion relative to the photographed objects in the shot. The term is used loosely to describe several different methods of extracting camera motion information from a motion picture. Sometimes referred to as motion tracking or camera solving, match moving is related to rotoscoping and photogrammetry. Match moving is sometimes confused with motion capture, which records the motion of objects, often human actors, rather than the camera. Typically, motion capture requires special cameras and sensors and a controlled environment (although recent developments such as the Kinect camera and Apple's Face ID have begun to change this). Match moving is also distinct from motion control photography, which uses mechanical hardware to execute multiple identical camera moves. Match moving, by contrast, is typically a software-base ...

Epipolar Geometry

Epipolar geometry is the geometry of stereo vision. When two cameras view a 3D scene from two distinct positions, there are a number of geometric relations between the 3D points and their projections onto the 2D images that lead to constraints between the image points. These relations are derived based on the assumption that the cameras can be approximated by the pinhole camera model.

Definitions: The figure below depicts two pinhole cameras looking at point $X$. In real cameras, the image plane is actually behind the focal center, and produces an image that is symmetric about the focal center of the lens. Here, however, the problem is simplified by placing a virtual image plane in front of the focal center, i.e., the optical center of each camera lens, to produce an image not transformed by the symmetry. $O_L$ and $O_R$ represent the centers of symmetry of the two cameras' lenses. $X$ represents the point of interest in both cameras. Points $x_L$ and $x_R$ are the projections of point $X$ onto th ...

Computer Stereo Vision

Computer stereo vision is the extraction of 3D information from digital images, such as those obtained by a CCD camera. By comparing information about a scene from two vantage points, 3D information can be extracted by examining the relative positions of objects in the two panels. This is similar to the biological process of stereopsis.

Outline: In traditional stereo vision, two cameras, displaced horizontally from one another, are used to obtain two differing views on a scene, in a manner similar to human binocular vision. By comparing these two images, the relative depth information can be obtained in the form of a disparity map, which encodes the difference in horizontal coordinates of corresponding image points. The values in this disparity map are inversely proportional to the scene depth at the corresponding pixel location. For a human to compare the two images, they must be superimposed in a stereoscopic device, with the image from the right camera being shown to the ob ...
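The inverse relation between disparity and depth can be made concrete with the standard rectified pinhole stereo formula $Z = f B / d$, which is an assumption here since the text above does not give it. The function name, parameter names and example numbers are hypothetical.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Scene depth in metres from a disparity in pixels, using Z = f * B / d.

    Assumes a rectified pair of identical pinhole cameras with focal length
    in pixels and a horizontal baseline in metres.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


# Doubling the disparity halves the estimated depth.
z_far = depth_from_disparity(20.0, focal_length_px=800.0, baseline_m=0.1)
z_near = depth_from_disparity(40.0, focal_length_px=800.0, baseline_m=0.1)
print(f"{z_far:.2f} m, {z_near:.2f} m")
```

A disparity of zero corresponds to a point at infinity, which is why the sketch rejects non-positive values instead of dividing by them.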

Comparison Of Photogrammetry Software

Photogrammetry is the technique of extracting geometric information from two-dimensional images or video. See also: MicMac (software), an open-source photogrammetry package developed by the French National Geographic Institute; PCI Geomatica; bundle adjustment software; structure from motion software ...

Bundle Adjustment

In photogrammetry and computer stereo vision, bundle adjustment is the simultaneous refinement of the 3D coordinates describing the scene geometry, the parameters of the relative motion, and the optical characteristics of the camera(s) employed to acquire the images, given a set of images depicting a number of 3D points from different viewpoints. Its name refers to the geometrical bundles of light rays originating from each 3D feature and converging on each camera's optical center, which are adjusted optimally according to an optimality criterion involving the corresponding image projections of all points.

Uses: Bundle adjustment is almost always used as the last step of every feature-based 3D reconstruction algorithm. It amounts to an optimization problem on the 3D structure and viewing parameters (i.e., camera pose and possibly intrinsic calibration and radial distortion), to obtain a reconstruction which is optimal under certain assumptions regarding the noise pertaining t ...

3D Reconstruction From Multiple Images

3D reconstruction from multiple images is the creation of three-dimensional models from a set of images. It is the reverse process of obtaining 2D images from 3D scenes. An image is essentially a projection from a 3D scene onto a 2D plane, and depth is lost in the process. The 3D point corresponding to a specific image point is constrained to be on the line of sight. From a single image, it is impossible to determine which point on this line corresponds to the image point. If two images are available, then the position of a 3D point can be found as the intersection of the two projection rays. This process is referred to as triangulation. The key to this process is the relations between multiple views, which convey the information that corresponding sets of points must contain some structure and that this structure is related to the poses and the calibration of the camera. In recent decades, there has been significant demand for 3D content for computer graphics, virtual ...
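Triangulation from two projection rays can be sketched with the midpoint method, which is an assumption here: the text above speaks only of intersecting the rays, and with noisy data the rays generally do not meet exactly, so the sketch returns the midpoint of their closest approach. Camera centres, ray directions and the function name are hypothetical inputs.

```python
def triangulate_midpoint(c1, d1, c2, d2):
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2."""
    def dot(u, v):
        return sum(a * b for a, b in zip(u, v))

    w = [a - b for a, b in zip(c1, c2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; depth is not recoverable")
    # Parameters of the closest points on each ray.
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    return [(u + v) / 2.0 for u, v in zip(p1, p2)]


# Two camera centres one unit apart, both looking at the point (0.5, 0.2, 4).
target = (0.5, 0.2, 4.0)
c1, c2 = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
d1 = tuple(t - c for t, c in zip(target, c1))
d2 = tuple(t - c for t, c in zip(target, c2))
print(triangulate_midpoint(c1, d1, c2, d2))
```

With a single ray there is no second constraint to fix the parameter along the line of sight, which is the depth ambiguity the paragraph above describes.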