Stochastic Thermodynamics

Overview: When a microscopic machine (e.g. a MEMS device) performs useful work, it generates heat and entropy as a byproduct. It is also predicted, however, that such a machine will operate in "reverse" or "backwards" over appreciable short periods; that is, heat energy from the surroundings will be converted into useful work. For larger engines, this would be described as a violation of the second law of thermodynamics, as entropy is consumed rather than generated. Loschmidt's paradox states that in a time-reversible system, for every trajectory there exists a time-reversed anti-trajectory. Because the entropy production of a trajectory and that of its anti-trajectory are equal in magnitude but opposite in sign, the argument goes, one cannot prove that entropy production is positive. For a long time, exact results in thermodynamics were only possible in linear systems capable of reaching equilibrium, leaving other questions like the Loschmidt paradox unsolved. During the las ...

Statistical Mechanics

In physics, statistical mechanics is a mathematical framework that applies statistical methods and probability theory to large assemblies of microscopic entities. It does not assume or postulate any natural laws, but explains the macroscopic behavior of nature from the behavior of such ensembles. Statistical mechanics arose out of the development of classical thermodynamics, a field for which it was successful in explaining macroscopic physical properties (such as temperature, pressure, and heat capacity) in terms of microscopic parameters that fluctuate about average values and are characterized by probability distributions. This established the fields of statistical thermodynamics and statistical physics. The founding of the field of statistical mechanics is generally credited to three physicists:
* Ludwig Boltzmann, who developed the fundamental interpretation of entropy in terms of a collection of microstates
* James Clerk Maxwell, who developed models of probability ...

Crooks Fluctuation Theorem

The Crooks fluctuation theorem (CFT), sometimes known as the Crooks equation, is an equation in statistical mechanics that relates the work done on a system during a non-equilibrium transformation to the free energy difference between the final and the initial state of the transformation. During the non-equilibrium transformation the system is at constant volume and in contact with a heat reservoir. The CFT is named after the chemist Gavin E. Crooks (then at the University of California, Berkeley), who discovered it in 1998. The most general statement of the CFT relates the probability of a space-time trajectory $x(t)$ to the time-reversal of the trajectory $\tilde{x}(t)$. The theorem says that if the dynamics of the system satisfies microscopic reversibility, then the forward time trajectory is exponentially more likely than the reverse, given that it produces entropy: $\frac{P[x(t)]}{\tilde{P}[\tilde{x}(t)]} = e^{\sigma[x(t)]}$. If one defines a generic reaction coordinate of the system as a function of the Cartesian coordinates of the co ...
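
As a rough illustration of the trajectory form of the theorem, the sketch below evaluates how strongly the forward trajectory is favored for a few values of entropy production. The function name `forward_to_reverse_ratio` is hypothetical, and the entropy production `sigma` is assumed to be dimensionless (expressed in units of the Boltzmann constant).

```python
import math


def forward_to_reverse_ratio(sigma: float) -> float:
    """Return exp(sigma), the forward-to-reverse trajectory probability ratio.

    Assumption: sigma is the entropy production along the forward
    trajectory, given as a dimensionless number (units of k_B).
    """
    return math.exp(sigma)


if __name__ == "__main__":
    # Negative sigma (entropy-consuming trajectories) gives a ratio below 1,
    # so such trajectories are exponentially rarer than their reversals.
    for sigma in (-1.0, 0.0, 1.0, 5.0):
        ratio = forward_to_reverse_ratio(sigma)
        print(f"sigma = {sigma:+.1f}  ratio = {ratio:.4g}")
```

A trajectory with zero entropy production is exactly as likely as its reversal, and the ratios for `sigma` and `-sigma` are reciprocals of each other.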

Boltzmann Statistics

Ludwig Eduard Boltzmann (20 February 1844 – 5 September 1906) was an Austrian physicist and philosopher. His greatest achievements were the development of statistical mechanics, and the statistical explanation of the second law of thermodynamics. In 1877 he provided the current definition of entropy, $S = k_B \ln \Omega$, where Ω is the number of microstates whose energy equals the system's energy, interpreted as a measure of statistical disorder of a system. Max Planck named the constant $k_B$ the Boltzmann constant. Statistical mechanics is one of the pillars of modern physics. It describes how macroscopic observations (such as temperature and pressure) are related to microscopic parameters that fluctuate around an average. It connects thermodynamic quantities (such as heat capacity) to microscopic behavior, whereas, in classical thermodynamics, the only available option would be to measure and tabulate such quantities for various materials. Biography Childhood and educatio ...
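
The entropy definition above can be evaluated directly. The following is a minimal sketch, assuming the exact SI value of the Boltzmann constant; the names `K_B` and `boltzmann_entropy` are illustrative and not taken from the source.

```python
import math

# Boltzmann constant in J/K (fixed exactly by the 2019 SI redefinition).
K_B = 1.380649e-23


def boltzmann_entropy(num_microstates: float) -> float:
    """Return S = k_B * ln(Omega) in J/K for Omega accessible microstates."""
    if num_microstates < 1:
        raise ValueError("the number of microstates must be at least 1")
    return K_B * math.log(num_microstates)


if __name__ == "__main__":
    # A single microstate carries zero entropy; doubling Omega adds k_B * ln 2.
    print(boltzmann_entropy(1))
    print(boltzmann_entropy(2e6) - boltzmann_entropy(1e6))
```

Because the entropy is logarithmic in Ω, doubling the number of microstates always adds the same amount, `K_B * math.log(2)`, regardless of the starting count.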

Quantum Annealing

Quantum annealing (QA) is an optimization process for finding the global minimum of a given objective function over a given set of candidate solutions (candidate states), by a process using quantum fluctuations. Quantum annealing is used mainly for problems where the search space is discrete (combinatorial optimization problems) with many local minima, such as finding the ground state of a spin glass or the traveling salesman problem. The term "quantum annealing" was first proposed in 1988 by B. Apolloni, N. Cesa Bianchi and D. De Falco as a quantum-inspired classical algorithm. It was formulated in its present form by T. Kadowaki and H. Nishimori in "Quantum annealing in the transverse Ising model", though an imaginary-time variant without quantum coherence had been discussed by A. B. Finnila, M. A. Gomez, C. Sebenik and J. D. Doll, in "Quantum annealing is a new method for minimizing multidimensional functions". Quantum annealing starts from a quantum-mechanical superp ...

Loss Function

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its opposite (in specific domains, variously called a reward function, a profit function, a utility function, a fitness function, etc.), in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy. In statistics, typically a loss function is used for parameter estimation, and the event in question is some function of the difference between estimated and true values for an instance of data. The concept, as old as Laplace, was reintroduced in statistics by Abraham Wald in the middle of the 20th century. In the context of economics, for example ...
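
To make the statistical usage concrete, here is a small sketch of one common choice, the squared-error loss, which is a function of the difference between estimated and true values. The excerpt does not name a specific loss, so this choice and the names `squared_error_loss` and `mean_loss` are assumptions made for illustration.

```python
from typing import Sequence


def squared_error_loss(estimate: float, true_value: float) -> float:
    """Map one (estimate, true value) pair to a non-negative real cost."""
    return (estimate - true_value) ** 2


def mean_loss(estimates: Sequence[float], true_values: Sequence[float]) -> float:
    """Average the squared-error loss over paired estimates and true values."""
    if len(estimates) != len(true_values) or not estimates:
        raise ValueError("inputs must be non-empty and of equal length")
    losses = [squared_error_loss(e, t) for e, t in zip(estimates, true_values)]
    return sum(losses) / len(losses)


if __name__ == "__main__":
    print(squared_error_loss(3.0, 1.0))
    print(mean_loss([1.0, 2.0], [1.0, 4.0]))
```

An optimization routine would then search for the parameters that make `mean_loss` as small as possible.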

Quantum Entanglement

Quantum entanglement is the phenomenon that occurs when a group of particles are generated, interact, or share spatial proximity in a way such that the quantum state of each particle of the group cannot be described independently of the state of the others, including when the particles are separated by a large distance. The topic of quantum entanglement is at the heart of the disparity between classical and quantum physics: entanglement is a primary feature of quantum mechanics not present in classical mechanics. Measurements of physical properties such as position, momentum, spin, and polarization performed on entangled particles can, in some cases, be found to be perfectly correlated. For example, if a pair of entangled particles is generated such that their total spin is known to be zero, and one particle is found to have clockwise spin on a first axis, then the spin of the other particle, measured on the same axis, is found to be anticlockwise. However, this behavior gives ...

Many-worlds

The many-worlds interpretation (MWI) is an interpretation of quantum mechanics that asserts that the universal wavefunction is objectively real, and that there is no wave function collapse. This implies that all possible outcomes of quantum measurements are physically realized in some "world" or universe. In contrast to some other interpretations, such as the Copenhagen interpretation, the evolution of reality as a whole in MWI is rigidly deterministic and local. Many-worlds is also called the relative state formulation or the Everett interpretation, after physicist Hugh Everett, who first proposed it in 1957 (Hugh Everett, Theory of the Universal Wavefunction, Thesis, Princeton University (1956, 1973), pp. 1–140). Bryce DeWitt popularized the formulation and named it "many-worlds" in the 1970s. See also Cecile M. DeWitt, John A. Wheeler (eds.), The Everett–Wheeler Interpretation of Quantum Mechanics, Battelle Rencontres: 1967 Lectures in Mathematics and Physics (1968); Bryce ...

Copenhagen Interpretation

The Copenhagen interpretation is a collection of views about the meaning of quantum mechanics, principally attributed to Niels Bohr and Werner Heisenberg. It is one of the oldest of numerous proposed interpretations of quantum mechanics, as features of it date to the development of quantum mechanics during 1925–1927, and it remains one of the most commonly taught. There is no definitive historical statement of what the Copenhagen interpretation is. There are some fundamental agreements and disagreements between the views of Bohr and Heisenberg. For example, Heisenberg emphasized a sharp "cut" between the observer (or the instrument) and the system being observed, while Bohr offered an interpretation that is independent of a subjective observer or measurement or collapse, which relies on an "irreversible" or effectively irreversible process, which could take place within the quantum system. Features common to Copenhagen-type interpretations include the idea that quantum mechan ...

Maxwell's Demon

Maxwell's demon is a thought experiment that would hypothetically violate the second law of thermodynamics. It was proposed by the physicist James Clerk Maxwell in 1867. In his first letter Maxwell called the demon a "finite being", while the name "Daemon" was first used by Lord Kelvin. In the thought experiment, a demon controls a small massless door between two chambers of gas. As individual gas molecules (or atoms) approach the door, the demon quickly opens and closes the door to allow only fast-moving molecules to pass through in one direction, and only slow-moving molecules to pass through in the other. Because the kinetic temperature of a gas depends on the velocities of its constituent molecules, the demon's actions cause one chamber to warm up and the other to cool down. This would decrease the total entropy of the two gases, without applying any work, thereby violating the second law of thermodynamics. The concept of Maxwell's demon has provoked substantial debate ...

Carnot's Theorem (thermodynamics)

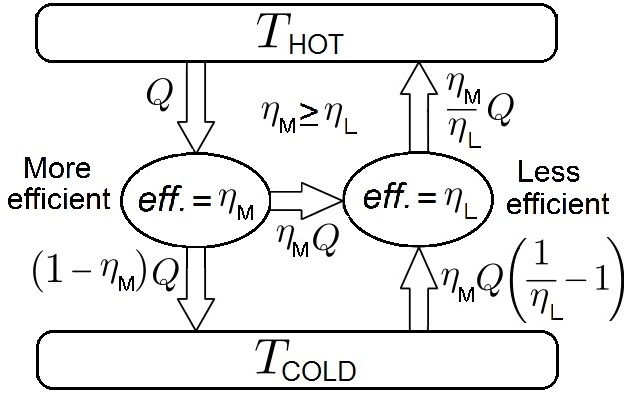

In thermodynamics, Carnot's theorem, also called Carnot's rule, is a principle developed in 1824 by Nicolas Léonard Sadi Carnot that specifies limits on the maximum efficiency that any heat engine can obtain. Carnot's theorem states that no heat engine operating between two thermal reservoirs can have an efficiency greater than that of a reversible heat engine operating between the same reservoirs. A corollary of this theorem is that every reversible heat engine operating between a pair of heat reservoirs is equally efficient, regardless of the working substance employed or the operation details. Since a Carnot heat engine is also a reversible engine, the efficiency of every reversible heat engine equals the efficiency of the Carnot heat engine, which depends solely on the temperatures of its hot and cold reservoirs. The maximum efficiency (i.e., the Carnot heat engine efficiency) of a heat engine operating between cold and hot reservoirs, denoted ...
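
The excerpt is cut off before it states the efficiency formula. As a sketch only, the code below uses the standard textbook expression for Carnot efficiency, one minus the ratio of the cold to the hot reservoir temperature, with both temperatures in kelvin. The function name `carnot_efficiency` and its parameter names are hypothetical.

```python
def carnot_efficiency(t_cold: float, t_hot: float) -> float:
    """Return the Carnot efficiency 1 - T_cold / T_hot.

    Assumption: both temperatures are absolute (kelvin), with
    0 < t_cold <= t_hot.
    """
    if not 0 < t_cold <= t_hot:
        raise ValueError("require 0 < t_cold <= t_hot (absolute temperatures)")
    return 1.0 - t_cold / t_hot


if __name__ == "__main__":
    # Upper bound on efficiency for reservoirs at 300 K and 600 K.
    print(carnot_efficiency(300.0, 600.0))
```

The result depends only on the two reservoir temperatures, consistent with the corollary that the working substance and operating details do not matter for a reversible engine.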

Quantum Correlation

In quantum mechanics, quantum correlation is the expected value of the product of the alternative outcomes. In other words, it is the expected change in physical characteristics as one quantum system passes through an interaction site. In John Bell's 1964 paper that inspired the Bell test, it was assumed that the outcomes A and B could each only take one of two values, -1 or +1. It followed that the product, too, could only be -1 or +1, so that the average value of the product would be $\frac{N_{++} - N_{+-} - N_{-+} + N_{--}}{N_\text{total}}$ where, for example, $N_{++}$ is the number of simultaneous instances ("coincidences") of the outcome +1 on both sides of the experiment. However, in actual experiments, detectors are not perfect and produce many null outcomes. The correlation can still be estimated using the sum of coincidences, since clearly zeros do not contribute to the average, but in practice, instead of dividing by $N_\text{total}$, it is customary to divide by $N_{++} + N_{+-} + N_{-+} + N_{--}$, the total number of observed coincidences. The leg ...
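
The estimate described above, the signed sum of coincidence counts divided by the total number of observed coincidences, can be sketched as follows. The function name `correlation_estimate` and its argument names are illustrative assumptions; the four arguments are the coincidence counts for the outcome pairs (+1, +1), (+1, -1), (-1, +1) and (-1, -1).

```python
def correlation_estimate(n_pp: int, n_pm: int, n_mp: int, n_mm: int) -> float:
    """Estimate the correlation from the four coincidence counts.

    Matching outcomes (++ and --) contribute a product of +1, and
    mismatched outcomes (+- and -+) contribute -1. Null detections are
    excluded by normalizing with the observed coincidences only.
    """
    observed = n_pp + n_pm + n_mp + n_mm
    if observed == 0:
        raise ValueError("no coincidences were observed")
    return (n_pp - n_pm - n_mp + n_mm) / observed


if __name__ == "__main__":
    print(correlation_estimate(50, 0, 0, 50))    # outcomes always match
    print(correlation_estimate(0, 50, 50, 0))    # outcomes always differ
    print(correlation_estimate(25, 25, 25, 25))  # no correlation
```

The estimate always lies between -1 and +1, reaching the extremes only when every observed coincidence has matching or opposite outcomes.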