
Software Development Effort Estimation

In software development, effort estimation is the process of predicting the most realistic amount of effort (expressed in terms of person-hours or money) required to develop or maintain software based on incomplete, uncertain and noisy input. Effort estimates may be used as input to project plans, iteration plans, budgets, investment analyses, pricing processes and bidding rounds.

As for the state of practice, published surveys on estimation practice suggest that expert estimation is the dominant strategy when estimating software development effort. Typically, effort estimates are over-optimistic, and there is strong overconfidence in their accuracy. The mean effort overrun seems to be about 30% and is not decreasing over time. However, the measurement of estimation error is itself problematic (see "Assessing the accuracy of estimates"). The strong overconfidence in the accuracy of the effort estimates is illustrated by the finding that, on average, i ...
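To make "effort overrun" and "estimation error" concrete, the sketch below computes two common measures for a handful of projects. The first is the relative overrun, (actual − estimate) / estimate. The second is the magnitude of relative error (MRE), |actual − estimate| / actual, which is averaged into MMRE. The function names and the (estimate, actual) pairs are hypothetical illustrations and are not data from any survey.

```python
from statistics import mean


def overrun(estimate: float, actual: float) -> float:
    """Relative effort overrun: positive when actual effort exceeded the estimate."""
    return (actual - estimate) / estimate


def mre(estimate: float, actual: float) -> float:
    """Magnitude of relative error, measured against the actual effort."""
    return abs(actual - estimate) / actual


# Hypothetical (estimated, actual) effort pairs in person-hours.
projects = [(100.0, 130.0), (200.0, 240.0), (80.0, 76.0)]

mean_overrun = mean(overrun(e, a) for e, a in projects)
mmre = mean(mre(e, a) for e, a in projects)
print(f"mean overrun = {mean_overrun:.1%}, MMRE = {mmre:.1%}")
```

The two measures divide by different quantities: overrun divides by the estimate and MRE divides by the actual effort. For the same data they can therefore rank estimators differently, which is one reason the choice of accuracy measure matters.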

Software Development

Software development is the process of conceiving, specifying, designing, programming, documenting, testing, and bug fixing involved in creating and maintaining applications, frameworks, or other software components. Software development involves writing and maintaining the source code, but in a broader sense it includes all processes from the conception of the desired software through to its final manifestation, typically in a planned and structured process. Software development also includes research, new development, prototyping, modification, reuse, re-engineering, maintenance, or any other activities that result in software products.

No single system development methodology is necessarily suitable for all projects. Each of the available methodologies is best suited to specific kinds of projects, based on various technical, organizational, project, and team considerations.

Software development activities include identification of need. The ...

COCOMO

The Constructive Cost Model (COCOMO) is a procedural software cost estimation model developed by Barry W. Boehm. The model parameters are derived by fitting a regression formula to data from historical projects (63 projects for COCOMO 81 and 163 projects for COCOMO II).

Boehm developed the model in the late 1970s and published it in his 1981 book Software Engineering Economics as a model for estimating effort, cost, and schedule for software projects. It drew on a study of 63 projects at TRW Aerospace, where Boehm was Director of Software Research and Technology. The study examined projects ranging in size from 2,000 to 100,000 lines of code, in programming languages ranging from assembly to PL/I. These projects were based on the waterfall model of software development, which was the prevalent software development process in 1981. References to this model typically call it COCOMO 81. In 1995 COCOMO II was developed ...
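The simplest form of COCOMO 81, usually called Basic COCOMO, estimates effort as a power function of size in thousands of lines of code (KLOC). It then derives the schedule from that effort. The sketch below uses the published Basic COCOMO coefficients for Boehm's three project modes: organic, semi-detached and embedded. It deliberately omits the cost-driver multipliers of Intermediate and Detailed COCOMO and the scale factors of COCOMO II. The function name `basic_cocomo` is a hypothetical helper, not part of any library.

```python
# Basic COCOMO 81: effort = a * KLOC**b (person-months), schedule = c * effort**d (months).
COEFFICIENTS = {
    "organic": (2.4, 1.05, 2.5, 0.38),
    "semi-detached": (3.0, 1.12, 2.5, 0.35),
    "embedded": (3.6, 1.20, 2.5, 0.32),
}


def basic_cocomo(kloc: float, mode: str = "organic") -> tuple[float, float, float]:
    """Return (effort in person-months, schedule in months, average staffing)."""
    a, b, c, d = COEFFICIENTS[mode]
    effort = a * kloc ** b          # nonlinear in size: b > 1 models diseconomies of scale
    schedule = c * effort ** d      # development time grows much more slowly than effort
    return effort, schedule, effort / schedule


effort, months, staff = basic_cocomo(32, "organic")
print(f"{effort:.1f} person-months over {months:.1f} months, ~{staff:.1f} people")
```

For a 32 KLOC organic project this gives roughly 91 person-months. The exponent b grows from organic to embedded, so the gap between modes widens as projects get larger.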

Story Point

A burndown chart or burn down chart is a graphical representation of work left to do versus time. The outstanding work (or backlog) is often on the vertical axis, with time along the horizontal. A burn down chart is a run chart of outstanding work. It is useful for predicting when all of the work will be completed. It is often used in agile software development methodologies such as Scrum. However, burn down charts can be applied to any project containing measurable progress over time. Outstanding work can be represented in terms of either time or story points (a sort of arbitrary unit).

A burn down chart for a completed iteration is shown above and can be read by knowing the following: Measuring ...
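The forecasting use of a burn down chart can be sketched in a few lines. Assume one reading of outstanding work (hours or story points) per day. `ideal_line` draws the straight reference line from the starting backlog to zero. `forecast_days_remaining` extrapolates the average burn rate observed so far. Both function names and the sample readings are hypothetical. Averaging the burn rate is only one simple forecasting choice; teams may instead use recent velocity or a regression line.

```python
from math import ceil
from typing import List, Optional


def ideal_line(total: float, days: int) -> List[float]:
    """Ideal burn down: remaining work falls linearly from `total` to zero over `days`."""
    return [total - total * day / days for day in range(days + 1)]


def forecast_days_remaining(remaining: List[float]) -> Optional[int]:
    """Forecast days until outstanding work reaches zero at the average burn rate so far.

    `remaining` holds one value of outstanding work per day, starting with the
    value at the beginning of the iteration. Returns None if no net progress was made.
    """
    if len(remaining) < 2:
        return None
    elapsed_days = len(remaining) - 1
    burn_rate = (remaining[0] - remaining[-1]) / elapsed_days
    if burn_rate <= 0:
        return None
    return ceil(remaining[-1] / burn_rate)


actual = [40, 35, 31, 24, 20]  # hypothetical daily readings of outstanding story points
print(ideal_line(40, 8))
print(forecast_days_remaining(actual))
```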

Use Case

In software and systems engineering, the phrase use case is a polyseme with two senses:

1. A usage scenario for a piece of software; often used in the plural to suggest situations where a piece of software may be useful.
2. A potential scenario in which a system receives an external request (such as user input) and responds to it.

This article discusses the latter sense. A use case is a list of actions or event steps typically defining the interactions between a role (known in the Unified Modeling Language (UML) as an actor) and a system to achieve a goal. The actor can be a human or another external system. In systems engineering, use cases are used at a higher level than within software engineering, often representing missions or stakeholder goals. The detailed requirements may then be captured in the Systems Modeling Language (SysML) or as contractual statements.

In 1987, Ivar Jacobson presented the first article on use cases at the OOPSLA'87 confer ...

Putnam Model

The Putnam model is an empirical software effort estimation model. The original paper by Lawrence H. Putnam, published in 1978, is seen as pioneering work in the field of software process modelling. As a group, empirical models work by collecting software project data (for example, effort and size) and fitting a curve to the data using regression analysis. Future effort estimates are made by providing size and calculating the associated effort using the equation that fit the original data (usually with some error).

Created by Lawrence Putnam, Sr., the Putnam model describes the time and effort required to finish a software project of specified size. SLIM (Software LIfecycle Management) is the name Putnam gave to the proprietary suite of tools that his company, QSM, Inc., has developed based on his model. It is one of the earliest models of this type, and is among the most widely used. Closely related software parametric mode ...
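The general empirical approach described above has three steps: collect size and effort data, fit a curve, then predict effort from size. The sketch below illustrates it with the common power-law form effort = a · size^b, fitted by ordinary least squares on the log-transformed data. This is a generic illustration and not Putnam's own equation, which also brings development time into the relationship. The function names and the historical data are hypothetical.

```python
import math
from typing import Sequence, Tuple


def fit_power_law(sizes: Sequence[float], efforts: Sequence[float]) -> Tuple[float, float]:
    """Fit effort = a * size**b by least squares on log(effort) = log(a) + b*log(size)."""
    xs = [math.log(s) for s in sizes]
    ys = [math.log(e) for e in efforts]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx                       # slope in log-log space = size exponent
    a = math.exp(mean_y - b * mean_x)   # intercept transformed back to a multiplier
    return a, b


def predict_effort(a: float, b: float, size: float) -> float:
    """Apply the fitted curve to a new project size."""
    return a * size ** b


# Hypothetical historical projects: size in KLOC, effort in person-months.
history_sizes = [12, 25, 40, 70, 110]
history_efforts = [30, 70, 110, 210, 340]
a, b = fit_power_law(history_sizes, history_efforts)
print(f"effort ~= {a:.2f} * size^{b:.2f}; 50 KLOC -> {predict_effort(a, b, 50):.0f} PM")
```

Fitting in log space treats errors as multiplicative, which suits effort data whose spread tends to grow with project size. The predictions still carry the residual error of the fit.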

Project Management Software

Project management software (PMS) can help plan, organize, and manage resource tools and develop resource estimates. Depending on its sophistication, it can handle estimation and planning, scheduling, cost control and budget management, resource allocation, collaboration, communication, decision-making, quality management, time management, and documentation or administration systems. Numerous PC- and browser-based project management software and contract management software products and services are available.

The first historically relevant year for the development of project management software is 1896, marked by the introduction of the Harmonogram. Polish economist Karol Adamiecki attempted to display task development in a floating chart, and laid the foundation for project management software as it is today. In 1912, Henry Gantt replaced the Harmonogram with the more advanced Gantt chart, a scheduling ...

Work Breakdown Structure

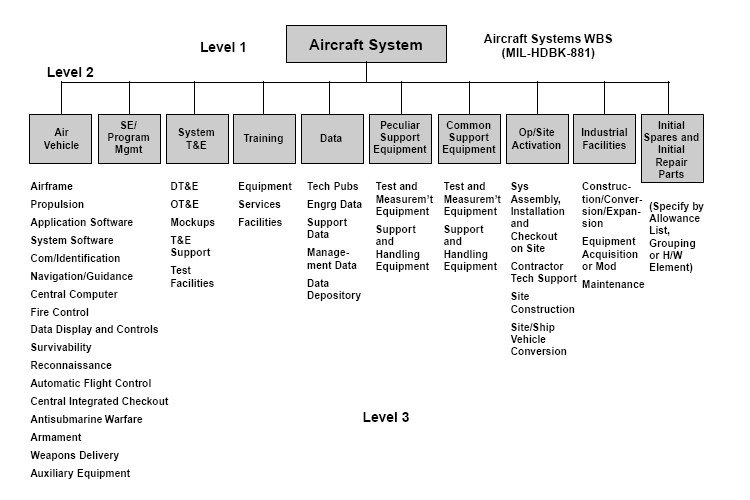

A work-breakdown structure (WBS) in project management and systems engineering is a deliverable-oriented breakdown of a project into smaller components. A work breakdown structure is a key project deliverable that organizes the team's work into manageable sections. The Project Management Body of Knowledge (PMBOK 5) defines the work-breakdown structure as a "hierarchical decomposition of the total scope of work to be carried out by the project team to accomplish the project objectives and create the required deliverables." A work-breakdown structure element may be a product, data, service, or any combination of these. A WBS also provides the necessary framework for detailed cost estimation and control while providing guidance for schedule development and control (Booz, Allen & Hamilton, "Earned Value Management Tutorial Module 2: Work Breakdown Structure").
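The way a WBS supports detailed cost estimation can be sketched as a tree. Costs are estimated for the lowest-level elements (work packages) and rolled up, so each parent's cost is the sum of its children's costs. The `WBSElement` class, the outline numbering, and the example project and cost figures below are all hypothetical illustrations.

```python
from dataclasses import dataclass, field
from typing import Iterator, List


@dataclass
class WBSElement:
    code: str                 # outline number such as "1.2.1"
    name: str
    cost: float = 0.0         # estimated cost; only used for leaf elements (work packages)
    children: List["WBSElement"] = field(default_factory=list)

    def total_cost(self) -> float:
        """Roll costs up the hierarchy: a parent's cost is the sum of its children's."""
        if not self.children:
            return self.cost
        return sum(child.total_cost() for child in self.children)

    def outline(self, depth: int = 0) -> Iterator[str]:
        """Yield an indented outline of the decomposition with rolled-up costs."""
        yield f"{'  ' * depth}{self.code} {self.name}: {self.total_cost():,.0f}"
        for child in self.children:
            yield from child.outline(depth + 1)


# Hypothetical project decomposition and cost figures, for illustration only.
project = WBSElement("1", "Web shop", children=[
    WBSElement("1.1", "Requirements", 8_000),
    WBSElement("1.2", "Software", children=[
        WBSElement("1.2.1", "Product catalogue", 15_000),
        WBSElement("1.2.2", "Checkout", 20_000),
    ]),
    WBSElement("1.3", "Testing", 10_000),
])
print("\n".join(project.outline()))
```

Only leaf elements carry estimates in this sketch. Storing a separate figure on a parent would let the two numbers drift apart, whereas computing the roll-up keeps the hierarchy consistent.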

Weighted Micro Function Points

Weighted Micro Function Points (WMFP) is a modern software sizing algorithm that succeeds established scientific methods such as COCOMO, COSYSMO, maintainability index, cyclomatic complexity, function points, and Halstead complexity. It is claimed to produce more accurate results than traditional software sizing methodologies while requiring less configuration and knowledge from the end user, as most of the estimation is based on automatic measurements of existing source code. Whereas many earlier measurement methods use source lines of code (SLOC) to measure software size, WMFP uses a parser to understand the source code, breaking it down into micro functions and deriving several code complexity and volume metrics, which are then dynamically interpolated into a final effort score. In addition to the waterfall software development life cycle, WMFP is also compatible with newer methodologies, such as Six Sigma, Boehm spiral, and Agile (AUP/Lean/XP/DSDM) meth ...
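The general idea of parsing source code, splitting it into functions, deriving volume and complexity metrics, and combining them into one score can be illustrated with Python's standard `ast` module. This is a toy sketch and not the WMFP algorithm. WMFP's metrics and weights are not given here, and the decision-point rules and the weights in `toy_effort_score` are arbitrary, hypothetical choices. Nested functions are also counted inside their enclosing function, which a real tool would handle more carefully.

```python
import ast
from typing import Dict

# Branching constructs counted as decision points in this toy complexity measure.
BRANCH_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.IfExp, ast.ExceptHandler)


def decision_points(node: ast.AST) -> int:
    """Approximate number of decision points (cyclomatic complexity minus one)."""
    count = 0
    for child in ast.walk(node):
        if isinstance(child, BRANCH_NODES):
            count += 1
        elif isinstance(child, ast.BoolOp):
            # `a and b and c` adds one extra path per additional operand.
            count += len(child.values) - 1
    return count


def function_metrics(source: str) -> Dict[str, Dict[str, int]]:
    """Split Python source into functions and compute simple volume/complexity metrics."""
    metrics = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # ast.walk includes the def statement itself, hence the -1.
            statements = sum(isinstance(n, ast.stmt) for n in ast.walk(node)) - 1
            metrics[node.name] = {
                "statements": statements,
                "complexity": 1 + decision_points(node),
            }
    return metrics


def toy_effort_score(metrics: Dict[str, Dict[str, int]],
                     w_statements: float = 1.0, w_complexity: float = 2.0) -> float:
    """Combine metrics with arbitrary, hypothetical weights (not WMFP's weighting)."""
    return sum(w_statements * m["statements"] + w_complexity * m["complexity"]
               for m in metrics.values())


SAMPLE = '''
def clamp_sum(x):
    if x > 0 and x < 10:
        return 1
    for i in range(x):
        x -= i
    return x
'''
print(function_metrics(SAMPLE), toy_effort_score(function_metrics(SAMPLE)))
```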

Analogy

Analogy (from Greek analogia, "proportion", from ana- "upon, according to" [also "against", "anew"] + logos "ratio" [also "word, speech, reckoning"]) is a cognitive process of transferring information or meaning from a particular subject (the analog, or source) to another (the target), or a linguistic expression corresponding to such a process. In a narrower sense, analogy is an inference or an argument from one particular to another particular, as opposed to deduction, induction, and abduction, in which at least one of the premises, or the conclusion, is general rather than particular in nature. The term analogy can also refer to the relation between the source and the target themselves, which is often (though not always) a similarity, as in the biological notion of analogy. Analogy pla ...

Object Point

Object points are an approach used in software development effort estimation under some models such as COCOMO II. Object points are a way of estimating software size, similar to source lines of code (SLOC) or function points. They are not necessarily related to objects in object-oriented programming; the objects referred to include screens, reports, and modules of the language. The number of raw objects and the complexity of each are estimated, and a weighted total object-point count is then computed and used as the basis for effort estimates.

See also
* COCOMO (Constructive Cost Model)
* Comparison of development estimation software
* Function point
* Software development effort estimation
* Software Sizing
* Source line ...
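The object-point procedure described above has four steps: count raw objects, weight each by its complexity, sum the weights, and convert the total to effort. A sketch follows, assuming the weights commonly tabulated for the COCOMO II application composition model: screens 1/2/3, reports 2/5/8, and 10 per 3GL component. It also assumes that model's productivity table of 4 to 50 new object points per person-month and its adjustment for the percentage of reuse. The function name and the example counts are hypothetical.

```python
from typing import Dict, Tuple

# Object-point complexity weights for the COCOMO II application composition model,
# as commonly tabulated; 3GL components are rated only as "difficult".
WEIGHTS: Dict[str, Dict[str, int]] = {
    "screen": {"simple": 1, "medium": 2, "difficult": 3},
    "report": {"simple": 2, "medium": 5, "difficult": 8},
    "3gl_component": {"difficult": 10},
}

# Productivity in new object points per person-month, by developer experience
# and ICASE maturity rating.
PRODUCTIVITY: Dict[str, int] = {
    "very low": 4, "low": 7, "nominal": 13, "high": 25, "very high": 50,
}


def object_point_estimate(counts: Dict[Tuple[str, str], int],
                          reuse_percent: float = 0.0,
                          rating: str = "nominal") -> Tuple[float, float, float]:
    """Return (object points, new object points, effort in person-months).

    `counts` maps (object type, complexity level) to the number of raw objects.
    """
    object_points = sum(WEIGHTS[kind][level] * n for (kind, level), n in counts.items())
    new_object_points = object_points * (100 - reuse_percent) / 100  # discount reused work
    effort = new_object_points / PRODUCTIVITY[rating]
    return object_points, new_object_points, effort


# Hypothetical application: 4 simple screens, 2 medium screens, 1 difficult report,
# and 1 3GL module, with 20% of the work expected to come from reuse.
counts = {
    ("screen", "simple"): 4,
    ("screen", "medium"): 2,
    ("report", "difficult"): 1,
    ("3gl_component", "difficult"): 1,
}
op, nop, pm = object_point_estimate(counts, reuse_percent=20)
print(f"{op} object points, {nop:.1f} new object points, {pm:.1f} person-months")
```

Here 26 object points shrink to 20.8 new object points after the 20% reuse discount. At nominal productivity that corresponds to about 1.6 person-months.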