
Significance Analysis Of Microarrays

Microarray analysis techniques are used to interpret the data generated by experiments on DNA (gene chip analysis), RNA, and protein microarrays. These arrays allow researchers to investigate the expression state of a large number of genes (in many cases, an organism's entire genome) in a single experiment. Such experiments can generate very large amounts of data, allowing researchers to assess the overall state of a cell or organism. Data in such large quantities is difficult, if not impossible, to analyze without the help of computer programs.

Introduction

Microarray data analysis is the final step in reading and processing data produced by a microarray chip. Samples undergo various processes, including purification and scanning using the microchip, which then produces a large amount of data that requires processing via computer software. The analysis involves several distinct steps, as outlined in the image below. Changing any one of the steps will change the outcome of the analysis, so t ...
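The heading names Significance Analysis of Microarrays (SAM), whose core per-gene score is a "relative difference" d = (mean_B - mean_A) / (s + s0). Here s is the pooled standard error of the difference between the two group means, and s0 is a small positive constant that stops genes with tiny variance from dominating the ranking. The Python sketch below computes that score for two-group data and ranks genes by |d|. It is a minimal illustration under stated assumptions: s0 is passed in as a fixed number (SAM itself chooses it from the data), the permutation step SAM uses to estimate the false discovery rate is omitted, and the function names and dictionary input format are hypothetical.

```python
import math
from statistics import fmean


def sam_relative_difference(group_a, group_b, s0=0.0):
    """Relative difference d = (mean_b - mean_a) / (s + s0) for one gene.

    s is the pooled standard error of the difference in group means.
    s0 is a fixed, user-supplied fudge factor here (an assumption:
    SAM itself chooses s0 from the data).
    """
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two replicates")
    mean_a, mean_b = fmean(group_a), fmean(group_b)
    # Sum of squared deviations of each sample from its own group mean.
    ss = sum((x - mean_a) ** 2 for x in group_a) + sum((x - mean_b) ** 2 for x in group_b)
    scale = (1 / n_a + 1 / n_b) / (n_a + n_b - 2)
    s = math.sqrt(scale * ss)
    denom = s + s0
    if denom == 0:
        raise ValueError("zero variance and s0 = 0: d is undefined")
    return (mean_b - mean_a) / denom


def rank_genes(expression, s0=0.0):
    """Score every gene and sort by |d|, largest first.

    expression: hypothetical input format, a dict mapping
    gene name -> (values in group A, values in group B).
    """
    scores = [(gene, sam_relative_difference(a, b, s0)) for gene, (a, b) in expression.items()]
    return sorted(scores, key=lambda item: abs(item[1]), reverse=True)
```

With s0 = 0 the score reduces to the ordinary two-sample t-statistic with pooled variance. A positive s0 shrinks every |d|, and it shrinks the scores of low-variance genes the most.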

Microarray

A microarray is a multiplex lab-on-a-chip. Its purpose is to simultaneously detect the expression of thousands of genes from a sample (e.g. from a tissue). It is a two-dimensional array on a solid substrate, usually a glass slide or silicon thin-film cell, that assays (tests) large amounts of biological material using high-throughput screening with miniaturized, multiplexed, and parallel processing and detection methods. The concept and methodology of microarrays were first introduced and illustrated in antibody microarrays (also referred to as antibody matrix) by Tse Wen Chang in 1983, in a scientific publication and a series of patents. The "gene chip" industry started to grow significantly after the 1995 Science magazine article by the Ron Davis and Pat Brown labs at Stanford University. With the establishment of companies such as Affymetrix, Agilent, Applied Microarrays, Arrayjet, Illumina, and others, the technology of DNA microarrays has become the most sophistic ...

Pearson Product-moment Correlation Coefficient

In statistics, the Pearson correlation coefficient (PCC), also known as Pearson's r, the Pearson product-moment correlation coefficient (PPMCC), the bivariate correlation, or colloquially simply the correlation coefficient, is a measure of linear correlation between two sets of data. It is the ratio between the covariance of two variables and the product of their standard deviations; thus, it is essentially a normalized measurement of the covariance, such that the result always has a value between −1 and 1. As with covariance itself, the measure can only reflect a linear correlation of variables and ignores many other types of relationships or correlations. As a simple example, one would expect the age and height of a sample of teenagers from a high school to have a Pearson correlation coefficient significantly greater than 0 but less than 1 (as 1 would represent an unrealistically perfect correlation).

Naming and history

It was developed by Kar ...
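The definition above (covariance divided by the product of the standard deviations) translates directly into code. This pure-Python sketch uses an illustrative function name, pearson_r. It also demonstrates the "linear only" caveat: for y = x² sampled symmetrically around zero, r is exactly 0 even though y is a deterministic function of x.

```python
import math


def pearson_r(xs, ys):
    """Pearson's r: covariance divided by the product of standard deviations.

    The 1/n (or 1/(n-1)) factors in the covariance and in both standard
    deviations cancel, so plain sums of deviations are enough.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    dx = [x - mean_x for x in xs]
    dy = [y - mean_y for y in ys]
    cov = sum(a * b for a, b in zip(dx, dy))
    norm = math.sqrt(sum(a * a for a in dx)) * math.sqrt(sum(b * b for b in dy))
    if norm == 0:
        raise ValueError("r is undefined when either variable is constant")
    return cov / norm


print(pearson_r([-2, -1, 0, 1, 2], [4, 1, 0, 1, 4]))  # 0.0: perfect but non-linear dependence
```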

Phenotype

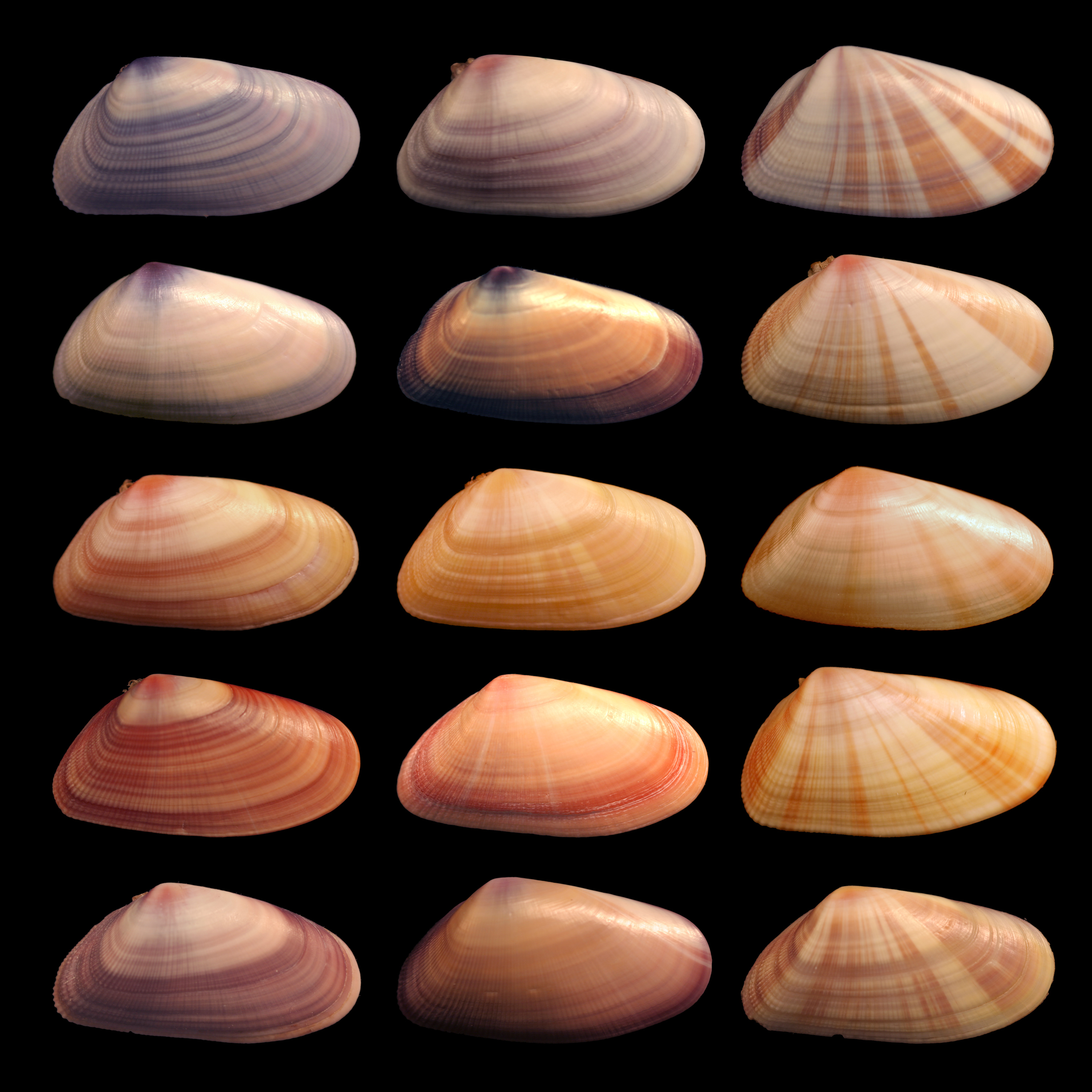

In genetics, the phenotype is the set of observable characteristics or traits of an organism. The term covers the organism's morphology (its physical form and structure), its developmental processes, its biochemical and physiological properties, its behavior, and the products of behavior. An organism's phenotype results from two basic factors: the expression of the organism's genetic code (its genotype) and the influence of environmental factors. Both factors may interact, further affecting the phenotype. When two or more clearly different phenotypes exist in the same population of a species, the species is called polymorphic. A well-documented example of polymorphism is Labrador Retriever coloring: while the coat color depends on many genes, it is clearly seen in the environment as yellow, black, and brown. Richard Dawkins in 1978, and then again in his 1982 book The Extended Phenotype, suggested that one can regard bird nests and other built structures such as ...

R Programming Language

R is a programming language for statistical computing and graphics supported by the R Core Team and the R Foundation for Statistical Computing. Created by statisticians Ross Ihaka and Robert Gentleman, R is used among data miners, bioinformaticians, and statisticians for data analysis and for developing statistical software. Users have created packages to augment the functions of the R language. According to user surveys and studies of scholarly literature databases, R is one of the most commonly used programming languages in data mining. At the time of writing, R ranked 12th in the TIOBE index, a measure of programming language popularity, in which the language peaked in 8th place in August 2020. The official R software environment is free, open-source software that is part of the GNU Project and is available under the GNU General Public License. It is written primarily in C, Fortran, and R itself (partially self-hosting). Precompiled executables are provided for various operating systems. R ha ...

Bioconductor

Bioconductor is a free, open-source, open-development software project for the analysis and comprehension of genomic data generated by wet-lab experiments in molecular biology. Bioconductor is based primarily on the R statistical programming language but does contain contributions in other programming languages. It has two releases each year that follow the semiannual releases of R. At any one time there is a release version, which corresponds to the released version of R, and a development version, which corresponds to the development version of R. Most users will find the release version appropriate for their needs. In addition, many genome annotation packages are available that are mainly, but not solely, oriented towards different types of microarrays. While computational methods continue to be developed to interpret biological data, the Bioconductor project is an open-source software repository that hosts a wide range of statistical tools developed in the R progra ...

Anduril (Workflow Engine)

Anduril is an open-source, component-based workflow framework for scientific data analysis developed at the Systems Biology Laboratory, University of Helsinki. Anduril is designed to enable systematic, flexible, and efficient data analysis, particularly for high-throughput experiments in biomedical research. The workflow system currently provides components for several types of analysis, such as sequencing, gene expression, SNP, ChIP-on-chip, comparative genomic hybridization, and exon microarray analysis, as well as cytometry and cell imaging analysis.

Architecture and features

A workflow is a series of processing steps connected together so that the output of one step is used as the input of another. Processing steps implement data analysis tasks such as data importing, statistical tests, and report generation. In Anduril, processing steps are implemented using components, which are reusable pieces of executable code that can be written in any programming language. Components are ...
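The basic idea of a workflow, in which each step's output becomes the next step's input, can be illustrated in a few lines of Python. This is a generic, hypothetical sketch and not Anduril's API or component format. It only models a linear chain, whereas real workflow engines typically allow more general step graphs. The three example steps loosely mirror the tasks named above: data importing, a statistical summary, and report generation.

```python
from typing import Any, Callable, List, Tuple

# A hypothetical "component": a name plus a function from one input to one output.
Step = Tuple[str, Callable[[Any], Any]]


def run_workflow(steps: List[Step], data: Any) -> Tuple[Any, List[str]]:
    """Run steps in order, passing each step's output to the next step."""
    executed = []
    for name, func in steps:
        data = func(data)
        executed.append(name)
    return data, executed


def import_data(text: str) -> List[float]:
    """Data importing: parse comma-separated numbers."""
    return [float(v) for v in text.split(",")]


def summarize(values: List[float]) -> dict:
    """A stand-in statistical step: count and mean."""
    return {"n": len(values), "mean": sum(values) / len(values)}


def report(stats: dict) -> str:
    """Report generation: format the summary as text."""
    return f"n={stats['n']} mean={stats['mean']:.2f}"


result, executed = run_workflow(
    [("import", import_data), ("summarize", summarize), ("report", report)],
    "1,2,3",
)
print(result)  # n=3 mean=2.00
print(executed)  # ['import', 'summarize', 'report']
```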

GenMAPP

GenMAPP (Gene Map Annotator and Pathway Profiler) is a free, open-source bioinformatics software tool designed to visualize and analyze genomic data in the context of pathways (metabolic, signaling), connecting gene-level datasets to biological processes and disease. First created in 2000, GenMAPP is developed by an open-source team based in an academic research laboratory. GenMAPP maintains databases of gene identifiers and collections of pathway maps in addition to visualization and analysis tools. Together with other public resources, GenMAPP aims to provide the research community with tools to gain insight into biology through the integration of data types ranging from genes to proteins to pathways to disease.

History

GenMAPP was first created in 2000 as a prototype software tool in the laboratory of Bruce Conklin at the J. David Gladstone Institutes in San Francisco and continues to be developed in the same non-profit, academic research environment. The first release versi ...

K-means

k-means clustering is a method of vector quantization, originally from signal processing, that aims to partition n observations into k clusters in which each observation belongs to the cluster with the nearest mean (cluster center or cluster centroid), which serves as a prototype of the cluster. This results in a partitioning of the data space into Voronoi cells. k-means clustering minimizes within-cluster variances (squared Euclidean distances), but not regular Euclidean distances, which would be the more difficult Weber problem: the mean optimizes squared errors, whereas only the geometric median minimizes Euclidean distances. For instance, better Euclidean solutions can be found using k-medians and k-medoids. The problem is computationally difficult (NP-hard); however, efficient heuristic algorithms converge quickly to a local optimum. These are usually similar to the expectation-maximizati ...
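The usual heuristic for k-means is Lloyd's algorithm. It alternates between assigning each point to its nearest center and moving each center to the mean of its assigned points, and it stops when the centers no longer change. The sketch below is a minimal, unoptimized version. It assumes points are equal-length tuples of numbers and that the initial centers are drawn at random from the data (a simple choice; smarter seeding schemes exist). It only reaches a local optimum, so on harder data the result can depend on the seed.

```python
import math
import random


def _nearest(point, centers):
    """Index of the center closest to point (Euclidean distance)."""
    return min(range(len(centers)), key=lambda j: math.dist(point, centers[j]))


def kmeans(points, k, max_iter=100, seed=0):
    """Lloyd's heuristic for k-means; returns (centers, labels)."""
    if not 1 <= k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    rng = random.Random(seed)
    centers = [tuple(p) for p in rng.sample(points, k)]  # random initial centers
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster with the nearest center.
        labels = [_nearest(p, centers) for p in points]
        # Update step: move each center to the mean of its members.
        new_centers = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centers.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centers.append(centers[j])  # keep an empty cluster's old center
        if new_centers == centers:
            break  # converged: no center moved
        centers = new_centers
    labels = [_nearest(p, centers) for p in points]
    return centers, labels
```

Nearest-center assignment by Euclidean distance is the same as by squared Euclidean distance, so each step can only lower (or keep) the within-cluster sum of squares that k-means minimizes.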

K-medoids

The k-medoids problem is a clustering problem similar to k-means. The name was coined by Leonard Kaufman and Peter J. Rousseeuw with their PAM algorithm. Both the k-means and k-medoids algorithms are partitional (breaking the dataset up into groups) and attempt to minimize the distance between points labeled to be in a cluster and a point designated as the center of that cluster. In contrast to the k-means algorithm, k-medoids chooses actual data points as centers (medoids or exemplars), and thereby allows for greater interpretability of the cluster centers than k-means, where the center of a cluster is not necessarily one of the input data points (it is the mean of the points in the cluster). Furthermore, k-medoids can be used with arbitrary dissimilarity measures, whereas k-means generally requires Euclidean distance for efficient solutions. Because k-medoids minimizes a sum of pairwise dissimilarities instead of a sum of squared Euclidean distances, it is more robust to noi ...
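Because k-medoids only needs pairwise dissimilarities, it can be sketched with any distance function passed in as a parameter. The version below is the simple alternating heuristic (sometimes called Voronoi iteration), not Kaufman and Rousseeuw's PAM swap search. It assigns every point to its nearest medoid, then replaces each medoid with the cluster member that has the smallest total dissimilarity to the other members. The naive initialization (the first k points) and the function name are assumptions for illustration.

```python
def k_medoids(points, k, dissimilarity, max_iter=100):
    """Alternating k-medoids heuristic; returns (medoid indices, labels)."""
    n = len(points)
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and the number of points")
    # Precompute all pairwise dissimilarities (any function works, not just Euclidean).
    dist = [[dissimilarity(p, q) for q in points] for p in points]

    def assign(medoids):
        # Each point joins the cluster of its least-dissimilar medoid.
        return [min(range(k), key=lambda c: dist[i][medoids[c]]) for i in range(n)]

    medoids = list(range(k))  # naive initialization: the first k points (an assumption)
    for _ in range(max_iter):
        labels = assign(medoids)
        new_medoids = []
        for c in range(k):
            members = [i for i in range(n) if labels[i] == c]
            if not members:
                new_medoids.append(medoids[c])  # keep the medoid of an empty cluster
                continue
            # New medoid: the member with the smallest total dissimilarity to its cluster.
            new_medoids.append(min(members, key=lambda m: sum(dist[m][j] for j in members)))
        if new_medoids == medoids:
            break  # converged: no medoid changed
        medoids = new_medoids
    return medoids, assign(medoids)


def manhattan(p, q):
    """Example non-Euclidean dissimilarity: L1 (city-block) distance."""
    return sum(abs(a - b) for a, b in zip(p, q))
```

The medoids returned are indices into the input, so each cluster center is an actual data point. Passing math.dist instead of manhattan gives Euclidean k-medoids.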

Centroid

In mathematics and physics, the centroid, also known as the geometric center or center of figure, of a plane figure or solid figure is the arithmetic mean position of all the points in the surface of the figure. The same definition extends to any object in n-dimensional Euclidean space. In geometry, one often assumes uniform mass density, in which case the barycenter or center of mass coincides with the centroid. Informally, it can be understood as the point at which a cutout of the shape (with uniformly distributed mass) could be perfectly balanced on the tip of a pin. In physics, if variations in gravity are considered, then a center of gravity can be defined as the weighted mean of all points weighted by their specific weight. In geography, the centroid of a radial projection of a region of the Earth's surface to sea level is the region's geographical center.

History

The term "centroid" is of recent coinage (1814). It is used as a substitute for th ...
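For a finite set of points the centroid is simply the coordinate-wise mean. For a plane figure with uniform density bounded by a simple (non-self-intersecting) polygon, the area centroid follows from the shoelace formula: A = ½ Σ (x_i y_{i+1} − x_{i+1} y_i) and C_x = (1 / 6A) Σ (x_i + x_{i+1})(x_i y_{i+1} − x_{i+1} y_i), with C_y defined analogously. The Python sketch below assumes the vertices are listed in order (either orientation), with the last vertex implicitly joined to the first. The function names are illustrative.

```python
def point_set_centroid(points):
    """Centroid of a finite point set: the arithmetic mean of each coordinate."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def polygon_centroid(vertices):
    """Area centroid of a simple polygon via the shoelace formula."""
    n = len(vertices)
    if n < 3:
        raise ValueError("a polygon needs at least three vertices")
    area2 = 0.0  # twice the signed area
    cx = cy = 0.0
    for i in range(n):
        x0, y0 = vertices[i]
        x1, y1 = vertices[(i + 1) % n]  # wrap around to close the polygon
        cross = x0 * y1 - x1 * y0
        area2 += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    if area2 == 0:
        raise ValueError("degenerate polygon with zero area")
    # C = sum / (6A) = sum / (3 * area2); the sign of the area cancels out.
    return cx / (3 * area2), cy / (3 * area2)


print(polygon_centroid([(0, 0), (3, 0), (0, 3)]))  # (1.0, 1.0): a triangle's centroid is the vertex mean
```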

UPGMA

UPGMA (unweighted pair group method with arithmetic mean) is a simple agglomerative (bottom-up) hierarchical clustering method. The method is generally attributed to Sokal and Michener. The UPGMA method is similar to its weighted variant, the WPGMA method. Note that the term "unweighted" indicates that all distances contribute equally to each average that is computed; it does not refer to the math by which that average is obtained. Thus the simple averaging in WPGMA produces a weighted result, and the proportional averaging in UPGMA produces an unweighted result (see the working example).

Algorithm

The UPGMA algorithm constructs a rooted tree (dendrogram) that reflects the structure present in a pairwise similarity matrix (or a dissimilarity matrix). At each step, the nearest two clusters are combined into a higher-level cluster. The distance between any two clusters $\mathcal{A}$ and $\mathcal{B}$, of sizes (i.e., cardinalities) $|\mathcal{A}|$ and $|\mathcal{B}|$, is taken to be the average of all distances d(x, ...
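The merge rule can be sketched directly. When clusters A and B merge, the distance from the new cluster to any other cluster C is the size-weighted mean (|A|·d(A, C) + |B|·d(B, C)) / (|A| + |B|). This equals the plain average over all leaf pairs, and that proportional averaging is what makes UPGMA "unweighted". The Python sketch below takes a full symmetric distance matrix. It returns a Newick-style topology string (without branch lengths) and the list of merges with their distances. In the resulting ultrametric tree each merge node sits at height d/2. The function name and output format are illustrative assumptions.

```python
def upgma(names, matrix):
    """UPGMA clustering of a symmetric distance matrix.

    Returns (topology, merges): a Newick-style string without branch
    lengths, and (left_label, right_label, distance) for each join.
    """
    n = len(names)
    if n == 0:
        raise ValueError("need at least one item")
    # Active clusters: id -> (Newick-style label, number of leaves).
    clusters = {i: (names[i], 1) for i in range(n)}
    # Pairwise cluster distances, keyed by (smaller id, larger id).
    dist = {(i, j): matrix[i][j] for i in range(n) for j in range(i + 1, n)}
    next_id = n
    merges = []
    while len(clusters) > 1:
        # Find the closest pair of active clusters.
        (a, b), d_ab = min(dist.items(), key=lambda item: item[1])
        label_a, size_a = clusters.pop(a)
        label_b, size_b = clusters.pop(b)
        # Size-weighted mean = average over all leaf pairs (the "unweighted" rule).
        new_dist = {}
        for c in clusters:
            d_ac = dist[(min(a, c), max(a, c))]
            d_bc = dist[(min(b, c), max(b, c))]
            new_dist[c] = (size_a * d_ac + size_b * d_bc) / (size_a + size_b)
        # Drop distances involving the merged clusters, then add the new ones.
        dist = {key: v for key, v in dist.items() if a not in key and b not in key}
        for c, v in new_dist.items():
            dist[(c, next_id)] = v  # c < next_id, so the key stays ordered
        clusters[next_id] = (f"({label_a},{label_b})", size_a + size_b)
        merges.append((label_a, label_b, d_ab))
        next_id += 1
    root_label = next(iter(clusters.values()))[0]
    return root_label, merges


matrix = [
    [0, 2, 6, 10],
    [2, 0, 6, 10],
    [6, 6, 0, 4],
    [10, 10, 4, 0],
]
tree, merges = upgma(["a", "b", "c", "d"], matrix)
print(tree)  # ((a,b),(c,d))
```

In this example the final merge distance is 8, the mean of the four cross-pair distances 6, 10, 6 and 10.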