
Shannon–Fano–Elias Coding

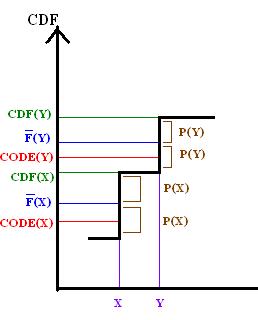

In information theory, Shannon–Fano–Elias coding is a precursor to arithmetic coding, in which probabilities are used to determine codewords. It is named for Claude Shannon, Robert Fano, and Peter Elias.

Algorithm description

Given a discrete random variable ''X'' of ordered values to be encoded, let p(x) be the probability for any ''x'' in ''X''. Define a function

:\bar F(x) = \sum_{x_i < x} p(x_i) + \frac 12 p(x)

Algorithm:

:For each ''x'' in ''X'',
::Let ''Z'' be the binary expansion of \bar F(x).
::Choose the length of the encoding of ''x'', L(x), to be the integer \left\lceil \log_2 \frac{1}{p(x)} \right\rceil + 1.
::Choose the encoding of ''x'', code(x), to be the first L(x) most significant bits after the binary point of ''Z''.

Example

Let ''X'' = \{A, B, C, D\}, with probabilities ''p'' = \{1/3, 1/4, 1/6, 1/4\}.

:For ''A''
::\bar F(A) = \frac 12 p(A) = \frac 12 \cdot \frac 13 = 0.1666\ldots
::In binary, ''Z''(''A'') = 0.0010101010...
::L(A) = \left\lceil \log_2 \frac{1}{1/3} \right\rceil + 1 = \mathbf 3
::co ...
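The encoding steps described above can be sketched in Python. This is a minimal illustration rather than a reference implementation: the function name `sfe_code` is hypothetical, and the four-symbol distribution is assumed for the sake of the example (it gives ''A'' the probability 1/3 used in the worked example). Exact fractions are used so that the binary expansion is not affected by floating-point rounding.

```python
from fractions import Fraction
from math import ceil, log2

def sfe_code(probs):
    """Map each symbol to its Shannon-Fano-Elias codeword.

    `probs` is a dict of symbol -> Fraction; its insertion order is
    taken as the ordering of the symbols.
    """
    codes = {}
    cumulative = Fraction(0)              # sum of p(x_i) over earlier symbols
    for symbol, p in probs.items():
        f_bar = cumulative + p / 2        # modified CDF value for this symbol
        length = ceil(log2(float(1 / p))) + 1
        # Extract the first `length` bits after the binary point of f_bar.
        bits = []
        frac = f_bar
        for _ in range(length):
            frac *= 2
            bit = int(frac >= 1)
            bits.append(str(bit))
            frac -= bit
        codes[symbol] = "".join(bits)
        cumulative += p
    return codes

# Assumed example distribution (illustrative, sums to 1).
probs = {
    "A": Fraction(1, 3),
    "B": Fraction(1, 4),
    "C": Fraction(1, 6),
    "D": Fraction(1, 4),
}
codes = sfe_code(probs)
for symbol, word in codes.items():
    print(symbol, word)
```

For ''A'' the sketch produces a three-bit codeword taken from the expansion 0.0010101010..., in agreement with the length L(A) = 3 computed in the example.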

Information Theory

Information theory is the mathematical study of the quantification, storage, and communication of information. The field was established and formalized by Claude Shannon in the 1940s, though early contributions were made in the 1920s through the works of Harry Nyquist and Ralph Hartley. It is at the intersection of electronic engineering, mathematics, statistics, computer science, neurobiology, physics, and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (which has two equally likely outcomes) provides less information (lower entropy, less uncertainty) than identifying the outcome from a roll of a die (which has six equally likely outcomes). Some other important measu ...
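The coin and die comparison can be made concrete with a short worked calculation. It assumes base-2 logarithms, so entropy is measured in bits, and uses the symbol H for entropy:

```latex
H(\text{coin}) = -\sum_{i=1}^{2} \tfrac12 \log_2 \tfrac12 = \log_2 2 = 1 \text{ bit},
\qquad
H(\text{die}) = -\sum_{i=1}^{6} \tfrac16 \log_2 \tfrac16 = \log_2 6 \approx 2.585 \text{ bits}.
```

Learning the outcome of the die roll therefore conveys about 2.6 times as much information as learning the outcome of the coin flip.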

Arithmetic Coding

Arithmetic coding (AC) is a form of entropy encoding used in lossless data compression. Normally, a string of characters is represented using a fixed number of bits per character, as in the ASCII code. When a string is converted to arithmetic encoding, frequently used characters are stored with fewer bits and less frequently occurring characters with more bits, resulting in fewer bits used in total. Arithmetic coding differs from other forms of entropy encoding, such as Huffman coding, in that rather than separating the input into component symbols and replacing each with a code, it encodes the entire message into a single number, an arbitrary-precision fraction ''q'', where 0.0 \le q < 1.0. It represents the current information as a range, defined by two numbers. A recent family of entropy coders called asymmetric numeral systems allows for faster imp ...
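The idea of representing a message as a range defined by two numbers can be illustrated with a small sketch. It is a simplification under stated assumptions: the function name `encode_interval` and the three-symbol model are hypothetical, exact fractions stand in for arbitrary-precision arithmetic, and practical details such as message termination and renormalization are left out.

```python
from fractions import Fraction

def encode_interval(message, probs):
    """Narrow the interval [low, high) once per symbol.

    Any fraction q inside the returned interval identifies the message,
    given the same model and the message length.
    """
    # Lower bound of each symbol's slice of [0, 1), in dict order.
    starts = {}
    total = Fraction(0)
    for symbol, p in probs.items():
        starts[symbol] = total
        total += p

    low, width = Fraction(0), Fraction(1)
    for symbol in message:
        low += width * starts[symbol]   # move to the symbol's sub-interval
        width *= probs[symbol]          # shrink in proportion to p(symbol)
    return low, low + width

# Assumed model: "a" is twice as likely as "b" or "c".
probs = {"a": Fraction(1, 2), "b": Fraction(1, 4), "c": Fraction(1, 4)}
low, high = encode_interval("abc", probs)
print(low, high)
```

The width of the final interval equals the product of the symbol probabilities, which is why likely messages end up with wide intervals that can be identified with few bits.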

Claude Shannon

Claude Elwood Shannon (April 30, 1916 – February 24, 2001) was an American mathematician, electrical engineer, computer scientist, cryptographer and inventor known as the "father of information theory" and the man who laid the foundations of the Information Age. Shannon was the first to describe the use of Boolean algebra, which is essential to all digital electronic circuits, and helped found artificial intelligence (AI). Roboticist Rodney Brooks declared Shannon the 20th century engineer who contributed the most to 21st century technologies, and mathematician Solomon W. Golomb described his intellectual achievement as "one of the greatest of the twentieth century". At the University of Michigan, Shannon earned a dual degree, graduating with a Bachelor of Science in electrical engineering and another in mathematics, both in 1936. As a 21-year-old master's degree student in electrical engineering at MIT, he wrote the thesis "A Symbolic Analysis of Relay and Switching Circuits", which demonstrated that electric ...

Robert Fano

Roberto Mario "Robert" Fano (11 November 1917 – 13 July 2016) was an Italian-American computer scientist and professor of electrical engineering and computer science at the Massachusetts Institute of Technology. He became a student and working lab partner to Claude Shannon, whom he admired zealously and assisted in the early years of information theory.

Early life and education

Fano was born in Turin, Italy in 1917 to a Jewish family and grew up in Turin. Fano's father was the mathematician Gino Fano, his older brother was the physicist Ugo Fano, and Giulio Racah was a cousin. Fano studied engineering as an undergraduate at the School of Engineering of Torino (Politecnico di Torino) until 1939, when he emigrated to the United States as a result of anti-Jewish legislation passed under Benito Mussolini. He received his S.B. in electrical engineering from MIT in 1941, and upon graduation joined the staff of the MIT Radiation Laboratory. After World War II, Fano continued on ...

Peter Elias

Peter Elias (November 23, 1923 – December 7, 2001) was a pioneer in the field of information theory. Born in New Brunswick, New Jersey, he was a member of the Massachusetts Institute of Technology faculty from 1953 to 1991. In 1955, Elias introduced convolutional codes as an alternative to block codes. He also established the binary erasure channel and proposed list decoding of error-correcting codes as an alternative to unique decoding.

Career

His students included Elwyn Berlekamp, and he was a colleague of Claude Shannon. From 1957 until 1966, he served as one of three founding editors of ''Information and Control''.

Awards

Elias received the Claude E. Shannon Award of the IEEE Information Theory Society (1977); the Golden Jubilee Award for Technological Innovation of the IEEE Information Theory Society (1998); and the IEEE Richard W. Hamming Medal (2002).

Family background ...

Discrete Random Variable

A random variable (also called random quantity, aleatory variable, or stochastic variable) is a mathematical formalization of a quantity or object which depends on random events. The term 'random variable' in its mathematical definition refers to neither randomness nor variability but instead is a mathematical function in which
* the domain is the set of possible outcomes in a sample space (e.g. the set \{H, T\} of the possible upper sides of a flipped coin, heads H or tails T); and
* the range is a measurable space (e.g. corresponding to the domain above, the range might be the set \{-1, 1\} if, say, heads H is mapped to -1 and tails T is mapped to 1).
Typically, the range of a random variable is a subset of the real numbers. Informally, randomness typically represents some fundamental element of chance, such as in the roll of a die; it may also represent uncertainty, such as measurement error. However, the interpretation of probability is philosophica ...
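The view of a random variable as a function on outcomes can be sketched directly in code. The example below is illustrative only: it assumes a fair coin, uses the mapping of heads to -1 and tails to 1 mentioned above, and the helper name `distribution_of` is hypothetical.

```python
from fractions import Fraction

# Sample space with outcome probabilities (a fair coin is assumed).
sample_space = {"H": Fraction(1, 2), "T": Fraction(1, 2)}

def X(outcome):
    """The random variable itself: a plain function from outcomes to numbers."""
    return {"H": -1, "T": 1}[outcome]

def distribution_of(random_variable, space):
    """Push the outcome probabilities forward onto the values of the variable."""
    dist = {}
    for outcome, prob in space.items():
        value = random_variable(outcome)
        dist[value] = dist.get(value, 0) + prob
    return dist

distribution = distribution_of(X, sample_space)
expected_value = sum(value * prob for value, prob in distribution.items())
print(distribution, expected_value)
```

Nothing in `X` is random; the randomness lives entirely in the probabilities attached to the sample space.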

Total Order

In mathematics, a total order or linear order is a partial order in which any two elements are comparable. That is, a total order is a binary relation \leq on some set X, which satisfies the following for all a, b and c in X:
1. a \leq a (reflexive).
2. If a \leq b and b \leq c then a \leq c (transitive).
3. If a \leq b and b \leq a then a = b (antisymmetric).
4. a \leq b or b \leq a (strongly connected, formerly called totality).
Requirements 1 to 3 make up the definition of a partial order. Reflexivity (1) already follows from strong connectedness (4), but many authors nevertheless require it explicitly to indicate the kinship to partial orders. Total orders are sometimes also called simple, connex, or full orders. A set equipped with a total order is a totally ordered set; the terms simply ordered set, linearly ordered set, toset and loset are also used. The term ''chain'' is sometimes defined as a synonym of ''totally ordered set'', but generally refers to ...
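For a finite set, the four requirements can be checked by brute force. The following sketch is only an illustration: the function name `is_total_order` is hypothetical, and the two test relations (the usual order on integers and divisibility) are chosen as examples.

```python
from itertools import product

def is_total_order(elements, leq):
    """Check reflexivity, transitivity, antisymmetry and strong connectedness."""
    elements = list(elements)
    pairs = list(product(elements, repeat=2))
    reflexive = all(leq(a, a) for a in elements)
    transitive = all(
        leq(a, c)
        for a, b, c in product(elements, repeat=3)
        if leq(a, b) and leq(b, c)
    )
    antisymmetric = all(a == b for a, b in pairs if leq(a, b) and leq(b, a))
    connected = all(leq(a, b) or leq(b, a) for a, b in pairs)
    return reflexive and transitive and antisymmetric and connected

# The usual order on integers is total.
numbers_ok = is_total_order(range(5), lambda a, b: a <= b)

# Divisibility on {1, 2, 3, 6} is a partial order but not total:
# neither 2 divides 3 nor 3 divides 2.
divides_ok = is_total_order([1, 2, 3, 6], lambda a, b: b % a == 0)
print(numbers_ok, divides_ok)
```

The divisibility relation passes the first three checks and fails only strong connectedness, which is the requirement that separates total orders from partial orders.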

Most Significant Bit

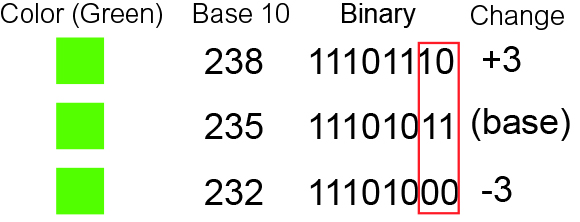

In computing, bit numbering is the convention used to identify the bit positions in a binary number.

Bit significance and indexing

In computing, the least significant bit (LSb) is the bit position in a binary integer representing the lowest-order place of the integer. Similarly, the most significant bit (MSb) represents the highest-order place of the binary integer. The LSb is sometimes referred to as the ''low-order bit''. Due to the convention in positional notation of writing less significant digits further to the right, the LSb also might be referred to as the ''right-most bit''. The MSb is similarly referred to as the ''high-order bit'' or ''left-most bit''. In both cases, the LSb and MSb correlate directly to the least significant digit and most significant digit of a decimal integer. Bit indexing correlates to the positional notation of the value in base 2. For this reason, bit in ...
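A short sketch shows how the LSb and MSb of an integer can be read with shifts and masks. It assumes the common convention of numbering the LSb as index 0; the helper names `bit_at` and `msb_index` are hypothetical.

```python
def bit_at(n, index):
    """Return the bit of n at the given index, with the LSb at index 0."""
    return (n >> index) & 1

def msb_index(n):
    """Return the index of the most significant set bit of a positive integer."""
    if n <= 0:
        raise ValueError("n must be a positive integer")
    return n.bit_length() - 1

value = 0b10010110            # decimal 150

lsb = bit_at(value, 0)        # right-most (low-order) bit
msb_position = msb_index(value)
msb = bit_at(value, msb_position)  # left-most (high-order) set bit
print(lsb, msb_position, msb)
```

Because bit index `i` corresponds to the place value 2**i, shifting right by `i` and masking with 1 isolates exactly that place.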

Prefix Code

A prefix code is a type of code system distinguished by its possession of the prefix property, which requires that there is no whole code word in the system that is a prefix (initial segment) of any other code word in the system. The property holds trivially for fixed-length codes, so it is only a point of consideration for variable-length codes. For example, a code with code words \{9, 55\} has the prefix property; a code consisting of \{9, 5, 59, 55\} does not, because "5" is a prefix of "59" and also of "55". A prefix code is a uniquely decodable code: given a complete and accurate sequence, a receiver can identify each word without requiring a special marker between words. However, there are uniquely decodable codes that are not prefix codes; for instance, the reverse of a prefix code is still uniquely decodable (it is a suffix code), but it is not necessarily a prefix code. Prefix codes are also known as prefix-free codes, prefix condition codes ...
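The prefix property is easy to test mechanically. The sketch below is illustrative: the function name `is_prefix_code` is hypothetical, and the sample code words are chosen for the example, including the case where "5" is a prefix of "59" and "55". It relies on the fact that, after lexicographic sorting, a word that is a prefix of any other word is also a prefix of its immediate successor.

```python
def is_prefix_code(codewords):
    """Return True if no code word is a prefix of another code word."""
    words = sorted(codewords)
    # Only neighbours in sorted order need to be compared.
    return all(not later.startswith(earlier)
               for earlier, later in zip(words, words[1:]))

has_property = is_prefix_code(["9", "55"])
lacks_property = is_prefix_code(["9", "5", "59", "55"])
print(has_property, lacks_property)
```

Sorting keeps the check at O(n log n) comparisons instead of testing every pair of code words.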

Cumulative Distribution Function

In probability theory and statistics, the cumulative distribution function (CDF) of a real-valued random variable X, or just distribution function of X, evaluated at x, is the probability that X will take a value less than or equal to x. Every probability distribution supported on the real numbers, discrete or "mixed" as well as continuous, is uniquely identified by a right-continuous monotone increasing function (a càdlàg function) F \colon \mathbb R \rightarrow [0,1] satisfying \lim_{x \to -\infty} F(x) = 0 and \lim_{x \to \infty} F(x) = 1. In the case of a scalar continuous distribution, it gives the area under the probability density function from negative infinity to x. Cumulative distribution functions are also used to specify the distribution of multivariate random variables.

Definition

The cumulative distribution function of a real-valued random variable X is the function given by F_X(x) = \operatorname{P}(X \leq x), where the right-hand side represents the probability ...
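For a discrete random variable the CDF is a step function that can be built from the probability mass function. The following sketch assumes a fair six-sided die as the example distribution; the name `discrete_cdf` is hypothetical.

```python
from bisect import bisect_right
from fractions import Fraction
from itertools import accumulate

def discrete_cdf(pmf):
    """Build F(x) = P(X <= x) from a dict mapping value -> probability."""
    values = sorted(pmf)
    cumulative = list(accumulate(pmf[v] for v in values))

    def F(x):
        # Number of support points that are <= x.
        k = bisect_right(values, x)
        return cumulative[k - 1] if k else Fraction(0)

    return F

# Assumed example: a fair die with faces 1 to 6.
die = {face: Fraction(1, 6) for face in range(1, 7)}
F = discrete_cdf(die)
print(F(0), F(3), F(3.5), F(6))
```

The function is flat between support points and jumps at each of them, so it is right-continuous and non-decreasing, and it reaches 0 and 1 at the two ends of the support.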

Shannon Fano Elias CDF Graph

Shannon may refer to:

People
* Shannon (given name)
* Shannon (surname)
* Shannon (American singer), stage name of singer Brenda Shannon Greene (born 1958)
* Shannon (South Korean singer), British-South Korean singer and actress Shannon Arrum Williams (born 1998)
* Shannon, intermittent stage name of English singer-songwriter Marty Wilde (born 1939)

Places

Australia
* Shannon, Tasmania, a locality
* Hundred of Shannon, a cadastral unit in South Australia
* Shannon, a former name for the area named Calomba, South Australia since 1916
* Shannon River (Western Australia)
* Shannon, Western Australia, a locality in the Shire of Manjimup
* Shannon National Park, a national park in Western Australia

Canada
* Shannon, New Brunswick, a community
* Shannon, Quebec, a city
* Shannon Bay, former name of Darrell Bay, British Columbia
* Shannon Falls, a waterfall in British Columbia

Ireland
* River Shannon, the longest river in Ireland
** Shannon Cave, a subterranean section of the Ri ...

Entropy (Information Theory)

In information theory, the entropy of a random variable quantifies the average level of uncertainty or information associated with the variable's potential states or possible outcomes. This measures the expected amount of information needed to describe the state of the variable, considering the distribution of probabilities across all potential states. Given a discrete random variable X, which may be any member x within the set \mathcal{X} and is distributed according to p\colon \mathcal{X}\to[0, 1], the entropy is \mathrm{H}(X) := -\sum_{x \in \mathcal{X}} p(x) \log p(x), where \Sigma denotes the sum over the variable's possible values. The choice of base for \log, the logarithm, varies for different applications. Base 2 gives the unit of bits (or "shannons"), while base ''e'' gives "natural units" (nats), and base 10 gives units of "dits", "bans", or "hartleys". An equivalent definition of entropy is the expected value of the self-information of a v ...
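The definition translates almost directly into code. The sketch below is illustrative: the function name `entropy` and the example distribution are assumptions, and terms with zero probability are skipped, following the usual convention that they contribute nothing to the sum. The `base` argument selects the unit.

```python
from math import e, log

def entropy(probs, base=2):
    """Return -sum(p * log(p)) over the given probabilities.

    base=2 gives bits (shannons), base=e gives nats, base=10 gives hartleys.
    """
    return -sum(p * log(p, base) for p in probs if p > 0)

# Assumed example distribution over three outcomes.
probs = [0.5, 0.25, 0.25]

bits = entropy(probs)              # base 2
nats = entropy(probs, base=e)      # natural logarithm
hartleys = entropy(probs, base=10)
print(bits, nats, hartleys)
```

The three results describe the same uncertainty in different units; they differ only by the constant factor that converts one logarithm base to another.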