
Server Hardware

A server is a computer that provides information to other computers, called "clients", on a computer network. This architecture is called the client–server model. Servers can provide various functionalities, often called "services", such as sharing data or resources among multiple clients or performing computations for a client. A single server can serve multiple clients, and a single client can use multiple servers. A client process may run on the same device or may connect over a network to a server on a different device. Typical servers are database servers, file servers, mail servers, print servers, web servers, game servers, and application servers. Client–server systems are most frequently implemented by (and often identified with) the request–response model: a client sends a request to the server, which performs some action and sends a response back to the client, typically with a result or acknowledgment. Designating a computer as "server-class hardware" imp ...
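The request–response exchange described above can be sketched in a few lines of Python. This is a minimal illustration, not a production server: the function names `serve_once` and `request_response` are hypothetical, the "service" (upper-casing the request text) is invented for the example, and client and server share one process through `socket.socketpair()` instead of a real network.

```python
import socket
import threading


def serve_once(conn: socket.socket) -> None:
    """Server side: read one request, act on it, send one response."""
    with conn:
        request = conn.recv(1024).decode("utf-8")
        # Hypothetical service: upper-case whatever the client sent.
        response = request.upper()
        conn.sendall(response.encode("utf-8"))


def request_response(message: str) -> str:
    """Client side: send one request and wait for the server's response."""
    client_sock, server_sock = socket.socketpair()
    worker = threading.Thread(target=serve_once, args=(server_sock,))
    worker.start()
    with client_sock:
        client_sock.sendall(message.encode("utf-8"))
        reply = client_sock.recv(1024).decode("utf-8")
    worker.join()
    return reply


if __name__ == "__main__":
    print(request_response("ping"))
```

A single `recv` call is enough here only because the messages are short; a real protocol would need framing so that the receiver knows where a request ends.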

Application Server

An application server is a server that hosts applications or software that delivers a business application through a communication protocol. For a typical web application, the application server sits behind the web servers. An application server framework is a service layer model. It includes software components available to a software developer through an application programming interface. An application server may have features such as clustering, fail-over, and load-balancing. The goal is for developers to focus on the business logic.

Java application servers: Jakarta EE (formerly Java EE or J2EE) defines the core set of APIs and features of Java application servers. The Jakarta EE infrastructure is partitioned into logical containers.

* EJB container: Enterprise Beans are used to manage transactions. According to the Java BluePrints, the business logic of an application resides in Enterprise Beans, a modular server component providing many features, including dec ...
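As a rough illustration of two of the features named above, load-balancing and fail-over, the sketch below rotates requests across a list of backends and skips any backend that raises `ConnectionError`. It is an assumption-laden toy: `make_balancer` and the callable "backends" are hypothetical and do not correspond to the API of any particular application server.

```python
from itertools import cycle
from typing import Callable, List

# A backend is modelled as a plain function from request text to response text.
Backend = Callable[[str], str]


def make_balancer(backends: List[Backend]) -> Callable[[str], str]:
    """Return a dispatcher that picks backends round-robin with fail-over."""
    order = cycle(range(len(backends)))

    def dispatch(request: str) -> str:
        # Try each backend at most once per request, starting at the next
        # position in the rotation.
        for _ in range(len(backends)):
            backend = backends[next(order)]
            try:
                return backend(request)
            except ConnectionError:
                continue  # fail over to the next backend
        raise ConnectionError("all backends failed")

    return dispatch
```

With three backends where the second one is down, successive requests go to the first, the third (after skipping the second), and then the first again.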

Daemon (computing)

In computing, a daemon is a program that runs as a background process, rather than being under the direct control of an interactive user. By convention, a daemon process is named with the letter "d" as a suffix to indicate that it is a daemon. For example, syslogd is a daemon that implements the system logging facility, and sshd is a daemon that serves incoming SSH connections. Even though the concept can apply to many computing systems, the term "daemon" is used almost exclusively in the context of Unix-based systems. In other contexts, different terms are used for the same concept. Systems often start daemons at boot time that will respond to network requests, hardware activity, or other programs by performing some task. Daemons such as cron may also perform defined tasks at scheduled times.

Terminology: In the context of computing, the word is generally pronounced either as /ˈdiːmən/ or /ˈdeɪmən/. The term was coined by the programmers at MIT's Project MAC. According to Fernando J. Cor ...

Jargon File

The Jargon File is a glossary and usage dictionary of slang used by computer programmers. The original Jargon File was a collection of terms from technical cultures such as the MIT AI Lab, the Stanford AI Lab (SAIL) and others of the old ARPANET AI/LISP/PDP-10 communities, including Bolt, Beranek and Newman (BBN), Carnegie Mellon University, and Worcester Polytechnic Institute. It was published in paperback form in 1983 as The Hacker's Dictionary (edited by Guy Steele) and revised in 1991 as The New Hacker's Dictionary (ed. Eric S. Raymond; third edition published 1996). The concept of the file began with the Tech Model Railroad Club (TMRC) that came out of early TX-0 and PDP-1 hackers in the 1950s, where the term "hacker" emerged and the ...

Host (network)

A network host is a computer or other device connected to a computer network. A host may work as a server offering information resources, services, and applications to users or other hosts on the network. Hosts are assigned at least one network address. A computer participating in networks that use the Internet protocol suite may also be called an IP host. Specifically, computers participating in the Internet are called Internet hosts. Internet hosts and other IP hosts have one or more IP addresses assigned to their network interfaces. The addresses are configured either manually by an administrator, automatically at startup by means of the Dynamic Host Configuration Protocol (DHCP), or by stateless address autoconfiguration methods. Network hosts that participate in applications that use the client–server model of computing are classified as server or client systems. Network hosts may also function as nodes in peer-to-peer applications, in which all nodes share and consum ...

Internet

The Internet (or internet) is the global system of interconnected computer networks that uses the Internet protocol suite (TCP/IP) to communicate between networks and devices. It is a network of networks that consists of private, public, academic, business, and government networks of local to global scope, linked by a broad array of electronic, wireless, and optical networking technologies. The Internet carries a vast range of information resources and services, such as the interlinked hypertext documents and applications of the World Wide Web (WWW), electronic mail, internet telephony, streaming media and file sharing. The origins of the Internet date back to research that enabled the time-sharing of computer resources, the development of packet switching in the 1960s and the design of computer networks for data communication. The set of rules (communication protocols) to enable i ...

ARPANET

The Advanced Research Projects Agency Network (ARPANET) was the first wide-area packet-switched network with distributed control and one of the first computer networks to implement the TCP/IP protocol suite. Both technologies became the technical foundation of the Internet. The ARPANET was established by the Advanced Research Projects Agency (now DARPA) of the United States Department of Defense. Building on the ideas of J. C. R. Licklider, Bob Taylor initiated the ARPANET project in 1966 to enable resource sharing between remote computers. Taylor appointed Larry Roberts as program manager. Roberts made the key decisions about the request for proposal to build the network. He incorporated Donald Davies' concepts and designs for packet switching, and sought input from Paul Baran on dynamic routing. In 1969, ARPA awarded the contract to build the Interface Message Processors (IMPs) for the network to Bolt Berane ...

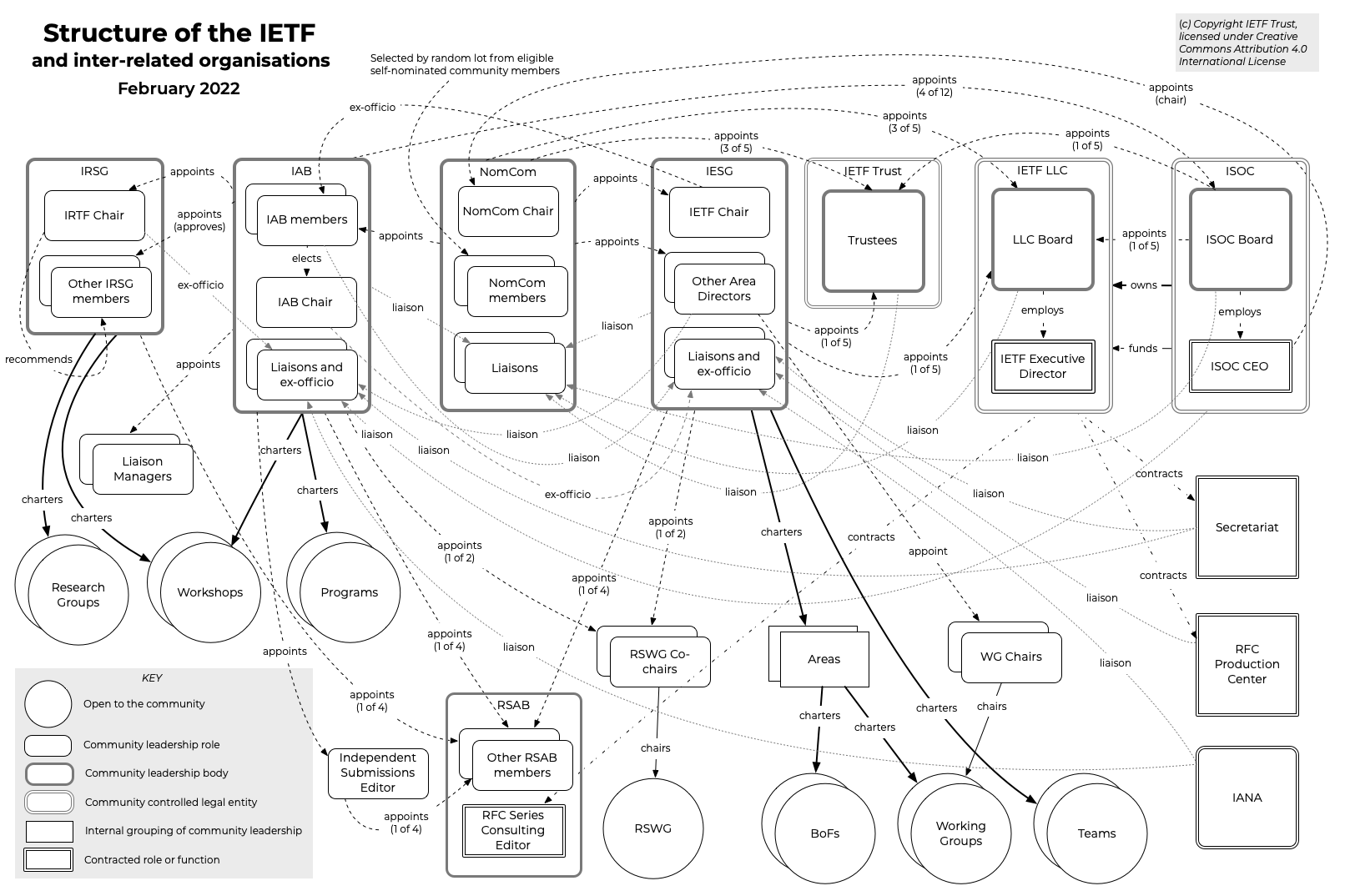

Internet Engineering Task Force

The Internet Engineering Task Force (IETF) is a standards organization for the Internet and is responsible for the technical standards that make up the Internet protocol suite (TCP/IP). It has no formal membership roster or requirements, and all its participants are volunteers. Their work is usually funded by employers or other sponsors. The IETF was initially supported by the federal government of the United States but since 1993 has operated under the auspices of the Internet Society, a non-profit organization with local chapters around the world.

Organization: There is no membership in the IETF. Anyone can participate by signing up to a working group mailing list or registering for an IETF meeting. The IETF operates in a bottom-up task creation mode, largely driven by working groups. Each working group normally has two appointed co-chairs (occasionally three) and a charter that describes its focus, what it is expected to produce, and when. It is open ...

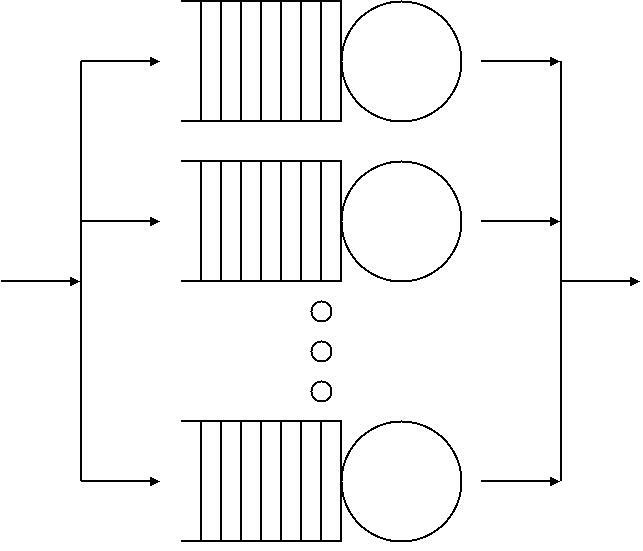

Kendall's Notation

In queueing theory, a discipline within the mathematical theory of probability, Kendall's notation (or sometimes Kendall notation) is the standard system used to describe and classify a queueing node. In 1953, D. G. Kendall proposed describing queueing models using three factors written A/S/c, where A denotes the time between arrivals to the queue, S the service time distribution, and c the number of service channels open at the node. It has since been extended to A/S/c/K/N/D, where K is the capacity of the queue, N is the size of the population of jobs to be served, and D is the queueing discipline. When the final three parameters are not specified (e.g. M/M/1 queue), it is assumed K ...
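The A/S/c/K/N/D structure lends itself to a small parser. The sketch below is illustrative only: `KendallSpec` and `parse_kendall` are hypothetical names, and the defaults it applies when the last three fields are omitted (unlimited capacity, unlimited population, first-in-first-out discipline) are the usual textbook convention, stated here as an assumption because the passage above is cut off before it lists them.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class KendallSpec:
    arrival: str                   # A: inter-arrival time distribution
    service: str                   # S: service time distribution
    servers: int                   # c: number of service channels
    capacity: float = math.inf     # K: assumed unlimited when omitted
    population: float = math.inf   # N: assumed unlimited when omitted
    discipline: str = "FIFO"       # D: assumed FIFO when omitted


def _count(field: str) -> float:
    """Parse a K or N field; 'inf' or the infinity sign means unlimited."""
    if field.lower() in ("inf", "∞"):
        return math.inf
    return float(int(field))


def parse_kendall(text: str) -> KendallSpec:
    """Parse strings such as 'M/M/1' or 'M/D/2/10/inf/LIFO'."""
    parts = text.strip().split("/")
    if not 3 <= len(parts) <= 6:
        raise ValueError(f"expected 3 to 6 fields, got {len(parts)}")
    return KendallSpec(
        arrival=parts[0],
        service=parts[1],
        servers=int(parts[2]),
        capacity=_count(parts[3]) if len(parts) > 3 else math.inf,
        population=_count(parts[4]) if len(parts) > 4 else math.inf,
        discipline=parts[5] if len(parts) > 5 else "FIFO",
    )
```

For example, `parse_kendall("M/M/1")` yields one server with the assumed defaults for the omitted fields, while `parse_kendall("M/D/2/10")` sets the capacity to 10 and leaves the population and discipline at their defaults.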

Queueing Theory

Queueing theory is the mathematical study of waiting lines, or queues. A queueing model is constructed so that queue lengths and waiting time can be predicted. Queueing theory is generally considered a branch of operations research because the results are often used when making business decisions about the resources needed to provide a service. Queueing theory has its origins in research by Agner Krarup Erlang, who created models to describe the system of incoming calls at the Copenhagen Telephone Exchange Company. These ideas were seminal to the field of teletraffic engineering and have since seen applications in telecommunications, traffic engineering, computing, project management, and particularly industrial engineering, where they are applied in the design of factories, shops, offices, and hospitals.

Spelling: The spelling "queueing" rather than "queuing" is typically encountered in the academic research field. In fact, one of the flagship journals of the field is Queue ...
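To make "queue lengths and waiting time can be predicted" concrete, the sketch below evaluates the standard steady-state formulas for one specific model, the single-server M/M/1 queue with arrival rate λ and service rate μ. The choice of M/M/1 and the names `MM1Metrics` and `mm1_metrics` are assumptions made for illustration; other queueing models have different formulas.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MM1Metrics:
    utilization: float          # rho = lambda / mu
    mean_in_system: float       # L  = rho / (1 - rho)
    mean_in_queue: float        # Lq = rho^2 / (1 - rho)
    mean_time_in_system: float  # W  = 1 / (mu - lambda)
    mean_wait_in_queue: float   # Wq = rho / (mu - lambda)


def mm1_metrics(arrival_rate: float, service_rate: float) -> MM1Metrics:
    """Steady-state averages for an M/M/1 queue (requires lambda < mu)."""
    if arrival_rate <= 0 or service_rate <= 0:
        raise ValueError("rates must be positive")
    if arrival_rate >= service_rate:
        raise ValueError("queue is unstable unless arrival_rate < service_rate")
    rho = arrival_rate / service_rate
    return MM1Metrics(
        utilization=rho,
        mean_in_system=rho / (1 - rho),
        mean_in_queue=rho**2 / (1 - rho),
        mean_time_in_system=1 / (service_rate - arrival_rate),
        mean_wait_in_queue=rho / (service_rate - arrival_rate),
    )
```

With 2 arrivals and 4 service completions per unit time, the server is busy half the time, one job is in the system on average, and a job spends half a time unit in the system, consistent with Little's law (L = λW).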

Computing Cluster

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software. The newest manifestation of cluster computing is cloud computing. The components of a cluster are usually connected to each other through fast local area networks, with each node (computer used as a server) running its own instance of an operating system. In most circumstances, all of the nodes use the same hardware and the same operating system, although in some setups (e.g. using Open Source Cluster Application Resources (OSCAR)), different operating systems can be used on each computer, or different hardware. Clusters are usually deployed to improve performance and availability over that of a single computer, while typically being much more cost-effective than single computers of comparable speed or availability. Computer clusters emerged as ...