
Scale Analysis (statistics)

In statistics, scale analysis is a set of methods to analyze survey data, in which responses to questions are combined to measure a latent variable. These items can be dichotomous (e.g. yes/no, agree/disagree, correct/incorrect) or polytomous (e.g. disagree strongly/disagree/neutral/agree/agree strongly). Any measurement for such data is required to be reliable, valid, and homogeneous with comparable results over different studies. Constructing scales: The item-total correlation approach is a way of identifying a group of questions whose responses can be combined into a single measure or scale. This is a simple approach that works by ensuring that, when considered across a whole population, responses to the questions in the group tend to vary together and, in particular, that no individual question's responses are poorly related to an average calculated from the others. Measurement models: Measurement is the assignment of numbers to subjects in such a way that the relations betwee ...
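The item-total correlation approach described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the function names `pearson` and `item_rest_correlations` and the small `RESPONSES` data set are hypothetical, and the sketch uses the "corrected" variant in which each item is correlated with the average of the other items only.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equally long sequences of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def item_rest_correlations(responses):
    """Correlate each item with the mean of the remaining items.

    `responses` is a list of respondents, each a list of item scores.
    """
    n_items = len(responses[0])
    correlations = []
    for j in range(n_items):
        item = [row[j] for row in responses]
        # Average of all other items for each respondent.
        rest = [(sum(row) - row[j]) / (n_items - 1) for row in responses]
        correlations.append(pearson(item, rest))
    return correlations


# Hypothetical 5-point responses; the fourth item is worded in reverse.
RESPONSES = [
    [4, 5, 4, 2],
    [2, 1, 2, 4],
    [5, 4, 5, 1],
    [3, 3, 3, 3],
    [1, 2, 1, 5],
]

for index, r in enumerate(item_rest_correlations(RESPONSES), start=1):
    print(f"item {index}: r = {r:.2f}")
```

An item whose correlation is low or negative, like the reverse-worded fourth item in this made-up data, would be a candidate for recoding or removal before the items are combined into a scale.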

Statistics

Statistics (from German: ''Statistik'', "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006) ''The Oxford Dictionary of Statistical Terms'', Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling as ...

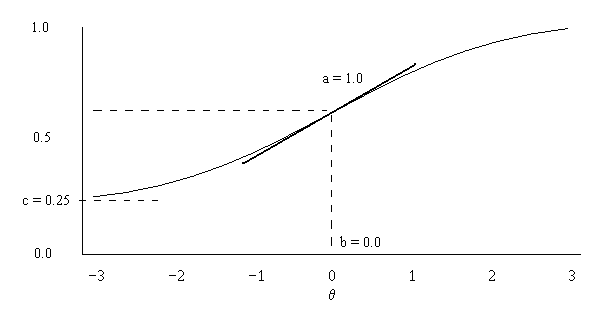

Item Response Theory

In psychometrics, item response theory (IRT) (also known as latent trait theory, strong true score theory, or modern mental test theory) is a paradigm for the design, analysis, and scoring of tests, questionnaires, and similar instruments measuring abilities, attitudes, or other variables. It is a theory of testing based on the relationship between individuals' performances on a test item and the test takers' levels of performance on an overall measure of the ability that item was designed to measure. Several different statistical models are used to represent both item and test taker characteristics. Unlike simpler alternatives for creating scales and evaluating questionnaire responses, it does not assume that each item is equally difficult. This distinguishes IRT from, for instance, Likert scaling, in which "All items are assumed to be replications of each other or in other words items are considered to be parallel instruments". A. van Alphen, R. Halfens, A. Hasman and T. Imbos. ...

NOMINATE (scaling Method)

NOMINATE (an acronym for Nominal Three-Step Estimation) is a multidimensional scaling application developed by US political scientists Keith T. Poole and Howard Rosenthal in the early 1980s to analyze preferential and choice data, such as legislative roll-call voting behavior. In its most well-known application, members of the US Congress are placed on a two-dimensional map, with politicians who are ideologically similar (i.e. who often vote the same) being close together. One of these two dimensions corresponds to the familiar left-right (or liberal-conservative) spectrum. As computing capabilities grew, Poole and Rosenthal developed multiple iterations of their NOMINATE procedure: the original D-NOMINATE method, W-NOMINATE, and most recently DW-NOMINATE (for dynamic, weighted NOMINATE). In 2009, Poole and Rosenthal were the first recipients of the Society for Political Methodology's Best Statistical Software Award for their development of NOMINATE. In 2016, the society awa ...

Multidimensional Scaling

Multidimensional scaling (MDS) is a means of visualizing the level of similarity of individual cases of a dataset. MDS is used to translate "information about the pairwise 'distances' among a set of n objects or individuals" into a configuration of n points mapped into an abstract Cartesian space. More technically, MDS refers to a set of related ordination techniques used in information visualization, in particular to display the information contained in a distance matrix. It is a form of non-linear dimensionality reduction. Given a distance matrix with the distances between each pair of objects in a set, and a chosen number of dimensions, ''N'', an MDS algorithm places each object into ''N''-dimensional space (a lower-dimensional representation) such that the between-object distances are preserved as well as possible. For ''N'' = 1, 2, and 3, the resulting points can be visualized on a scatter plot. Core theoretical contributions to MDS were made by James O. Ramsay of M ...
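The phrase "preserved as well as possible" can be made concrete with a loss function. One common formulation, given here as an illustrative assumption rather than as the definition used by any particular MDS algorithm, is the raw stress of metric MDS, where $d_{ij}$ is the given distance between objects $i$ and $j$ and $x_i \in \mathbb{R}^N$ is the point assigned to object $i$:

```latex
\[
\operatorname{Stress}(x_1, \dots, x_n)
  = \sum_{i < j} \bigl( d_{ij} - \lVert x_i - x_j \rVert \bigr)^2
\]
```

A configuration with zero stress reproduces every pairwise distance exactly; other MDS variants normalize this quantity or replace the distances with a monotone transformation of them.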

Latent Class Analysis

In statistics, a latent class model (LCM) relates a set of observed (usually discrete) multivariate variables to a set of latent variables. It is a type of latent variable model. It is called a latent class model because the latent variable is discrete. A class is characterized by a pattern of conditional probabilities that indicate the chance that variables take on certain values. Latent class analysis (LCA) is a subset of structural equation modeling, used to find groups or subtypes of cases in multivariate categorical data. These subtypes are called "latent classes" (Lazarsfeld, P.F. and Henry, N.W. (1968) ''Latent structure analysis''. Boston: Houghton Mifflin; Formann, A. K. (1984). ''Latent Class Analyse: Einführung in die Theorie und Anwendung'' [Latent class analysis: Introduction to theory and application]. Weinheim: Beltz). Confronted with a situation as follows, a researcher might choose to use LCA to understand the data: Imagine that symptoms a-d have been measured in a ran ...

Rasch Model

The Rasch model, named after Georg Rasch, is a psychometric model for analyzing categorical data, such as answers to questions on a reading assessment or questionnaire responses, as a function of the trade-off between the respondent's abilities, attitudes, or personality traits, and the item difficulty. For example, it may be used to estimate a student's reading ability or the extremity of a person's attitude to capital punishment from responses on a questionnaire. In addition to psychometrics and educational research, the Rasch model and its extensions are used in other areas, including the health profession, agriculture, and market research. The mathematical theory underlying Rasch models is a special case of item response theory. However, there are important differences in the interpretation of the model parameters and its philosophical implications that separate proponents of the Rasch model from the item response modeling tradition. A central aspect of this divide relates to ...
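The trade-off between ability and item difficulty can be illustrated with the dichotomous form of the Rasch model, in which the probability of a positive (e.g. correct) response is a logistic function of the difference between the two. The sketch below is only an illustration: the function name `rasch_probability` and the example values are hypothetical, and estimating abilities and difficulties from data is not covered.

```python
from math import exp


def rasch_probability(ability, difficulty):
    """Probability of a positive response under the dichotomous Rasch model.

    Both arguments are on the same logit scale; only their difference matters.
    """
    return 1.0 / (1.0 + exp(-(ability - difficulty)))


# Hypothetical respondent of ability 1.0 facing items of rising difficulty.
for difficulty in (-1.0, 0.0, 1.0, 2.0):
    p = rasch_probability(1.0, difficulty)
    print(f"difficulty {difficulty:+.1f}: P(positive) = {p:.3f}")
```

When ability equals difficulty the probability is exactly one half, and it rises toward one as ability exceeds difficulty.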

Mokken Scale

The Mokken scale is a psychometric method of data reduction. A Mokken scale is a unidimensional scale that consists of hierarchically-ordered items that measure the same underlying, latent concept. This method is named after the political scientist Rob Mokken who suggested it in 1971. Mokken scales have been used in psychology, education (Straat, J.H., Van der Ark, L.A. and Sijtsma, K. (2014) Minimum Sample Size Requirements for Mokken Scale Analysis, ''Educational and Psychological Measurement'' 74(5), 809-822; Palmgren, P.J., Brodin, U., Nilsson G.H., Watson, R., Stenfors, T. (2018) Investigating psychometric properties and dimensional structure of an educational environment measure (DREEM) using Mokken scale analysis – a pragmatic approach, ''BMC Medical Education'' 18(1), article 235), political science, public opinion, medicine and nursing. Cook, N.F., McCance, T., McCormack, B., Barr, O., Slater, P. (2018) Perceived caring attributes and prior ...

Guttman Scale

In the analysis of multivariate observations designed to assess subjects with respect to an attribute, a Guttman scale (named after Louis Guttman) is a single (unidimensional) ordinal scale for the assessment of the attribute, from which the original observations may be reproduced. The discovery of a Guttman scale in data depends on their multivariate distribution's conforming to a particular structure (see below). Hence, a Guttman scale is a ''hypothesis'' about the structure of the data, formulated with respect to a specified attribute and a specified population and cannot be constructed for any given set of observations. Contrary to a widespread belief, a Guttman scale is not limited to dichotomous variables and does not necessarily determine an order among the variables. But if variables are all dichotomous, the variables are indeed ordered by their sensitivity in recording the assessed attribute, as illustrated by Example 1. Deterministic model. Example 1: Dichotomous variab ...
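For the all-dichotomous case, the idea that the original observations can be reproduced from a single score can be checked directly. The following is a minimal sketch under stated assumptions: responses are coded 0/1, the function name `is_guttman_scale` is hypothetical, and only the deterministic (error-free) structure is tested.

```python
def is_guttman_scale(responses):
    """Check whether 0/1 response patterns form a perfect Guttman scale.

    `responses` is a list of respondents, each a list of 0/1 item scores.
    Items are ordered from most to least frequently endorsed; the scale is
    perfect when every respondent endorses exactly the first `score` items
    in that order, so the pattern is recoverable from the total score.
    """
    n_items = len(responses[0])
    order = sorted(
        range(n_items),
        key=lambda j: -sum(row[j] for row in responses),
    )
    for row in responses:
        score = sum(row)
        expected = [1] * score + [0] * (n_items - score)
        if [row[j] for j in order] != expected:
            return False
    return True


# Hypothetical data: cumulative patterns versus a pattern that breaks the order.
print(is_guttman_scale([[1, 0, 0], [1, 1, 0], [1, 1, 1], [0, 0, 0]]))
print(is_guttman_scale([[1, 0, 0], [0, 1, 0]]))
```

Real data rarely fit the deterministic structure exactly, which is why the check here is a strict yes/no test rather than a measure of how closely the data approximate a scale.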

Factor Analysis

Factor analysis is a statistical method used to describe variability among observed, correlated variables in terms of a potentially lower number of unobserved variables called factors. For example, it is possible that variations in six observed variables mainly reflect the variations in two unobserved (underlying) variables. Factor analysis searches for such joint variations in response to unobserved latent variables. The observed variables are modelled as linear combinations of the potential factors plus "error" terms, hence factor analysis can be thought of as a special case of errors-in-variables models. Simply put, the factor loading of a variable quantifies the extent to which the variable is related to a given factor. A common rationale behind factor analytic methods is that the information gained about the interdependencies between observed variables can be used later to reduce the set of variables in a dataset. Factor analysis is commonly used in psychometrics, persona ...

Survey Data

Survey methodology is "the study of survey methods". As a field of applied statistics concentrating on human-research surveys, survey methodology studies the sampling of individual units from a population and associated techniques of survey data collection, such as questionnaire construction and methods for improving the number and accuracy of responses to surveys. Survey methodology targets instruments or procedures that ask one or more questions that may or may not be answered. Researchers carry out statistical surveys with a view towards making statistical inferences about the population being studied; such inferences depend strongly on the survey questions used. Polls about public opinion, public-health surveys, market-research surveys, government surveys and censuses all exemplify quantitative research that uses survey methodology to answer questions about a population. Although censuses do not include a "sample", they do include other aspects of survey methodology, lik ...

Cronbach's Alpha

Cronbach's alpha (Cronbach's $\alpha$), also known as tau-equivalent reliability ($\rho_T$) or coefficient alpha (coefficient $\alpha$), is a reliability coefficient that provides a method of measuring internal consistency of tests and measures. Numerous studies warn against using it unconditionally, and note that reliability coefficients based on structural equation modeling (SEM) are in many cases a suitable alternative. See Sijtsma, K. (2009). On the use, the misuse, and the very limited usefulness of Cronbach's alpha. Psychometrika, 74(1), 107–120. Green, S. B., & Yang, Y. (2009). Commentary on coefficient alpha: A cautionary tale. Psychometrika, 74(1), 121–135. Revelle, W., & Zinbarg, R. E. (2009). Coefficients alpha, beta, omega, and the glb: Comments on Sijtsma. Psychometrika, 74(1), 145–154. Cho, E., & Kim, S. (2015). Cronbach's coefficient alpha: Well known but poorly understood. Organizational Research Methods, 18(2), 207–230. Raykov, T., & Marcoulides, G. A. (2017). Thanks ...
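As an illustration of how the coefficient is computed, the sketch below uses the usual variance-based formula, $\alpha = \frac{k}{k-1}\bigl(1 - \sum_i \sigma_i^2 / \sigma_X^2\bigr)$, where $k$ is the number of items, $\sigma_i^2$ the variance of item $i$ and $\sigma_X^2$ the variance of the total score. The function name `cronbach_alpha` and the example data are hypothetical, and sample variances are assumed.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])
    # Sample variance of each item across respondents.
    item_variances = [
        variance([row[j] for row in responses]) for j in range(k)
    ]
    # Sample variance of the respondents' total scores.
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical 5-point responses from five respondents to three items.
SCORES = [
    [4, 5, 4],
    [2, 1, 2],
    [5, 4, 5],
    [3, 3, 3],
    [1, 2, 1],
]
print(f"alpha = {cronbach_alpha(SCORES):.3f}")
```

Because the formula depends only on item and total-score variances, it says nothing about whether the items measure a single dimension, which is one reason for the warnings about unconditional use noted above.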

Classical Test Theory

Classical test theory (CTT) is a body of related psychometric theory that predicts outcomes of psychological testing such as the difficulty of items or the ability of test-takers. It is a theory of testing based on the idea that a person's observed or obtained score on a test is the sum of a true score (error-free score) and an error score. Generally speaking, the aim of classical test theory is to understand and improve the reliability of psychological tests. ''Classical test theory'' may be regarded as roughly synonymous with ''true score theory''. The term "classical" refers not only to the chronology of these models but also contrasts with the more recent psychometric theories, generally referred to collectively as item response theory, which sometimes bear the appellation "modern" as in "modern latent trait theory". Classical test theory as we know it today was codified by Novick (1966) and described in classic texts such as Lord & Novick (1968) and Allen & Yen (1979/2002). ...
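The decomposition of an observed score into a true score and an error score, and its link to reliability, can be written compactly. The notation below ($X$, $T$, $E$, $\rho_{XX'}$) is the conventional one and is introduced here for illustration; the second identity assumes that true scores and errors are uncorrelated.

```latex
\[
X = T + E,
\qquad
\rho_{XX'} = \frac{\sigma_T^2}{\sigma_X^2}
           = \frac{\sigma_T^2}{\sigma_T^2 + \sigma_E^2}
\]
```

Under this reading, a test becomes more reliable as the error variance $\sigma_E^2$ shrinks relative to the true-score variance $\sigma_T^2$.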