
Scalable Parallelism

Software is said to exhibit scalable parallelism if it can make use of additional processors to solve larger problems, i.e., the term refers to software for which Gustafson's law holds. Consider a program whose execution time is dominated by one or more loops, each of which updates every element of an array, for example the following finite-difference heat equation stencil calculation:

    for t := 0 to T do
        for i := 1 to N-1 do
            new(i) := (A(i-1) + A(i) + A(i) + A(i+1)) * .25   // explicit forward-difference with R = 0.25
        end
        for i := 1 to N-1 do
            A(i) := new(i)
        end
    end

In the above code, we can execute all iterations of each "i" loop concurrently, i.e., turn each into a parallel loop. In such cases, it is often possible to make effective use of twice as many processors for a problem of array size 2N as for a problem of array size N. As in this example, scalable parallelism is typically a form of data parallelism. This form of parallel ...
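The parallel "i" loop described above can be sketched in Python as follows. This is a minimal illustration, not a tuned implementation: the names `update_chunk` and `heat_steps` and the `workers` parameter are assumptions introduced here, the copy loop is replaced by a buffer swap, and CPython threads will not necessarily run the chunks faster because of the global interpreter lock. The point is only that each chunk of iterations is independent.

```python
from concurrent.futures import ThreadPoolExecutor


def update_chunk(A, new, lo, hi):
    # Every iteration reads only A and writes only new[i],
    # so iterations in different chunks never conflict.
    for i in range(lo, hi):
        new[i] = (A[i - 1] + A[i] + A[i] + A[i + 1]) * 0.25


def heat_steps(A, steps, workers=4):
    """Apply `steps` stencil updates; interior points are 1 .. len(A)-2."""
    A = list(A)
    new = list(A)              # boundary values are shared by both buffers
    n = len(A) - 1             # plays the role of N in the pseudocode
    # Split the interior range 1 .. n-1 into one contiguous chunk per worker.
    bounds = [1 + (n - 1) * w // workers for w in range(workers + 1)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for _ in range(steps):
            futures = [
                pool.submit(update_chunk, A, new, bounds[w], bounds[w + 1])
                for w in range(workers)
            ]
            for f in futures:
                f.result()     # wait for all chunks before the next time step
            A, new = new, A    # swap buffers instead of copying new into A
    return A
```

Doubling the array length doubles the number of independent iterations per time step, which is why twice as many workers can be kept busy on a problem of size 2N.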

Gustafson's Law

In computer architecture, Gustafson's law (or Gustafson–Barsis's law) gives the speedup in the execution time of a task that theoretically gains from parallel computing, using a hypothetical run of *the task* on a single-core machine as the baseline. To put it another way, it is the theoretical "slowdown" of an *already parallelized* task if running on a serial machine. It is named after computer scientist John L. Gustafson and his colleague Edwin H. Barsis, and was presented in the article *Reevaluating Amdahl's Law* in 1988.

Definition

Gustafson estimated the speedup S of a program gained by using parallel computing as follows:

$$
\begin{aligned}
S &= s + p \times N \\
  &= s + (1 - s) \times N \\
  &= N + (1 - N) \times s
\end{aligned}
$$

where

* S is the theoretical speedup of the program with parallelism (scaled speedup);
* N is the number of processors;
* s and p are the fractions of time spent executing the serial parts and the parallel parts of the program on the *parallel* ...
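As a small worked example of the formula S = N + (1 - N) × s, the following Python sketch evaluates the scaled speedup for a given serial fraction and processor count. The function name `gustafson_speedup` and its argument names are assumptions chosen for illustration.

```python
def gustafson_speedup(serial_fraction, processors):
    """Scaled speedup S = N + (1 - N) * s from Gustafson's law."""
    if not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("serial_fraction must lie in [0, 1]")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    return processors + (1 - processors) * serial_fraction


if __name__ == "__main__":
    # With 10% serial time, the speedup grows almost linearly with N.
    for n in (1, 10, 100):
        print(n, gustafson_speedup(0.1, n))
```

With s = 0 the speedup equals N, and with s = 1 it equals 1, which matches the two limiting cases of the formula.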

Finite Difference Method

In numerical analysis, finite-difference methods (FDM) are a class of numerical techniques for solving differential equations by approximating derivatives with finite differences. Both the spatial domain and time interval (if applicable) are discretized, or broken into a finite number of steps, and the value of the solution at these discrete points is approximated by solving algebraic equations containing finite differences and values from nearby points. Finite difference methods convert ordinary differential equations (ODE) or partial differential equations (PDE), which may be nonlinear, into a system of linear equations that can be solved by matrix algebra techniques. Modern computers can perform these linear algebra computations efficiently which, along with their relative ease of implementation, has led to the widespread use of FDM in modern numerical analysis. Today, FDM are one of the most common approaches to the numerical solution of PDE, along with finite element metho ...
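To illustrate what "approximating derivatives with finite differences" means in practice, here is a minimal Python sketch of two standard central-difference formulas. The function names and the step size `h` are assumptions for illustration; a real solver would apply such formulas on a whole grid rather than at a single point.

```python
import math


def central_first_derivative(f, x, h=1e-4):
    # f'(x) is approximated by (f(x + h) - f(x - h)) / (2h), error O(h^2).
    return (f(x + h) - f(x - h)) / (2 * h)


def central_second_derivative(f, x, h=1e-3):
    # f''(x) is approximated by (f(x - h) - 2 f(x) + f(x + h)) / h^2, error O(h^2).
    return (f(x - h) - 2 * f(x) + f(x + h)) / (h * h)


if __name__ == "__main__":
    x = 0.5
    print(central_first_derivative(math.sin, x), math.cos(x))
    print(central_second_derivative(math.sin, x), -math.sin(x))
```

The second formula has the same three-point shape, A(i-1), A(i), A(i+1), as a one-dimensional heat equation stencil.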

Heat Equation

In mathematics and physics, the heat equation is a certain partial differential equation. Solutions of the heat equation are sometimes known as caloric functions. The theory of the heat equation was first developed by Joseph Fourier in 1822 for the purpose of modeling how a quantity such as heat diffuses through a given region. As the prototypical parabolic partial differential equation, the heat equation is among the most widely studied topics in pure mathematics, and its analysis is regarded as fundamental to the broader field of partial differential equations. The heat equation can also be considered on Riemannian manifolds, leading to many geometric applications. Following work of Subbaramiah Minakshisundaram and Åke Pleijel, the heat equation is closely related to spectral geometry. A seminal nonlinear variant of the heat equation was introduced to differential geometry by James Eells and Joseph Sampson in 1964, inspiring the introduction of the Ricci flow by Richard ...

Stencil (numerical Analysis)

In mathematics, especially the areas of numerical analysis concentrating on the numerical solution of partial differential equations, a stencil is a geometric arrangement of a nodal group that relates to the point of interest by using a numerical approximation routine. Stencils are the basis for many algorithms to numerically solve partial differential equations (PDE). Two examples of stencils are the five-point stencil and the Crank–Nicolson method stencil. Stencils are classified into two categories, compact and non-compact, the difference being the layers from the point of interest that are also used for calculation. In the notation used for one-dimensional stencils, n-1, n, n+1 indicate the time steps, where time steps n and n-1 have known solutions and time step n+1 is to be calculated. The spatial locations of the finite volumes used in the calculation are indicated by j-1, j and j+1.

Etymology

Graphical representations of node arrangements and their coefficients arose early i ...

Data Parallelism

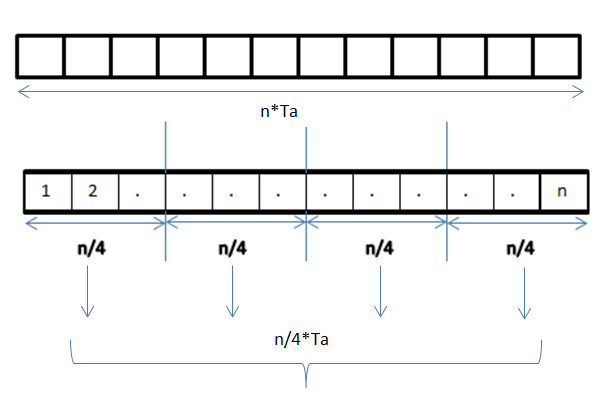

Data parallelism is parallelization across multiple processors in parallel computing environments. It focuses on distributing the data across different nodes, which operate on the data in parallel. It can be applied to regular data structures like arrays and matrices by working on each element in parallel. It contrasts with task parallelism, another form of parallelism. A data parallel job on an array of *n* elements can be divided equally among all the processors. Let us assume we want to sum all the elements of the given array and the time for a single addition operation is Ta time units. In the case of sequential execution, the time taken by the process will be *n*×Ta time units, as it sums up all the elements of the array. On the other hand, if we execute this job as a data parallel job on 4 processors, the time taken would reduce to (*n*/4)×Ta + merging overhead time units. Ignoring the merging overhead, parallel execution results in a speedup of 4 over sequential execution. One important thing to no ...
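The array-sum example above can be sketched in Python as follows. This is an illustrative sketch under stated assumptions: `parallel_sum`, `modeled_time`, and their parameters are names introduced here, and CPython threads are used only to show the split-and-merge structure, not to demonstrate a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def parallel_sum(values, workers=4):
    """Sum `values` by giving each worker one contiguous chunk."""
    n = len(values)
    bounds = [n * w // workers for w in range(workers + 1)]
    chunks = [values[bounds[w]:bounds[w + 1]] for w in range(workers)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum, chunks))
    # Merging step: combine one partial result per worker.
    return sum(partial_sums)


def modeled_time(n, t_add, processors, merge_overhead=0.0):
    """Cost model from the text: (n / processors) * Ta + merging overhead."""
    return (n / processors) * t_add + merge_overhead


if __name__ == "__main__":
    print(parallel_sum(list(range(101))))   # same result as the built-in sum
    print(modeled_time(1000, 2.0, 1), modeled_time(1000, 2.0, 4))
```

In the cost model, the ratio of the one-processor time to the four-processor time approaches 4 only when the merging overhead is small compared with (n/4)×Ta.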

Automatic Parallelization

Automatic may refer to:

Music

Bands

* Automatic (band), Australian rock band
* Automatic (American band), American rock band
* The Automatic, a Welsh alternative rock band

Albums

* *Automatic* (Jack Bruce album), a 1983 electronic rock album
* *Automatic* (Sharpe & Numan album), a 1989 synthpop album
* *Automatic* (The Jesus and Mary Chain album), a 1989 alternative rock album
* *Automatic*, a 1997 electronic album by Le Car
* *Automatic* (Dweezil Zappa album), a 2000 hard rock album, or the title song
* *Automatic*, a 2003 punk rock album by The Turbo A.C.'s
* *Automatic* (Stitches album), a 2006 punk rock album, or the title song
* *Automatic* (VNV Nation album), a 2011 futurepop album
* *Automatic*, a 2013 reggae-rock album by Iration
* *Automatic* (Don Broco album), a 2015 rock album
* *Automatic* (Kaskade album), 2015 album by Kaskade
* *Automatic* (Mildlife album), 2020 album by Mildlife

Songs

* "Automatic" (Danny Fernandes song), ...

Loop Optimization

In compiler theory, loop optimization is the process of increasing execution speed and reducing the overheads associated with loops. It plays an important role in improving cache performance and making effective use of parallel processing capabilities. Most execution time of a scientific program is spent on loops; as such, many compiler optimization techniques have been developed to make them faster.

Representation of computation and transformations

Since instructions inside loops can be executed repeatedly, it is frequently not possible to give a bound on the number of instruction executions that will be impacted by a loop optimization. This presents challenges when reasoning about the correctness and benefits of a loop optimization, specifically the representations of the computation being optimized and the optimization(s) being performed. In the book *Reasoning About Program Transformations*, Jean-Francois Collard discusses in depth the general question of representing exe ...

Distributed Computing

A distributed system is a system whose components are located on different networked computers, which communicate and coordinate their actions by passing messages to one another from any system. Distributed computing is a field of computer science that studies distributed systems. The components of a distributed system interact with one another in order to achieve a common goal. Three significant challenges of distributed systems are: maintaining concurrency of components, overcoming the lack of a global clock, and managing the independent failure of components. When a component of one system fails, the entire system does not fail. Examples of distributed systems vary from SOA-based systems to massively multiplayer online games to peer-to-peer applications. A computer program that runs within a distributed system is called a distributed program, and *distributed programming* is the process of writing such programs. There are many different types of implementations for ...

Non-uniform Memory Access

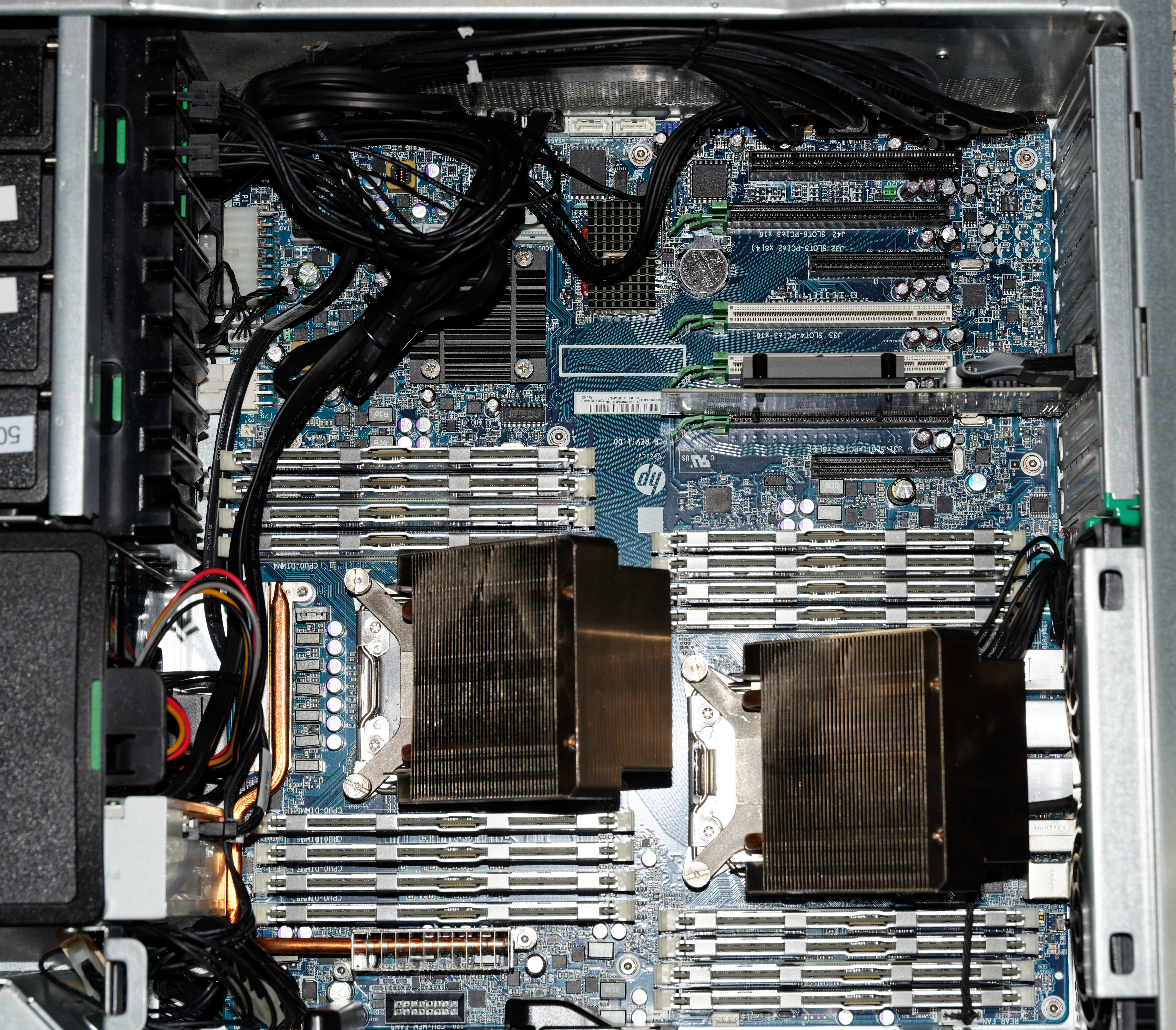

Non-uniform memory access (NUMA) is a computer memory design used in multiprocessing, where the memory access time depends on the memory location relative to the processor. Under NUMA, a processor can access its own local memory faster than non-local memory (memory local to another processor or memory shared between processors). The benefits of NUMA are limited to particular workloads, notably on servers where the data is often associated strongly with certain tasks or users. NUMA architectures logically follow in scaling from symmetric multiprocessing (SMP) architectures. They were developed commercially during the 1990s by Unisys, Convex Computer (later Hewlett-Packard), Honeywell Information Systems Italy (HISI) (later Groupe Bull), Silicon Graphics (later Silicon Graphics International), Sequent Computer Systems (later IBM), Data General (later EMC, now Dell Technologies), and Digital (later Compaq, then HP, now HPE). Techniques developed by these companies later ...

Parallel Computing

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling. S. V. Adve *et al.* (November 2008), "Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF), Parallel@Illinois, University of Illinois at Urbana-Champaign: "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance increases must largely come from increasing the number of processors (or cores) on a die, rather tha ...

Embarrassingly Parallel

In parallel computing, an embarrassingly parallel workload or problem (also called embarrassingly parallelizable, perfectly parallel, delightfully parallel or pleasingly parallel) is one where little or no effort is needed to separate the problem into a number of parallel tasks. This is often the case where there is little or no dependency or need for communication between those parallel tasks, or for results between them. Thus, these are different from distributed computing problems that need communication between tasks, especially communication of intermediate results. They are easy to perform on server farms which lack the special infrastructure used in a true supercomputer cluster. They are thus well suited to large, Internet-based volunteer computing platforms such as BOINC, and do not suffer from parallel slowdown. The opposite of an embarrassingly parallel problem is an inherently serial problem, which cannot be parallelized at all. A common example of a ...

Scalable Locality

Computer software is said to exhibit scalable locality (David Wonnacott. *Achieving Scalable Locality with Time Skewing.* International Journal of Parallel Programming 30.3 (2002)) if it can continue to make use of processors that out-pace their memory systems, to solve ever larger problems. This term is a high-performance uniprocessor analog of the use of scalable parallelism to refer to software for which increasing numbers of processors can be employed for larger problems.

Overview

Consider the memory usage patterns of the following loop nest (an iterative two-dimensional stencil computation):

    for t := 0 to T do
        for i := 1 to N-1 do
            for j := 1 to N-1 do
                new(i,j) := (A(i-1,j) + A(i,j-1) + A(i,j) + A(i,j+1) + A(i+1,j)) * .2
            end
        end
        for i := 1 to N-1 do
            for j := 1 to N-1 do
                A(i,j) := new(i,j)
            end
        end
    end

The entire loop nest touches about 2*N**2 array elements, and performs about 5*T*N**2 floating-point o ...
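The loop nest above can be written as a short Python sketch, together with a helper that compares the two counts quoted in the text. The names `stencil_2d` and `work_per_element` are assumptions introduced here, the copy loops are replaced by a buffer swap, and the helper reads the truncated count as floating-point operations (four additions and one multiplication per point), which is also an assumption.

```python
def stencil_2d(A, steps):
    """Apply `steps` five-point averaging updates to the interior of a square grid."""
    n = len(A) - 1                      # plays the role of N in the pseudocode
    A = [row[:] for row in A]
    new = [row[:] for row in A]         # boundary values are shared by both buffers
    for _ in range(steps):
        for i in range(1, n):
            for j in range(1, n):
                new[i][j] = (A[i - 1][j] + A[i][j - 1] + A[i][j]
                             + A[i][j + 1] + A[i + 1][j]) * 0.2
        A, new = new, A                 # swap buffers instead of copying new into A
    return A


def work_per_element(n, steps):
    """Ratio of about 5*T*N**2 operations to about 2*N**2 array elements touched."""
    touched = 2 * n ** 2
    operations = 5 * steps * n ** 2
    return operations / touched


if __name__ == "__main__":
    grid = [[0, 0, 0], [0, 5, 0], [0, 0, 0]]
    print(stencil_2d(grid, 1))
    print(work_per_element(100, 10))
```

Under these counts the ratio of work to data grows with the number of time steps and is independent of N, which is the quantity a locality optimization would try to exploit.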