|

SUIF

For several years parallel hardware was only available for distributed computing but recently it is becoming available for the low end computers as well. Hence it has become inevitable for software programmers to start writing parallel applications. It is quite natural for programmers to think sequentially and hence they are less acquainted with writing multi-threaded or parallel processing applications. Parallel programming requires handling various issues such as synchronization and deadlock avoidance. Programmers require added expertise for writing such applications apart from their expertise in the application domain. Hence programmers prefer to write sequential code and most of the popular programming languages support it. This allows them to concentrate more on the application. Therefore, there is a need to convert such sequential applications to parallel applications with the help of automated tools. The need is also non-trivial because large amount of legacy code written o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Automatic Parallelization

Automatic parallelization, also auto parallelization, or autoparallelization refers to converting sequential code into multi-threaded and/or vectorized code in order to use multiple processors simultaneously in a shared-memory multiprocessor ( SMP) machine. Fully automatic parallelization of sequential programs is a challenge because it requires complex program analysis and the best approach may depend upon parameter values that are not known at compilation time. The programming control structures on which autoparallelization places the most focus are loops, because, in general, most of the execution time of a program takes place inside some form of loop. There are two main approaches to parallelization of loops: pipelined multi-threading and cyclic multi-threading. For example, consider a loop that on each iteration applies a hundred operations, and runs for a thousand iterations. This can be thought of as a grid of 100 columns by 1000 rows, a total of 100,000 operations. Cyc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Distributed Computing

Distributed computing is a field of computer science that studies distributed systems, defined as computer systems whose inter-communicating components are located on different networked computers. The components of a distributed system communicate and coordinate their actions by passing messages to one another in order to achieve a common goal. Three significant challenges of distributed systems are: maintaining concurrency of components, overcoming the lack of a global clock, and managing the independent failure of components. When a component of one system fails, the entire system does not fail. Examples of distributed systems vary from SOA-based systems to microservices to massively multiplayer online games to peer-to-peer applications. Distributed systems cost significantly more than monolithic architectures, primarily due to increased needs for additional hardware, servers, gateways, firewalls, new subnets, proxies, and so on. Also, distributed systems are prone to ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Supercomputer

A supercomputer is a type of computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2022, supercomputers have existed which can perform over 1018 FLOPS, so called Exascale computing, exascale supercomputers. For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (1011) to tens of teraFLOPS (1013). Since November 2017, all of the TOP500, world's fastest 500 supercomputers run on Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers. Supercomputers play an important role in the field of computational science, and are used for a wide range of computationally intensive tasks in various fields, ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Parallel Computer

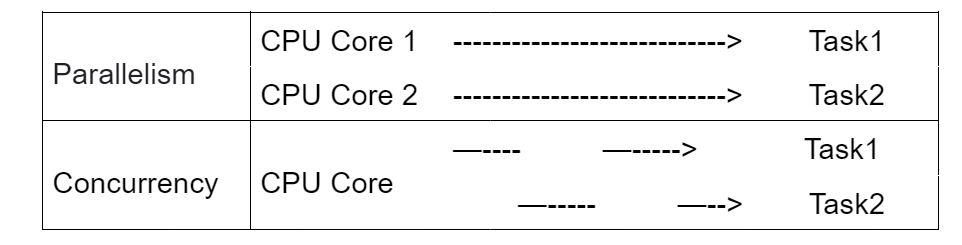

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.S.V. Adve ''et al.'' (November 2008)"Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance increases must largely come from increasing the number of processors (or cores) on a die, rather than mak ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Loop Nest Optimization

In computer science and particularly in compiler design, loop nest optimization (LNO) is an optimization technique that applies a set of loop transformations for the purpose of locality optimization or parallelization or another loop overhead reduction of the loop nests. ( Nested loops occur when one loop is inside of another loop.) One classical usage is to reduce memory access latency or the cache bandwidth necessary due to cache reuse for some common linear algebra algorithms. The technique used to produce this optimization is called loop tiling, also known as loop blocking or strip mine and interchange. Overview Loop tiling partitions a loop's iteration space into smaller chunks or blocks, so as to help ensure data used in a loop stays in the cache until it is reused. The partitioning of loop iteration space leads to partitioning of a large array into smaller blocks, thus fitting accessed array elements into cache size, enhancing cache reuse and eliminating cache size r ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Polyhedral Model

The polyhedral model (also called the polytope method) is a mathematical framework for programs that perform large numbers of operations -- too large to be explicitly enumerated -- thereby requiring a ''compact'' representation. Nested loop programs are the typical, but not the only example, and the most common use of the model is for loop nest optimization in program optimization. The polyhedral method treats each loop iteration within nested loops as lattice points inside mathematical objects called polyhedra, performs affine transformations or more general non-affine transformations such as tiling on the polytopes, and then converts the transformed polytopes into equivalent, but optimized (depending on targeted optimization goal), loop nests through polyhedra scanning. Simple example Consider the following example written in C: const int n = 100; int i, j; int a n] = ; for (i = 1; i < n; i++) for (i = 0; i < n; i++) The essential problem with this ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Nanohub

nanoHUB.org is a science and engineering gateway comprising community-contributed resources and geared toward education, professional networking, and interactive simulation tools for nanotechnology. Funded by the United States National Science Foundation (NSF), it is a product of the Network for Computational Nanotechnology (NCN). NCN supports research efforts in nanoelectronics; nanomaterials; nanoelectromechanical systems (NEMS); nanofluidics; nanomedicine, nanobiology; and nanophotonics. History The Network for Computational Nanotechnology was established in 2002 to create a resource for nanoscience and nanotechnology via online services for research, education, and professional collaboration. Initially a multi-university initiative of eight member institutions including Purdue University, the University of California at Berkeley, the University of Illinois at Urbana-Champaign, Massachusetts Institute of Technology, the Molecular Foundry at Lawrence Berkeley Nationa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Client–server Model

The client–server model is a distributed application structure that partitions tasks or workloads between the providers of a resource or service, called servers, and service requesters, called clients. Often clients and servers communicate over a computer network on separate hardware, but both client and server may be on the same device. A server host runs one or more server programs, which share their resources with clients. A client usually does not share its computing resources, but it requests content or service from a server and may share its own content as part of the request. Clients, therefore, initiate communication sessions with servers, which await incoming requests. Examples of computer applications that use the client–server model are email, network printing, and the World Wide Web. Client and server role The server component provides a function or service to one or many clients, which initiate requests for such services. Servers are classified by the servic ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Speedup

In computer architecture, speedup is a number that measures the relative performance of two systems processing the same problem. More technically, it is the improvement in speed of execution of a task executed on two similar architectures with different resources. The notion of speedup was established by Amdahl's law, which was particularly focused on parallel processing. However, speedup can be used more generally to show the effect on performance after any resource enhancement. Definitions Speedup can be defined for two different types of quantities: '' latency'' and ''throughput''. ''Latency'' of an architecture is the reciprocal of the execution speed of a task: : L = \frac = \frac, where * ''v'' is the execution speed of the task; * ''T'' is the execution time of the task; * ''W'' is the execution workload of the task. ''Throughput'' of an architecture is the execution rate of a task: : Q = \rho vA = \frac = \frac, where * ''ρ'' is the execution density (e.g., the numbe ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Graphical User Interface

A graphical user interface, or GUI, is a form of user interface that allows user (computing), users to human–computer interaction, interact with electronic devices through Graphics, graphical icon (computing), icons and visual indicators such as secondary notation. In many applications, GUIs are used instead of text-based user interface, text-based UIs, which are based on typed command labels or text navigation. GUIs were introduced in reaction to the perceived steep learning curve of command-line interfaces (CLIs), which require commands to be typed on a computer keyboard. The actions in a GUI are usually performed through direct manipulation interface, direct manipulation of the graphical elements. Beyond computers, GUIs are used in many handheld mobile devices such as MP3 players, portable media players, gaming devices, smartphones and smaller household, office and Distributed control system, industrial controls. The term ''GUI'' tends not to be applied to other lower-displa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Induction Variable

In computer science, an induction variable is a variable that gets increased or decreased by a fixed amount on every iteration of a loop or is a linear function of another induction variable. For example, in the following loop, i and j are induction variables: for (i = 0; i < 10; ++i) Application to strength reduction A common is to recognize the existence of induction variables and replace them with simpler computations; for example, the code above could be rewritten by the compiler as follows, on the assumption that the addition of a constant will be cheaper than a multiplication. |

Privatization

Privatization (rendered privatisation in British English) can mean several different things, most commonly referring to moving something from the public sector into the private sector. It is also sometimes used as a synonym for deregulation when a heavily regulated private company or industry becomes less regulated. Government functions and services may also be privatised (which may also be known as "franchising" or "out-sourcing"); in this case, private entities are tasked with the implementation of government programs or performance of government services that had previously been the purview of state-run agencies. Some examples include revenue collection, law enforcement, water supply, and prison management. Another definition is that privatization is the sale of a state-owned enterprise or municipally owned corporation to private investors; in this case shares may be traded in the public market for the first time, or for the first time since an enterprise's previous natio ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |