
SUDAAN

SUDAAN is a proprietary statistical software package for the analysis of correlated data, including correlated data encountered in complex sample surveys. SUDAAN originated in 1972 at RTI International (the trade name of Research Triangle Institute). Individual commercial licenses are sold for $1,460 a year, or $3,450 permanently. The current version, SUDAAN Release 11.0.3, released in May 2018, is a single program consisting of a family of thirteen analytic procedures used to analyze data from complex sample surveys and other observational and experimental studies involving repeated measures and ...

Statistical Software

Statistical software consists of specialized computer programs for analysis in statistics and econometrics.

Open-source

* ADaMSoft – a generalized statistical software with data mining algorithms and methods for data management
* ADMB – a software suite for non-linear statistical modeling based on C++ which uses automatic differentiation
* Chronux – for neurobiological time series data
* DAP – free replacement for SAS
* Environment for DeveLoping KDD-Applications Supported by Index-Structures (ELKI) – a software framework for developing data mining algorithms in Java
* Epi Info – statistical software for epidemiology developed by the Centers for Disease Control and Prevention (CDC); Apache 2 licensed
* Fityk – nonlinear regression software (GUI and command line)
* GNU Octave – programming language very similar to MATLAB with statistical features
* gretl – GNU Regression, Econometrics and Time-series Library
* intrinsic Noise Analyzer (iNA) – For analyzing intrinsic fluctua ...

Social Statistics

Social statistics is the use of statistical measurement systems to study human behavior in a social environment. This can be accomplished through polling a group of people, evaluating a subset of data obtained about a group of people, or by observation and statistical analysis of a set of data that relates to people and their behaviors.

Statistics in the social sciences

History

Adolphe Quetelet was a proponent of social physics. In his book ''Physique sociale'' he presents distributions of human heights, age of marriage, time of birth and death, time series of human marriages, births and deaths, a survival density for humans and a curve describing fecundity as a function of age. He also developed the Quetelet Index. Francis Ysidro Edgeworth published "On Methods of Ascertaining Variations in the Rate of Births, Deaths, and Marriages" in 1885, which uses squares of differences for studying fluctuations, and George Udny Yule published "On the Correlation of total Pauperism with Prop ...

Resampling (statistics)

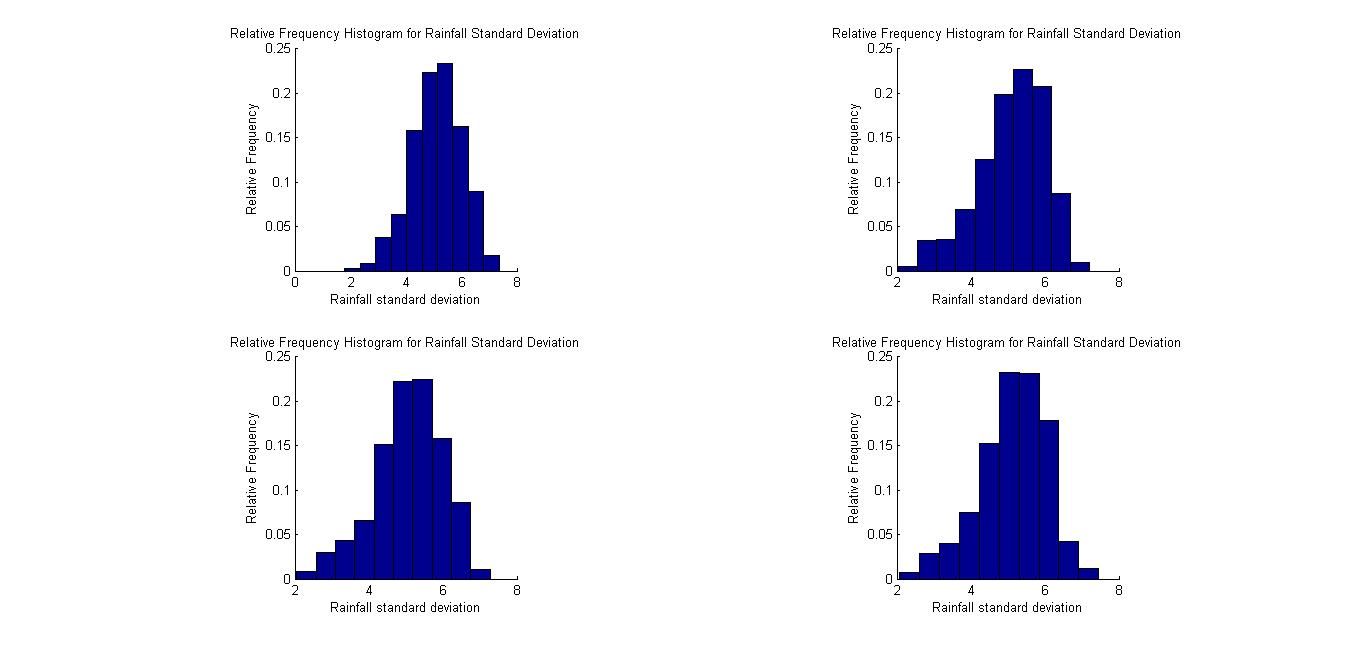

In statistics, resampling is the creation of new samples based on one observed sample. Resampling methods are:

1. Permutation tests (also re-randomization tests)
2. Bootstrapping
3. Cross validation

Permutation tests

Permutation tests rely on resampling the original data assuming the null hypothesis. Based on the resampled data it can be concluded how likely the original data is to occur under the null hypothesis.

Bootstrap

Bootstrapping is a statistical method for estimating the sampling distribution of an estimator by sampling with replacement from the original sample, most often with the purpose of deriving robust estimates of standard errors and confidence intervals of a population parameter like a mean, median, proportion, odds ratio, correlation coefficient or regression coefficient. It has been called the plug-in principle (Logan, J. David and Wolesensky, William R., ''Mathematical Methods in Biology'', Pure and Applied Mathematics: a Wiley-Interscience Series of Texts, Mon ...)
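The bootstrap idea described above (resample with replacement, recompute the statistic, and look at the spread of the results) can be sketched in a few lines. This is a minimal illustration using only the Python standard library; the function name `bootstrap_standard_error`, the number of resamples, the fixed seed, and the sample values are all assumptions made for the example, not part of the source text.

```python
import random
import statistics


def bootstrap_standard_error(sample, statistic=statistics.mean,
                             n_resamples=2000, seed=0):
    """Estimate the standard error of `statistic` by resampling with replacement."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n = len(sample)
    replicates = []
    for _ in range(n_resamples):
        # Draw n observations from the original sample, with replacement.
        resample = rng.choices(sample, k=n)
        replicates.append(statistic(resample))
    # The spread of the replicates approximates the sampling variability.
    return statistics.stdev(replicates)


# Hypothetical observations, made up for illustration only.
data = [2.1, 3.4, 1.9, 5.6, 4.2, 3.8, 2.7, 4.9, 3.1, 4.4]
se_mean = bootstrap_standard_error(data)
se_median = bootstrap_standard_error(data, statistic=statistics.median)
print(f"bootstrap SE of the mean:   {se_mean:.3f}")
print(f"bootstrap SE of the median: {se_median:.3f}")
```

Passing the statistic as a function is a deliberate choice here: the same resampling loop then serves for a mean, a median, or any other estimator that takes a list of numbers.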

Taylor Series

In mathematics, the Taylor series or Taylor expansion of a function is an infinite sum of terms that are expressed in terms of the function's derivatives at a single point. For most common functions, the function and the sum of its Taylor series are equal near this point. Taylor series are named after Brook Taylor, who introduced them in 1715. A Taylor series is also called a Maclaurin series when 0 is the point where the derivatives are considered, after Colin Maclaurin, who made extensive use of this special case of Taylor series in the mid-18th century. The partial sum formed by the first ''n'' + 1 terms of a Taylor series is a polynomial of degree ''n'' that is called the ''n''th Taylor polynomial of the function. Taylor polynomials are approximations of a function, which become generally better as ''n'' increases. Taylor's theorem gives quantitative estimates on the error introduced by the use of such approximations. If the Taylor series of a function is convergent, its sum is the limit of the ...
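To make the notion of a Taylor polynomial concrete, the sketch below evaluates partial sums of the Maclaurin series of the exponential function, whose terms are x^k / k!. The choice of the exponential function and the helper name `taylor_exp` are assumptions made for illustration; the loop shows how the approximation error shrinks as more terms are included.

```python
import math


def taylor_exp(x, n):
    """Degree-n Taylor polynomial of exp about 0: sum of x**k / k! for k = 0..n."""
    total = 0.0
    term = 1.0  # the k = 0 term, x**0 / 0!
    for k in range(n + 1):
        total += term
        term *= x / (k + 1)  # turn x**k / k! into x**(k+1) / (k+1)!
    return total


# Compare a few polynomial degrees against math.exp at x = 1.
for degree in (1, 2, 4, 8):
    approx = taylor_exp(1.0, degree)
    print(degree, approx, abs(approx - math.e))
```

Each term is built from the previous one rather than by calling a factorial function, which avoids recomputing large factorials at every step.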

Weighting

The process of weighting involves emphasizing the contribution of particular aspects of a phenomenon (or of a set of data) over others to an outcome or result; thereby highlighting those aspects in comparison to others in the analysis. That is, rather than each variable in the data set contributing equally to the final result, some of the data is adjusted to make a greater contribution than others. This is analogous to the practice of adding (extra) weight to one side of a pair of scales in order to favour either the buyer or seller. While weighting may be applied to a set of data, such as epidemiological data, it is more commonly applied to measurements of light, heat, sound, gamma radiation, and in fact any stimulus that is spread over a spectrum of frequencies.

Weighting and loudness

In the measurement of loudness, for example, a weighting filter is commonly used to emphasise frequencies around 3 to 6 kHz where the human ear is most sensitive, while attenuating very ...
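For the data-set case mentioned above, one simple instance of weighting is a weighted mean, in which each value contributes in proportion to its weight instead of equally. The sketch below is only an illustration under that assumption; the function name `weighted_mean` and the numbers are hypothetical.

```python
def weighted_mean(values, weights):
    """Average in which each value contributes in proportion to its weight."""
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    total_weight = sum(weights)
    if total_weight == 0:
        raise ValueError("weights must not sum to zero")
    return sum(v * w for v, w in zip(values, weights)) / total_weight


# Hypothetical values; the last one is given twice the weight of the others.
values = [10.0, 20.0, 30.0]
weights = [1.0, 1.0, 2.0]
print(weighted_mean(values, weights))          # weighted result
print(weighted_mean(values, [1.0, 1.0, 1.0]))  # equal weights: the ordinary mean
```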

Data Set

A data set (or dataset) is a collection of data. In the case of tabular data, a data set corresponds to one or more database tables, where every column of a table represents a particular variable, and each row corresponds to a given record of the data set in question. The data set lists values for each of the variables, such as for example height and weight of an object, for each member of the data set. Data sets can also consist of a collection of documents or files. In the open data discipline, a data set is the unit used to measure the information released in a public open data repository. The European data.europa.eu portal aggregates more than a million data sets. Some other issues (real-time data sources, non-relational data sets, etc.) increase the difficulty of reaching a consensus about it.

Properties

Several characteristics define a data set's structure and properties. These include the number and types of the attributes or variables, and various statistical measures applic ...

Regression Analysis

In statistical modeling, regression analysis is a set of statistical processes for estimating the relationships between a dependent variable (often called the 'outcome' or 'response' variable, or a 'label' in machine learning parlance) and one or more independent variables (often called 'predictors', 'covariates', 'explanatory variables' or 'features'). The most common form of regression analysis is linear regression, in which one finds the line (or a more complex linear combination) that most closely fits the data according to a specific mathematical criterion. For example, the method of ordinary least squares computes the unique line (or hyperplane) that minimizes the sum of squared differences between the true data and that line (or hyperplane). For specific mathematical reasons (see linear regression), this allows the researcher to estimate the conditional expectation (or population average value) of the dependent variable when the independent variables take on a given ...
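For the single-predictor case, the ordinary least squares line mentioned above has a closed form: the slope is the sum of cross-deviations divided by the sum of squared deviations of the predictor, and the intercept makes the line pass through the point of means. The sketch below assumes that one-predictor setting; the function name `fit_line` and the data are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept (one predictor)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equally long sequences with at least 2 points")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of x, and sum of cross-deviations of x and y.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x  # the line passes through the means
    return slope, intercept


# Hypothetical noisy observations scattered around a straight line.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.1]
slope, intercept = fit_line(xs, ys)
residual_ss = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
print(slope, intercept, residual_ss)
```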

Mean

There are several kinds of mean in mathematics, especially in statistics. Each mean serves to summarize a given group of data, often to better understand the overall value (magnitude and sign) of a given data set. For a data set, the ''arithmetic mean'', also known as "arithmetic average", is a measure of central tendency of a finite set of numbers: specifically, the sum of the values divided by the number of values. The arithmetic mean of a set of numbers x_1, x_2, \ldots, x_n is typically denoted using an overhead bar, \bar{x}. If the data set were based on a series of observations obtained by sampling from a statistical population, the arithmetic mean is called the ''sample mean'' (\bar{x}) to distinguish it from the mean, or expected value, of the underlying distribution, the ''population mean'' (denoted \mu or \mu_x) (Underhill, L.G.; Bradfield, D. (1998), ''Introstat'', Juta and Company Ltd., p. 181). Outside probability and statistics, a wide range of other notions of mean are o ...

Standard Error (statistics)

The standard error (SE) of a statistic (usually an estimate of a parameter) is the standard deviation of its sampling distribution or an estimate of that standard deviation. If the statistic is the sample mean, it is called the standard error of the mean (SEM). The sampling distribution of a mean is generated by repeated sampling from the same population and recording of the sample means obtained. This forms a distribution of different means, and this distribution has its own mean and variance. Mathematically, the variance of the sampling mean distribution obtained is equal to the variance of the population divided by the sample size. This is because as the sample size increases, sample means cluster more closely around the population mean. Therefore, the relationship between the standard error of the mean and the standard deviation is such that, for a given sample size, the standard error of the mean equals the standard deviation divided by the square root of the sample size. I ...
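The relationship stated above, that the standard error of the mean equals the standard deviation divided by the square root of the sample size, can be written directly in code. The sketch below estimates the population standard deviation with the sample standard deviation (`statistics.stdev`), which is an assumption of this example; the function name and the data are hypothetical.

```python
import math
import statistics


def standard_error_of_mean(sample):
    """Estimated SEM: sample standard deviation divided by sqrt(sample size)."""
    n = len(sample)
    if n < 2:
        raise ValueError("at least two observations are needed")
    return statistics.stdev(sample) / math.sqrt(n)


# Hypothetical measurements, made up for illustration.
sample = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
print("mean:", statistics.mean(sample))
print("SEM: ", standard_error_of_mean(sample))
```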

Proprietary Software

Proprietary software is software that is deemed within the free and open-source software community to be non-free because its creator, publisher, or other rightsholder or rightsholder partner exercises a legal monopoly afforded by modern copyright and intellectual property law to exclude the recipient from freely sharing the software or modifying it, and in some cases (as with some patent-encumbered and EULA-bound software) from making use of the software on their own, thereby restricting their freedoms. It is often contrasted with open-source or free software. For this reason, it is also known as non-free software or closed-source software.

Types

Origin

Until the late 1960s, computers (large and expensive mainframe computers, machines in specially air-conditioned computer rooms) were usually leased to customers rather than sold. Service and all software available were usually supplied by manufacturers without separate charge until 1969. Computer vendors ...

Correlation

In statistics, correlation or dependence is any statistical relationship, whether causal or not, between two random variables or bivariate data. Although in the broadest sense, "correlation" may indicate any type of association, in statistics it usually refers to the degree to which a pair of variables are ''linearly'' related. Familiar examples of dependent phenomena include the correlation between the height of parents and their offspring, and the correlation between the price of a good and the quantity the consumers are willing to purchase, as it is depicted in the so-called demand curve. Correlations are useful because they can indicate a predictive relationship that can be exploited in practice. For example, an electrical utility may produce less power on a mild day based on the correlation between electricity demand and weather. In this example, there is a causal relationship, because extreme weather causes people to use more electricity for heating or cooling. However ...
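The degree of linear relationship described above is commonly measured with the Pearson correlation coefficient, which is one measure among several and is chosen here as an assumption of the example. The sketch computes it from deviations about the means; the function name `pearson_r` and the temperature and demand figures are made up for illustration.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equally long sequences with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical daily high temperatures (deg C) and electricity demand (MWh).
temperature = [24.0, 27.0, 30.0, 33.0, 36.0]
demand = [410.0, 455.0, 520.0, 570.0, 640.0]
print(pearson_r(temperature, demand))
```

A value near 1 or -1 indicates a strong linear relationship, and a value near 0 indicates little linear relationship; the coefficient by itself says nothing about whether the relationship is causal.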