
Rule Of Succession

In probability theory, the rule of succession is a formula introduced in the 18th century by Pierre-Simon Laplace in the course of treating the sunrise problem. The formula is still used, particularly to estimate underlying probabilities when there are few observations or for events that have not been observed to occur at all in (finite) sample data. Statement of the rule of succession: If we repeat an experiment that we know can result in a success or failure ''n'' times independently, and get ''s'' successes and ''n − s'' failures, then what is the probability that the next repetition will succeed? More abstractly: if X_1, ..., X_{n+1} are conditionally independent random variables that each can assume the value 0 or 1, then, if we know nothing more about them, P(X_{n+1}=1 \mid X_1+\cdots+X_n=s) = \frac{s+1}{n+2}. Interpretation: Since we have the prior knowledge that we are looking at an experiment for which both success and failure are possible, our estimate is as if we had obse ...
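As a small illustration of the formula (s + 1) / (n + 2), the following Python sketch evaluates the rule for a few cases. The function name `rule_of_succession` is a hypothetical helper introduced here, not part of any library.

```python
from fractions import Fraction


def rule_of_succession(s: int, n: int) -> Fraction:
    """Estimate P(next trial succeeds) as (s + 1) / (n + 2).

    s is the number of observed successes, n the number of trials.
    """
    if not 0 <= s <= n:
        raise ValueError("need 0 <= s <= n")
    return Fraction(s + 1, n + 2)


print(rule_of_succession(0, 0))    # no observations yet: 1/2
print(rule_of_succession(0, 10))   # ten trials, no success: 1/12
print(rule_of_succession(10, 10))  # ten trials, all successes: 11/12
```

Note that the estimate is never exactly 0 or 1, even when every observed trial had the same outcome.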

Probability Theory

Probability theory is the branch of mathematics concerned with probability. Although there are several different probability interpretations, probability theory treats the concept in a rigorous mathematical manner by expressing it through a set of axioms. Typically these axioms formalise probability in terms of a probability space, which assigns a measure taking values between 0 and 1, termed the probability measure, to a set of outcomes called the sample space. Any specified subset of the sample space is called an event. Central subjects in probability theory include discrete and continuous random variables, probability distributions, and stochastic processes (which provide mathematical abstractions of non-deterministic or uncertain processes or measured quantities that may either be single occurrences or evolve over time in a random fashion). Although it is not possible to perfectly predict random events, much can be said about their behavior. Two major results in probability ...

Beta Function

In mathematics, the beta function, also called the Euler integral of the first kind, is a special function that is closely related to the gamma function and to binomial coefficients. It is defined by the integral \Beta(z_1,z_2) = \int_0^1 t^{z_1-1}(1-t)^{z_2-1}\,dt for complex number inputs z_1, z_2 such that \Re(z_1), \Re(z_2)>0. The beta function was studied by Leonhard Euler and Adrien-Marie Legendre and was given its name by Jacques Binet; its symbol is a Greek capital beta. Properties: The beta function is symmetric, meaning that \Beta(z_1,z_2) = \Beta(z_2,z_1) for all inputs z_1 and z_2 (Davis 1972, 6.2.2, p. 258). A key property of the beta function is its close relationship to the gamma function: \Beta(z_1,z_2)=\frac{\Gamma(z_1)\,\Gamma(z_2)}{\Gamma(z_1+z_2)}. The beta function is also closely related to binomial coefficients. When m and n are positive integers, it follows from the definition of the gamma function that (Davis 1972, 6.2.1, p. 258) \Beta(m,n) = \dfrac{(m-1)!\,(n-1)!}{(m+n-1)!} = ...
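The relationship between the integral definition and the gamma function can be checked numerically for real inputs. The sketch below uses only the Python standard library; the helper names `beta_via_gamma` and `beta_via_integral` are hypothetical, and the integral is approximated with a simple midpoint rule, so it is an illustration rather than a production-quality routine.

```python
import math


def beta_via_gamma(z1: float, z2: float) -> float:
    """Beta function from the identity B(z1, z2) = G(z1) G(z2) / G(z1 + z2)."""
    return math.gamma(z1) * math.gamma(z2) / math.gamma(z1 + z2)


def beta_via_integral(z1: float, z2: float, steps: int = 100_000) -> float:
    """Midpoint-rule approximation of the integral of t^(z1-1) (1-t)^(z2-1) on [0, 1]."""
    h = 1.0 / steps
    total = 0.0
    for i in range(steps):
        t = (i + 0.5) * h  # midpoint of the i-th subinterval
        total += t ** (z1 - 1) * (1 - t) ** (z2 - 1)
    return total * h


# For positive integers, B(2, 3) = 1! * 2! / 4! = 1/12.
print(beta_via_gamma(2, 3), beta_via_integral(2, 3))
```

The midpoint rule is adequate here because the integrand is smooth when both arguments are at least 1; for arguments below 1 the integrand is singular at an endpoint and a more careful method would be needed.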

Bayes Rule

In probability theory and statistics, Bayes' theorem (alternatively Bayes' law or Bayes' rule), named after Thomas Bayes, describes the probability of an event, based on prior knowledge of conditions that might be related to the event. For example, if the risk of developing health problems is known to increase with age, Bayes' theorem allows the risk to an individual of a known age to be assessed more accurately (by conditioning it on their age) than simply assuming that the individual is typical of the population as a whole. One of the many applications of Bayes' theorem is Bayesian inference, a particular approach to statistical inference. When applied, the probabilities involved in the theorem may have different probability interpretations. With Bayesian probability interpretation, the theorem expresses how a degree of belief, expressed as a probability, should rationally change to account for the availability of related evidence. Bayesian inference is fundamental to Bayesia ...
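For a binary hypothesis H and evidence E, Bayes' theorem reads P(H | E) = P(E | H) P(H) / P(E). The following sketch applies it to made-up numbers; the function `posterior` and all probabilities in the example are assumptions chosen for illustration only.

```python
def posterior(prior: float, p_e_given_h: float, p_e_given_not_h: float) -> float:
    """Return P(H | E) for a binary hypothesis H via Bayes' theorem."""
    # Total probability of the evidence, summed over H and not-H.
    evidence = p_e_given_h * prior + p_e_given_not_h * (1.0 - prior)
    return p_e_given_h * prior / evidence


# Hypothetical numbers: a condition with 1% prevalence, a test that
# detects it 90% of the time and gives a false positive 5% of the time.
p = posterior(prior=0.01, p_e_given_h=0.90, p_e_given_not_h=0.05)
print(round(p, 4))  # 0.1538
```

With these illustrative inputs, a positive result raises the probability from 1% to roughly 15%, which shows how strongly a low prior tempers the evidence.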

Cromwell's Rule

Cromwell's rule, named by statistician Dennis Lindley, states that the use of prior probabilities of 1 ("the event will definitely occur") or 0 ("the event will definitely not occur") should be avoided, except when applied to statements that are logically true or false, such as 2+2 equaling 4 or 5. The reference is to Oliver Cromwell, who wrote to the General Assembly of the Church of Scotland on 3 August 1650, shortly before the Battle of Dunbar, including a phrase that has become well known and frequently quoted: "I beseech you, in the bowels of Christ, think it possible that you may be mistaken." As Lindley puts it, assigning a probability should "leave a little probability for the moon being made of green cheese; it can be as small as 1 in a million, but have it there since otherwise an army of astronauts returning with samples of the said cheese will leave you unmoved." Similarly, in assessing the likelihood that tossing a coin will result in either a head or a tail facing upwards, there is a possibility, albeit remote, that the coin will land on its edge a ...
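The practical reason for the rule can be seen by running repeated Bayesian updates: a prior of exactly 0 never moves, whatever the evidence, while a tiny nonzero prior can be overturned. The sketch below is a minimal illustration; the function `update` and the likelihood values are hypothetical.

```python
def update(prior: float, p_e_given_h: float, p_e_given_not_h: float) -> float:
    """One Bayesian update of P(H) after observing evidence E."""
    evidence = p_e_given_h * prior + p_e_given_not_h * (1.0 - prior)
    return p_e_given_h * prior / evidence


def update_repeatedly(prior: float, rounds: int) -> float:
    """Apply the same strong evidence for H several times in a row."""
    p = prior
    for _ in range(rounds):
        # Assumed likelihoods: E is 99 times more probable under H.
        p = update(p, p_e_given_h=0.99, p_e_given_not_h=0.01)
    return p


print(update_repeatedly(0.0, 5))   # stays at 0.0 regardless of evidence
print(update_repeatedly(1e-6, 5))  # a one-in-a-million prior ends close to 1
```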

Laplace

Pierre-Simon, marquis de Laplace (23 March 1749 – 5 March 1827) was a French scholar and polymath whose work was important to the development of engineering, mathematics, statistics, physics, astronomy, and philosophy. He summarized and extended the work of his predecessors in his five-volume ''Mécanique céleste'' (''Celestial Mechanics'') (1799–1825). This work translated the geometric study of classical mechanics to one based on calculus, opening up a broader range of problems. In statistics, the Bayesian interpretation of probability was developed mainly by Laplace. Laplace formulated Laplace's equation, and pioneered the Laplace transform which appears in many branches of mathematical physics, a field that he took a leading role in forming. The Laplacian differential operator, widely used in mathematics, is also named after him. He restated and developed the nebular hypothesis of the origin of the Solar System and was one of the first scientists to suggest ...

Conjugate Prior

In Bayesian probability theory, if the posterior distribution p(\theta \mid x) is in the same probability distribution family as the prior probability distribution p(\theta), the prior and posterior are then called conjugate distributions, and the prior is called a conjugate prior for the likelihood function p(x \mid \theta). A conjugate prior is an algebraic convenience, giving a closed-form expression for the posterior; otherwise, numerical integration may be necessary. Further, conjugate priors may give intuition by more transparently showing how a likelihood function updates a prior distribution. The concept, as well as the term "conjugate prior", were introduced by Howard Raiffa and Robert Schlaifer in their work on Bayesian decision theory (Howard Raiffa and Robert Schlaifer, ''Applied Statistical Decision Theory'', Division of Research, Graduate School of Business Administration, Harvard University, 1961). A similar concept had been discovered independently by George Alfred ...
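A standard example of conjugacy is the beta prior for a Bernoulli likelihood: a Beta(a, b) prior combined with observed successes and failures gives a Beta(a + successes, b + failures) posterior. The sketch below assumes integer parameters so that exact fractions can be used; the helper names are hypothetical.

```python
from fractions import Fraction


def beta_bernoulli_update(a: int, b: int, successes: int, failures: int) -> tuple[int, int]:
    """Posterior Beta parameters after observing Bernoulli outcomes."""
    return a + successes, b + failures


def beta_mean(a: int, b: int) -> Fraction:
    """Mean of a Beta(a, b) distribution, a / (a + b)."""
    return Fraction(a, a + b)


# Uniform prior Beta(1, 1), then 3 successes and 7 failures.
a_post, b_post = beta_bernoulli_update(1, 1, successes=3, failures=7)
print(a_post, b_post)             # 4 8
print(beta_mean(a_post, b_post))  # 1/3
```

With the uniform Beta(1, 1) prior, the posterior mean equals (s + 1) / (n + 2) for s successes in n trials, so no numerical integration is needed to obtain it.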

Dirichlet Distribution

In probability and statistics, the Dirichlet distribution (after Peter Gustav Lejeune Dirichlet), often denoted \operatorname{Dir}(\boldsymbol\alpha), is a family of continuous multivariate probability distributions parameterized by a vector \boldsymbol\alpha of positive reals. It is a multivariate generalization of the beta distribution (Chapter 49: Dirichlet and Inverted Dirichlet Distributions), hence its alternative name of multivariate beta distribution (MBD). Dirichlet distributions are commonly used as prior distributions in Bayesian statistics, and in fact, the Dirichlet distribution is the conjugate prior of the categorical distribution and multinomial distribution. The infinite-dimensional generalization of the Dirichlet distribution is the ''Dirichlet process''. Definitions. Probability density function: The Dirichlet distribution of order ''K'' ≥ 2 with parameters \alpha_1, ..., \alpha_K > 0 has a probability density function with respect to Lebesgue m ...
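Because the Dirichlet distribution is conjugate to the categorical and multinomial distributions, updating it with observed category counts only requires adding the counts to the parameter vector. The sketch below illustrates this with integer parameters and exact fractions; the function names and the example counts are assumptions made for illustration.

```python
from fractions import Fraction


def dirichlet_posterior(alpha: list[int], counts: list[int]) -> list[int]:
    """Posterior Dirichlet parameters: prior parameters plus observed counts."""
    if len(alpha) != len(counts):
        raise ValueError("alpha and counts must have the same length")
    return [a + c for a, c in zip(alpha, counts)]


def dirichlet_mean(alpha: list[int]) -> list[Fraction]:
    """Mean of Dir(alpha): each alpha_i divided by the sum of all alpha."""
    total = sum(alpha)
    return [Fraction(a, total) for a in alpha]


# Symmetric prior Dir(1, 1, 1) and hypothetical counts for three categories.
post = dirichlet_posterior([1, 1, 1], [5, 0, 2])
print(post)                  # [6, 1, 3]
print(dirichlet_mean(post))  # fractions 3/5, 1/10, 3/10
```

The category that was never observed still receives a positive posterior mean, which is the multi-category analogue of the rule of succession.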

Multinomial Distribution

In probability theory, the multinomial distribution is a generalization of the binomial distribution. For example, it models the probability of counts for each side of a ''k''-sided die rolled ''n'' times. For ''n'' independent trials each of which leads to a success for exactly one of ''k'' categories, with each category having a given fixed success probability, the multinomial distribution gives the probability of any particular combination of numbers of successes for the various categories. When ''k'' is 2 and ''n'' is 1, the multinomial distribution is the Bernoulli distribution. When ''k'' is 2 and ''n'' is bigger than 1, it is the binomial distribution. When ''k'' is bigger than 2 and ''n'' is 1, it is the categorical distribution. The term "multinoulli" is sometimes used for the categorical distribution to emphasize this four-way relationship (so ''n'' determines the prefix, and ''k'' the suffix). The Bernoulli distribution models the outcome of a single Bernoulli trial ...
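The probability the multinomial distribution assigns to counts x_1, ..., x_k with category probabilities p_1, ..., p_k is n! / (x_1! ... x_k!) times the product of p_i^{x_i}, where n is the sum of the counts. A minimal sketch of that formula follows; `multinomial_pmf` is a hypothetical helper name, and `math.prod` requires Python 3.8 or later.

```python
import math


def multinomial_pmf(counts: list[int], probs: list[float]) -> float:
    """Probability of observing the given counts in sum(counts) trials."""
    if len(counts) != len(probs):
        raise ValueError("counts and probs must have the same length")
    # Multinomial coefficient n! / (x_1! * ... * x_k!), kept as an exact integer.
    coefficient = math.factorial(sum(counts))
    for c in counts:
        coefficient //= math.factorial(c)
    return coefficient * math.prod(p ** c for p, c in zip(probs, counts))


# k = 2 reduces to the binomial case: one head and one tail in two fair flips.
print(multinomial_pmf([1, 1], [0.5, 0.5]))  # 0.5

# A fair six-sided die rolled six times showing every face exactly once.
print(multinomial_pmf([1] * 6, [1 / 6] * 6))
```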

Logarithm

In mathematics, the logarithm is the inverse function to exponentiation. That means the logarithm of a number x to the base b is the exponent to which b must be raised to produce x. For example, since 1000 = 10^3, the ''logarithm base'' 10 of 1000 is 3, or \log_{10}(1000) = 3. The logarithm of x to ''base'' b is denoted as \log_b(x), or without parentheses, \log_b x, or even without the explicit base, \log x, when no confusion is possible, or when the base does not matter such as in big O notation. The logarithm base 10 is called the decimal or common logarithm and is commonly used in science and engineering. The natural logarithm has the number e ≈ 2.718 as its base; its use is widespread in mathematics and physics, because of its very simple derivative. The binary logarithm uses base 2 and is frequently used in computer science. Logarithms were introduced by John Napier in 1614 as a means of simplifying calculations. They were rapidly adopted by navigators, scientists, engineers, surveyors and others to perform high-a ...
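The three common bases are all available in Python's standard `math` module. The sketch below shows the dedicated functions and a change-of-base helper; `log_base` is a hypothetical name introduced here.

```python
import math


def log_base(x: float, b: float) -> float:
    """Logarithm of x to base b via the change-of-base formula ln(x) / ln(b)."""
    return math.log(x) / math.log(b)


print(math.log10(1000))   # common logarithm: 10 raised to this gives 1000
print(math.log2(8))       # binary logarithm: 2 raised to this gives 8
print(math.log(math.e))   # natural logarithm of e
print(log_base(81, 3))    # 3 raised to this gives 81, up to rounding

# The logarithm undoes exponentiation, up to floating-point rounding.
print(math.isclose(10 ** math.log10(250.0), 250.0))
```

The dedicated `math.log10` and `math.log2` functions are usually more accurate than the change-of-base formula, which can be off by a rounding error in the last digit.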

Natural Logarithm

The natural logarithm of a number is its logarithm to the base of the mathematical constant e, which is an irrational and transcendental number approximately equal to 2.718281828459. The natural logarithm of x is generally written as \ln x, \log_e x, or sometimes, if the base e is implicit, simply \log x. Parentheses are sometimes added for clarity, giving \ln(x), \log_e(x), or \log(x). This is done particularly when the argument to the logarithm is not a single symbol, so as to prevent ambiguity. The natural logarithm of x is the power to which e would have to be raised to equal x. For example, \ln 7.5 is 2.0149..., because e^{2.0149...} = 7.5. The natural logarithm of e itself, \ln e, is 1, because e^1 = e, while the natural logarithm of 1 is 0, since e^0 = 1. The natural logarithm can be defined for any positive real number a as the area under the curve y = 1/x from 1 to a (with the area being negative when 0 < a < 1). The simplicity of this definition, which is matched in many other formulas involving the natural logarithm, leads to the term "natural". The definition of the natural logarithm can then b ...
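The area definition can be checked numerically by integrating 1/x from 1 to a and comparing with `math.log`. The sketch below uses a plain midpoint rule; the function name `ln_as_area` and the step count are assumptions made for this illustration.

```python
import math


def ln_as_area(a: float, steps: int = 100_000) -> float:
    """Approximate ln(a) as the signed area under y = 1/x from 1 to a."""
    if a <= 0:
        raise ValueError("a must be positive")
    h = (a - 1.0) / steps  # negative when 0 < a < 1, so the area is negative
    total = 0.0
    for i in range(steps):
        x = 1.0 + (i + 0.5) * h  # midpoint of the i-th subinterval
        total += 1.0 / x
    return total * h


for a in (7.5, math.e, 1.0, 0.5):
    print(a, ln_as_area(a), math.log(a))
```

Letting the step width carry the sign of a − 1 is what makes the area come out negative for 0 < a < 1 without any special case.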

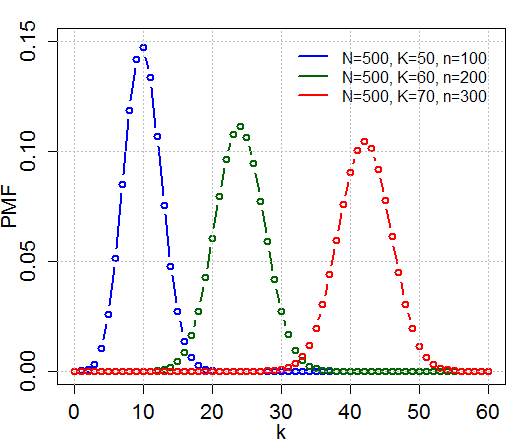

Hypergeometric Distribution

In probability theory and statistics, the hypergeometric distribution is a discrete probability distribution that describes the probability of k successes (random draws for which the object drawn has a specified feature) in n draws, ''without'' replacement, from a finite population of size N that contains exactly K objects with that feature, wherein each draw is either a success or a failure. In contrast, the binomial distribution describes the probability of k successes in n draws ''with'' replacement. Definitions. Probability mass function: The following conditions characterize the hypergeometric distribution:

* The result of each draw (the elements of the population being sampled) can be classified into one of two mutually exclusive categories (e.g. Pass/Fail or Employed/Unemployed).
* The probability of a success changes on each draw, as each draw decreases the population (''sampling without replacement'' from a finite population).

A random variable X follows the hyperg ...
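With the notation above (population size N, K objects with the feature, n draws, k successes), the standard probability mass function is C(K, k) C(N − K, n − k) / C(N, n). The following sketch evaluates it exactly with `math.comb`; the function name `hypergeom_pmf` and the card example are assumptions chosen for illustration.

```python
import math
from fractions import Fraction


def hypergeom_pmf(k: int, N: int, K: int, n: int) -> Fraction:
    """P(X = k): k successes in n draws without replacement."""
    # Outcomes that are impossible have probability zero.
    if k < 0 or k > n or k > K or n - k > N - K:
        return Fraction(0)
    return Fraction(math.comb(K, k) * math.comb(N - K, n - k), math.comb(N, n))


# Hypothetical example: number of aces in a 5-card hand from a 52-card deck.
for k in range(5):
    print(k, float(hypergeom_pmf(k, N=52, K=4, n=5)))
```

Using exact fractions makes it easy to confirm that the probabilities over all feasible k sum to exactly 1.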

Prior Probability

In Bayesian statistical inference, a prior probability distribution, often simply called the prior, of an uncertain quantity is the probability distribution that would express one's beliefs about this quantity before some evidence is taken into account. For example, the prior could be the probability distribution representing the relative proportions of voters who will vote for a particular politician in a future election. The unknown quantity may be a parameter of the model or a latent variable rather than an observable variable. Bayes' theorem calculates the renormalized pointwise product of the prior and the likelihood function, to produce the ''posterior probability distribution'', which is the conditional distribution of the uncertain quantity given the data. Similarly, the prior probability of a random event or an uncertain proposition is the unconditional probability that is assigned before any relevant evidence is taken into account. Priors can be created using a num ...
