
Rigid Motion Segmentation

In computer vision, rigid motion segmentation is the process of separating regions, features, or trajectories from a video sequence into coherent subsets of space and time. These subsets correspond to independent rigidly moving objects in the scene. The goal of this segmentation is to differentiate the meaningful rigid motion from the background, extract it, and analyze it. Image segmentation techniques label pixels as belonging to a group of pixels with certain characteristics at a particular time. Here, instead, pixels are segmented according to their relative movement over a period of time, i.e., the duration of the video sequence. A number of methods have been proposed to do so. There is no consistent way to classify motion segmentation methods because of the large variation in the literature. Depending on the segmentation criterion used, algorithms can be broadly classified into the following categories: image difference, statistical methods, wavelets, layering, optical flow, and factorization ...
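
Of the categories listed above, image difference is the simplest to illustrate. The sketch below is a minimal, hypothetical helper (`frame_difference_mask`, with an assumed intensity threshold of 25 for 8-bit frames): it only flags pixels whose brightness changed between two consecutive frames, and by itself it does not separate several independently moving rigid objects.

```python
import numpy as np

def frame_difference_mask(prev_frame, curr_frame, threshold=25):
    """Boolean mask of pixels whose intensity changed by more than `threshold`.

    Frames are assumed to be 2-D uint8 grayscale arrays of equal shape.
    """
    # Cast to a signed type first so the subtraction cannot wrap around in uint8
    diff = np.abs(curr_frame.astype(np.int16) - prev_frame.astype(np.int16))
    return diff > threshold
```

For a bright square that shifts one pixel to the right, the mask marks the column it left and the column it entered, but not the overlap, which is why difference-based methods are usually combined with other cues.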

Computer Vision

Computer vision is an interdisciplinary scientific field that deals with how computers can gain high-level understanding from digital images or videos. From the perspective of engineering, it seeks to understand and automate tasks that the human visual system can do. Computer vision tasks include methods for acquiring, processing, analyzing and understanding digital images, and the extraction of high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the form of decisions. Understanding in this context means the transformation of visual images (the input of the retina) into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory. The scien ...

Thresholding (Image Processing)

In digital image processing, thresholding is the simplest method of segmenting images. From a grayscale image, thresholding can be used to create binary images.

Definition

The simplest thresholding methods replace each pixel in an image with a black pixel if the image intensity $I_{i,j}$ is less than a fixed value called the threshold $T$, or a white pixel if the pixel intensity is greater than that threshold. In the example image on the right, this results in the dark tree becoming completely black, and the bright snow becoming completely white.

Automatic thresholding

While in some cases the threshold $T$ can be selected manually by the user, there are many cases where the user wants the threshold to be set automatically by an algorithm. In those cases, the threshold should be the "best" threshold in the sense that the partition of the pixels above and below the threshold should match as closely as possible the actual partition between the two classes of objects represented by those ...
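
A minimal sketch of both ideas for 8-bit grayscale images, assuming numpy arrays. `threshold` applies a fixed $T$ (pixels equal to $T$ are sent to white, a choice the definition leaves open). For the automatic case, `otsu_threshold` uses Otsu's method, one well-known criterion that the excerpt does not name: it picks the split that maximizes the between-class variance of the two pixel groups. Both function names are illustrative.

```python
import numpy as np

def threshold(image, t):
    """Binary image: 0 (black) where intensity < t, 255 (white) otherwise."""
    return np.where(image < t, 0, 255).astype(np.uint8)

def otsu_threshold(image):
    """Otsu's automatic threshold for a uint8 image, returned as T for `threshold`."""
    counts = np.bincount(image.ravel(), minlength=256).astype(np.float64)
    levels = np.arange(256, dtype=np.float64)
    n = counts.sum()
    w0 = np.cumsum(counts)           # number of pixels with intensity <= k
    w1 = n - w0                      # number of pixels with intensity > k
    s0 = np.cumsum(counts * levels)  # intensity sum of the dark class
    total = s0[-1]
    valid = (w0 > 0) & (w1 > 0)      # both classes must be non-empty
    between = np.zeros(256)
    # Between-class variance up to a constant factor: (total*w0 - n*s0)^2 / (w0*w1)
    between[valid] = (total * w0[valid] - n * s0[valid]) ** 2 / (w0[valid] * w1[valid])
    k = int(np.argmax(between))      # dark class is {0..k}
    return k + 1                     # so "intensity < T" selects exactly that class
```

For an image containing only the intensities 10 and 200, every split between them is equally good and `otsu_threshold` returns the first one, 11. A single-intensity image has no valid split and yields 1.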

RANSAC

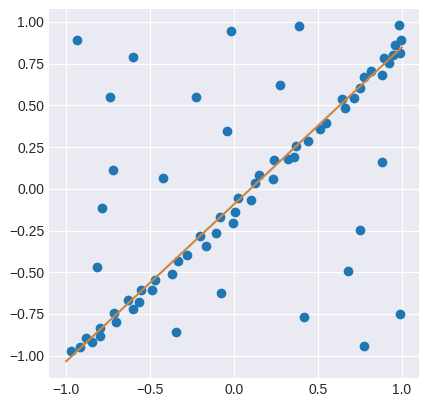

Random sample consensus (RANSAC) is an iterative method to estimate the parameters of a mathematical model from a set of observed data that contains outliers, when the outliers are to be accorded no influence on the values of the estimates. Therefore, it can also be interpreted as an outlier detection method. It is a non-deterministic algorithm in the sense that it produces a reasonable result only with a certain probability, and this probability increases as more iterations are allowed. The algorithm was first published by Fischler and Bolles at SRI International in 1981. They used RANSAC to solve the Location Determination Problem (LDP), where the goal is to determine the points in space that project onto an image into a set of landmarks with known locations. RANSAC uses repeated random sub-sampling. A basic assumption is that the data consists of "inliers", i.e., data whose distribution can be explained by some set of model parameters, though they may be subject to noise, and "outlier ...
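
The repeated random sub-sampling described above can be sketched for the simplest model, a 2-D line. This is a minimal illustration, not Fischler and Bolles' original formulation: the helper names, the fixed iteration count, the inlier tolerance, and the final least-squares refit on the consensus set are all assumptions. `ransac_iterations` uses the standard bound $k = \log(1-p) / \log(1-w^s)$ for success probability $p$, inlier ratio $w$ and sample size $s$.

```python
import math
import numpy as np

def ransac_iterations(p_success, inlier_ratio, sample_size):
    """Iterations needed so that, with probability p_success, at least one
    random sample of `sample_size` points contains only inliers."""
    return math.ceil(math.log(1 - p_success) / math.log(1 - inlier_ratio ** sample_size))

def ransac_line(points, n_iters=200, inlier_tol=0.1, seed=0):
    """Fit y = slope*x + intercept to an (N, 2) array that contains outliers."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(points), dtype=bool)
    for _ in range(n_iters):
        # Minimal sample: two distinct points define a candidate line
        i, j = rng.choice(len(points), size=2, replace=False)
        direction = points[j] - points[i]
        length = np.hypot(*direction)
        if length == 0:
            continue  # degenerate sample: both points coincide
        normal = np.array([-direction[1], direction[0]]) / length
        # Perpendicular distance of every point to the candidate line
        distances = np.abs((points - points[i]) @ normal)
        inliers = distances < inlier_tol
        if inliers.sum() > best.sum():  # keep the largest consensus set
            best = inliers
    # Refit on the consensus set only, so outliers have no influence
    slope, intercept = np.polyfit(points[best, 0], points[best, 1], 1)
    return slope, intercept, best
```

With half of the data being outliers and two points per sample, `ransac_iterations(0.99, 0.5, 2)` gives 17, which shows why cheap minimal models make RANSAC practical.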

Homography

In projective geometry, a homography is an isomorphism of projective spaces, induced by an isomorphism of the vector spaces from which the projective spaces derive. It is a bijection that maps lines to lines, and thus a collineation. In general, some collineations are not homographies, but the fundamental theorem of projective geometry asserts that this is not so in the case of real projective spaces of dimension at least two. Synonyms include projectivity, projective transformation, and projective collineation. Historically, homographies (and projective spaces) were introduced to study perspective and projections in Euclidean geometry, and the term "homography", which etymologically roughly means "similar drawing", dates from this time. At the end of the 19th century, formal definitions of projective spaces were introduced, which differed from extending Euclidean or affine spaces by adding points at infinity. The term "projective transformation" originated in these abs ...
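
In computer vision the case of interest is the projective plane, where a homography is represented by an invertible 3×3 matrix $H$ defined up to scale and acts on homogeneous coordinates. The sketch below assumes that setting. `apply_homography` maps points, and `estimate_homography_dlt` recovers $H$ from four or more correspondences with the direct linear transform (DLT), a standard technique not described in the excerpt; both names are illustrative.

```python
import numpy as np

def apply_homography(H, pts):
    """Map (N, 2) points through H using homogeneous coordinates."""
    homogeneous = np.column_stack([pts, np.ones(len(pts))])
    mapped = homogeneous @ H.T
    return mapped[:, :2] / mapped[:, 2:3]  # back to inhomogeneous coordinates

def estimate_homography_dlt(src, dst):
    """Estimate H (normalized so H[2, 2] == 1) with dst ~ H @ src, from >= 4 pairs."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence gives two linear equations in the 9 entries of H
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    A = np.array(rows, dtype=float)
    # The solution is the right singular vector of the smallest singular value
    _, _, Vt = np.linalg.svd(A)
    H = Vt[-1].reshape(3, 3)
    return H / H[2, 2]  # remove the arbitrary projective scale
```

Because $H$ is only defined up to scale, the estimate is normalized by its bottom-right entry; this assumes that entry is non-zero, which holds when the origin does not map to a point at infinity.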

Epipolar Geometry

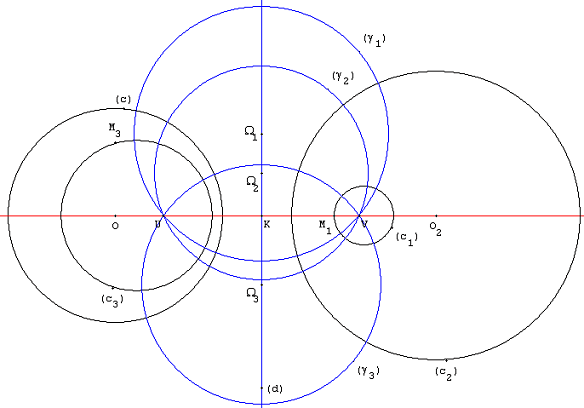

Epipolar geometry is the geometry of stereo vision. When two cameras view a 3D scene from two distinct positions, there are a number of geometric relations between the 3D points and their projections onto the 2D images that lead to constraints between the image points. These relations are derived based on the assumption that the cameras can be approximated by the pinhole camera model.

Definitions

The figure below depicts two pinhole cameras looking at point X. In real cameras, the image plane is actually behind the focal center, and produces an image that is symmetric about the focal center of the lens. Here, however, the problem is simplified by placing a virtual image plane in front of the focal center (i.e., the optical center) of each camera lens to produce an image not transformed by the symmetry. $O_L$ and $O_R$ represent the centers of symmetry of the two cameras' lenses. X represents the point of interest in both cameras. Points $x_L$ and $x_R$ are the projections of point X onto ...
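
The constraint between image points can be checked numerically with two pinhole cameras that project onto a virtual image plane at unit distance. The sketch assumes calibrated (normalized) image coordinates and an arbitrary relative pose in which a left-camera point $X_L$ has right-camera coordinates $X_R = R X_L + t$. Under those assumptions the constraint takes the form $x_R^\top E\, x_L = 0$ with $E = [t]_\times R$ (the essential matrix, not named in the excerpt). The pose values and helper names are illustrative.

```python
import numpy as np

def skew(t):
    """Matrix [t]_x such that skew(t) @ v equals np.cross(t, v)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])

def rotation_y(angle):
    """Rotation about the camera's vertical (y) axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

def project(points_cam):
    """Pinhole projection onto the virtual image plane Z = 1 (homogeneous result)."""
    return points_cam / points_cam[:, 2:3]

# Assumed relative pose of the right camera with respect to the left one
R = rotation_y(0.1)
t = np.array([1.0, 0.0, 0.1])
E = skew(t) @ R

# Random scene points in front of both cameras, seen from each optical center
rng = np.random.default_rng(1)
X_L = rng.uniform([-1.0, -1.0, 4.0], [1.0, 1.0, 8.0], size=(10, 3))
X_R = X_L @ R.T + t
x_L, x_R = project(X_L), project(X_R)

# Row n of epipolar_lines is the line E @ x_L[n] in the right image;
# the corresponding x_R[n] must lie on it, so every residual is ~0
epipolar_lines = x_L @ E.T
residuals = np.sum(epipolar_lines * x_R, axis=1)
```

The residuals vanish because $X_R = R X_L + t$ is always orthogonal to $t \times R X_L$: the two optical centers and X span a single plane.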

Outlier

In statistics, an outlier is a data point that differs significantly from other observations. An outlier may be due to variability in the measurement, an indication of novel data, or the result of experimental error; the latter are sometimes excluded from the data set. An outlier can be an indication of an exciting possibility, but can also cause serious problems in statistical analyses. Outliers can occur by chance in any distribution, but they can indicate novel behaviour or structures in the data set, measurement error, or that the population has a heavy-tailed distribution. In the case of measurement error, one wishes to discard them or use statistics that are robust to outliers, while in the case of heavy-tailed distributions, they indicate that the distribution has high skewness and that one should be very cautious in using tools or intuitions that assume a normal distribution. A frequent cause of outliers is a mixture of two distributions, which may be two dist ...
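
As an example of statistics that are robust to outliers, the sketch below flags points using the median and the median absolute deviation (MAD) instead of the mean and standard deviation. The "modified z-score" scaling constant 0.6745 and the cutoff 3.5 are a common rule of thumb, assumed here rather than taken from the text; the function names are illustrative.

```python
import numpy as np

def modified_z_scores(x):
    """Robust z-scores based on the median and the median absolute deviation."""
    x = np.asarray(x, dtype=float)
    med = np.median(x)
    mad = np.median(np.abs(x - med))
    if mad == 0:
        raise ValueError("MAD is zero; modified z-scores are undefined")
    # 0.6745 makes the MAD comparable to a standard deviation for normal data
    return 0.6745 * (x - med) / mad

def flag_outliers(x, cutoff=3.5):
    """Boolean mask of points whose modified z-score exceeds `cutoff`."""
    return np.abs(modified_z_scores(x)) > cutoff
```

For `[10, 11, 9, 10, 12, 10, 95]` only 95 is flagged. The mean of that data (about 22.4) is pulled far from the typical value, while the median stays at 10, which is the sense in which median-based statistics are robust.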

Image Noise Reduction

Noise reduction is the process of removing noise from a signal. Noise reduction techniques exist for audio and images. Noise reduction algorithms may distort the signal to some degree. Noise rejection is the ability of a circuit to isolate an undesired signal component from the desired signal component, as with the common-mode rejection ratio. All signal processing devices, both analog and digital, have traits that make them susceptible to noise. Noise can be random with an even frequency distribution (white noise), or frequency-dependent noise introduced by a device's mechanism or signal processing algorithms. In electronic systems, a major type of noise is "hiss" created by random electron motion due to thermal agitation. These agitated electrons rapidly add to and subtract from the output signal and thus create detectable noise. In the case of photographic film and magnetic tape, noise (both visible and audible) is introduced due to the grain structure of the medium. In photogra ...
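
For images, one widely used noise-reduction filter (not named in the excerpt) is the median filter, which replaces each pixel by the median of its neighbourhood. It is particularly effective against isolated impulse ("salt-and-pepper") noise while preserving edges better than plain averaging. The sketch assumes a 2-D grayscale numpy array and a fixed 3×3 window with replicated borders; `median_filter_3x3` is a hypothetical name.

```python
import numpy as np

def median_filter_3x3(image):
    """Replace each pixel by the median of its 3x3 neighbourhood (edges replicated)."""
    padded = np.pad(image, 1, mode="edge")
    h, w = image.shape
    # Nine shifted views of the padded image, one per neighbourhood position
    windows = [padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)]
    return np.median(np.stack(windows), axis=0).astype(image.dtype)
```

A single bright impulse in a flat region is removed completely, since it is only one of nine values in every window that contains it. Like any such filter, it can also distort fine detail, in line with the caveat above.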

Singular Value Decomposition

In linear algebra, the singular value decomposition (SVD) is a factorization of a real or complex matrix. It generalizes the eigendecomposition of a square normal matrix with an orthonormal eigenbasis to any $m \times n$ matrix. It is related to the polar decomposition. Specifically, the singular value decomposition of an $m \times n$ complex matrix $\mathbf{M}$ is a factorization of the form $\mathbf{M} = \mathbf{U}\mathbf{\Sigma}\mathbf{V}^*$, where $\mathbf{U}$ is an $m \times m$ complex unitary matrix, $\mathbf{\Sigma}$ is an $m \times n$ rectangular diagonal matrix with non-negative real numbers on the diagonal, $\mathbf{V}$ is an $n \times n$ complex unitary matrix, and $\mathbf{V}^*$ is the conjugate transpose of $\mathbf{V}$. Such a decomposition always exists for any complex matrix. If $\mathbf{M}$ is real, then $\mathbf{U}$ and $\mathbf{V}$ can be guaranteed to be real orthogonal matrices; in such contexts, the SVD is often denoted $\mathbf{U}\mathbf{\Sigma}\mathbf{V}^\mathsf{T}$. The diagonal entries $\sigma_i = \Sigma_{ii}$ of $\mathbf{\Sigma}$ are uniquely determined by $\mathbf{M}$ and are known as the singular values ...
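
The factorization can be checked directly with NumPy's `numpy.linalg.svd`, which returns $\mathbf{U}$, the singular values as a 1-D array, and $\mathbf{V}^\mathsf{T}$ (already transposed, named `Vt` below). The sketch rebuilds the rectangular diagonal $\mathbf{\Sigma}$ for a random real $5 \times 3$ matrix and adds a truncated SVD, the best rank-$k$ approximation (Eckart–Young theorem), because that is the property factorization methods in vision rely on. The matrix and the helper name `truncated_svd` are illustrative.

```python
import numpy as np

def truncated_svd(M, k):
    """Best rank-k approximation of M in the Frobenius and spectral norms."""
    U, s, Vt = np.linalg.svd(M, full_matrices=False)
    return U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]

rng = np.random.default_rng(0)
M = rng.standard_normal((5, 3))       # real m x n matrix with m = 5, n = 3

U, s, Vt = np.linalg.svd(M)           # full SVD: U is 5x5, Vt is 3x3
Sigma = np.zeros((5, 3))
Sigma[:3, :3] = np.diag(s)            # rectangular diagonal m x n matrix

reconstructed = U @ Sigma @ Vt        # equals M up to rounding error
```

Because `M` is real, `U` and `Vt` are real orthogonal matrices, and the error of the rank-1 approximation in the Frobenius norm equals the root sum of squares of the discarded singular values.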

Tomasi–Kanade Factorization

The Tomasi–Kanade factorization is the seminal work of Carlo Tomasi and Takeo Kanade in the early 1990s. It charted out an elegant and simple solution, based on an SVD factorization scheme, for analysing image measurements of a rigid object captured from different views using a weak perspective camera model. The crucial observation made by the authors was that if all the measurements (i.e., the image coordinates of all the points in all the views) are collected in a single matrix, the point trajectories reside in a certain subspace. The dimension of the subspace in which the image data resides is a direct consequence of two factors:

1. The type of camera that projects the scene (for example, affine or perspective).
2. The nature of the inspected object (for instance, rigid or non-rigid).

The low dimensionality of the subspace is mirrored (captured) trivially as a reduced rank of the measurement matrix. This reduced rank of the measurement matrix can be motivated from the fact that the po ...
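
The rank argument can be sketched for the affine case (weak perspective is a special case of an affine camera). Stack the image coordinates of P points over F frames into a $2F \times P$ matrix $W$ and subtract each row's mean. For a rigid object the centered matrix is then a product of a $2F \times 3$ motion matrix and a $3 \times P$ shape matrix, so it has rank at most 3, and a truncated SVD recovers both factors. This is a minimal sketch under those assumptions. The factors are only determined up to an invertible 3×3 matrix, and the metric upgrade step of the full method is not implemented; `affine_factorization` is a hypothetical name.

```python
import numpy as np

def affine_factorization(W):
    """Rank-3 factorization of a 2F x P measurement matrix (affine camera, rigid object).

    Returns motion (2F x 3), shape (3 x P) and the subtracted row centroids, so that
    motion @ shape + centroid approximates W.
    """
    # Removing each row's mean eliminates the per-frame image translation
    centroid = W.mean(axis=1, keepdims=True)
    W_centered = W - centroid
    U, s, Vt = np.linalg.svd(W_centered, full_matrices=False)
    # Keep the three dominant singular triplets and split sqrt(s) between the factors
    root = np.sqrt(s[:3])
    motion = U[:, :3] * root          # scales the columns of U
    shape = root[:, None] * Vt[:3]    # scales the rows of Vt
    return motion, shape, centroid
```

With noise-free synthetic data (random affine cameras viewing random 3-D points), the fourth singular value of the centered matrix is zero up to rounding, which is the reduced rank the text refers to. With real measurements, the rank-3 truncation acts as a least-squares denoising step.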

Stereo Vision

Stereopsis is the component of depth perception retrieved through binocular vision. Stereopsis is not the only contributor to depth perception, but it is a major one. Binocular vision happens because each eye receives a different image, since the two eyes are in slightly different positions on one’s head (left and right). These positional differences are referred to as "horizontal disparities" or, more generally, "binocular disparities". Disparities are processed in the visual cortex of the brain to yield depth perception. While binocular disparities are naturally present when viewing a real three-dimensional scene with two eyes, they can also be simulated by artificially presenting two different images separately to each eye using a method called stereoscopy. The perception of depth in such cases is also referred to as "stereoscopic depth". The perception of depth and three-dimensional structure is, however, possible with information visible from one eye alone, such as diffe ...
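
The paragraph describes biological stereopsis. In machine stereo vision, the analogous step from horizontal disparity to depth has a closed form under a simplifying assumption: a rectified pair of identical pinhole cameras with focal length $f$ (in pixels) and baseline $B$ gives depth $Z = fB/d$ for disparity $d$. The sketch below assumes that setup; it is not a model of how the visual cortex computes depth, and `depth_from_disparity` is a hypothetical name.

```python
import numpy as np

def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth Z = f * B / d for a rectified stereo pair; zero disparity maps to infinity."""
    d = np.asarray(disparity_px, dtype=float)
    depth = np.full(d.shape, np.inf)
    valid = d > 0  # zero disparity corresponds to a point at infinity
    depth[valid] = focal_px * baseline_m / d[valid]
    return depth
```

With an assumed 700-pixel focal length and a 0.12 m baseline, disparities of 42 and 84 pixels correspond to depths of 2 m and 1 m. Depth is inversely proportional to disparity, so distant points, having small disparities, are measured much less precisely.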

Fundamental Matrix (Computer Vision)

In computer vision, the fundamental matrix $\mathbf{F}$ is a 3×3 matrix which relates corresponding points in stereo images. In epipolar geometry, with homogeneous image coordinates $\mathbf{x}$ and $\mathbf{x}'$ of corresponding points in a stereo image pair, $\mathbf{F}\mathbf{x}$ describes a line (an epipolar line) on which the corresponding point $\mathbf{x}'$ in the other image must lie. That means that, for all pairs of corresponding points,

$$\mathbf{x}'^{\top} \mathbf{F} \mathbf{x} = 0.$$

Being of rank two and determined only up to scale, the fundamental matrix can be estimated given at least seven point correspondences. Its seven parameters represent the only geometric information about cameras that can be obtained through point correspondences alone. The term "fundamental matrix" was coined by QT Luong in his influential PhD thesis. It is sometimes also referred to as the "bifocal tensor". As a tensor, it is a two-point tensor in that it is a bilinear form relating points in distinct coordinate systems. The above relation which defin ...
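
Each correspondence turns $\mathbf{x}'^{\top} \mathbf{F} \mathbf{x} = 0$ into one linear equation in the nine entries of $\mathbf{F}$. The sketch below uses the normalized eight-point algorithm, a common alternative to the seven-point minimum mentioned above (it needs eight or more pairs, which makes the linear system easy to solve). It recovers the null vector of the stacked equations with an SVD and then enforces rank two by zeroing the smallest singular value. Points are assumed to be `(N, 2)` pixel arrays with N ≥ 8 and no outliers; the helper names are illustrative.

```python
import numpy as np

def _normalize(points):
    """Translate the centroid to the origin and scale so the mean distance is sqrt(2).

    Returns homogeneous normalized points and the 3x3 transform T that produced them.
    """
    centroid = points.mean(axis=0)
    mean_dist = np.linalg.norm(points - centroid, axis=1).mean()
    s = np.sqrt(2.0) / mean_dist
    T = np.array([[s, 0.0, -s * centroid[0]],
                  [0.0, s, -s * centroid[1]],
                  [0.0, 0.0, 1.0]])
    homogeneous = np.column_stack([points, np.ones(len(points))])
    return homogeneous @ T.T, T

def fundamental_eight_point(x, x_prime):
    """Estimate F (unit Frobenius norm) such that x'^T F x = 0 for all pairs."""
    p, T = _normalize(x)
    p_prime, T_prime = _normalize(x_prime)
    # Row n is the outer product x'_n x_n^T flattened, so that A @ F.ravel() = x'^T F x
    A = np.einsum("ni,nj->nij", p_prime, p).reshape(len(p), 9)
    _, _, Vt = np.linalg.svd(A)
    F = Vt[-1].reshape(3, 3)
    # Enforce rank two by zeroing the smallest singular value
    U, S, Vt = np.linalg.svd(F)
    S[2] = 0.0
    F = U @ np.diag(S) @ Vt
    # Undo the normalization: (T' x')^T F (T x) = x'^T (T'^T F T) x
    F = T_prime.T @ F @ T
    return F / np.linalg.norm(F)
```

The normalization step matters in practice: raw pixel coordinates make the stacked system badly conditioned. With noisy or contaminated matches, the estimator is typically wrapped in a robust scheme such as RANSAC.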

Optical Flow

Optical flow or optic flow is the pattern of apparent motion of objects, surfaces, and edges in a visual scene caused by the relative motion between an observer and a scene. Optical flow can also be defined as the distribution of apparent velocities of movement of brightness patterns in an image. The concept of optical flow was introduced by the American psychologist James J. Gibson in the 1940s to describe the visual stimulus provided to animals moving through the world. Gibson stressed the importance of optic flow for affordance perception, the ability to discern possibilities for action within the environment. Followers of Gibson and his ecological approach to psychology have further demonstrated the role of the optical flow stimulus for the perception of movement by the observer in the world; perception of the shape, distance and movement of objects in the world; and the control of locomotion. The term optical flow is also used by roboticists, encompassing related techn ...
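
Treating optical flow as the apparent velocity of brightness patterns leads to the brightness-constancy constraint $I_x v_x + I_y v_y + I_t = 0$ at each pixel. The sketch below solves it by least squares in the style of Lucas–Kanade, a standard method not named in the excerpt. One simplifying assumption: the whole image is treated as a single window moving with one translation, whereas Lucas–Kanade normally estimates a separate vector for each small local window. `lucas_kanade_translation` is a hypothetical name.

```python
import numpy as np

def lucas_kanade_translation(frame0, frame1):
    """Estimate one flow vector (vx, vy), in pixels per frame, for the whole image.

    Solves Ix*vx + Iy*vy = -It in the least-squares sense over all pixels.
    """
    f0 = frame0.astype(float)
    f1 = frame1.astype(float)
    # np.gradient returns derivatives along axis 0 (rows, y) and then axis 1 (columns, x)
    gy0, gx0 = np.gradient(f0)
    gy1, gx1 = np.gradient(f1)
    # Averaging the two frames' gradients reduces bias from the linearization
    Ix = 0.5 * (gx0 + gx1)
    Iy = 0.5 * (gy0 + gy1)
    It = f1 - f0
    A = np.column_stack([Ix.ravel(), Iy.ravel()])
    b = -It.ravel()
    v, *_ = np.linalg.lstsq(A, b, rcond=None)
    return v
```

The linearization only holds for small displacements (a fraction of a pixel up to a few pixels on smooth images). Larger motions are usually handled with image pyramids, and per-window estimates give a dense flow field instead of a single vector.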
