|

Real-mode

Real mode, also called real address mode, is an operating mode of all x86-compatible CPUs. The mode gets its name from the fact that addresses in real mode always correspond to real locations in memory. Real mode is characterized by a 20- bit segmented memory address space (giving 1 MB of addressable memory) and unlimited direct software access to all addressable memory, I/O addresses and peripheral hardware. Real mode provides no support for memory protection, multitasking, or code privilege levels. Before the introduction of protected mode with the release of the 80286, real mode was the only available mode for x86 CPUs; and for backward compatibility, all x86 CPUs start in real mode when reset, though it is possible to emulate real mode on other systems when starting in other modes. History The 80286 architecture introduced protected mode, allowing for (among other things) hardware-level memory protection. Using these new features, however, required a new operating sys ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

X86-64

x86-64 (also known as x64, x86_64, AMD64, and Intel 64) is a 64-bit extension of the x86 instruction set architecture, instruction set. It was announced in 1999 and first available in the AMD Opteron family in 2003. It introduces two new operating modes: 64-bit mode and compatibility mode, along with a new four-level paging mechanism. In 64-bit mode, x86-64 supports significantly larger amounts of virtual memory and physical memory compared to its 32-bit computing, 32-bit predecessors, allowing programs to utilize more memory for data storage. The architecture expands the number of general-purpose registers from 8 to 16, all fully general-purpose, and extends their width to 64 bits. Floating-point arithmetic is supported through mandatory SSE2 instructions in 64-bit mode. While the older x87 FPU and MMX registers are still available, they are generally superseded by a set of sixteen 128-bit Processor register, vector registers (XMM registers). Each of these vector registers ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Central Processing Unit

A central processing unit (CPU), also called a central processor, main processor, or just processor, is the primary Processor (computing), processor in a given computer. Its electronic circuitry executes Instruction (computing), instructions of a computer program, such as arithmetic, logic, controlling, and input/output (I/O) operations. This role contrasts with that of external components, such as main memory and I/O circuitry, and specialized coprocessors such as graphics processing units (GPUs). The form, CPU design, design, and implementation of CPUs have changed over time, but their fundamental operation remains almost unchanged. Principal components of a CPU include the arithmetic–logic unit (ALU) that performs arithmetic operation, arithmetic and Bitwise operation, logic operations, processor registers that supply operands to the ALU and store the results of ALU operations, and a control unit that orchestrates the #Fetch, fetching (from memory), #Decode, decoding and ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Unix

Unix (, ; trademarked as UNIX) is a family of multitasking, multi-user computer operating systems that derive from the original AT&T Unix, whose development started in 1969 at the Bell Labs research center by Ken Thompson, Dennis Ritchie, and others. Initially intended for use inside the Bell System, AT&T licensed Unix to outside parties in the late 1970s, leading to a variety of both academic and commercial Unix variants from vendors including University of California, Berkeley ( BSD), Microsoft (Xenix), Sun Microsystems ( SunOS/ Solaris), HP/ HPE ( HP-UX), and IBM ( AIX). The early versions of Unix—which are retrospectively referred to as " Research Unix"—ran on computers such as the PDP-11 and VAX; Unix was commonly used on minicomputers and mainframes from the 1970s onwards. It distinguished itself from its predecessors as the first portable operating system: almost the entire operating system is written in the C programming language (in 1973), which allows U ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

DOS Extender

A DOS extender is a computer software program running under DOS that enables software to run in a protected mode environment even though the host operating system is only capable of operating in real mode. DOS extenders were initially developed in the 1980s following the introduction of the Intel 80286 processor (and later expanded upon with the Intel 80386), to cope with the memory limitations of DOS. DOS extender operation A DOS extender is a program that "extends" DOS so that programs running in protected mode can transparently interface with the underlying DOS API. This was necessary because many of the functions provided by DOS require 16-bit segment and offset addresses pointing to memory locations within the Conventional memory, first 640 kilobytes of memory. Protected mode, however, uses an incompatible addressing method where the segment registers (now called selectors) are used to point to an entry in the Global Descriptor Table which describes the characteristics of ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

DOS Protected Mode Interface

In computing, the DOS Protected Mode Interface (DPMI) is a specification introduced in 1989 which allows a DOS program to run in protected mode, giving access to many features of the new PC processors of the time not available in real mode. It was initially developed by Microsoft for Windows 3.0, although Microsoft later turned control of the specification over to an industry committee with open membership. Almost all modern DOS extenders are based on DPMI and allow DOS programs to address all memory available in the PC and to run in protected mode (mostly in ring (computer security), ring 3, least privileged). Overview DPMI stands for DOS Protected Mode Interface. It is an API that allows a program to run in protected mode on 80286 series and later processors, and do the calls to real mode without having to set up these CPU modes manually. DPMI also provides the functions for managing various resources, notably Computer Memory, memory. This allows the DPMI-enabled programs to ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

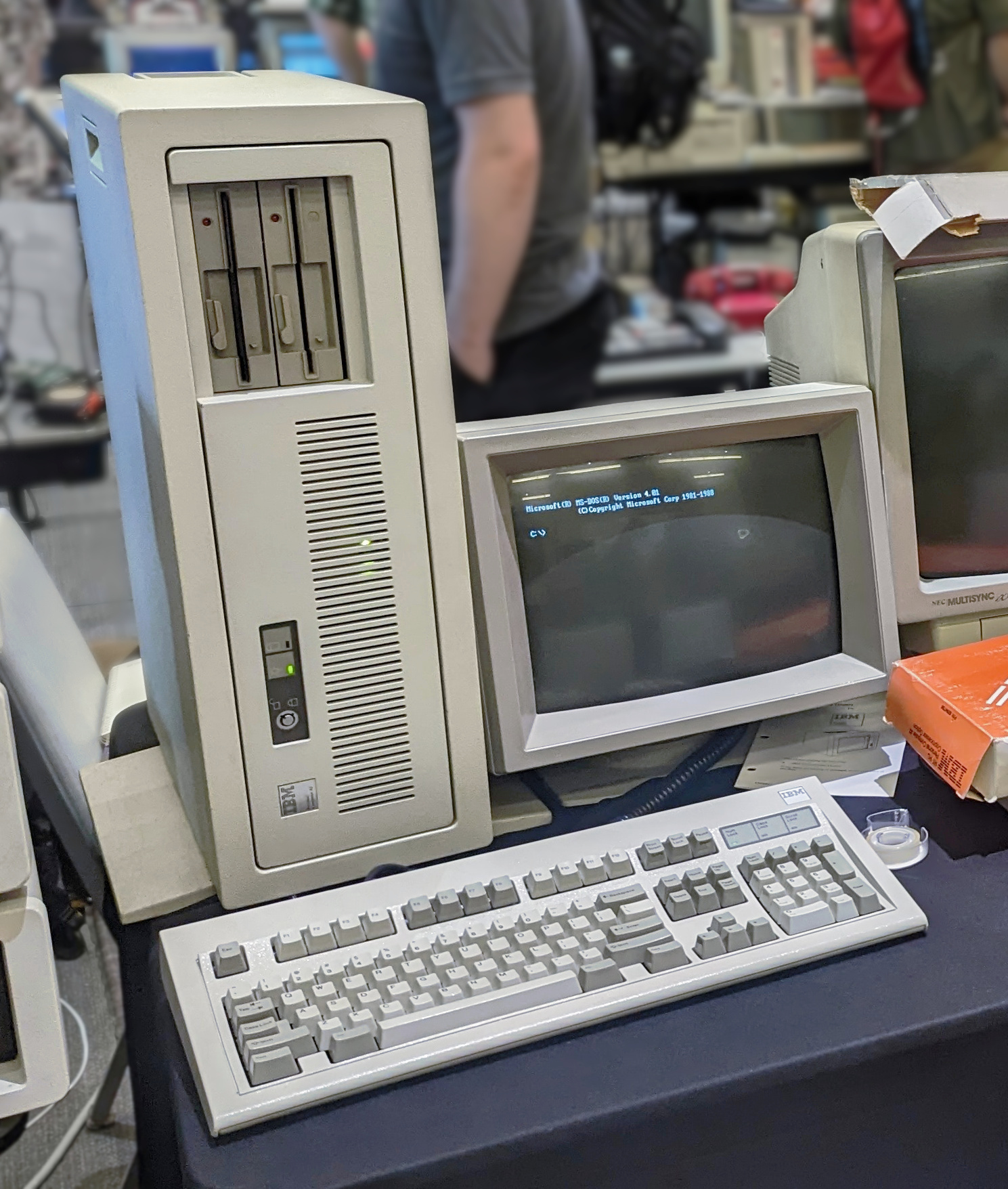

IBM Personal Computer

The IBM Personal Computer (model 5150, commonly known as the IBM PC) is the first microcomputer released in the IBM PC model line and the basis for the IBM PC compatible ''de facto'' standard. Released on August 12, 1981, it was created by a team of engineers and designers at International Business Machines (IBM), directed by William C. Lowe and Philip Don Estridge in Boca Raton, Florida. Powered by an x86-architecture Intel 8088 processor, the machine was based on open architecture and third-party peripherals. Over time, expansion cards and software technology increased to support it. The PC had a substantial influence on the personal computer market; the specifications of the IBM PC became one of the most popular computer design standards in the world. The only significant competition it faced from a non-compatible platform throughout the 1980s was from Apple's Macintosh product line, as well as consumer-grade platforms created by companies like Commodore and Atari. Mo ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

GNU GRUB

GNU GRUB (short for GNU GRand Unified Bootloader, commonly referred to as GRUB) is a boot loader package from the GNU Project. GRUB is the reference implementation of the Free Software Foundation's Multiboot Specification, which provides a user the choice to boot one of multiple operating systems installed on a computer set up for multi-booting or select a specific Kernel (operating system), kernel configuration available on a particular operating system's partitions. GNU GRUB was developed from a package called the ''Grand Unified Bootloader'' (a play on Grand Unified Theory). It is predominantly used for Unix-like systems. Operation Booting When a computer is turned on, its BIOS finds the primary bootable device (usually the computer's hard disk) and runs the initial Bootstrapping (computing), bootstrap program from the master boot record (MBR). The MBR is the first Disk sector, sector of the hard disk. This bootstrap program must be small because it has to fit in a sin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

IBM Personal Computer/AT

The IBM Personal Computer AT (model 5170, abbreviated as IBM AT or PC/AT) was released in 1984 as the fourth model in the IBM Personal Computer line, following the IBM PC/XT and its IBM Portable PC variant. It was designed around the Intel 80286 microprocessor. Name IBM did not specify an expanded form of ''AT'' on the machine, press releases, brochures or documentation, but some sources expand the term as ''Advanced Technology'', including at least one internal IBM document. History IBM's 1984 introduction of the AT was seen as an unusual move for the company, which typically waited for competitors to release new products before producing its own models. At $4,000–6,000, it was only slightly more expensive than considerably slower IBM models. The announcement surprised rival executives, who admitted that matching IBM's prices would be difficult. No major competitor showed a comparable computer at COMDEX Las Vegas that year. Features The AT is IBM PC compatible, wi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Byte

The byte is a unit of digital information that most commonly consists of eight bits. Historically, the byte was the number of bits used to encode a single character of text in a computer and for this reason it is the smallest addressable unit of memory in many computer architectures. To disambiguate arbitrarily sized bytes from the common 8-bit definition, network protocol documents such as the Internet Protocol () refer to an 8-bit byte as an octet. Those bits in an octet are usually counted with numbering from 0 to 7 or 7 to 0 depending on the bit endianness. The size of the byte has historically been hardware-dependent and no definitive standards existed that mandated the size. Sizes from 1 to 48 bits have been used. The six-bit character code was an often-used implementation in early encoding systems, and computers using six-bit and nine-bit bytes were common in the 1960s. These systems often had memory words of 12, 18, 24, 30, 36, 48, or 60 bits, corresponding t ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Kilobyte

The kilobyte is a multiple of the unit byte for Computer data storage, digital information. The International System of Units (SI) defines the prefix ''kilo-, kilo'' as a multiplication factor of 1000 (103); therefore, one kilobyte is 1000 bytes.International Standard IEC 80000-13 Quantities and Units – Part 13: Information science and technology, International Electrotechnical Commission (2008). The internationally recommended unit symbol for the kilobyte is kB. In some areas of information technology, particularly in reference to random-access memory capacity, ''kilobyte'' instead often refers to 1024 (210) bytes. This arises from the prevalence of sizes that are powers of two in modern digital memory architectures, coupled with the coincidence that 210 differs from 103 by less than 2.5%. The kibibyte is defined as 1024 bytes, avoiding the ambiguity issues of the ''kilobyte''.International Standard IEC 80000-13 Quantities and Units – Part 13: Information scien ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Megabyte

The megabyte is a multiple of the unit byte for digital information. Its recommended unit symbol is MB. The unit prefix ''mega'' is a multiplier of (106) in the International System of Units (SI). Therefore, one megabyte is one million bytes of information. This definition has been incorporated into the International System of Quantities. In the computer and information technology fields, other definitions have been used that arose for historical reasons of convenience. A common usage has been to designate one megabyte as (220 B), a quantity that conveniently expresses the binary architecture of digital computer memory. Standards bodies have deprecated this binary usage of the mega- prefix in favor of a new set of binary prefixes, by means of which the quantity 220 B is named mebibyte (symbol MiB). Definitions The unit megabyte is commonly used for 10002 (one million) bytes or 10242 bytes. The interpretation of using base 1024 originated as technical jargon for the byte m ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |