
Random-access Turing Machine

In computational complexity, a field of computer science, random-access Turing machines are an extension of Turing machines used to describe small complexity classes, especially classes that use logarithmic time, such as DLOGTIME and the logarithmic hierarchy.

Definition: A random-access Turing machine has a special ''pointer'' tape of logarithmic size over a binary alphabet. The machine has a special state such that, when the binary number on the ''pointer'' tape is ''p'', the machine writes the ''p''th symbol of the input on the working tape. The pointer tape lets the machine read any symbol of the input without spending time moving across the entire input. This is necessary for complexity classes that use less than linear time.

References: Neil Immerman, ''Descriptive Complexity''
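The pointer-tape mechanism in the definition above can be illustrated with a minimal Python sketch. The function names, the 0-based addressing, and the blank symbol `_` returned for out-of-range addresses are assumptions made for illustration; the entry itself does not fix these conventions.

```python
def read_input_symbol(input_string: str, pointer_tape: str) -> str:
    """Return the input symbol addressed by the binary number on the pointer tape.

    Assumptions (not from the source): positions are 0-based, an empty
    pointer tape denotes 0, and out-of-range addresses yield the blank "_".
    """
    p = int(pointer_tape, 2) if pointer_tape else 0
    return input_string[p] if p < len(input_string) else "_"


def pointer_tape_length(n: int) -> int:
    """Number of binary cells needed to address every position of an input of length n."""
    return max(1, (n - 1).bit_length())


if __name__ == "__main__":
    # Address 101 (binary) = 5 selects the sixth symbol in one step,
    # without walking over the first five cells.
    print(read_input_symbol("abcdef", "101"))
    # The pointer tape grows logarithmically with the input length.
    print(pointer_tape_length(1024))
```

The point of the sketch is that the cost of a read depends on the length of the address (logarithmic in the input length), not on the distance a head would have to travel.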

Computational Complexity Theory

In theoretical computer science and mathematics, computational complexity theory focuses on classifying computational problems according to their resource usage, and relating these classes to each other. A computational problem is a task solved by a computer. A computational problem is solvable by mechanical application of mathematical steps, such as an algorithm. A problem is regarded as inherently difficult if its solution requires significant resources, whatever the algorithm used. The theory formalizes this intuition by introducing mathematical models of computation to study these problems and quantifying their computational complexity, i.e., the amount of resources needed to solve them, such as time and storage. Other measures of complexity are also used, such as the amount of communication (used in communication complexity), the number of gates in a circuit (used in circuit complexity) and the number of processors (used in parallel computing). One of the roles of compu ...

Computer Science

Computer science is the study of computation, automation, and information. Computer science spans theoretical disciplines (such as algorithms, theory of computation, information theory, and automation) to practical disciplines (including the design and implementation of hardware and software). Computer science is generally considered an area of academic research and distinct from computer programming. Algorithms and data structures are central to computer science. The theory of computation concerns abstract models of computation and general classes of problems that can be solved using them. The fields of cryptography and computer security involve studying the means for secure communication and for preventing security vulnerabilities. Computer graphics and computational geometry address the generation of images. Programming language theory considers different ways to describe computational processes, and database theory concerns the management of repositories ...

Turing Machine

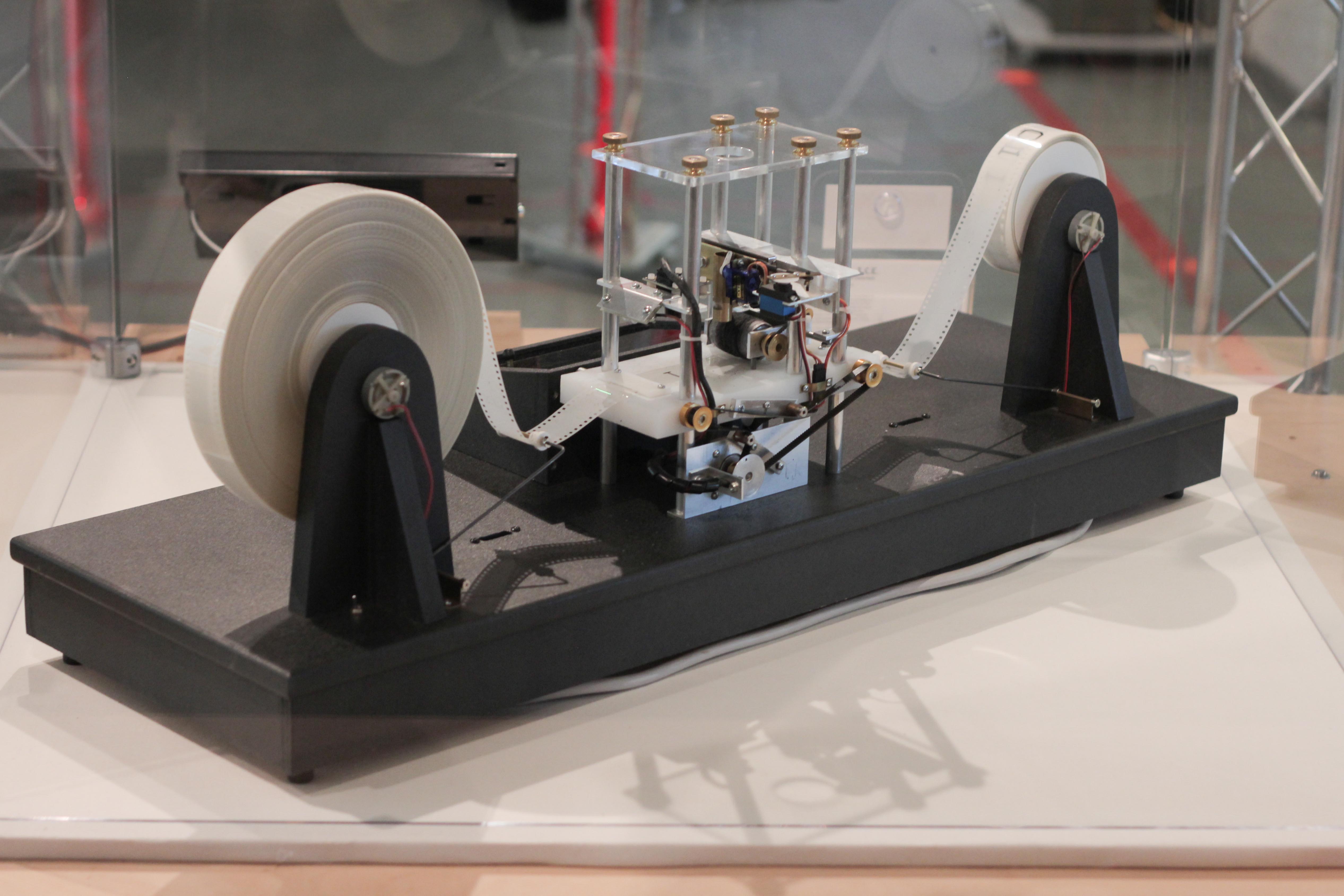

A Turing machine is a mathematical model of computation describing an abstract machine that manipulates symbols on a strip of tape according to a table of rules. Despite the model's simplicity, it is capable of implementing any computer algorithm. The machine operates on an infinite memory tape divided into discrete cells, each of which can hold a single symbol drawn from a finite set of symbols called the alphabet of the machine. It has a "head" that, at any point in the machine's operation, is positioned over one of these cells, and a "state" selected from a finite set of states. At each step of its operation, the head reads the symbol in its cell. Then, based on the symbol and the machine's own present state, the machine writes a symbol into the same cell, and moves the head one step to the left or the right, or halts the computation. The choice of which replacement symbol to write and which direction to move is based on a finite table that specifies what to do for each co ...
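The read, write, and move cycle described above can be sketched as a small simulator. This is an illustrative sketch, not a canonical formalization: the rule-table layout `(state, symbol) -> (new_state, written_symbol, move)`, the blank symbol `_`, the step limit, and the example bit-flipping machine are all assumptions introduced here.

```python
from collections import defaultdict

BLANK = "_"  # assumed blank symbol


def run_turing_machine(rules, tape_input, start_state, halt_states, max_steps=10_000):
    """Run a single-tape machine and return (final tape contents, steps taken).

    `rules` maps (state, symbol) to (new_state, written_symbol, move),
    where move is "L" or "R". Unvisited cells read as BLANK.
    """
    tape = defaultdict(lambda: BLANK, enumerate(tape_input))
    state, head, steps = start_state, 0, 0
    while state not in halt_states:
        if steps >= max_steps:
            raise RuntimeError("step limit reached")
        symbol = tape[head]                      # the head reads its cell
        state, write, move = rules[(state, symbol)]
        tape[head] = write                       # write into the same cell
        head += {"L": -1, "R": 1}[move]          # move one step left or right
        steps += 1
    cells = [tape[i] for i in range(min(tape), max(tape) + 1)] if tape else []
    return "".join(cells).strip(BLANK), steps


# Hypothetical example machine: flip every bit, halt at the first blank.
FLIP_RULES = {
    ("flip", "0"): ("flip", "1", "R"),
    ("flip", "1"): ("flip", "0", "R"),
    ("flip", BLANK): ("halt", BLANK, "R"),
}

if __name__ == "__main__":
    print(run_turing_machine(FLIP_RULES, "1011", "flip", {"halt"}))
```

Note that the example machine needs one step per input cell, which is why sublinear-time classes require a different way of accessing the input.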

Time Complexity

In computer science, the time complexity is the computational complexity that describes the amount of computer time it takes to run an algorithm. Time complexity is commonly estimated by counting the number of elementary operations performed by the algorithm, supposing that each elementary operation takes a fixed amount of time to perform. Thus, the amount of time taken and the number of elementary operations performed by the algorithm are taken to be related by a constant factor. Since an algorithm's running time may vary among different inputs of the same size, one commonly considers the worst-case time complexity, which is the maximum amount of time required for inputs of a given size. Less common, and usually specified explicitly, is the average-case complexity, which is the average of the time taken on inputs of a given size (this makes sense because there are only a finite number of possible inputs of a given size). In both cases, the time complexity is generally express ...
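As a small worked illustration of the worst-case and average-case notions above, the following sketch counts comparisons made by a linear search over every binary input of a given size. The choice of algorithm, the target value 1, and the function names are assumptions made for the example only.

```python
from itertools import product


def linear_search_steps(items, target):
    """Count the comparisons a linear search performs before stopping."""
    steps = 0
    for item in items:
        steps += 1          # one elementary operation: a comparison
        if item == target:
            break
    return steps


def worst_and_average_steps(n):
    """Return (worst case, average case) over all 2**n binary inputs of size n."""
    counts = [linear_search_steps(bits, 1) for bits in product((0, 1), repeat=n)]
    return max(counts), sum(counts) / len(counts)


if __name__ == "__main__":
    print(worst_and_average_steps(3))
```

The average is well defined because there are only finitely many inputs of each size, exactly as the entry notes.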

DLOGTIME

In computational complexity theory, DLOGTIME is the complexity class of all computational problems solvable in a logarithmic amount of computation time on a deterministic Turing machine. It must be defined on a random-access Turing machine, since otherwise the input tape is longer than the range of cells that the machine can access. It is a very weak model of time complexity: no random-access Turing machine with a smaller deterministic time bound can access the whole input (see in particular p. 140).

Examples: DLOGTIME includes problems relating to verifying the length of the input, for example the problem "''Is the input of even length?''", which can be solved in logarithmic time using binary search.

Applications: DLOGTIME-uniformity is important in circuit complexity. In theoretical computer science, circuit complexity is a branch of computational complexity theory in which Boolean functions are classified according to the size or depth of the Boolean circuits t ...
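The even-length example can be sketched in Python by modelling random access as a `probe(i)` oracle that reports whether position `i` holds an input symbol. This is a sketch under stated assumptions: the oracle interface, the 0-based positions, and the doubling phase used to bracket the length before the binary search are illustrative choices, not details given in the entry.

```python
def input_length_by_probing(probe):
    """Find the input length using O(log n) random-access probes.

    `probe(i)` is assumed to return True iff position i (0-based) is inside
    the input. Returns (length, number_of_probes).
    """
    if not probe(0):
        return 0, 1
    probes = 1
    hi = 1
    while probe(hi):        # doubling phase: find the first power of two outside the input
        probes += 1
        hi *= 2
    probes += 1             # count the final, failed probe
    lo = hi // 2            # invariant: probe(lo) is True, probe(hi) is False
    while hi - lo > 1:      # binary search for the boundary
        mid = (lo + hi) // 2
        probes += 1
        if probe(mid):
            lo = mid
        else:
            hi = mid
    return hi, probes


def has_even_length(input_string):
    """Decide "is the input of even length?" without scanning the whole input."""
    length, _ = input_length_by_probing(lambda i: i < len(input_string))
    return length % 2 == 0


if __name__ == "__main__":
    print(has_even_length("abcd"), has_even_length("abcde"))
```

Each probe stands for one use of the pointer tape, so the number of probes, rather than the input length, governs the running time.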

LH (complexity)

In computational complexity, the logarithmic time hierarchy (LH) is the complexity class of all computational problems solvable in a logarithmic amount of computation time on an alternating Turing machine with a bounded number of alternations. It is a particular case of a bounded alternating Turing machine hierarchy. It is equal to FO and to FO-uniform AC0. The ''i''th level of the logarithmic time hierarchy is the set of languages recognised by alternating Turing machines in logarithmic time with random access (direct access: the ability to access an arbitrary element of a sequence in equal time) and ''i''-1 alternations, beginning with an existential state. LH is the union of all levels.

Pointer (computing)

In computer science, a pointer is an object in many programming languages that stores a memory address. This can be that of another value located in computer memory, or in some cases, that of memory-mapped computer hardware. A pointer ''references'' a location in memory, and obtaining the value stored at that location is known as ''dereferencing'' the pointer. As an analogy, a page number in a book's index could be considered a pointer to the corresponding page; dereferencing such a pointer would be done by flipping to the page with the given page number and reading the text found on that page. The actual format and content of a pointer variable is dependent on the underlying computer architecture. Using pointers significantly improves performance for repetitive operations, like traversing iterable data structures (e.g. strings, lookup tables, control tables and tree structures). In particular, it is often much cheaper in time and space to copy and dereference point ...

Linear Time

In computer science, the time complexity is the computational complexity that describes the amount of computer time it takes to run an algorithm. Time complexity is commonly estimated by counting the number of elementary operations performed by the algorithm, supposing that each elementary operation takes a fixed amount of time to perform. Thus, the amount of time taken and the number of elementary operations performed by the algorithm are taken to be related by a constant factor. Since an algorithm's running time may vary among different inputs of the same size, one commonly considers the worst-case time complexity, which is the maximum amount of time required for inputs of a given size. Less common, and usually specified explicitly, is the average-case complexity, which is the average of the time taken on inputs of a given size (this makes sense because there are only a finite number of possible inputs of a given size). In both cases, the time complexity is generally expresse ...

Neil Immerman

Neil Immerman (born 24 November 1953, Manhasset, New York) is an American theoretical computer scientist, a professor of computer science at the University of Massachusetts Amherst (Faculty directory: Neil Immerman, Computer Science Department, retrieved 2010-01-23). He is one of the key developers of descriptive complexity, an approach he is currently applying to research in model checking, database theory, and computational complexity theory. Professor Immerman is an editor of the ...

Descriptive Complexity

''Descriptive Complexity'' is a book in mathematical logic and computational complexity theory by Neil Immerman. It concerns descriptive complexity theory, an area in which the expressibility of mathematical properties using different types of logic is shown to be equivalent to their computability in different types of resource-bounded models of computation. It was published in 1999 by Springer-Verlag in their book series Graduate Texts in Computer Science.

Topics: The book has 15 chapters, roughly grouped into five chapters on first-order logic, three on second-order logic, and seven independent chapters on advanced topics. The first two chapters provide background material in first-order logic (including first-order arithmetic, the BIT predicate, and the notion of a first-order query) and complexity theory (including formal languages, resource-bounded complexity classes, and complete problems). Chapter three begins the connection between logic and complexity, with a proof that ...