|

Processor Consistency

Processor consistency is one of the consistency models used in the domain of concurrent computing (e.g. in distributed shared memory, distributed transactions, etc.). A system exhibits processor consistency if the order in which other processors see the writes from any individual processor is the same as the order they were issued. Because of this, processor consistency is only applicable to systems with multiple processors. It is weaker than the causal consistency model because it does not require writes from ''all'' processors to be seen in the same order, but stronger than the PRAM consistency model because it requires cache coherence. Another difference between causal consistency and processor consistency is that processor consistency removes the requirements for loads to wait for stores to complete, and for Write Atomicity. Processor consistency is also stronger than cache consistency because processor consistency requires all writes by a processor to be seen in order, not ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Consistency Model

In computer science, a consistency model specifies a contract between the programmer and a system, wherein the system guarantees that if the programmer follows the rules for operations on memory, memory will be data consistency, consistent and the results of reading, writing, or updating memory will be predictable. Consistency models are used in Distributed computing, distributed systems like distributed shared memory systems or distributed data stores (such as filesystems, databases, optimistic replication systems or web caching). Consistency is different from coherence, which occurs in systems that are cache coherence, cached or cache-less, and is consistency of data with respect to all processors. Coherence deals with maintaining a global order in which writes to a single location or single variable are seen by all processors. Consistency deals with the ordering of operations to multiple locations with respect to all processors. High level languages, such as C++ and Java (progr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Concurrent Computing

Concurrent computing is a form of computing in which several computations are executed '' concurrently''—during overlapping time periods—instead of ''sequentially—''with one completing before the next starts. This is a property of a system—whether a program, computer, or a network—where there is a separate execution point or "thread of control" for each process. A ''concurrent system'' is one where a computation can advance without waiting for all other computations to complete. Concurrent computing is a form of modular programming. In its paradigm an overall computation is factored into subcomputations that may be executed concurrently. Pioneers in the field of concurrent computing include Edsger Dijkstra, Per Brinch Hansen, and C.A.R. Hoare. Introduction The concept of concurrent computing is frequently confused with the related but distinct concept of parallel computing, Pike, Rob (2012-01-11). "Concurrency is not Parallelism". ''Waza conference'', 11 Ja ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

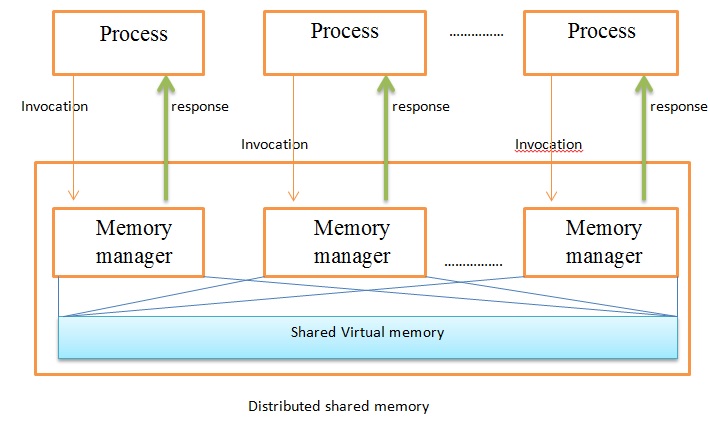

Distributed Shared Memory

In computer science, distributed shared memory (DSM) is a form of memory architecture where physically separated memories can be addressed as a single shared address space. The term "shared" does not mean that there is a single centralized memory, but that the address space is shared—i.e., the same physical address on two Processor (computing), processors refers to the same location in memory. Distributed global address space (DGAS), is a similar term for a wide class of software and hardware implementations, in which each node (networking), node of a computer cluster, cluster has access to shared memory architecture, shared memory in addition to each node's private (i.e., not shared) Random-access memory, memory. Overview DSM can be achieved via software as well as hardware. Hardware examples include cache coherence circuits and network interface controllers. There are three ways of implementing DSM: * Page (computer memory), Page-based approach using virtual memory * Sha ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Distributed Transaction

A distributed transaction operates within a distributed environment, typically involving multiple nodes across a network depending on the location of the data. A key aspect of distributed transactions is atomicity, which ensures that the transaction is completed in its entirety or not executed at all. It's essential to note that distributed transactions are not limited to databases. The Open Group, a vendor consortium, proposed the X/Open Distributed Transaction Processing Model (X/Open XA), which became a de facto standard for the behavior of transaction model components. Databases are common transactional resources and, often, transactions span a couple of such databases. In this case, a distributed transaction can be seen as a database transaction that must be synchronized (or provide ACID properties) among multiple participating databases which are distributed among different physical locations. The isolation property (the I of ACID) poses a special challenge for multi d ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Causal Consistency

Causal consistency is one of the major memory consistency models. In concurrent programming, where concurrent processes are accessing a shared memory, a consistency model restricts which accesses are legal. This is useful for defining correct data structures in distributed shared memory or distributed transactions. Causal Consistency is “Available under Partition”, meaning that a process can read and write the memory (memory is Available) even while there is no functioning network connection (network is Partitioned) between processes; it is an asynchronous model. Contrast to strong consistency models, such as sequential consistency or linearizability, which cannot be both safe and live under partition, and are slow to respond because they require synchronisation. Causal consistency was proposed in 1990s as a weaker consistency model for shared memory models. Causal consistency is closely related to the concept of Causal Broadcast in communication protocols. In these ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

PRAM Consistency

PRAM consistency (pipelined random access memory) also known as '' FIFO consistency''. All processes see memory writes from one process in the order they were issued from the process. Writes from different processes may be seen in a different order on different processes. Only the write order needs to be consistent, thus the name ''pipelined''. PRAM consistency is easy to implement. In effect it says that there are no guarantees about the order in which different processes see writes, except that two or more writes from a single source must arrive in order, as though they were in a pipeline. P1:W(x)1 P2: R(x)1W(x)2 P3: R(x)1R(x)2 P4: R(x)2R(x)1 Time ----> Fig: A valid sequence of events for PRAM consistency. The above sequence is not valid for Causal consistency Causal consistency is one of the major memory consistency models. In concurrent programming, where concurrent processes are accessing a shared memory, a consi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Cache Coherence

In computer architecture, cache coherence is the uniformity of shared resource data that is stored in multiple local caches. In a cache coherent system, if multiple clients have a cached copy of the same region of a shared memory resource, all copies are the same. Without cache coherence, a change made to the region by one client may not be seen by others, and errors can result when the data used by different clients is mismatched. A cache coherence protocol is used to maintain cache coherency. The two main types are snooping and directory-based protocols. Cache coherence is of particular relevance in multiprocessing systems, where each CPU may have its own local cache of a shared memory resource. Overview In a shared memory multiprocessor system with a separate cache memory for each processor, it is possible to have many copies of shared data: one copy in the main memory and one in the local cache of each processor that requested it. When one of the copies of data is c ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Linearizability

In concurrent programming, an operation (or set of operations) is linearizable if it consists of an ordered list of invocation and response events, that may be extended by adding response events such that: # The extended list can be re-expressed as a sequential history (is serializable). # That sequential history is a subset of the original unextended list. Informally, this means that the unmodified list of events is linearizable if and only if its invocations were serializable, but some of the responses of the serial schedule have yet to return. In a concurrent system, processes can access a shared object at the same time. Because multiple processes are accessing a single object, a situation may arise in which while one process is accessing the object, another process changes its contents. Making a system linearizable is one solution to this problem. In a linearizable system, although operations overlap on a shared object, each operation appears to take place instantaneousl ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Cache Consistency

In computer architecture, cache coherence is the uniformity of shared resource data that is stored in multiple local caches. In a cache coherent system, if multiple clients have a cached copy of the same region of a shared memory resource, all copies are the same. Without cache coherence, a change made to the region by one client may not be seen by others, and errors can result when the data used by different clients is mismatched. A cache coherence protocol is used to maintain cache coherency. The two main types are snooping and directory-based protocols. Cache coherence is of particular relevance in multiprocessing systems, where each CPU may have its own local cache of a shared memory resource. Overview In a shared memory multiprocessor system with a separate cache memory for each processor, it is possible to have many copies of shared data: one copy in the main memory and one in the local cache of each processor that requested it. When one of the copies of data is ch ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Sequential Consistency

Sequential consistency is a consistency model used in the domain of concurrent computing (e.g. in distributed shared memory, distributed transactions, etc.). It is the property that "... the result of any execution is the same as if the operations of all the processors were executed in some sequential order, and the operations of each individual processor appear in this sequence in the order specified by its program." That is, the execution order of a program in the same processor (or thread) is the same as the program order, while the execution order of a program on different processors (or threads) is undefined. In an example like this: execution order between A1, B1 and C1 is preserved, that is, A1 runs before B1, and B1 before C1. The same for A2 and B2. But, as execution order between processors is undefined, B2 might run before or after C1 (B2 might physically run before C1, but the effect of B2 might be seen after that of C1, which is the same as "B2 run after C1") Conc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Instruction Prefetch

Instruction or instructions may refer to: A specific direction or order given to someone to perform a task or carry out a procedure. They provide clear guidance on how to achieve a desired outcome. They can be written or verbal, and they typically include detailed steps or actions to follow. Instructions are crucial for ensuring tasks are completed correctly and efficiently. Computing * Instruction, one operation of a processor within a computer architecture instruction set * Computer program, a collection of instructions Music * Instruction (band), a 2002 rock band from New York City, US * "Instruction" (song), a 2017 song by English DJ Jax Jones * ''Instructions'' (album), a 2001 album by Jermaine Dupri Other uses * Instruction, teaching or education performed by a teacher * Sebayt, a work of the ancient Egyptian didactic literature aiming to teach ethical behaviour * Instruction, the pre-trial phase of an investigation led by a judge in an inquisitorial system of justice * I ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |