
Probabilistic Latent Semantic Analysis

Probabilistic latent semantic analysis (PLSA), also known as probabilistic latent semantic indexing (PLSI, especially in information retrieval circles), is a statistical technique for the analysis of two-mode and co-occurrence data. In effect, one can derive a low-dimensional representation of the observed variables in terms of their affinity to certain hidden variables, just as in latent semantic analysis, from which PLSA evolved. Compared to standard latent semantic analysis, which stems from linear algebra and downsizes the occurrence tables (usually via a singular value decomposition), probabilistic latent semantic analysis is based on a mixture decomposition derived from a latent class model.

Model: Considering observations in the form of co-occurrences $(w,d)$ of words and documents, PLSA models the probability of each co-occurrence as a mixture of conditionally independent multinomial distributions:

$$P(w,d) = \sum_c P(c) P(d \mid c) P(w \mid c) = P(d) \sum_c P(c \mid d) P(w \mid c)$$

with c ...
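The two forms of the mixture can be checked numerically on a toy example. The sketch below reads the factors as conditional distributions, P(d|c), P(w|c) and P(c|d), and evaluates both sides of the identity. All parameter values and the function names (`joint_symmetric`, `joint_asymmetric`, `p_doc`, `p_class_given_doc`) are made up for illustration and do not come from any PLSA library.

```python
# Toy PLSA parameters: 2 latent classes, 2 documents, 3 words.
# All numbers are hypothetical; each row is a probability distribution.
p_c = [0.6, 0.4]                                   # P(c)
p_d_given_c = [[0.7, 0.3], [0.2, 0.8]]             # P(d|c), one row per class
p_w_given_c = [[0.5, 0.3, 0.2], [0.1, 0.2, 0.7]]   # P(w|c), one row per class

classes = range(len(p_c))


def joint_symmetric(w, d):
    """P(w,d) = sum_c P(c) P(d|c) P(w|c)."""
    return sum(p_c[c] * p_d_given_c[c][d] * p_w_given_c[c][w] for c in classes)


def p_doc(d):
    """Marginal P(d) = sum_c P(c) P(d|c)."""
    return sum(p_c[c] * p_d_given_c[c][d] for c in classes)


def p_class_given_doc(c, d):
    """P(c|d) obtained from P(c) and P(d|c) by Bayes' rule."""
    return p_c[c] * p_d_given_c[c][d] / p_doc(d)


def joint_asymmetric(w, d):
    """P(w,d) = P(d) sum_c P(c|d) P(w|c)."""
    return p_doc(d) * sum(p_class_given_doc(c, d) * p_w_given_c[c][w] for c in classes)


if __name__ == "__main__":
    for d in range(2):
        for w in range(3):
            print(w, d, joint_symmetric(w, d), joint_asymmetric(w, d))
```

Both functions return the same value for every (w, d) pair, and the joint probabilities sum to one over all pairs, as expected for a properly normalized mixture.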

Statistical Technique

A statistical hypothesis test is a method of statistical inference used to decide whether the data at hand sufficiently support a particular hypothesis. Hypothesis testing allows us to make probabilistic statements about population parameters.

History (early use): While hypothesis testing was popularized early in the 20th century, early forms were used in the 1700s. The first use is credited to John Arbuthnot (1710), followed by Pierre-Simon Laplace (1770s), in analyzing the human sex ratio at birth.

Modern origins and early controversy: Modern significance testing is largely the product of Karl Pearson (*p*-value, Pearson's chi-squared test), William Sealy Gosset (Student's t-distribution), and Ronald Fisher ("null hypothesis", analysis of variance, "significance test"), while hypothesis testing was developed by Jerzy Neyman and Egon Pearson (son of Karl). Ronald Fisher began his life in statistics as a Bayesian (Zabell 1992), but Fisher soon grew disenchanted with the su ...
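As a minimal illustration of deciding whether data support a hypothesis, the sketch below computes a one-sided sign-test p-value under the null hypothesis that two outcomes are equally likely, in the spirit of the early sex-ratio analyses. The function name `sign_test_p_value` and the counts in the usage example are hypothetical and are not historical data.

```python
from math import comb


def sign_test_p_value(successes, trials):
    """One-sided p-value P(X >= successes) for X ~ Binomial(trials, 0.5)."""
    if not 0 <= successes <= trials:
        raise ValueError("successes must lie between 0 and trials")
    # Count the outcomes at least as extreme as the observed one.
    tail = sum(comb(trials, k) for k in range(successes, trials + 1))
    return tail / 2 ** trials


if __name__ == "__main__":
    # Hypothetical data: one outcome observed in 18 of 20 independent trials.
    print(sign_test_p_value(18, 20))
```

A small p-value means the observed imbalance would be unlikely if both outcomes really had probability one half. The threshold at which that counts as sufficient evidence is a separate choice.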

Aspect Model

Aspect or Aspects may refer to:

Entertainment

* *Aspect magazine*, a biannual DVD magazine showcasing new media art
* Aspect Co., a Japanese video game company
* Aspects (band), a hip hop group from Bristol, England
* *Aspects* (Benny Carter album), a 1959 album by Benny Carter
* *Aspects* (The Eleventh House album), a 1976 album by Larry Coryell and The Eleventh House
* *Aspects* (novel), a posthumous novel by John M. Ford

Persons

* Alain Aspect, a French physicist and Nobel prize recipient (1947–)

Other

* Aspect (computer programming), a feature linked to many parts of a program but not necessarily the primary function of the program
* Aspect (geography), the compass direction that a slope faces
* Aspect (religion), a particular manifestation of a deity
* Aspect (trade union), a trade union in the United Kingdom
* Aspect Software, an American call center technology and customer experience company
* Astrological aspect, an angle the planets have to each other
* Gramm ...

Classification Algorithms

Classification is a process related to categorization, the process in which ideas and objects are recognized, differentiated and understood. Classification is the grouping of related facts into classes. It may also refer to:

Business, organizations, and economics

* Classification of customers, for marketing (as in Master data management) or for profitability (e.g. by Activity-based costing)
* Classified information, as in legal or government documentation
* Job classification, as in job analysis
* Standard Industrial Classification, economic activities

Mathematics

* Attribute-value system, a basic knowledge representation framework
* Classification theorems in mathematics
* Mathematical classification, grouping mathematical objects based on a property that all those objects share
* Statistical classification, identifying to which of a set of categories a new observation belongs, on the basis of a training set of data

Media

* Classification (literature), a figure of speech li ...

Statistical Natural Language Processing

Natural language processing (NLP) is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves. Challenges in natural language processing frequently involve speech recognition, natural-language understanding, and natural-language generation.

History: Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, tho ...

Vector Space Model

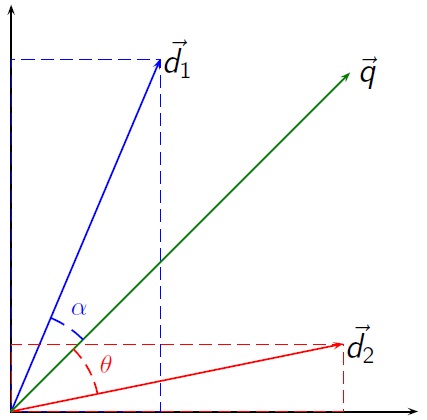

Vector space model or term vector model is an algebraic model for representing text documents (or any objects in general) as vectors of identifiers (such as index terms). It is used in information filtering, information retrieval, indexing and relevancy rankings. Its first use was in the SMART Information Retrieval System.

Definitions: Documents and queries are represented as vectors.

$$d_j = (w_{1,j}, w_{2,j}, \dotsc, w_{n,j})$$

$$q = (w_{1,q}, w_{2,q}, \dotsc, w_{n,q})$$

Each dimension corresponds to a separate term. If a term occurs in the document, its value in the vector is non-zero. Several different ways of computing these values, also known as (term) weights, have been developed. One of the best known schemes is tf-idf weighting. The definition of *term* depends on the application. Typically terms are single words, keywords, or longer phrases. If words are chosen to be the terms, the dimensionality of the vector is the number of words in the vocabulary (the number of dist ...
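The representation described above can be sketched in a few lines. The example builds one weight per vocabulary term for each document and for a query, using a smoothed tf-idf variant, log(1 + N/df), chosen here so that every occurring term gets a non-zero weight. That variant, the cosine ranking step, the toy corpus and the function names are all assumptions made for illustration, not part of the definition above.

```python
import math
from collections import Counter

# Hypothetical toy corpus; single words are used as terms.
docs = {
    "d1": "latent semantic analysis of text",
    "d2": "probabilistic analysis of text data",
}
vocab = sorted({term for text in docs.values() for term in text.split()})


def tfidf_vector(text):
    """Return one weight per vocabulary term: term count times smoothed idf."""
    counts = Counter(text.split())
    n_docs = len(docs)
    vector = []
    for term in vocab:
        # Document frequency is at least 1 because vocab is built from docs.
        df = sum(1 for other in docs.values() if term in other.split())
        idf = math.log(1 + n_docs / df)
        vector.append(counts[term] * idf)
    return vector


def cosine(u, v):
    """Cosine of the angle between two weight vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


if __name__ == "__main__":
    query = tfidf_vector("semantic analysis")
    for name, text in docs.items():
        print(name, round(cosine(query, tfidf_vector(text)), 3))
```

Query terms that are not in the vocabulary are simply ignored in this sketch, since they have no dimension of their own.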

Pachinko Allocation

In machine learning and natural language processing, the pachinko allocation model (PAM) is a topic model. Topic models are a suite of algorithms to uncover the hidden thematic structure of a collection of documents. The algorithm improves upon earlier topic models such as latent Dirichlet allocation (LDA) by modeling correlations between topics in addition to the word correlations which constitute topics. PAM provides more flexibility and greater expressive power than latent Dirichlet allocation. While first described and implemented in the context of natural language processing, the algorithm may have applications in other fields such as bioinformatics. The model is named for pachinko machines, a game popular in Japan in which metal balls bounce down around a complex collection of pins until they land in various bins at the bottom.

History: Pachinko allocation was first described by Wei Li and Andrew McCallum in 2006. The idea was extended with hierarchical Pachinko alloca ...

Compound Term Processing

Compound-term processing, in information retrieval, is search result matching on the basis of compound terms. Compound terms are built by combining two or more simple terms; for example, "triple" is a single-word term, but "triple heart bypass" is a compound term. Compound-term processing is a new approach to an old problem: how can one improve the relevance of search results while maintaining ease of use? Using this technique, a search for *survival rates following a triple heart bypass in elderly people* will locate documents about this topic even if this precise phrase is not contained in any document. This can be performed by a concept search, which itself uses compound-term processing. This will extract the key concepts automatically (in this case "survival rates", "triple heart bypass" and "elderly people") and use these concepts to select the most relevant documents.

Techniques: In August 2003, Concept Searching Limited introduced the idea of using statistical compound-t ...

Information Retrieval

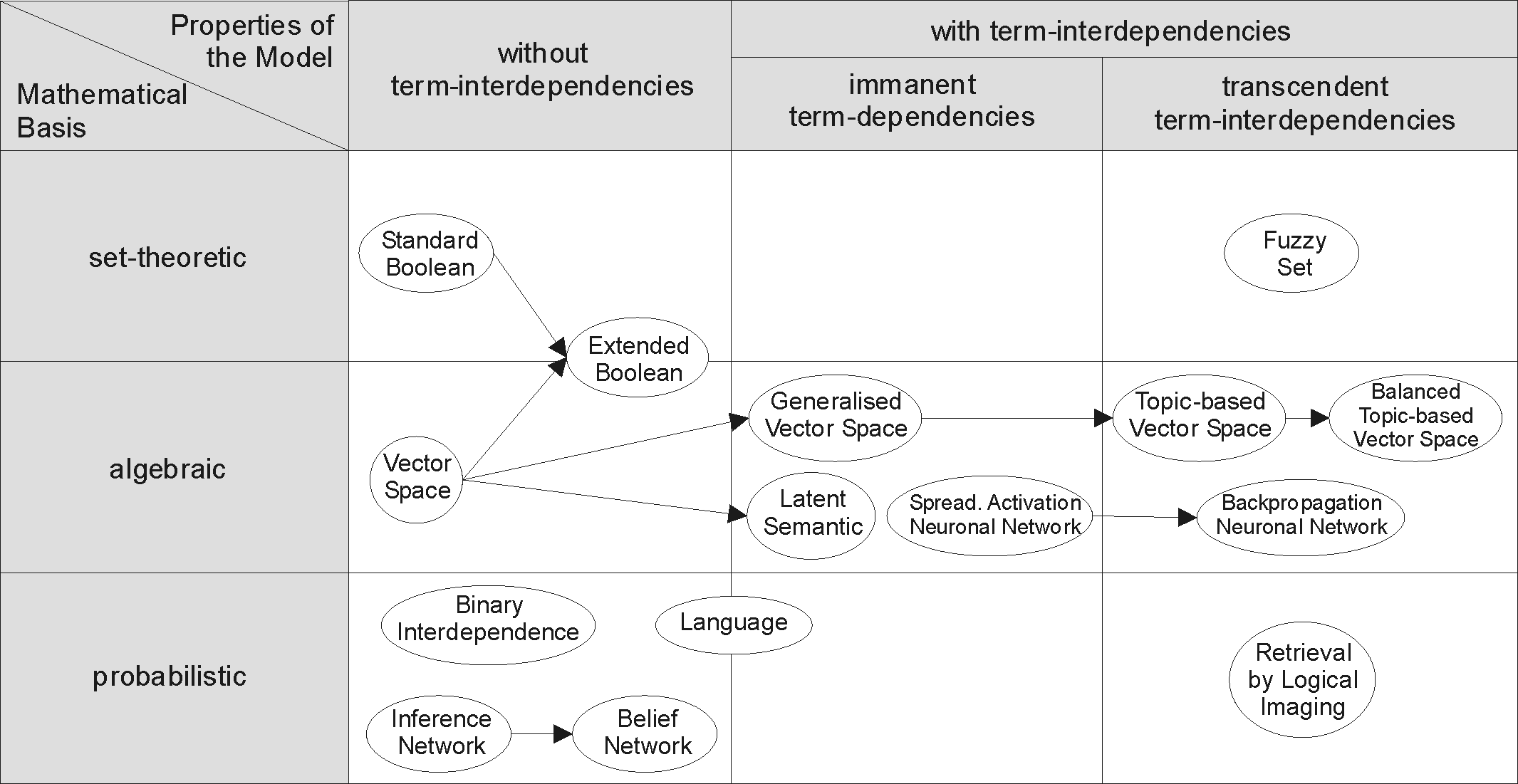

Information retrieval (IR) in computing and information science is the process of obtaining information system resources that are relevant to an information need from a collection of those resources. Searches can be based on full-text or other content-based indexing. Information retrieval is the science of searching for information in a document, searching for documents themselves, and also searching for the metadata that describes data, and for databases of texts, images or sounds. Automated information retrieval systems are used to reduce what has been called information overload. An IR system is a software system that provides access to books, journals and other documents, and that stores and manages those documents. Web search engines are the most visible IR applications.

Overview: An information retrieval process begins when a user or searcher enters a query into the system. Queries are formal statements of information needs, for example search strings in web search engines. In inf ...

Special Interest Group On Information Retrieval

SIGIR is the Association for Computing Machinery's Special Interest Group on Information Retrieval. The scope of the group's specialty is the theory and application of computers to the acquisition, organization, storage, retrieval and distribution of information; emphasis is placed on working with non-numeric information, ranging from natural language to highly structured databases.

Conferences: The annual international SIGIR conference, which began in 1978, is considered the most important in the field of information retrieval. SIGIR also sponsors the annual Joint Conference on Digital Libraries (JCDL) in association with SIGWEB, the Conference on Information and Knowledge Management (CIKM), and the International Conference on Web Search and Data Mining (WSDM) in association with SIGKDD, SIGMOD, and SIGWEB.

Awards: The group gives out several awards for contributions to the field of information retrieval. The most important award is the G ...

Non-negative Matrix Factorization

Non-negative matrix factorization (NMF or NNMF), also called non-negative matrix approximation, is a group of algorithms in multivariate analysis and linear algebra where a matrix $V$ is factorized into (usually) two matrices $W$ and $H$, with the property that all three matrices have no negative elements. This non-negativity makes the resulting matrices easier to inspect. Also, in applications such as processing of audio spectrograms or muscular activity, non-negativity is inherent to the data being considered. Since the problem is not exactly solvable in general, it is commonly approximated numerically. NMF finds applications in such fields as astronomy, computer vision, document clustering, missing data imputation, chemometrics, audio signal processing, recommender systems, and bioinformatics.

History: In chemometrics non-negative matrix factorization has a long history under the name "self modeling curve resolution". In this framework the vectors in the right matrix are continuous curves ...
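One way to approximate such a factorization numerically is with multiplicative update rules for the squared (Frobenius) reconstruction error. The sketch below uses those rules as an illustrative choice, not as the only or the definitive NMF algorithm. It writes the input matrix as V and the two factors as W and H, and all function names, the toy matrix and the parameter defaults are assumptions.

```python
import random


def matmul(a, b):
    """Plain nested-list matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(m):
    return [list(row) for row in zip(*m)]


def frobenius_error(v, w, h):
    """Squared Frobenius norm of V - WH."""
    wh = matmul(w, h)
    return sum((v[i][j] - wh[i][j]) ** 2
               for i in range(len(v)) for j in range(len(v[0])))


def nmf(v, rank, iters=200, seed=0, eps=1e-9):
    """Approximate V (n x m) by W (n x rank) times H (rank x m), all non-negative."""
    rng = random.Random(seed)
    n, m = len(v), len(v[0])
    # Strictly positive random start; multiplicative updates keep entries >= 0.
    w = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(n)]
    h = [[rng.random() + 0.1 for _ in range(m)] for _ in range(rank)]
    for _ in range(iters):
        # H <- H * (W^T V) / (W^T W H), elementwise
        wt = transpose(w)
        num, den = matmul(wt, v), matmul(matmul(wt, w), h)
        h = [[h[a][j] * num[a][j] / (den[a][j] + eps) for j in range(m)]
             for a in range(rank)]
        # W <- W * (V H^T) / (W H H^T), elementwise
        ht = transpose(h)
        num, den = matmul(v, ht), matmul(w, matmul(h, ht))
        w = [[w[i][a] * num[i][a] / (den[i][a] + eps) for a in range(rank)]
             for i in range(n)]
    return w, h


if __name__ == "__main__":
    v = [[1.0, 2.0, 3.0], [2.0, 4.0, 6.0], [1.0, 1.0, 1.0]]  # hypothetical data
    w, h = nmf(v, rank=2)
    print(frobenius_error(v, w, h))
```

The small `eps` in the denominators only guards against division by zero. The result depends on the random start, so different seeds can give different factor pairs for the same input.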

Dirichlet Distribution

In probability and statistics, the Dirichlet distribution (after Peter Gustav Lejeune Dirichlet), often denoted $\operatorname{Dir}(\boldsymbol\alpha)$, is a family of continuous multivariate probability distributions parameterized by a vector $\boldsymbol\alpha$ of positive reals. It is a multivariate generalization of the beta distribution, hence its alternative name of multivariate beta distribution (MBD). Dirichlet distributions are commonly used as prior distributions in Bayesian statistics, and in fact, the Dirichlet distribution is the conjugate prior of the categorical distribution and multinomial distribution. The infinite-dimensional generalization of the Dirichlet distribution is the *Dirichlet process*.

Definitions (probability density function): The Dirichlet distribution of order $K \geq 2$ with parameters $\alpha_1, \ldots, \alpha_K > 0$ has a probability density function with respect to Lebesgue m ...
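A draw from a Dirichlet distribution can be obtained by normalizing independent gamma variates, one per parameter. The sketch below does this with the standard library's `random.gammavariate`. The function name `sample_dirichlet` and the example parameters are made up for illustration.

```python
import random


def sample_dirichlet(alpha, rng=random):
    """Draw one point on the probability simplex from Dir(alpha)."""
    if any(a <= 0 for a in alpha):
        raise ValueError("all concentration parameters must be positive")
    # Independent Gamma(alpha_i, 1) draws, normalized so they sum to one.
    gammas = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(gammas)
    return [g / total for g in gammas]


if __name__ == "__main__":
    rng = random.Random(0)
    print(sample_dirichlet([2.0, 3.0, 5.0], rng))
```

Each sample is a vector of K positive numbers summing to one, so it can serve directly as the parameter vector of a categorical or multinomial distribution, in line with the conjugate-prior role mentioned above.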

Latent Dirichlet Allocation

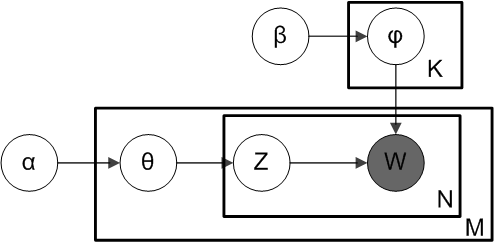

In natural language processing, latent Dirichlet allocation (LDA) is a generative statistical model that explains a set of observations through unobserved groups, where each group explains why some parts of the data are similar. LDA is an example of a topic model. In this model, observations (e.g., words) are collected into documents, and each word's presence is attributable to one of the document's topics. Each document will contain a small number of topics.

History: In the context of population genetics, LDA was proposed by J. K. Pritchard, M. Stephens and P. Donnelly in 2000. LDA was applied in machine learning by David Blei, Andrew Ng and Michael I. Jordan in 2003.

Overview (evolutionary biology and bio-medicine): In evolutionary biology and bio-medicine, the model is used to detect the presence of structured genetic variation in a group of individuals. The model assumes that alleles carried by individuals under study originate in various extant or past populations. The ...