|

Parallel Virtual Machine

Parallel Virtual Machine (PVM) is a software tool for parallel networking of computers. It is designed to allow a network of heterogeneous Unix and/or Windows machines to be used as a single distributed parallel processor. Thus large computational problems can be solved more cost effectively by using the aggregate power and memory of many computers. The software is very portable; the source code, available free through netlib, has been compiled on everything from laptops to Crays. PVM enables users to exploit their existing computer hardware to solve much larger problems at less additional cost. PVM has been used as an educational tool to teach parallel programming but has also been used to solve important practical problems. It was developed by the University of Tennessee, Oak Ridge National Laboratory and Emory University. The first version was written at ORNL in 1989, and after being rewritten by University of Tennessee, version 2 was released in March 1991. Version 3 was ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Oak Ridge National Laboratory

Oak Ridge National Laboratory (ORNL) is a federally funded research and development centers, federally funded research and development center in Oak Ridge, Tennessee, United States. Founded in 1943, the laboratory is sponsored by the United States Department of Energy and administered by UT–Battelle, UT–Battelle, LLC. Established in 1943, ORNL is the largest science and energy national laboratory in the Department of Energy system by size and third largest by annual budget. It is located in the Roane County, Tennessee, Roane County section of Oak Ridge. Its scientific programs focus on materials science, materials, nuclear power, nuclear science, neutron science, energy, high-performance computing, environmental science, systems biology and national security, sometimes in partnership with the state of Tennessee, universities and other industries. ORNL has several of the world's top supercomputers, including Frontier (supercomputer), Frontier, ranked by the TOP500 as the wo ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Grid Computing

Grid computing is the use of widely distributed computer resources to reach a common goal. A computing grid can be thought of as a distributed system with non-interactive workloads that involve many files. Grid computing is distinguished from conventional high-performance computing systems such as cluster computing in that grid computers have each node set to perform a different task/application. Grid computers also tend to be more heterogeneous and geographically dispersed (thus not physically coupled) than cluster computers. Although a single grid can be dedicated to a particular application, commonly a grid is used for a variety of purposes. Grids are often constructed with general-purpose grid middleware software libraries. Grid sizes can be quite large. Grids are a form of distributed computing composed of many networked loosely coupled computers acting together to perform large tasks. For certain applications, distributed or grid computing can be seen as a special ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Message Passing

In computer science, message passing is a technique for invoking behavior (i.e., running a program) on a computer. The invoking program sends a message to a process (which may be an actor or object) and relies on that process and its supporting infrastructure to then select and run some appropriate code. Message passing differs from conventional programming where a process, subroutine, or function is directly invoked by name. Message passing is key to some models of concurrency and object-oriented programming. Message passing is ubiquitous in modern computer software. It is used as a way for the objects that make up a program to work with each other and as a means for objects and systems running on different computers (e.g., the Internet) to interact. Message passing may be implemented by various mechanisms, including channels. Overview Message passing is a technique for invoking behavior (i.e., running a program) on a computer. In contrast to the traditional technique of ca ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Fiber Distributed Data Interface

Fiber Distributed Data Interface (FDDI) is a standard for data transmission in a local area network. It uses optical fiber as its standard underlying physical medium. It was also later specified to use copper cable, in which case it may be called CDDI (Copper Distributed Data Interface), standardized as TP-PMD (Twisted-Pair Physical Medium-Dependent), also referred to as TP-DDI (Twisted-Pair Distributed Data Interface). FDDI was effectively made obsolete in local networks by Fast Ethernet which offered the same 100 Mbit/s speeds, but at a much lower cost and, from 1998 on, by Gigabit Ethernet due to its speed, even lower cost, and ubiquity. Description FDDI provides a 100 Mbit/s optical standard for data transmission in local area network that can extend in length up to . Although FDDI logical topology is a ring-based token network, it did not use the IEEE 802.5 Token Ring protocol as its basis; instead, its protocol was derived from the IEEE 802.4 token bus ''ti ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Ethernet

Ethernet ( ) is a family of wired computer networking technologies commonly used in local area networks (LAN), metropolitan area networks (MAN) and wide area networks (WAN). It was commercially introduced in 1980 and first standardized in 1983 as IEEE 802.3. Ethernet has since been refined to support higher bit rates, a greater number of nodes, and longer link distances, but retains much backward compatibility. Over time, Ethernet has largely replaced competing wired LAN technologies such as Token Ring, FDDI and ARCNET. The original 10BASE5 Ethernet uses a thick coaxial cable as a shared medium. This was largely superseded by 10BASE2, which used a thinner and more flexible cable that was both less expensive and easier to use. More modern Ethernet variants use Ethernet over twisted pair, twisted pair and fiber optic links in conjunction with Network switch, switches. Over the course of its history, Ethernet data transfer rates have been increased from the original to the lates ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Personal Computer

A personal computer, commonly referred to as PC or computer, is a computer designed for individual use. It is typically used for tasks such as Word processor, word processing, web browser, internet browsing, email, multimedia playback, and PC game, gaming. Personal computers are intended to be operated directly by an end user, rather than by a computer expert or technician. Unlike large, costly minicomputers and mainframes, time-sharing by many people at the same time is not used with personal computers. The term home computer has also been used, primarily in the late 1970s and 1980s. The advent of personal computers and the concurrent Digital Revolution have significantly affected the lives of people. Institutional or corporate computer owners in the 1960s had to write their own programs to do any useful work with computers. While personal computer users may develop their applications, usually these systems run commercial software, free-of-charge software ("freeware"), which i ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Workstation

A workstation is a special computer designed for technical or computational science, scientific applications. Intended primarily to be used by a single user, they are commonly connected to a local area network and run multi-user operating systems. The term ''workstation'' has been used loosely to refer to everything from a mainframe computer terminal to a Personal computer, PC connected to a Computer network, network, but the most common form refers to the class of hardware offered by several current and defunct companies such as Sun Microsystems, Silicon Graphics, Apollo Computer, Digital Equipment Corporation, DEC, HP Inc., HP, NeXT, and IBM which powered the 3D computer graphics revolution of the late 1990s. Workstations formerly offered higher performance than mainstream personal computers, especially in Central processing unit, CPU, Graphics processing unit, graphics, memory, and multitasking. Workstations are optimized for the Visualization (graphics), visualization and ma ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Scalar Processor

Scalar processors are a class of computer processors that process only one data item at a time. Typical data items include integers and floating point numbers. Classification A scalar processor is classified as a single instruction, single data (SISD) processor in Flynn's taxonomy. The Intel 486 is an example of a scalar processor. It is to be contrasted with a vector processor where a single instruction operates simultaneously on multiple data items (and thus is referred to as a single instruction, multiple data (SIMD) processor). The difference is analogous to the difference between scalar and vector arithmetic. The term ''scalar'' in computing dates to the 1970 and 1980s when vector processors were first introduced. It was originally used to distinguish the older designs from the new vector processors. Superscalar processor A superscalar processor (such as the Intel P5) may execute more than one instruction during a clock cycle by simultaneously dispatching multiple in ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Supercomputer

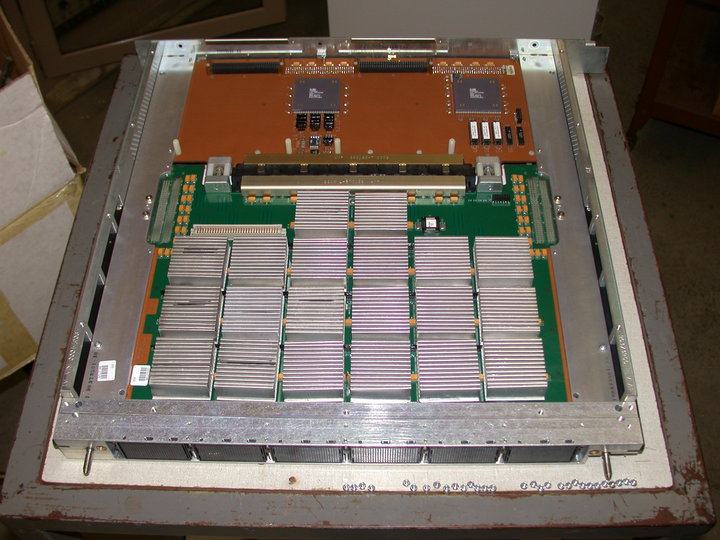

A supercomputer is a type of computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2022, supercomputers have existed which can perform over 1018 FLOPS, so called Exascale computing, exascale supercomputers. For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (1011) to tens of teraFLOPS (1013). Since November 2017, all of the TOP500, world's fastest 500 supercomputers run on Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers. Supercomputers play an important role in the field of computational science, and are used for a wide range of computationally intensive tasks in various fields, ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Vector Processor

In computing, a vector processor or array processor is a central processing unit (CPU) that implements an instruction set where its instructions are designed to operate efficiently and effectively on large one-dimensional arrays of data called ''vectors''. This is in contrast to scalar processors, whose instructions operate on single data items only, and in contrast to some of those same scalar processors having additional single instruction, multiple data (SIMD) or SIMD within a register (SWAR) Arithmetic Units. Vector processors can greatly improve performance on certain workloads, notably numerical simulation, compression and similar tasks. Vector processing techniques also operate in video-game console hardware and in graphics accelerators. Vector machines appeared in the early 1970s and dominated supercomputer design through the 1970s into the 1990s, notably the various Cray platforms. The rapid fall in the price-to-performance ratio of conventional microprocessor de ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Multiprocessor

Multiprocessing (MP) is the use of two or more central processing units (CPUs) within a single computer system. The term also refers to the ability of a system to support more than one processor or the ability to allocate tasks between them. There are many variations on this basic theme, and the definition of multiprocessing can vary with context, mostly as a function of how CPUs are defined ( multiple cores on one die, multiple dies in one package, multiple packages in one system unit, etc.). A multiprocessor is a computer system having two or more processing units (multiple processors) each sharing main memory and peripherals, in order to simultaneously process programs. A 2009 textbook defined multiprocessor system similarly, but noted that the processors may share "some or all of the system’s memory and I/O facilities"; it also gave tightly coupled system as a synonymous term. At the operating system level, ''multiprocessing'' is sometimes used to refer to the executio ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Virtual Machine

In computing, a virtual machine (VM) is the virtualization or emulator, emulation of a computer system. Virtual machines are based on computer architectures and provide the functionality of a physical computer. Their implementations may involve specialized hardware, software, or a combination of the two. Virtual machines differ and are organized by their function, shown here: * ''System virtual machines'' (also called full virtualization VMs, or SysVMs) provide a substitute for a real machine. They provide the functionality needed to execute entire operating systems. A hypervisor uses native code, native execution to share and manage hardware, allowing for multiple environments that are isolated from one another yet exist on the same physical machine. Modern hypervisors use hardware-assisted virtualization, with virtualization-specific hardware features on the host CPUs providing assistance to hypervisors. * ''Process virtual machines'' are designed to execute computer programs ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |